@(Principles of Information Science)

Introduction

Shannon entropy

Self-information:

$$h(x) = -\log p(x)$$

Entropy:

$$H(X) = -\sum_{x\in X} P(x)\log P(x) = -\mathbb{E}_{x\sim P}\log P(x)$$

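where $0\log 0 = 0$ by convention. The unit depends on the base of the logarithm: with the natural logarithm, an event of probability $1/e$ carries $-\ln\frac{1}{e} = 1$ nat; with base 2, an event of probability $1/2$ carries $-\log_2\frac{1}{2} = 1$ bit.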
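A minimal Python sketch of the two definitions above, assuming a distribution is given as a plain list of probabilities; the names `entropy` and `self_information` are illustrative, not from any library. Skipping zero-probability terms implements the $0\log 0 = 0$ convention, and the `base` parameter switches between bits and nats.

```python
import math

def self_information(p, base=2.0):
    """h(x) = -log p(x) for one outcome with probability p > 0."""
    return -math.log(p, base)

def entropy(probs, base=2.0):
    """H(X) = -sum p log p; terms with p == 0 are skipped (0 log 0 = 0).

    base=2 gives bits, base=math.e gives nats.
    """
    return -sum(p * math.log(p, base) for p in probs if p > 0)

# A fair coin carries 1 bit of entropy, which is ln 2 ≈ 0.693 nats.
print(entropy([0.5, 0.5]))
print(entropy([0.5, 0.5], base=math.e))
# Probability 1/2 carries ≈ 1 bit; probability 1/e carries ≈ 1 nat.
print(self_information(0.5))
print(self_information(1 / math.e, base=math.e))
```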

Joint entropy

$$H(X,Y) = -\sum_{x\in X,\,y\in Y} P(x,y)\log P(x,y) = -\mathbb{E}_{(x,y)\sim P}\log P(x,y)$$
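Joint entropy is simply the entropy of the pair $(X,Y)$ treated as one variable, so it is the same sum taken over every cell of the joint table. This sketch assumes the joint distribution is stored as a nested list `joint[x][y]`; the function name and the example table are hypothetical.

```python
import math

def joint_entropy(joint, base=2.0):
    """H(X,Y) = -sum_{x,y} P(x,y) log P(x,y) for a table joint[x][y]."""
    return -sum(p * math.log(p, base) for row in joint for p in row if p > 0)

# Two independent fair coins: 4 equally likely pairs, so H(X,Y) ≈ 2 bits.
coins = [[0.25, 0.25],
         [0.25, 0.25]]
print(joint_entropy(coins))
```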

Mutual information

$$I(X,Y) = \sum_{x\in X,\,y\in Y} P(x,y)\log\frac{P(x,y)}{P(x)P(y)} = \mathbb{E}_{(x,y)\sim P}\left[\log\frac{P(x,y)}{P(x)P(y)}\right] = D_{KL}\big(P(x,y)\,\|\,P(x)P(y)\big)$$


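A measure of how strongly two random variables are related, i.e. how much information one of them carries about the other.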
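A small sketch of the mutual-information formula, computing the marginals from a joint table `joint[x][y]` (a hypothetical representation) and summing $P(x,y)\log\frac{P(x,y)}{P(x)P(y)}$. The two example tables contrast independent variables with a variable that is an exact copy of the other.

```python
import math

def mutual_information(joint, base=2.0):
    """I(X,Y) = sum_{x,y} P(x,y) log[P(x,y) / (P(x)P(y))] for joint[x][y]."""
    px = [sum(row) for row in joint]          # P(x): sum over y
    py = [sum(col) for col in zip(*joint)]    # P(y): sum over x
    return sum(
        p * math.log(p / (px[i] * py[j]), base)
        for i, row in enumerate(joint)
        for j, p in enumerate(row)
        if p > 0                              # 0 log 0 = 0
    )

independent = [[0.25, 0.25], [0.25, 0.25]]   # X and Y independent
same = [[0.5, 0.0], [0.0, 0.5]]              # Y is an exact copy of X
print(mutual_information(independent))  # ≈ 0: X says nothing about Y
print(mutual_information(same))         # ≈ 1 bit: X determines Y
```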

Conditional entropy

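$$
\begin{aligned}
H(Y|X) &= -\sum_{x\in X,\,y\in Y} P(x,y)\log P(y|x) = -\sum_{x\in X,\,y\in Y} P(x,y)\log\frac{P(x,y)}{P(x)} \\
&= \sum_{x\in X,\,y\in Y} P(x,y)\log\frac{P(x)}{P(x,y)} = \mathbb{E}_{(x,y)\sim P}\left[\log\frac{P(x)}{P(x,y)}\right]
\end{aligned}
$$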

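The more information we know, the smaller the uncertainty of a random event: conditioning never increases entropy, $H(Y|X) \le H(Y)$.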
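To see "knowing more reduces uncertainty" numerically, the sketch below computes $H(Y|X)$ from a joint table `joint[x][y]` and compares it with $H(Y)$. The table, in which $Y$ usually equals $X$, is a made-up example, and the helper names are illustrative.

```python
import math

def entropy(probs, base=2.0):
    return -sum(p * math.log(p, base) for p in probs if p > 0)

def conditional_entropy(joint, base=2.0):
    """H(Y|X) = -sum_{x,y} P(x,y) log[P(x,y) / P(x)] for joint[x][y]."""
    px = [sum(row) for row in joint]
    return -sum(
        p * math.log(p / px[i], base)
        for i, row in enumerate(joint)
        for p in row
        if p > 0
    )

# Hypothetical table: X is a fair bit and Y agrees with X 80% of the time.
joint = [[0.4, 0.1],
         [0.1, 0.4]]
py = [sum(col) for col in zip(*joint)]
print(entropy(py))                 # H(Y) ≈ 1 bit
print(conditional_entropy(joint))  # H(Y|X) ≈ 0.722 bits, smaller than H(Y)
```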

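proof: $H(X,Y) = H(X) + H(Y|X)$:

$$
\begin{aligned}
H(X,Y) &= -\sum_{x\in X,\,y\in Y} P(x,y)\log P(x,y) \\
&= -\sum_{x\in X,\,y\in Y} P(x,y)\log\big[P(y|x)P(x)\big] \\
&= -\sum_{x\in X,\,y\in Y} P(x,y)\big[\log P(y|x) + \log P(x)\big] \\
&= -\sum_{x\in X,\,y\in Y} P(x,y)\log P(y|x) + \Big[-\sum_{x\in X} P(x)\log P(x)\Big] \\
&= H(Y|X) + H(X)
\end{aligned}
$$

The second sum collapses to a sum over $x$ alone because $\sum_{y\in Y} P(x,y) = P(x)$.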
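The chain rule can be sanity-checked numerically: compute $H(X,Y)$, $H(X)$ and $H(Y|X)$ separately from the same table and compare. This is a sketch on a hypothetical, deliberately non-uniform joint table stored as `joint[x][y]`; it checks the identity, it does not prove it.

```python
import math

def H(probs, base=2.0):
    """Entropy of any iterable of probabilities, with 0 log 0 = 0."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)

def chain_rule_terms(joint, base=2.0):
    """Return (H(X,Y), H(X), H(Y|X)) for a joint table joint[x][y]."""
    px = [sum(row) for row in joint]
    h_xy = H((p for row in joint for p in row), base)
    h_x = H(px, base)
    h_y_given_x = -sum(p * math.log(p / px[i], base)
                       for i, row in enumerate(joint) for p in row if p > 0)
    return h_xy, h_x, h_y_given_x

# Hypothetical joint table (entries sum to 1).
joint = [[0.30, 0.10, 0.05],
         [0.05, 0.20, 0.30]]
h_xy, h_x, h_y_given_x = chain_rule_terms(joint)
print(h_xy, h_x + h_y_given_x)  # equal up to floating-point rounding
```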

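proof: $H(X,Y|Z) = H(X|Z) + H(Y|X,Z)$:

$$
\begin{aligned}
H(X,Y|Z) &= -\sum_{x,y,z} P(x,y,z)\log P(x,y|z) \\
&= -\sum_{x,y,z} P(x,y,z)\log\frac{P(x,y,z)}{P(z)} \\
&= -\sum_{x,y,z} P(x,y,z)\log\left[\frac{P(x,y,z)}{P(x,z)}\cdot\frac{P(x,z)}{P(z)}\right] \\
&= \left[-\sum_{x,y,z} P(x,y,z)\log\frac{P(x,y,z)}{P(x,z)}\right] + \left[-\sum_{x,y,z} P(x,y,z)\log\frac{P(x,z)}{P(z)}\right] \\
&= \left[-\sum_{x,y,z} P(x,y,z)\log\frac{P(x,y,z)}{P(x,z)}\right] + \left[-\sum_{x,z} P(x,z)\log\frac{P(x,z)}{P(z)}\right] \\
&= H(Y|X,Z) + H(X|Z)
\end{aligned}
$$

The second bracket drops the sum over $y$ because $\sum_{y} P(x,y,z) = P(x,z)$.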
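The conditional chain rule can be checked the same way on three variables. This sketch stores a hypothetical joint distribution as a dict `{(x, y, z): P(x,y,z)}`, builds the marginals $P(z)$ and $P(x,z)$, and evaluates both sides using the same sums as the derivation above.

```python
import math
from collections import defaultdict

def conditional_chain_rule(pxyz, base=2.0):
    """Return (H(X,Y|Z), H(X|Z) + H(Y|X,Z)) for a dict {(x, y, z): P(x,y,z)}."""
    def log(v):
        return math.log(v, base)

    pz, pxz = defaultdict(float), defaultdict(float)
    for (x, y, z), p in pxyz.items():
        pz[z] += p          # marginal P(z)
        pxz[(x, z)] += p    # marginal P(x,z) = sum_y P(x,y,z)

    # H(X,Y|Z) = -sum P(x,y,z) log[P(x,y,z) / P(z)]
    lhs = -sum(p * log(p / pz[z]) for (x, y, z), p in pxyz.items() if p > 0)
    # H(Y|X,Z) = -sum P(x,y,z) log[P(x,y,z) / P(x,z)]
    h_y_given_xz = -sum(p * log(p / pxz[(x, z)])
                        for (x, y, z), p in pxyz.items() if p > 0)
    # H(X|Z) = -sum P(x,z) log[P(x,z) / P(z)]
    h_x_given_z = -sum(p * log(p / pz[z])
                       for (x, z), p in pxz.items() if p > 0)
    return lhs, h_x_given_z + h_y_given_xz

# Hypothetical distribution over three binary variables (sums to 1).
pxyz = {(0, 0, 0): 0.20, (0, 1, 0): 0.05, (1, 0, 0): 0.10, (1, 1, 0): 0.15,
        (0, 0, 1): 0.05, (0, 1, 1): 0.25, (1, 0, 1): 0.12, (1, 1, 1): 0.08}
print(conditional_chain_rule(pxyz))  # the two numbers agree
```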

Relative entropy (KL divergence)

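$$D_{KL}(P\,\|\,Q) = \sum_{x\in X} P(x)\log\frac{P(x)}{Q(x)} = \mathbb{E}_{x\sim P}\left[\log\frac{P(x)}{Q(x)}\right] = \mathbb{E}_{x\sim P}\big[\log P(x) - \log Q(x)\big]$$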

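note: $D_{KL}(P\,\|\,Q) \ge 0$, with equality if and only if $P = Q$. It measures how far apart two distributions are; since it is not symmetric in $P$ and $Q$, it is not a true distance metric.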
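A short sketch of $D_{KL}(P\,\|\,Q)$ for distributions given as equal-length lists over the same outcomes (an assumed representation). It illustrates the note above: the value is non-negative, zero for identical distributions, and generally different when $P$ and $Q$ are swapped. Following the usual convention, a term with $P(x) > 0$ but $Q(x) = 0$ makes the divergence infinite.

```python
import math

def kl_divergence(p, q, base=2.0):
    """D_KL(P||Q) = sum_x P(x) log[P(x)/Q(x)] over a shared list of outcomes."""
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue            # 0 log(0/q) = 0
        if qx == 0:
            return math.inf     # P puts mass where Q has none
        total += px * math.log(px / qx, base)
    return total

p = [0.5, 0.5]
q = [0.9, 0.1]
print(kl_divergence(p, q), kl_divergence(q, p))  # both > 0, but not equal
print(kl_divergence(p, p))                        # 0.0 for identical distributions
```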

Cross entropy

$$H(P,Q) = -\mathbb{E}_{x\sim P}\log Q(x) = H(P) + D_{KL}(P\,\|\,Q)$$
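The identity $H(P,Q) = H(P) + D_{KL}(P\,\|\,Q)$ can be checked numerically with a minimal sketch like the one below. The distributions `p` and `q` are made-up examples, and the code assumes $Q(x) > 0$ wherever $P(x) > 0$ so every logarithm is finite.

```python
import math

def entropy(p, base=2.0):
    return -sum(pi * math.log(pi, base) for pi in p if pi > 0)

def cross_entropy(p, q, base=2.0):
    """H(P,Q) = -E_{x~P}[log Q(x)]; assumes Q(x) > 0 wherever P(x) > 0."""
    return -sum(pi * math.log(qi, base) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q, base=2.0):
    return sum(pi * math.log(pi / qi, base) for pi, qi in zip(p, q) if pi > 0)

p = [0.5, 0.25, 0.25]   # hypothetical distribution P
q = [0.4, 0.4, 0.2]     # hypothetical distribution Q
print(cross_entropy(p, q))               # H(P,Q)
print(entropy(p) + kl_divergence(p, q))  # H(P) + D_KL(P||Q), same value
```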

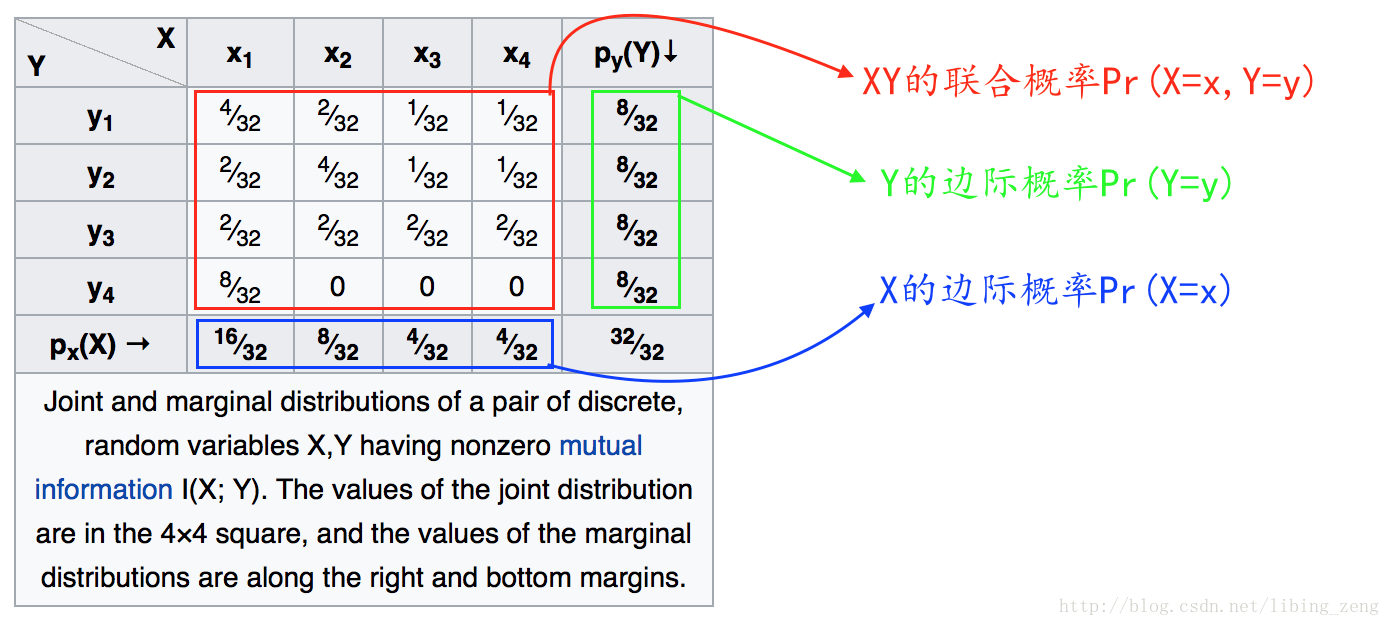

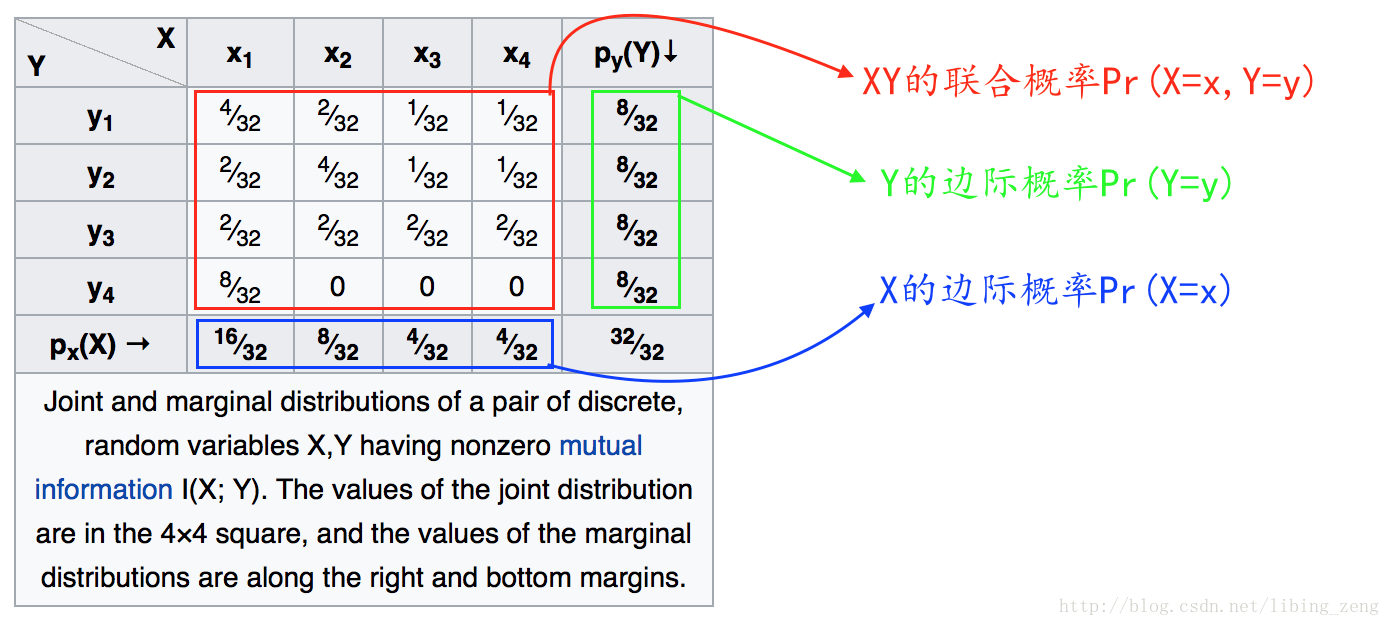

Marginal, conditional, and joint probability

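- Marginal probability: sum the joint probability over the other variable, i.e. take the totals along each margin of the joint table, e.g. $P(x) = \sum_{y} P(x,y)$.
- Joint probability: $P(X=x, Y=y) = P(y|x)P(x)$
- Conditional probability: $P(y|x) = \dfrac{P(x,y)}{P(x)}$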
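The three relations in the list can be illustrated on a small table. This sketch assumes a hypothetical joint table `joint[x][y]` with $P(x) > 0$ for every row; it takes the marginals as row and column sums, forms $P(y|x)$, and then recovers the joint as $P(y|x)P(x)$.

```python
def marginals(joint):
    """Row sums give P(x) = sum_y P(x,y); column sums give P(y) = sum_x P(x,y)."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return px, py

def conditional_y_given_x(joint):
    """P(y|x) = P(x,y) / P(x); assumes P(x) > 0 for every row."""
    px, _ = marginals(joint)
    return [[p / px[i] for p in row] for i, row in enumerate(joint)]

# Hypothetical joint table: rows indexed by x, columns by y.
joint = [[0.1, 0.3],
         [0.2, 0.4]]
px, py = marginals(joint)
cond = conditional_y_given_x(joint)
# Joint probability recovered as P(x,y) = P(y|x) P(x).
recovered = [[cond[i][j] * px[i] for j in range(len(py))] for i in range(len(px))]
print(px, py)
print(cond)
print(recovered)
```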

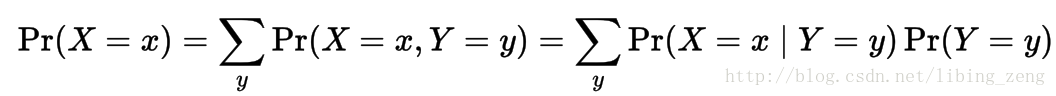

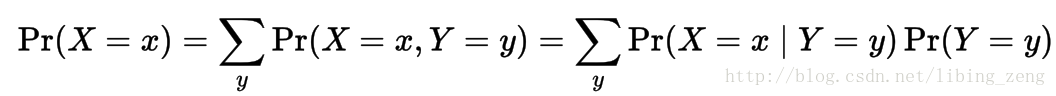

For discrete random variables:

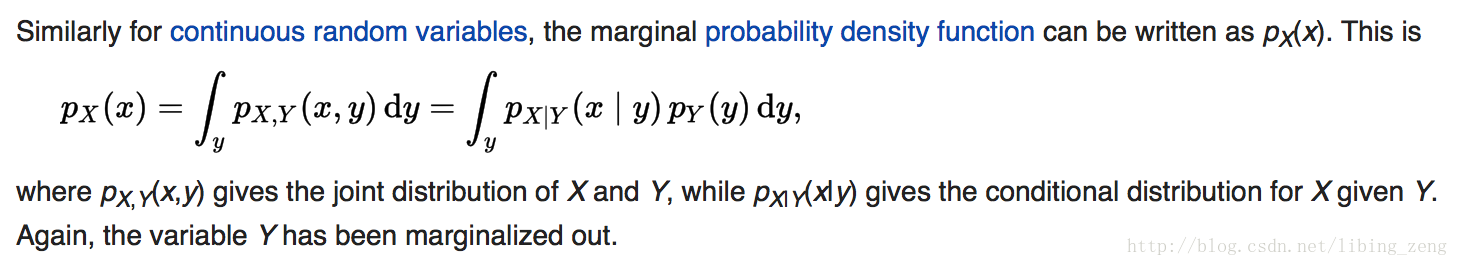

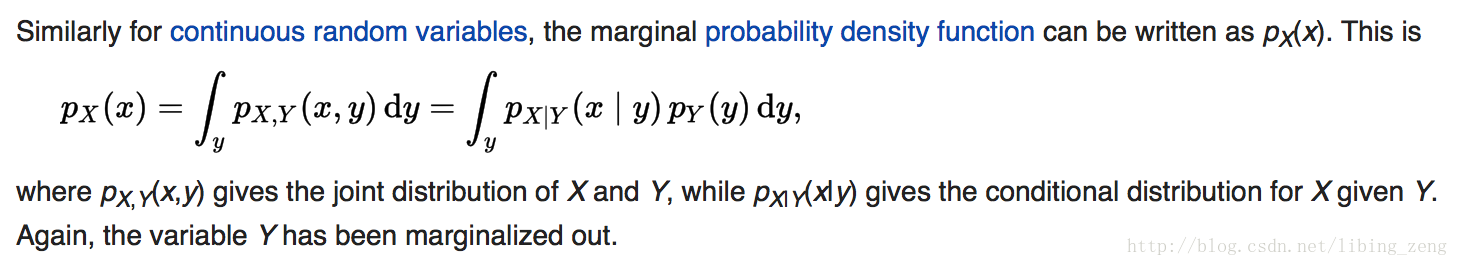

For continuous random variables:

example

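$$H(X) = -\sum_{x\in X} P(x)\log_2 P(x) = \frac{1}{2}\log_2 2 + \frac{1}{4}\log_2 4 + \frac{1}{8}\log_2 8 + \frac{1}{8}\log_2 8 = \frac{7}{4}\log_2 2 = \frac{7}{4}\ \text{bits}$$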

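$$H(X|Y) = -\sum_{x\in X,\,y\in Y} P(x,y)\log_2\frac{P(x,y)}{P(y)} = \sum_{x\in X,\,y\in Y} P(x,y)\log_2\frac{P(y)}{P(x,y)} = \frac{4}{32}\log_2\frac{1/4}{4/32} + \frac{2}{32}\log_2\frac{1/4}{2/32} + \frac{2}{32}\log_2\frac{1/4}{2/32} + \cdots = \frac{11}{8}\ \text{bits}$$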

$$H(X,Y) = -\sum_{x\in X,\,y\in Y} P(x,y)\log_2 P(x,y) = \frac{27}{8}\ \text{bits}$$
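The joint table behind this example is not in the text (it was presumably in one of the images), so the sketch below is an assumption: it uses the well-known 4×4 joint distribution from Cover & Thomas, *Elements of Information Theory*, which has marginal $P(x) = (1/2, 1/4, 1/8, 1/8)$, uniform $P(y)$, and reproduces the three values quoted above. The rows of `joint` are indexed by $y$ and the columns by $x$.

```python
import math

# ASSUMPTION: classic Cover & Thomas 4x4 example; rows are y, columns are x.
joint = [
    [1/8,  1/16, 1/32, 1/32],
    [1/16, 1/8,  1/32, 1/32],
    [1/16, 1/16, 1/16, 1/16],
    [1/4,  0,    0,    0],
]

px = [sum(col) for col in zip(*joint)]  # P(x): column sums -> 1/2, 1/4, 1/8, 1/8
py = [sum(row) for row in joint]        # P(y): row sums    -> 1/4 each

h_x = -sum(p * math.log2(p) for p in px if p > 0)
# H(X|Y) = sum_{x,y} P(x,y) log2[P(y) / P(x,y)], as in the expansion above
h_x_given_y = sum(p * math.log2(py[j] / p)
                  for j, row in enumerate(joint) for p in row if p > 0)
h_xy = -sum(p * math.log2(p) for row in joint for p in row if p > 0)

print(h_x, h_x_given_y, h_xy)  # 7/4, 11/8 and 27/8 bits
```

As a cross-check, $H(Y) = 2$ bits here, so $H(X,Y) = H(Y) + H(X|Y) = 2 + \frac{11}{8} = \frac{27}{8}$ bits, in line with the chain rule proved earlier.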