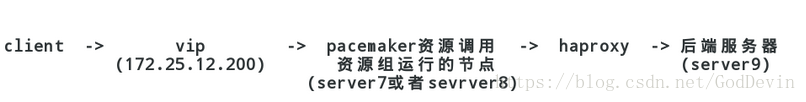

Reference diagram of the example setup

[root@server1 haproxy]# yum install pacemaker -y

[root@server1 haproxy]# cd /etc/cluster/

[root@server1 cluster]# ls

cman-notify.d fence_xvm.key

[root@server1 cluster]# cd /etc/corosync/

[root@server1 corosync]# ls

amf.conf.example corosync.conf.example.udpu uidgid.d

corosync.conf.example service.d

[root@server1 corosync]# cp corosync.conf.example corosync.conf

[root@server1 corosync]# vim corosync.conf

***************************************************

# Please read the corosync.conf.5 manual page

compatibility: whitetank

totem {

version: 2

secauth: off

threads: 0

interface {

ringnumber: 0

bindnetaddr: 172.25.18.0 ## network address of the subnet the cluster nodes are on

mcastaddr: 226.94.1.1

mcastport: 5405

ttl: 1

}

}

logging {

fileline: off

to_stderr: no

to_logfile: yes

to_syslog: yes

logfile: /var/log/cluster/corosync.log

debug: off

timestamp: on

logger_subsys {

subsys: AMF

debug: off

}

}

amf {

mode: disabled

}

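service { ## load Pacemaker as a Corosync service

name: pacemaker

ver: 0 ## 0 = Corosync starts the Pacemaker daemons itself (with ver: 1, pacemaker must be started separately)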

}

*****************************************************
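bindnetaddr takes the network address of the subnet the cluster interfaces are on (172.25.18.0 for nodes in 172.25.18.0/24), not a node's own IP. A minimal sketch of how to derive that value from a node IP and prefix length with plain bash arithmetic; the function name `network_addr` and the sample IP are hypothetical, not part of corosync.

```bash
# Hypothetical helper: print the network address for <ip>/<prefix>,
# i.e. the value to put in corosync's bindnetaddr.
network_addr() {
  local ip=$1 prefix=$2 o1 o2 o3 o4
  # split the dotted quad into its four octets
  IFS=. read -r o1 o2 o3 o4 <<< "$ip"
  local addr=$(( (o1 << 24) | (o2 << 16) | (o3 << 8) | o4 ))
  # netmask with <prefix> leading one bits, kept to 32 bits
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local net=$(( addr & mask ))
  echo "$(( net >> 24 & 255 )).$(( net >> 16 & 255 )).$(( net >> 8 & 255 )).$(( net & 255 ))"
}
```

For example, `network_addr 172.25.18.1 24` prints `172.25.18.0`, the value used in the configuration above.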

[root@server1 corosync]# scp corosync.conf server4:/etc/corosync/

[root@server1 ~]# /etc/init.d/corosync start

[root@server4 ~]# /etc/init.d/corosync start

[root@server4 ~]# crm_verify -L

Errors found during check: config not valid

-V may provide more details

[root@server4 ~]# crm_verify -LV ## errors: STONITH (fencing) is enabled by default, but no fence device has been configured yet

error: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

error: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

error: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

crmsh and pcs (command-line cluster management shells; crmsh is used below)

[root@server4 ~]# yum install crmsh-1.2.6-0.rc2.2.1.x86_64 pssh-2.3.1-2.1.x86_64 -y

[root@server1 ~]# yum install crmsh-1.2.6-0.rc2.2.1.x86_64 pssh-2.3.1-2.1.x86_64 -y

[root@server4 ~]# crm

****************************************************************

crm(live)# configure

crm(live)configure# show

node server1

node server4

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2"

crm(live)configure# property stonith-enabled=false ## disable fencing (STONITH) for now

crm(live)configure# commit

****************************************************************

[root@server4 ~]# crm_verify -LV ## no errors once fencing is disabled

[root@server4 ~]# crm

crm(live)# configure

crm(live)configure# show

node server1

node server4

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure# primitive vip ocf:heartbeat:IPaddr2 params ip=172.25.18.100 cidr_netmask=24 op monitor interval=1min

## define the VIP: resource agent (ocf:heartbeat:IPaddr2), VIP address, netmask, and monitor (health-check) interval

crm(live)configure# commit

crm(live)configure# bye

[root@server1 ~]# vim /etc/haproxy/haproxy.cfg

frontend public

bind *:80 ## listen on port 80 on all addresses (so haproxy also answers on the VIP)

[root@server4 ~]# crm_mon ## live, continuously refreshing cluster status

**************************************************************

Last updated: Tue Apr 17 13:18:05 2018

Last change: Tue Apr 17 13:12:45 2018 via cibadmin on server4

Stack: classic openais (with plugin)

Current DC: server4 - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

1 Resources configured

Online: [ server1 server4 ]

vip (ocf::heartbeat:IPaddr2): Started server1

**************************************************************
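When repeating the failover tests below, it can help to read where a resource runs from a one-shot snapshot (`crm_mon -1`) rather than the live screen. A minimal sketch, assuming the line layout shown above (`<id> (<agent>): Started <node>`); the function name `resource_node` is hypothetical, and the parsing may need adjusting if your crm_mon version formats lines differently.

```bash
# Hypothetical helper: read crm_mon text on stdin and print the node
# that follows "Started" on the line whose first field is <resource>.
resource_node() {
  local rsc=$1
  awk -v r="$rsc" '$1 == r {
    for (i = 1; i < NF; i++)
      if ($i == "Started") print $(i + 1)
  }'
}
```

Typical use: `crm_mon -1 | resource_node vip`, which for the status above would print `server1`.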

[root@server4 ~]# crm

***************************************************************

crm(live)# configure

crm(live)configure# show

node server1

node server4

primitive vip ocf:heartbeat:IPaddr2 \

params ip="172.25.18.100" cidr_netmask="24" \

op monitor interval="1min"

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false"

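crm(live)configure# property no-quorum-policy=ignore ## keep resources running without quorum, so the VIP can fail over when one node of this two-node cluster is down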

crm(live)configure# commit

crm(live)configure# bye

***************************************************************
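Why no-quorum-policy=ignore is needed here: a partition has quorum only when it holds more than half of the expected votes. With expected-quorum-votes="2" that means 2 votes, so a single surviving node never has quorum, and under Pacemaker's default policy (stop) it would stop its resources instead of taking over the VIP. The arithmetic as a small bash sketch (both function names are hypothetical):

```bash
# Votes a partition needs to be quorate: a strict majority of expected votes.
quorum_needed() {
  local expected=$1
  echo $(( expected / 2 + 1 ))
}

# Exit 0 if <present> votes out of <expected> are enough for quorum.
has_quorum() {
  local present=$1 expected=$2
  (( present >= $(quorum_needed "$expected") ))
}
```

`has_quorum 1 2` fails: one node out of two is not a majority, which is exactly the situation after server1 goes down.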

Test:

[root@server1 x86_64]# /etc/init.d/corosync stop

[root@server4 ~]# crm_mon ## the VIP moves from server1 to server4

[root@server1 ~]# cd rpmbuild/RPMS/x86_64/

[root@server1 x86_64]# scp haproxy-1.6.11-1.x86_64.rpm server4:~/

[root@server4 ~]# rpm -ivh haproxy-1.6.11-1.x86_64.rpm

[root@server4 ~]# /etc/init.d/haproxy start

[root@server1 x86_64]# scp /etc/haproxy/haproxy.cfg server4:/etc/haproxy/

[root@server1 x86_64]# /etc/init.d/haproxy stop ## before handing haproxy to the cluster, stop it and disable its autostart (on every node)

Shutting down haproxy: [ OK ]

[root@server1 x86_64]# chkconfig --list haproxy

haproxy 0:off 1:off 2:off 3:off 4:off 5:off 6:off
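Since Pacemaker will start and stop haproxy through its LSB init script, init must not also start it at boot; `chkconfig haproxy off` disables that. The hypothetical helper below only checks a `chkconfig --list` line like the one above and succeeds if no runlevel is `on`.

```bash
# Hypothetical helper: read `chkconfig --list <service>` output on stdin.
# Exit 0 if no runlevel is marked "on", exit 1 otherwise.
autostart_disabled() {
  ! grep -Eq '[0-6]:on'
}
```

Typical use on each node: `chkconfig haproxy off`, then `chkconfig --list haproxy | autostart_disabled && echo "haproxy autostart is off"`.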

[root@server1 x86_64]# crm

***************************************************************************

crm(live)# configure

crm(live)configure# show

node server1

node server4

primitive vip ocf:heartbeat:IPaddr2 \

params ip="172.25.18.100" cidr_netmask="24" \

op monitor interval="1min"

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

crm(live)configure# primitive haproxy lsb:haproxy op monitor interval=1min

crm(live)configure# commit

crm(live)configure# group lbgroup vip haproxy ## group vip and haproxy so they always run on the same node (started in order, vip first)

crm(live)configure# commit

crm(live)configure# bye

****************************************************************************

[root@server1 x86_64]# crm node standby ## put this node into standby (take it offline)

[root@server1 x86_64]# crm node online ## bring this node back online

[root@server1 x86_64]# stonith_admin -I ## list installed fence agents (check that fence_xvm is present)

[root@server1 x86_64]# stonith_admin -M -a fence_xvm ## show the fence_xvm agent's metadata (its parameters)

[root@foundation18 Desktop]# systemctl status fence_virtd.service ## on the physical host, make sure fence_virtd is running

[root@server4 cluster]# crm

crm(live)# configure

crm(live)configure# primitive vmfence stonith:fence_xvm params pcmk_host_map="server1:host1;server4:host4" op monitor interval=1min ## define the fence device; pcmk_host_map maps cluster node names to VM names on the physical host

crm(live)configure# commit

crm(live)configure# bye
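pcmk_host_map is a `;`-separated list of `<cluster node>:<port>` pairs; for fence_xvm the port is the virtual machine's name on the physical host, so `server1:host1` means "to fence node server1, act on VM host1". The lookup can be sketched in bash as follows; the function `map_host` is hypothetical and handles only the simple one-port-per-node form used above.

```bash
# Hypothetical helper: print the port (VM name) mapped to <node>
# in a pcmk_host_map string; exit 1 if the node is not listed.
map_host() {
  local map=$1 node=$2 pair
  local IFS=';'
  for pair in $map; do
    # each pair has the form "<cluster node>:<port>"
    if [[ ${pair%%:*} == "$node" ]]; then
      printf '%s\n' "${pair#*:}"
      return 0
    fi
  done
  return 1
}
```

For example, `map_host 'server1:host1;server4:host4' server4` prints `host4`.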

[root@server4 cluster]# crm

crm(live)# configure

crm(live)configure# property stonith-enabled=true ## re-enable fencing

crm(live)configure# commit

crm(live)configure# bye

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

[root@server1 ~]# crm

crm(live)# resource

crm(live)resource# cleanup vmfence ## after correcting a misconfigured fence resource, clear its failed state so the cluster retries it

Cleaning up vmfence on server1

Cleaning up vmfence on server4

Waiting for 1 replies from the CRMd. OK

crm(live)resource# show

Resource Group: lbgroup

vip (ocf::heartbeat:IPaddr2): Started

haproxy (lsb:haproxy): Started

vmfence (stonith:fence_xvm): Started

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Verification:

[root@server1 ~]# echo c > /proc/sysrq-trigger ## crash server1's kernel to check that fencing works: the surviving node should fence (reboot) it


[root@server1 x86_64]# vim /etc/haproxy/haproxy.cfg

*******************************************************************

global

maxconn 10000

stats socket /var/run/haproxy.stat mode 600 level admin

log 127.0.0.1 local0

uid 200

gid 200

chroot /var/empty

daemon

*******************************************************************