server2, server3: cluster nodes

server5, server6: backend servers

I. Configuring HAProxy

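HAProxy is proxy software that provides high availability, load balancing, and proxying for TCP (layer 4) and HTTP (layer 7) applications. It is an efficient, reliable, and free solution for high availability and load balancing, and it is well suited to handling layer-7 requests on high-traffic sites. Clients fetch the site's pages through the HAProxy proxy server; when the proxy receives a client request, it forwards the request to a real backend server according to its load-balancing rules.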
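The forwarding rule used later in this article is round-robin. As a rough illustration only (this is not HAProxy's internal code), the idea can be sketched in shell: request number i goes to backend i mod N. The function name `pick_backend` is made up for this sketch; the two addresses are the ones that appear in the haproxy.cfg shown further down.

```shell
# Round-robin selection sketch: request i is sent to backend (i mod N).
# BACKENDS holds the backend addresses from this article's haproxy.cfg.
BACKENDS="172.25.254.6:80 172.25.254.7:80"

pick_backend() {
    request_no=$1
    # One backend per line, so wc -l gives the number of backends.
    count=$(printf '%s\n' $BACKENDS | wc -l)
    index=$(( request_no % count + 1 ))
    printf '%s\n' $BACKENDS | sed -n "${index}p"
}
```

Calling `pick_backend 0`, `pick_backend 1`, `pick_backend 2` in turn alternates between the two addresses, which is the behaviour the browser test later in the article is meant to show.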

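When the same client keeps accessing the service, HAProxy can maintain session persistence in three ways:

1. HAProxy hashes the client IP address and stores the result, so that requests from the same IP are always forwarded to the same real server.

2. HAProxy relies on the cookie that the real server sends to the client to maintain the session.

3. HAProxy stores the real server's session together with a server identifier to implement session persistence.

On server2: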
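As a side note on the first persistence scheme listed above: in HAProxy it is selected with `balance source` in a backend. The sketch below only imitates the idea in shell, under the assumption that a CRC from `cksum` is an acceptable stand-in for HAProxy's own hash (it is not the same function). The name `backend_for_client` is hypothetical.

```shell
# Source-IP persistence sketch: hash the client IP and map it to a backend.
# cksum is a stand-in hash here, not the function HAProxy uses internally.
BACKENDS="172.25.254.6:80 172.25.254.7:80"

backend_for_client() {
    client_ip=$1
    # cksum prints "<crc> <byte count>"; keep only the CRC.
    hash=$(printf '%s' "$client_ip" | cksum | cut -d' ' -f1)
    count=$(printf '%s\n' $BACKENDS | wc -l)
    index=$(( hash % count + 1 ))
    printf '%s\n' $BACKENDS | sed -n "${index}p"
}
```

Because the result depends only on the client address, repeated calls such as `backend_for_client 172.25.254.1` always print the same backend, as long as the list of backends does not change.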

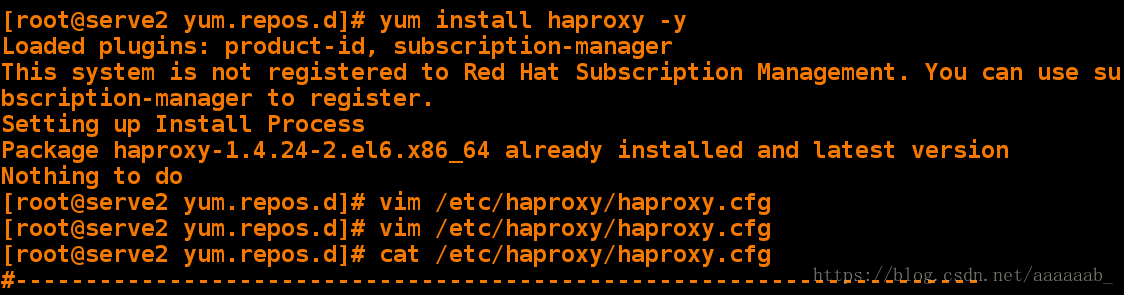

[root@serve2 yum.repos.d]# yum install haproxy -y    # install haproxy

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Setting up Install Process

Package haproxy-1.4.24-2.el6.x86_64 already installed and latest version

Nothing to do

[root@serve2 yum.repos.d]# vim /etc/haproxy/haproxy.cfg    # edit the main configuration file

[root@serve2 yum.repos.d]# cat /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# http://haproxy.1wt.eu/download/1.4/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 65535

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

stats uri /admin/stats    # health-check (statistics) page

monitor-uri /monitoruri    # monitoring URI

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend main *:5000

# acl url_static path_beg -i /static /images /javascript /stylesheets

# acl url_static path_end -i .jpg .gif .png .css .js

# use_backend static if url_static

bind 172.25.254.100:80    # listen on the virtual IP

default_backend static

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

backend static

balance roundrobin

server static 172.25.254.6:80 check    # backend server addresses

server static 172.25.254.7:80 check

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend app

balance roundrobin

server app1 127.0.0.1:5001 check

server app2 127.0.0.1:5002 check

server app3 127.0.0.1:5003 check

server app4 127.0.0.1:5004 check

[root@serve2 yum.repos.d]# ip addr add 172.25.254.100/24 dev eth0    # add the virtual IP

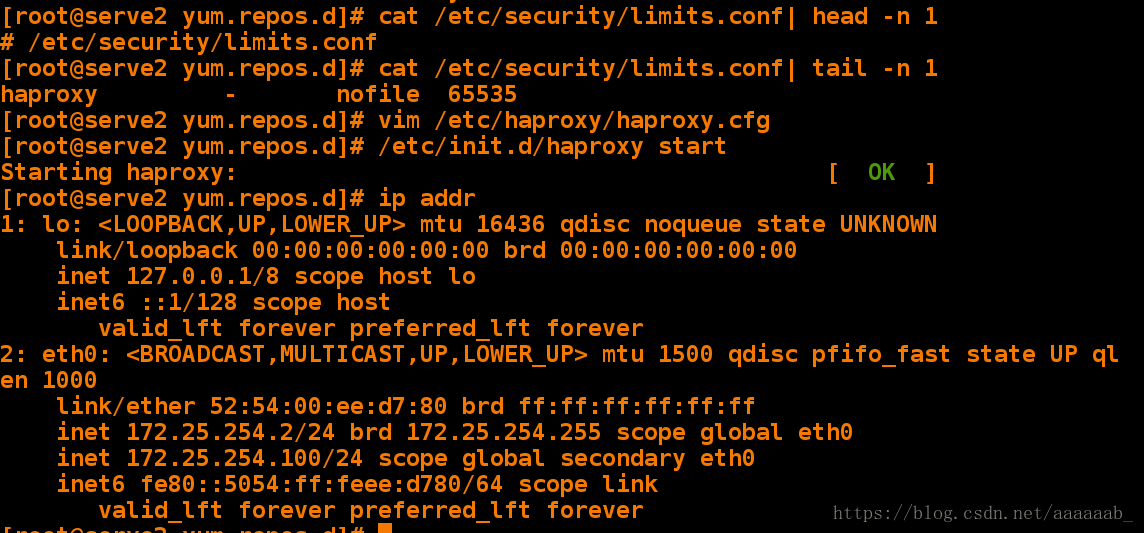

[root@serve2 yum.repos.d]# vim /etc/security/limits.conf

[root@serve2 yum.repos.d]# cat /etc/security/limits.conf| tail -n 1

haproxy - nofile 65535
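Before starting the service, the edited file can be checked for syntax errors with HAProxy's check mode (`haproxy -c -f <file>`). A minimal wrapper might look like the following; the function name and the `HAPROXY_BIN` override are assumptions added for this sketch so that it can be tried on a machine without haproxy installed.

```shell
# Check a HAProxy configuration without starting the service.
# HAPROXY_BIN is a hypothetical override; by default the real binary is used.
validate_haproxy_config() {
    config=${1:-/etc/haproxy/haproxy.cfg}
    # -c puts haproxy in check mode, -f names the configuration file.
    "${HAPROXY_BIN:-haproxy}" -c -f "$config"
}
```

On the node itself, `validate_haproxy_config` with no arguments checks /etc/haproxy/haproxy.cfg and returns a non-zero status if the file does not parse.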

[root@serve2 yum.repos.d]# /etc/init.d/haproxy start    # start the service

Starting haproxy: [ OK ]

[root@serve2 yum.repos.d]# ip addr    # show the IP addresses

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:ee:d7:80 brd ff:ff:ff:ff:ff:ff

inet 172.25.254.2/24 brd 172.25.254.255 scope global eth0

inet 172.25.254.100/24 scope global secondary eth0

inet6 fe80::5054:ff:feee:d780/64 scope link

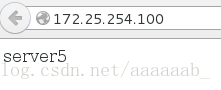

valid_lft forever preferred_lft foreverserver1上Haproxy实现轮询: 测试

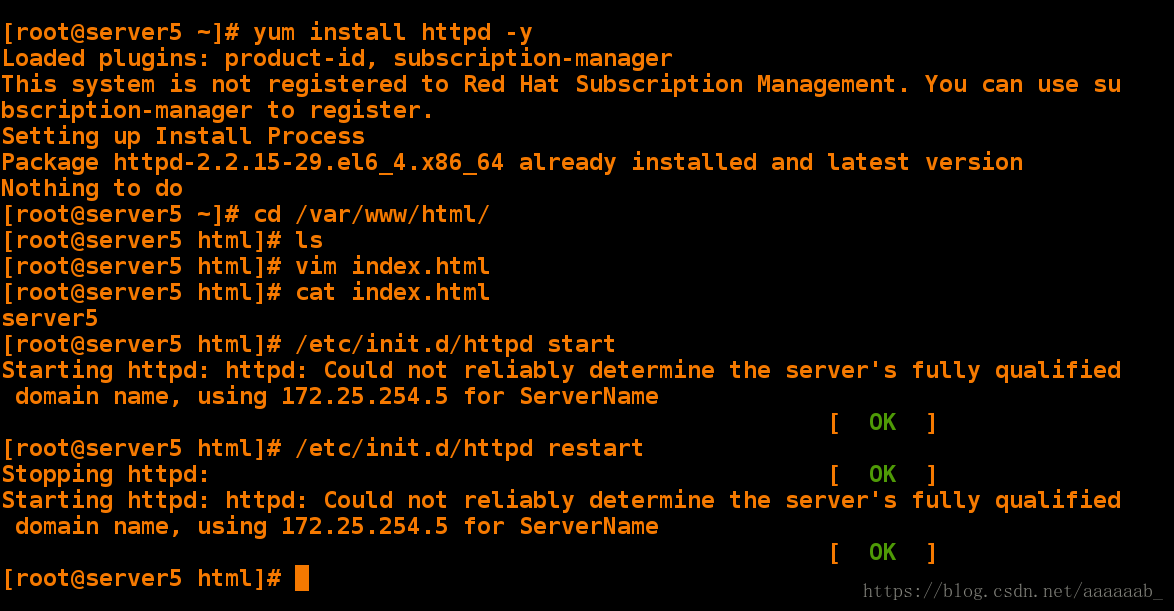

Configuring backend server server5:

[root@server5 ~]# yum install httpd -y    # install Apache

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Setting up Install Process

Package httpd-2.2.15-29.el6_4.x86_64 already installed and latest version

Nothing to do

[root@server5 ~]# cd /var/www/html/    # go to the document root to create a test page

[root@server5 html]# ls

[root@server5 html]# vim index.html

[root@server5 html]# cat index.html

server5

[root@server5 html]# /etc/init.d/httpd start 打开阿帕其

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.254.5 for ServerName

[ OK ]

[root@server5 html]# /etc/init.d/httpd restart 重启阿帕其

Stopping httpd: [ OK ]

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.254.5 for ServerName

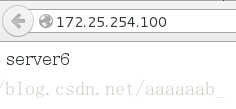

[ OK ]配置后端服务器server6:

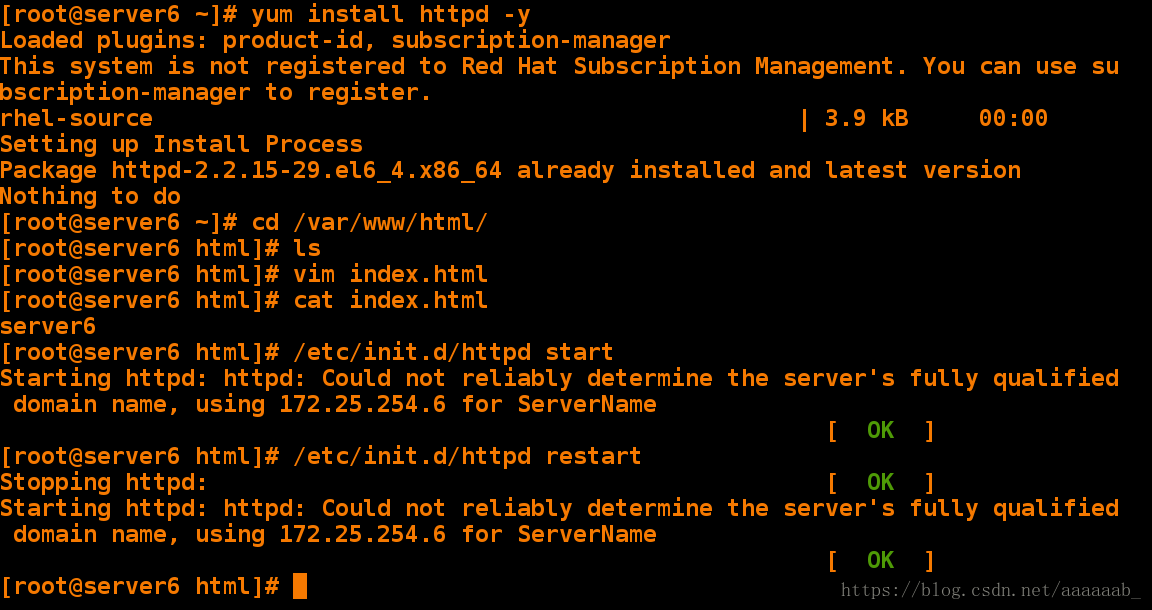

[root@server6 ~]# yum install httpd -y    # install Apache

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

rhel-source | 3.9 kB 00:00

Setting up Install Process

Package httpd-2.2.15-29.el6_4.x86_64 already installed and latest version

Nothing to do

[root@server6 ~]# cd /var/www/html/    # go to the document root to create a test page

[root@server6 html]# ls

[root@server6 html]# vim index.html

[root@server6 html]# cat index.html

server6

[root@server6 html]# /etc/init.d/httpd start    # start Apache

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.254.6 for ServerName

[ OK ]

[root@server6 html]# /etc/init.d/httpd restart    # restart Apache

Stopping httpd: [ OK ]

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.254.6 for ServerName

[ OK ]

After adding a name-resolution entry on the physical host, testing in a browser shows that round-robin works:

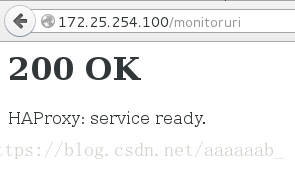

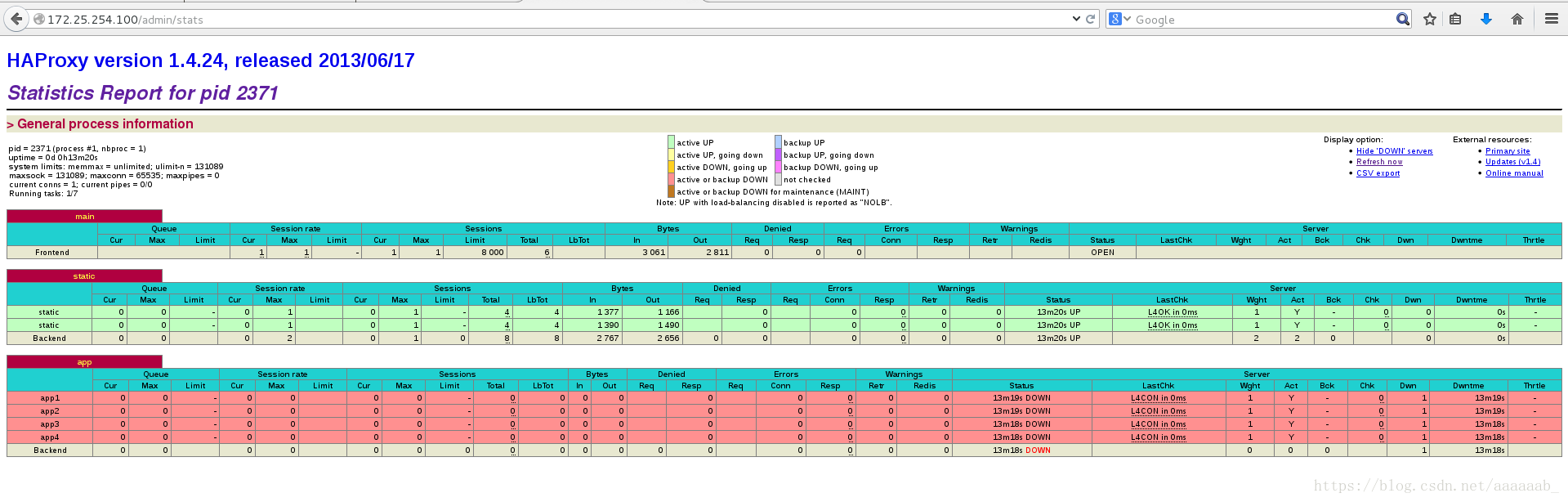
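The same round-robin check can be done from a command line instead of a browser. The sketch below requests the virtual IP several times and counts how often each page body comes back. It assumes `curl` is available on the client; `count_responses` and the `FETCH` override are names invented for this example.

```shell
# Fetch a URL several times and count how often each distinct body appears.
# FETCH is a hypothetical override for the download command (default: curl -s).
count_responses() {
    url=$1
    requests=${2:-10}
    i=0
    while [ "$i" -lt "$requests" ]; do
        ${FETCH:-curl -s} "$url"
        i=$((i + 1))
    done | sort | uniq -c
}
```

For example, `count_responses http://172.25.254.100/ 10` should list both backend pages with roughly equal counts when both backends are healthy and `balance roundrobin` is in effect.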

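Testing the health-check (statistics) page, `/admin/stats`, in a browser:

Testing the monitoring page, `/monitoruri`, in a browser:

Apply the same configuration on server3 as on server2, so that the failure of one node does not bring the whole system down: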

[root@serve3 ~]# yum install haproxy -y    # install the haproxy service

[root@serve3 ~]# scp server2:/etc/haproxy/haproxy.cfg /etc/haproxy/

root@server2's password:

haproxy.cfg 100% 3301 3.2KB/s 00:00

[root@serve3 ~]# ip addr add 172.25.254.100/24 dev eth0    # add the virtual IP

[root@serve3 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:42:fc:3a brd ff:ff:ff:ff:ff:ff

inet 172.25.254.3/24 brd 172.25.254.255 scope global eth0

inet 172.25.254.100/24 scope global secondary eth0

inet6 fe80::5054:ff:fe42:fc3a/64 scope link

valid_lft forever preferred_lft forever

[root@serve3 ~]#

II. Configuring Pacemaker:

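Pacemaker is a cluster resource manager. It uses the messaging and membership capabilities provided by the cluster infrastructure (OpenAIS, heartbeat, or corosync) to detect and recover from node-level and resource-level failures, so as to maximize the availability of cluster services (also called resources).

Corosync is part of the cluster management suite. A simple configuration file defines how, and over which protocol, it passes messages; it carries the heartbeat and cluster transaction messages. Pacemaker works at the resource allocation layer, where it acts as the resource manager, and resources are configured through crmsh, its command-line interface.

On server2: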

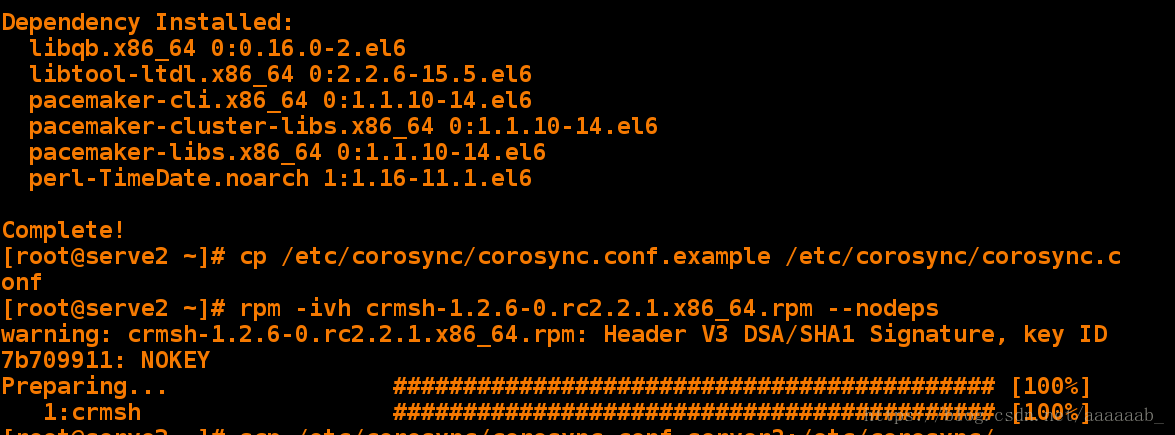

[root@serve2 ~]# yum install pacemaker corosync -y    # install pacemaker and corosync

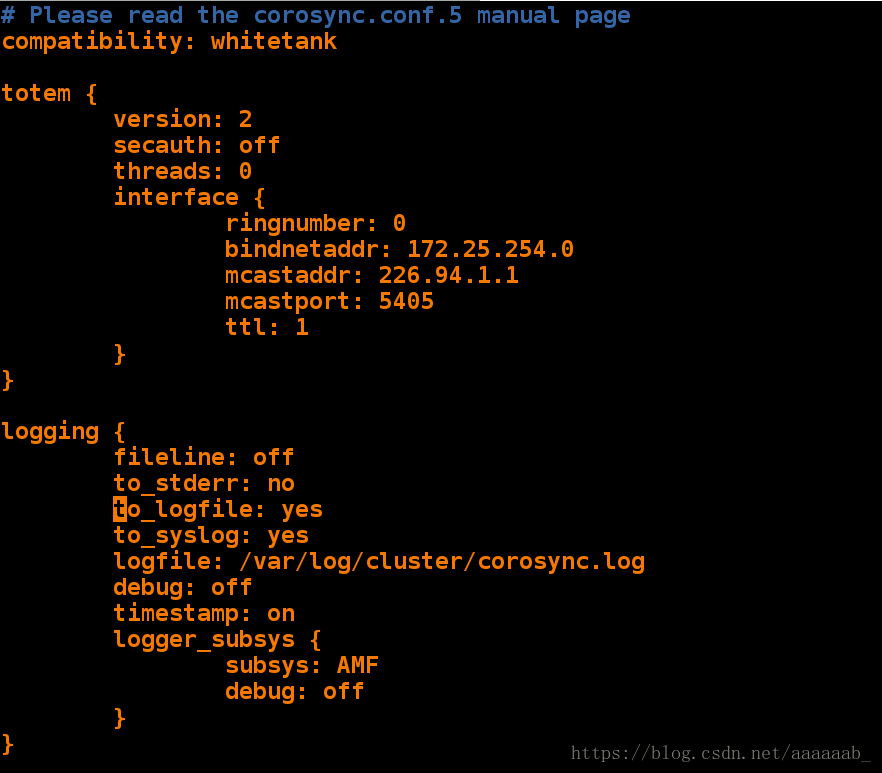

[root@serve2 ~]# cp /etc/corosync/corosync.conf.example /etc/corosync/corosync.conf

[root@serve2 ~]# rpm -ivh crmsh-1.2.6-0.rc2.2.1.x86_64.rpm --nodeps    # install crmsh

warning: crmsh-1.2.6-0.rc2.2.1.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 7b709911: NOKEY

Preparing... ########################################### [100%]

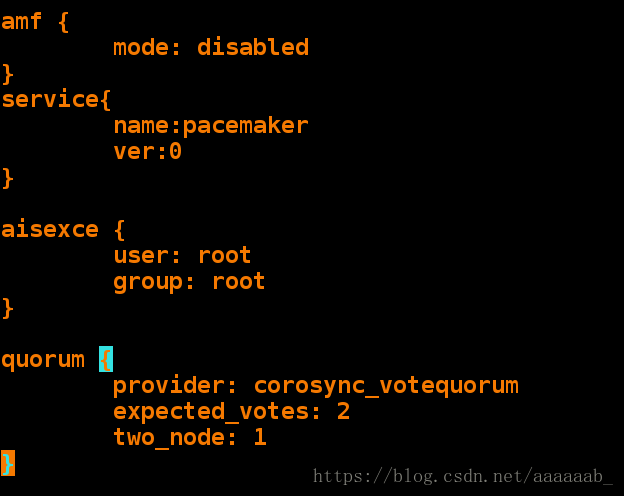

1:crmsh ########################################### [100%] [root@serve2 ~]# vim /etc/corosync/corosync.conf 修改配置文件
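The article does not show what was changed in corosync.conf. On a plugin-based stack such as the one `crm status` reports below ("classic openais (with plugin)"), the usual edits are to set `bindnetaddr` to the cluster network and to add a `service` stanza that loads Pacemaker. The helper below only prints such a stanza; it is a sketch under that assumption, not necessarily the author's exact change, and the function name is invented.

```shell
# Print a corosync 1.x "service" stanza that loads Pacemaker as a plugin.
# Assumption: this is the usual stanza for the plugin-based stack; the
# article itself does not show the contents of its corosync.conf.
pacemaker_service_stanza() {
    ver=${1:-0}   # 0: corosync starts Pacemaker; 1: Pacemaker is started separately
    cat <<EOF
service {
    name: pacemaker
    ver: $ver
}
EOF
}
```

The output can be reviewed and then appended by hand to /etc/corosync/corosync.conf on both nodes; since the article starts pacemaker with its own init script, `pacemaker_service_stanza 1` may be the closer match.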

[root@serve2 ~]# scp crmsh-1.2.6-0.rc2.2.1.x86_64.rpm server3:/root    # copy the crmsh package to server3

root@server3's password:

crmsh-1.2.6-0.rc2.2.1.x86_64.rpm 100% 483KB 483.4KB/s 00:00

[root@serve2 ~]# /etc/init.d/pacemaker start    # start the service

[root@serve2 ~]# scp /etc/corosync/corosync.conf server3:/etc/corosync/

root@server3's password:

corosync.conf 100% 642 0.6KB/s 00:00

[root@serve2 ~]# /etc/init.d/corosync start    # start the service

Starting Corosync Cluster Engine (corosync): [ OK ]

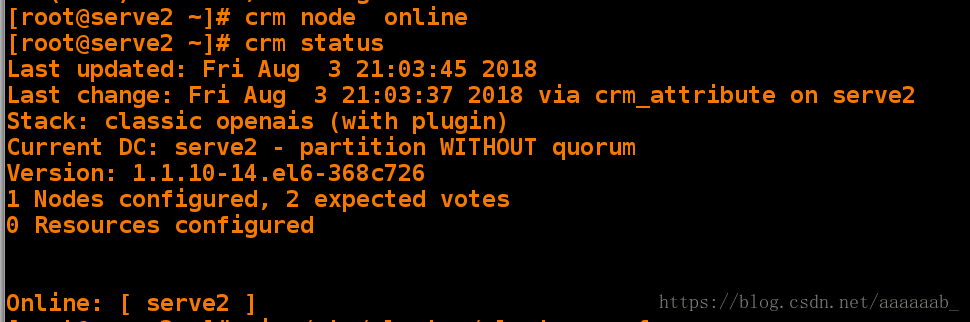

[root@serve2 ~]# crm    # at this point only server2 is online

crm(live)# status

Last updated: Fri Aug 3 20:47:36 2018

Last change: Fri Aug 3 20:45:38 2018 via crmd on serve2

Stack: classic openais (with plugin)

Current DC: serve2 - partition WITHOUT quorum

Version: 1.1.10-14.el6-368c726

1 Nodes configured, 2 expected votes

0 Resources configured

Online: [ serve2 ]

crm(live)# quit

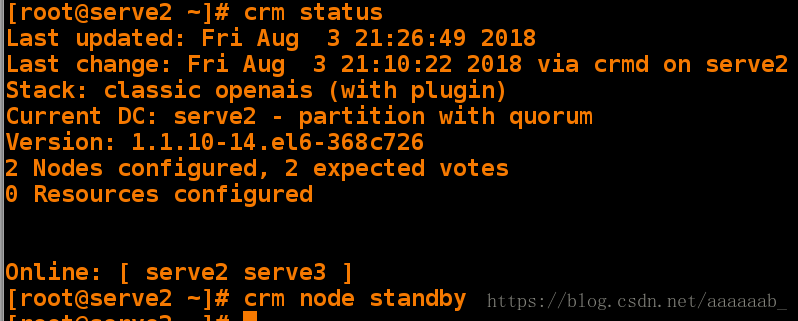

bye[root@serve2 ~]# crm status

Last updated: Fri Aug 3 21:26:49 2018

Last change: Fri Aug 3 21:10:22 2018 via crmd on serve2

Stack: classic openais (with plugin)

Current DC: serve2 - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

0 Resources configured

Online: [ serve2 serve3 ]
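The change from "partition WITHOUT quorum" in the first status output to "partition with quorum" here is consistent with simple majority voting: a partition has quorum when it holds more than half of the expected votes. A small sketch of that rule follows; `has_quorum` is an illustrative name, not a crmsh command.

```shell
# Majority quorum: a partition has quorum when it holds more than half
# of the expected votes.
has_quorum() {
    online_votes=$1
    expected_votes=$2
    [ $(( online_votes * 2 )) -gt "$expected_votes" ]
}
```

With the numbers from the two outputs, `has_quorum 1 2` fails (one node out of two expected votes) and `has_quorum 2 2` succeeds.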

[root@serve2 ~]# crm node standby    # put the server2 node into standby

On server3:

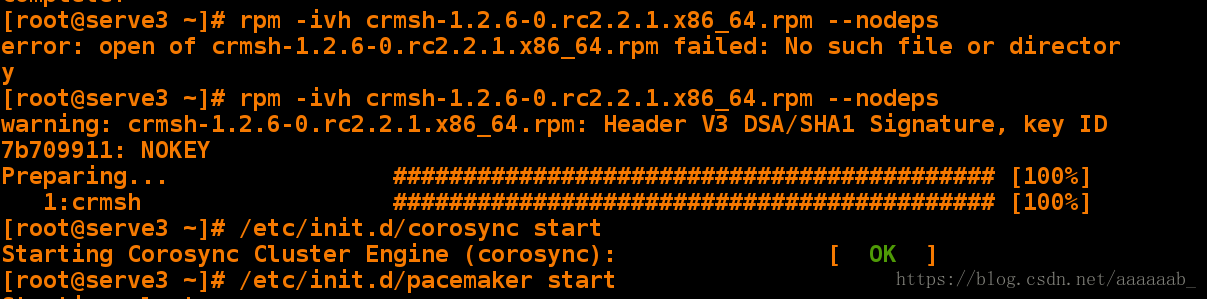

[root@serve3 ~]# yum install pacemaker corosync -y    # install the pacemaker packages

[root@serve3 ~]# rpm -ivh crmsh-1.2.6-0.rc2.2.1.x86_64.rpm --nodeps

error: open of crmsh-1.2.6-0.rc2.2.1.x86_64.rpm failed: No such file or directory

[root@serve3 ~]# rpm -ivh crmsh-1.2.6-0.rc2.2.1.x86_64.rpm --nodeps

warning: crmsh-1.2.6-0.rc2.2.1.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 7b709911: NOKEY

Preparing... ########################################### [100%]

1:crmsh ########################################### [100%]

[root@serve3 ~]# /etc/init.d/corosync start    # start the service

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@serve3 ~]# /etc/init.d/pacemaker start    # start the service

[root@serve3 ~]# crm status    # check the status: server2 and server3 are both online

Last updated: Fri Aug 3 21:25:33 2018

Last change: Fri Aug 3 21:10:22 2018 via crmd on serve2

Stack: classic openais (with plugin)

Current DC: serve2 - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

0 Resources configured

Online: [ serve2 serve3 ]

[root@serve3 ~]# crm status    # after server2 has been put into standby

Last updated: Fri Aug 3 21:27:18 2018

Last change: Fri Aug 3 21:27:12 2018 via crm_attribute on serve2

Stack: classic openais (with plugin)

Current DC: serve2 - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

0 Resources configured

Node serve2: standby

Online: [ serve3 ]

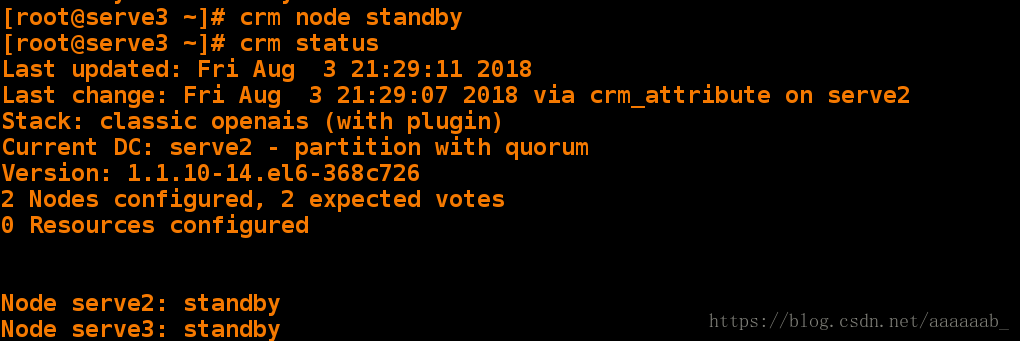

[root@serve3 ~]# crm node standby    # put server3 into standby as well; no node is left active

[root@serve3 ~]# crm status

Last updated: Fri Aug 3 21:29:11 2018

Last change: Fri Aug 3 21:29:07 2018 via crm_attribute on serve2

Stack: classic openais (with plugin)

Current DC: serve2 - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

0 Resources configured

Node serve2: standby

Node serve3: standby
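When checking many nodes, the standby entries can be pulled out of the `crm status` text automatically. The filter below is a small sketch that assumes the "Node <name>: standby" line format shown in the outputs above; `standby_nodes` is a name made up for this example.

```shell
# List the nodes that `crm status` reports as standby.
# Reads the status text on stdin and prints one node name per line.
standby_nodes() {
    sed -n 's/^Node \([^:]*\): standby *$/\1/p'
}
```

On a cluster node it would be used as `crm status | standby_nodes`. A node listed there can be returned to service with `crm node online`.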