此文章主要是结合哔站shuhuai008大佬的白板推导视频:生成对抗网络_54min

全部笔记的汇总贴:机器学习-白板推导系列笔记

一、例子

其中国宝是一个静态的,不会改变,工艺品和这个节目的鉴定水平是动态的,可学习的。

目标:成为高水平、可以以假乱真的大师。

- 高水平的鉴赏专家(手段)

- 高水平的工艺品大师(目标)

(高大师(高专家))

二、数学描述

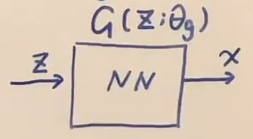

我们将上图转化为数学符号

古人: { x i } i = 1 N : P d a t a \{x_i\}_{i=1}^N:P_{data} { xi}i=1N:Pdata

工艺品: P g ( x ; θ g ) : g e n e r a t o r ( P z ( z ) + G ( z ; θ g ) ) Z ∼ P Z ( z ) x = G ( Z ; θ g ) P_g(x;\theta_g):generator(P_z(z)+G(z;\theta_g))\;\;\;\;\;\;Z\sim P_Z(z)\;\;\;\;\;\;\;\;\;x=G(Z;\theta_g) Pg(x;θg):generator(Pz(z)+G(z;θg))Z∼PZ(z)x=G(Z;θg)

x x x是国宝的概率: D ( x ; θ d ) D(x;\theta_d) D(x;θd)

高专家:

如果 x x x来自与 P d a t a P_{data} Pdata,则 D ( x ) D(x) D(x)相对较高。(可以改写为 log D ( x ) \log D(x) logD(x))

如果 x x x来自与 P g P_{g} Pg(相当于 Z Z Z来自于 P z P_z Pz),则 D ( x ) D(x) D(x)相对较低(可以改写为 log ( 1 − D ( G ( z ) ) ) \log (1-D(G(z))) log(1−D(G(z))),则这个应该较高)max D [ E x ∼ P d a t a [ log D ( x ) ] + E z ∼ P z [ log ( 1 − D ( G ( z ) ) ) ] ] \max_D\Bigg[E_{x\sim P_{data}}\Big[\log D(x)\Big]+E_{z\sim P_{z}}\Big[\log (1-D(G(z)))\Big]\Bigg] Dmax[Ex∼Pdata[logD(x)]+Ez∼Pz[log(1−D(G(z)))]]

扫描二维码关注公众号,回复: 12192665 查看本文章

高大师:

如果 x x x来自与 P g P_{g} Pg(相当于 Z Z Z来自于 P z P_z Pz),则 D ( x ) D(x) D(x)相对较高(可以改写为 log ( 1 − D ( G ( z ) ) ) \log (1-D(G(z))) log(1−D(G(z))),则这个应该较低)

min G E z ∼ P z [ log ( 1 − D ( G ( z ) ) ) ] \min_GE_{z\sim P_{z}}\Big[\log (1-D(G(z)))\Big] GminEz∼Pz[log(1−D(G(z)))]

总目标:

min G max D [ E x ∼ P d a t a [ log D ( x ) ] + E z ∼ P z [ log ( 1 − D ( G ( z ) ) ) ] ] \min_G\max_D\Bigg[E_{x\sim P_{data}}\Big[\log D(x)\Big]+E_{z\sim P_{z}}\Big[\log (1-D(G(z)))\Big]\Bigg] GminDmax[Ex∼Pdata[logD(x)]+Ez∼Pz[log(1−D(G(z)))]]

三、全局最优解

y ∣ x : d i s c r i m i n a t o r y|x:discriminator y∣x:discriminator

| y / x y/x y/x | 1 | 0 |

|---|---|---|

| p p p | D ( x ) D(x) D(x) | 1 − D ( x ) 1-D(x) 1−D(x) |

记

V ( D , G ) = E x ∼ P d a t a [ log D ( x ) ] + E x ∼ P g [ log ( 1 − D ( x ) ) ] V(D,G)=E_{x\sim P_{data}}\Big[\log D(x)\Big]+E_{x\sim P_{g}}\Big[\log (1-D(x))\Big] V(D,G)=Ex∼Pdata[logD(x)]+Ex∼Pg[log(1−D(x))]

固定 G G G,求 D ∗ D^* D∗,记作 D G ∗ D^*_G DG∗:

max D V ( D , G ) \max_DV(D,G) DmaxV(D,G)

max D V ( D , G ) = ∫ P d a t a ⋅ log D d x + ∫ P g ⋅ log ( 1 − D ) d x = ∫ [ P d a t a ⋅ log D + P g ⋅ log ( 1 − D ) ] d x \max_DV(D,G)=\int P_{data}\cdot\log D{d}x+\int P_g\cdot\log(1-D){d}x\\=\int\Big[P_{data}\cdot\log D+ P_g\cdot\log(1-D)\Big]{d}x DmaxV(D,G)=∫Pdata⋅logDdx+∫Pg⋅log(1−D)dx=∫[Pdata⋅logD+Pg⋅log(1−D)]dx

关于 D D D求偏导:

∂ ∂ D ( max D V ( D , G ) ) = ∂ ∂ D ∫ [ P d a t a ⋅ log D + P g ⋅ log ( 1 − D ) ] d x = ∫ ∂ ∂ D [ P d a t a ⋅ log D + P g ⋅ log ( 1 − D ) ] d x = ∫ [ P d a t a ⋅ 1 D + P g ⋅ − 1 1 − D ] d x \frac{\partial }{\partial D}(\max_DV(D,G))=\frac{\partial }{\partial D}\int\Big[P_{data}\cdot\log D+ P_g\cdot\log(1-D)\Big]{d}x\\=\int\frac{\partial }{\partial D}\Big[P_{data}\cdot\log D+ P_g\cdot\log(1-D)\Big]{d}x\\=\int\Big[P_{data}\cdot\frac1D+ P_g\cdot\frac{-1}{1-D}\Big]{d}x ∂D∂(DmaxV(D,G))=∂D∂∫[Pdata⋅logD+Pg⋅log(1−D)]dx=∫∂D∂[Pdata⋅logD+Pg⋅log(1−D)]dx=∫[Pdata⋅D1+Pg⋅1−D−1]dx

令导数为 0 0 0,得到:

D G ∗ = P d a t a P d a t a + P g D^*_G=\frac{P_{data}}{P_{data}+P_g} DG∗=Pdata+PgPdata

将 D G ∗ D^*_G DG∗代入,则有:

min G max D V ( D , G ) = min G V ( D G ∗ , G ) = min G E x ∼ P d a t a [ log P d a t a P d a t a + P g ] + E x ∼ P g [ log ( 1 − P d a t a P d a t a + P g ) ] = min G E x ∼ P d a t a [ log P d a t a P d a t a + P g ] + E x ∼ P g [ log P g P d a t a + P g ] = min G E x ∼ P d a t a [ log P d a t a P d a t a + P g 2 ⋅ 1 2 ] + E x ∼ P g [ log P g P d a t a + P g 2 ⋅ 1 2 ] = min G K L ( P d a t a ∣ ∣ P d a t a + P g 2 ) + K L ( P g ∣ ∣ P d a t a + P g 2 ) − log 4 ≥ − log 4 \min_G\max_D V(D,G)=\min_G V(D_G^*,G)\\=\min_GE_{x\sim P_{data}}\Big[\log \frac{P_{data}}{P_{data}+P_g}\Big]+E_{x\sim P_{g}}\Big[\log (1-\frac{P_{data}}{P_{data}+P_g})\Big]\\=\min_GE_{x\sim P_{data}}\Big[\log \frac{P_{data}}{P_{data}+P_g}\Big]+E_{x\sim P_{g}}\Big[\log \frac{P_{g}}{P_{data}+P_g}\Big]\\=\min_GE_{x\sim P_{data}}\Big[\log \frac{P_{data}}{\frac{P_{data}+P_g}2}\cdot\frac12\Big]+E_{x\sim P_{g}}\Big[\log \frac{P_{g}}{\frac{P_{data}+P_g}2}\cdot\frac12\Big]\\=\min_G KL(P_{data}||\frac{P_{data}+P_g}2)+KL(P_g||\frac{P_{data}+P_g}2)-\log 4\\\ge -\log 4 GminDmaxV(D,G)=GminV(DG∗,G)=GminEx∼Pdata[logPdata+PgPdata]+Ex∼Pg[log(1−Pdata+PgPdata)]=GminEx∼Pdata[logPdata+PgPdata]+Ex∼Pg[logPdata+PgPg]=GminEx∼Pdata[log2Pdata+PgPdata⋅21]+Ex∼Pg[log2Pdata+PgPg⋅21]=GminKL(Pdata∣∣2Pdata+Pg)+KL(Pg∣∣2Pdata+Pg)−log4≥−log4

当 P d a t a = P d a t a + P g 2 = P g P_{data}=\frac{P_{data}+P_g}2=P_g Pdata=2Pdata+Pg=Pg时,“=”成立。 \;

此时, P g ∗ = P d a t a , D g ∗ = 1 2 P^*_g=P_{data},D^*_g=\frac12 Pg∗=Pdata,Dg∗=21

\;

\;

\;

下一章传送门:白板推导系列笔记(三十二)-变分自编码器