Since LaTeX does not handle code well, the code is placed below:

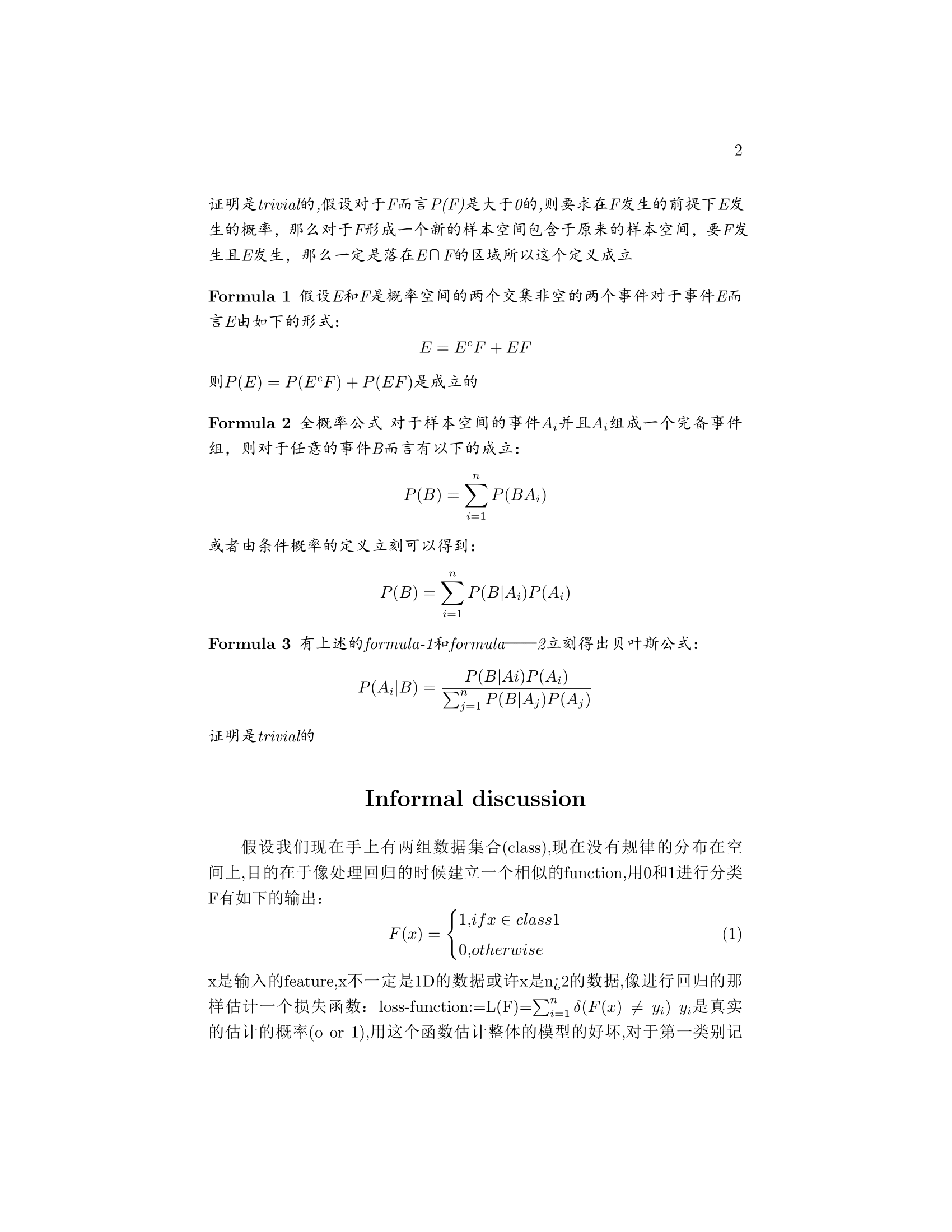

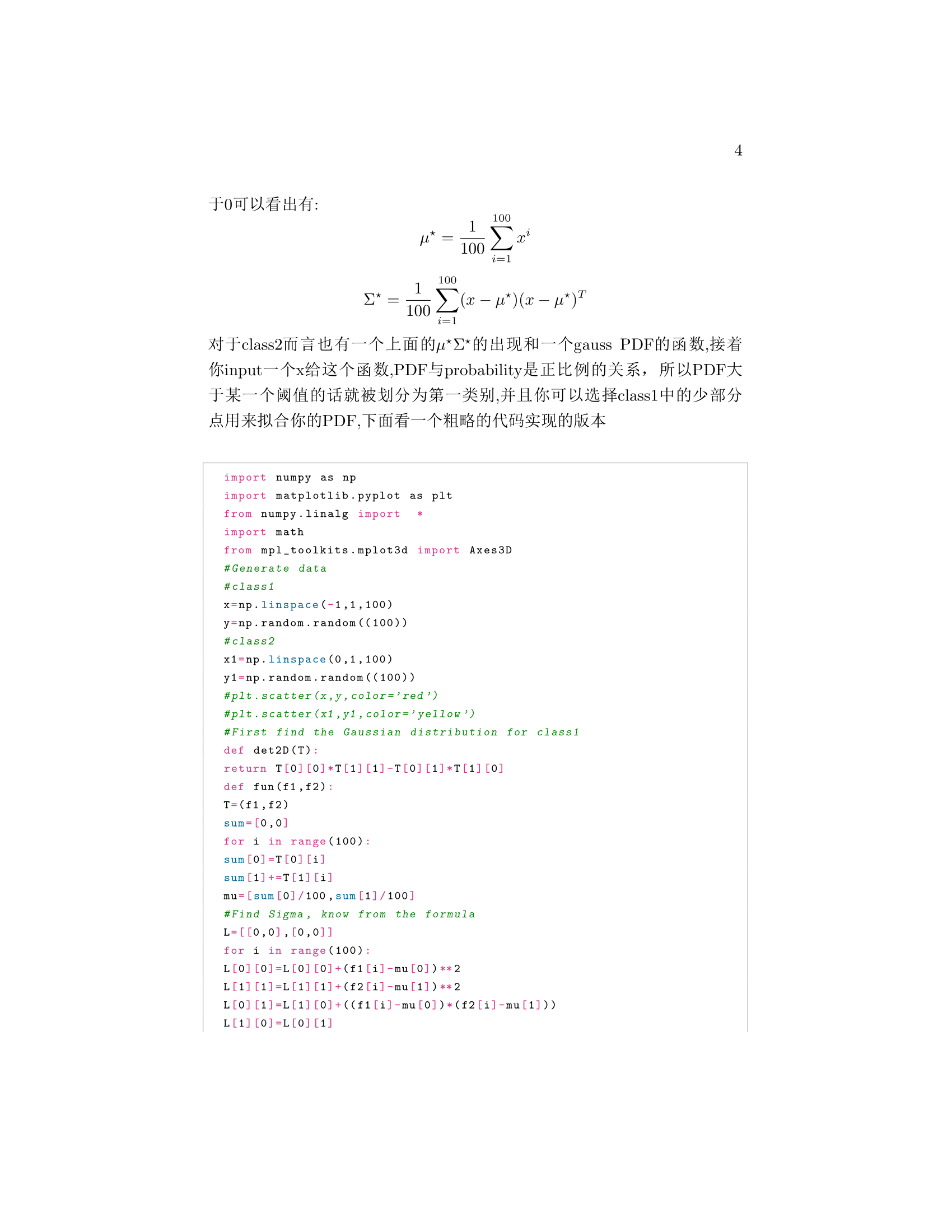

```python
import math

import matplotlib.pyplot as plt
import numpy as np

# Generate the data
# class1
x = np.linspace(-1, 1, 100)
y = np.random.random(100)
# class2
x1 = np.linspace(0, 1, 100)
y1 = np.random.random(100)
# plt.scatter(x, y, color='red')
# plt.scatter(x1, y1, color='yellow')


# First fit a Gaussian distribution to class1
def det2D(T):
    """Determinant of a 2x2 matrix."""
    return T[0][0] * T[1][1] - T[0][1] * T[1][0]


def fun(f1, f2):
    """Maximum-likelihood mean and covariance of the 2-D points (f1[i], f2[i])."""
    n = len(f1)
    s = [0.0, 0.0]
    for i in range(n):
        s[0] += f1[i]
        s[1] += f2[i]
    mu = [s[0] / n, s[1] / n]
    # Sigma, from the formula (divide by n, i.e. the MLE)
    L = [[0.0, 0.0], [0.0, 0.0]]
    for i in range(n):
        L[0][0] += (f1[i] - mu[0]) ** 2
        L[1][1] += (f2[i] - mu[1]) ** 2
        L[0][1] += (f1[i] - mu[0]) * (f2[i] - mu[1])
    L[1][0] = L[0][1]  # the covariance matrix is symmetric
    L = [[v / n for v in row] for row in L]
    return L, mu


sigma, mu = fun(x, y)
sigma = np.array(sigma)
mu = np.array(mu)
sigma_inv = np.linalg.inv(sigma)


def Gauss(p):
    """Density of the fitted 2-D Gaussian N(mu, sigma) at point p."""
    d = np.array(p) - mu
    med1 = 1 / (2 * np.pi * math.sqrt(det2D(sigma)))  # 2-D normalizing constant
    med2 = np.exp(-0.5 * d.dot(sigma_inv).dot(d))
    return med1 * med2


# Evaluate the class1 Gaussian at the points of both classes
text = [x, y]
text2 = [x1, y1]
res1 = []  # class1 model evaluated at class1 points
res2 = []  # class1 model evaluated at class2 points
for i in range(100):
    res1.append(Gauss([text[0][i], text[1][i]]))
    res2.append(Gauss([text2[0][i], text2[1][i]]))
res2, res1 = np.array(res2), np.array(res1)

# Compare the two: count the indices where the class1 point gets the higher density
t = res1 - res2
t1 = int(np.sum(t > 0))
print(t1)
# The count t1 indicates whether the class1 model is biased with respect to class2
```
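The loops in the code above can be cross-checked against a vectorized NumPy sketch. It assumes the usual maximum-likelihood estimates $\mu = \frac{1}{N}\sum_i x_i$ and $\Sigma = \frac{1}{N}\sum_i (x_i-\mu)(x_i-\mu)^T$, and the $d$-dimensional density $p(x) = \frac{1}{\sqrt{(2\pi)^d|\Sigma|}}\exp\left(-\frac{1}{2}(x-\mu)^T\Sigma^{-1}(x-\mu)\right)$, which for $d=2$ has the constant $\frac{1}{2\pi\sqrt{|\Sigma|}}$. The helper names `fit_gaussian` and `gaussian_pdf` and the fixed random seed are illustrative choices, not part of the original code.

```python
import numpy as np


def fit_gaussian(points):
    """MLE mean and covariance of an (N, d) array of samples (hypothetical helper)."""
    mu = points.mean(axis=0)
    diff = points - mu
    sigma = diff.T @ diff / len(points)  # divide by N, same as np.cov(..., bias=True)
    return mu, sigma


def gaussian_pdf(points, mu, sigma):
    """Density of N(mu, sigma) at each row of an (N, d) array (hypothetical helper)."""
    d = points.shape[1]
    diff = points - mu
    sigma_inv = np.linalg.inv(sigma)
    # Quadratic form (x - mu)^T Sigma^{-1} (x - mu), one value per row
    maha = np.einsum('ij,jk,ik->i', diff, sigma_inv, diff)
    norm = 1.0 / np.sqrt((2 * np.pi) ** d * np.linalg.det(sigma))
    return norm * np.exp(-0.5 * maha)


rng = np.random.default_rng(0)  # assumed fixed seed so runs are reproducible
class1 = np.column_stack([np.linspace(-1, 1, 100), rng.random(100)])
class2 = np.column_stack([np.linspace(0, 1, 100), rng.random(100)])

mu, sigma = fit_gaussian(class1)
res1 = gaussian_pdf(class1, mu, sigma)  # class1 model at class1 points
res2 = gaussian_pdf(class2, mu, sigma)  # class1 model at class2 points
t1 = int(np.sum(res1 > res2))           # same pairwise count as t1 above
print(mu, sigma, t1)
```

Because `t1` only compares densities from the same model, the normalizing constant cancels in the sign of `res1 - res2`; the count depends on the mean and covariance, so an error in the mean changes it while an error in the constant does not.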

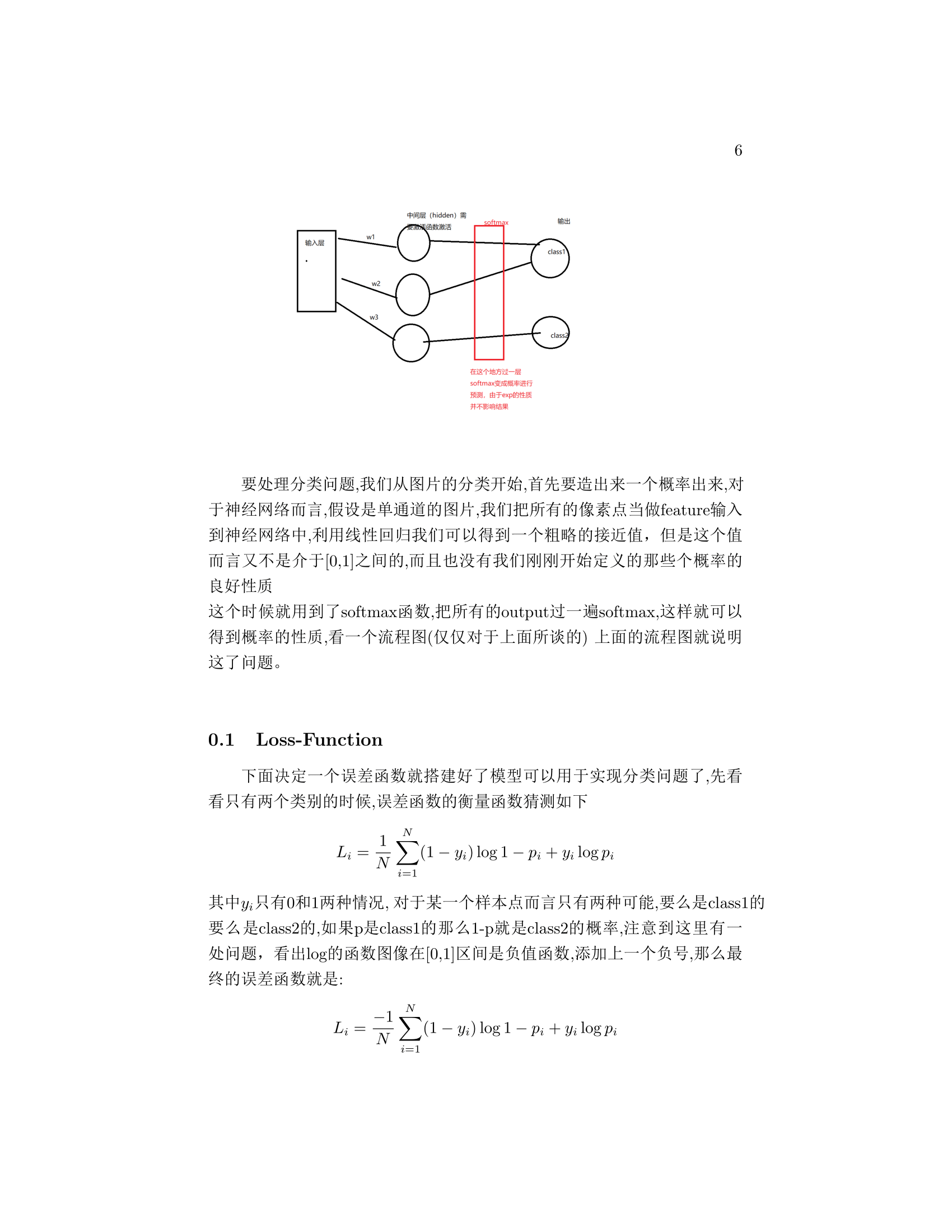

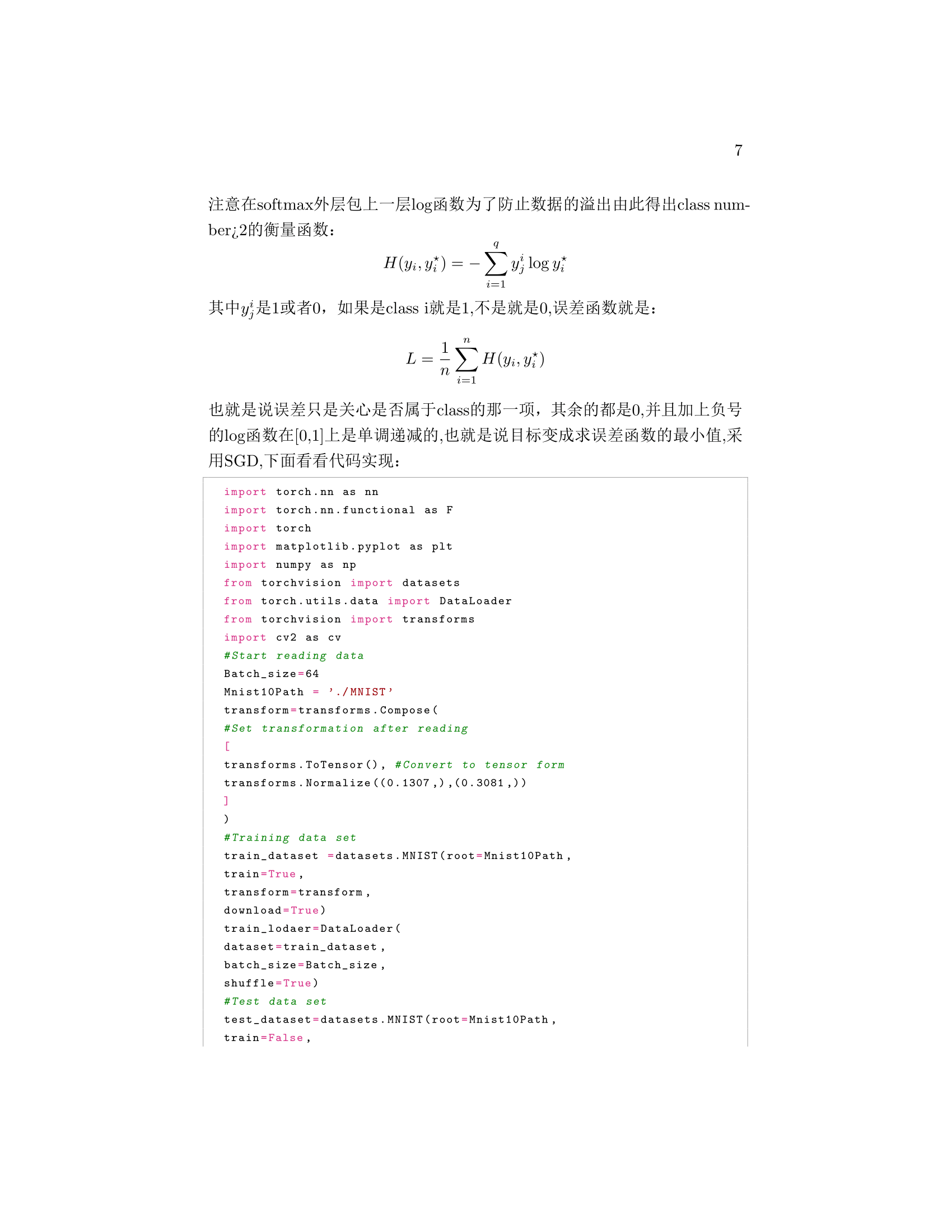

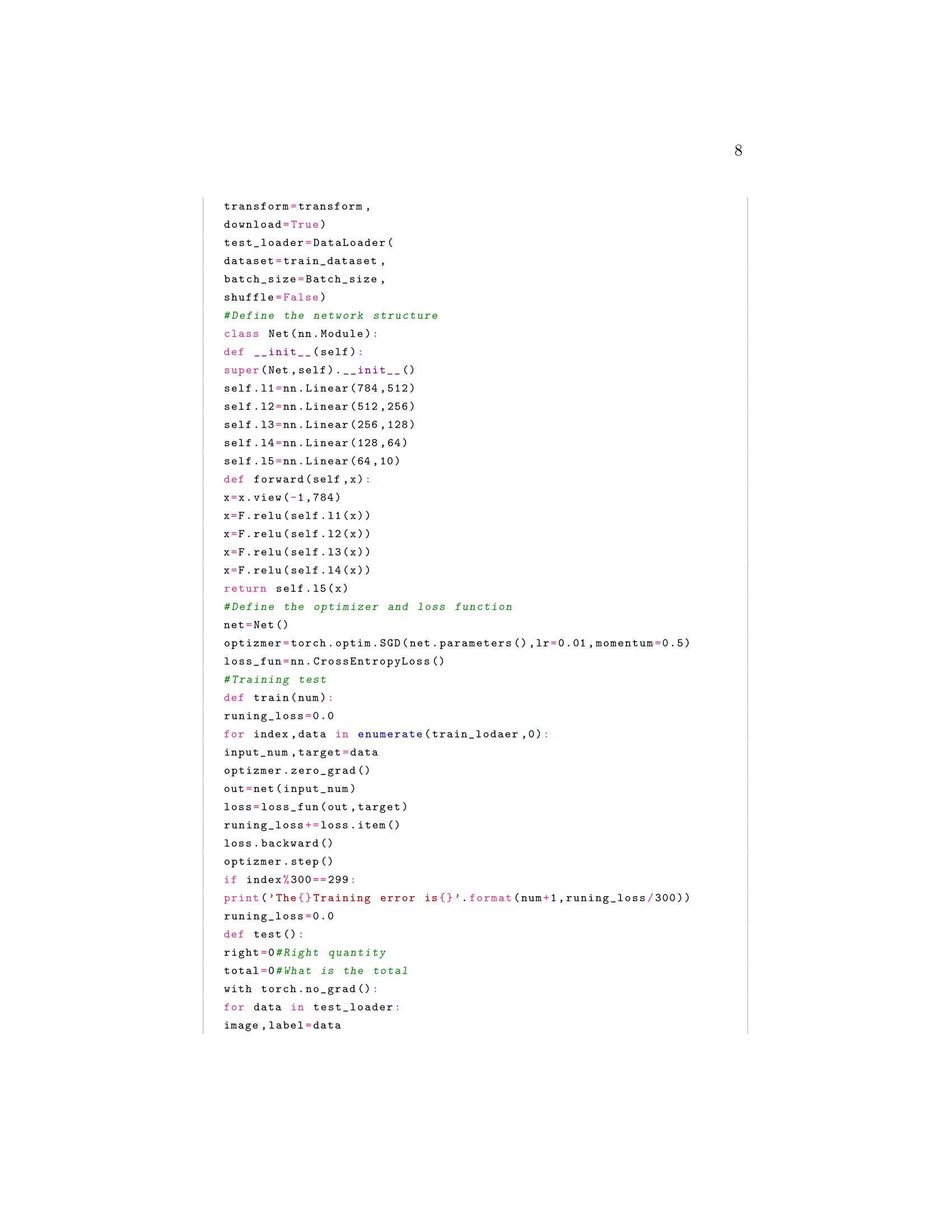

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

# Load the data
Batch_size = 64
Mnist10Path = './MNIST'
transform = transforms.Compose(
    # Transforms applied to each image after it is read
    [
        transforms.ToTensor(),  # convert to a tensor
        transforms.Normalize((0.1307,), (0.3081,))  # MNIST mean and std
    ]
)
# Training set
train_dataset = datasets.MNIST(root=Mnist10Path,
                               train=True,
                               transform=transform,
                               download=True)
train_loader = DataLoader(
    dataset=train_dataset,
    batch_size=Batch_size,
    shuffle=True)
# Test set
test_dataset = datasets.MNIST(root=Mnist10Path,
                              train=False,
                              transform=transform,
                              download=True)
test_loader = DataLoader(
    dataset=test_dataset,  # evaluate on the test set, not the training set
    batch_size=Batch_size,
    shuffle=False)


# Define the network
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.l1 = nn.Linear(784, 512)
        self.l2 = nn.Linear(512, 256)
        self.l3 = nn.Linear(256, 128)
        self.l4 = nn.Linear(128, 64)
        self.l5 = nn.Linear(64, 10)

    def forward(self, x):
        x = x.view(-1, 784)  # flatten each 1x28x28 image into a 784-vector
        x = F.relu(self.l1(x))
        x = F.relu(self.l2(x))
        x = F.relu(self.l3(x))
        x = F.relu(self.l4(x))
        return self.l5(x)  # raw logits; CrossEntropyLoss applies softmax itself


# Define the optimizer and loss function
net = Net()
optimizer = torch.optim.SGD(net.parameters(), lr=0.01, momentum=0.5)
loss_fun = nn.CrossEntropyLoss()


# Training and testing
def train(num):
    running_loss = 0.0
    for index, data in enumerate(train_loader, 0):
        input_num, target = data
        optimizer.zero_grad()
        out = net(input_num)
        loss = loss_fun(out, target)
        running_loss += loss.item()
        loss.backward()
        optimizer.step()
        if index % 300 == 299:
            # average loss over the last 300 batches
            print('Training loss in epoch {}: {}'.format(num + 1, running_loss / 300))
            running_loss = 0.0


def test():
    right = 0  # number of correct predictions
    total = 0  # total number of test images
    with torch.no_grad():
        for data in test_loader:
            image, label = data
            test_out = net(image)
            _, pre = torch.max(test_out, dim=1)  # predicted class = index of the largest logit
            total += label.size(0)  # images in this batch (up to 64)
            right += (pre == label).sum().item()  # correct predictions in this batch
    print('Correct rate is {}%'.format(100 * (right / total)))


if __name__ == '__main__':
    for i in range(3):
        train(i)
        test()
```
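The accuracy bookkeeping in `test()` reduces to an argmax over each row of logits followed by a comparison with the labels. The NumPy sketch below repeats that arithmetic on two hand-made batches so it can be checked by eye; the logits and labels are made-up illustrative values, and `batch_correct` is a hypothetical helper, not part of the original code.

```python
import numpy as np


def batch_correct(logits, labels):
    """Count rows whose largest logit is at the true label (mirrors torch.max(out, dim=1))."""
    pred = np.argmax(logits, axis=1)  # predicted class per image
    return int(np.sum(pred == labels))


# Two toy batches: 3 images each, 4 classes (made-up numbers)
batches = [
    (np.array([[2.0, 0.1, 0.3, 0.0],
               [0.2, 1.5, 0.1, 0.4],
               [0.0, 0.2, 0.1, 3.0]]), np.array([0, 1, 2])),  # last row is misclassified
    (np.array([[0.1, 0.2, 2.2, 0.0],
               [1.0, 0.0, 0.0, 0.5],
               [0.3, 0.3, 0.1, 0.9]]), np.array([2, 0, 3])),
]

right = 0
total = 0
for logits, labels in batches:
    total += labels.shape[0]  # add the actual batch size, not a fixed 64
    right += batch_correct(logits, labels)
print('Correct rate is {}%'.format(100 * (right / total)))
```

Adding the real batch size instead of assuming 64 matters for MNIST: its 10,000 test images do not divide evenly by 64, so the last batch holds only 16 images.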