The full problem statements are too long to reproduce here! Download the exercise document: [link]

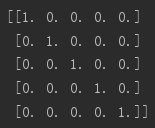

Problem 1

Task: create a 5×5 identity matrix.

```python
import numpy as np

A = np.eye(5)  # 5x5 identity matrix
print(A)
```

Output:

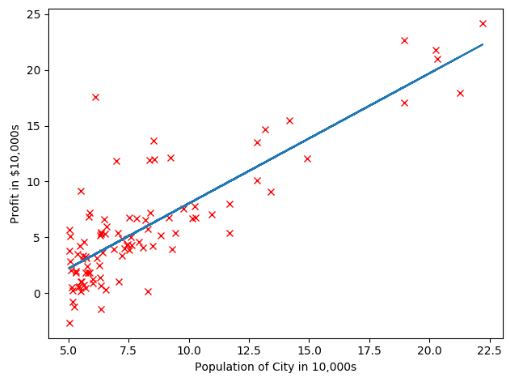

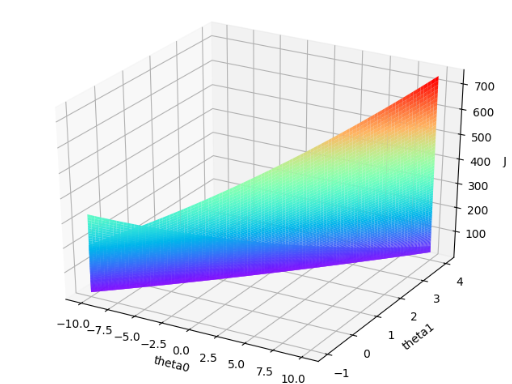
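The defining property of the identity matrix, $AM = MA = M$ for any $5 \times 5$ matrix $M$, is easy to check numerically. This short sketch (our addition, using an arbitrary test matrix `M`) also confirms that `np.identity(5)` builds the same matrix as `np.eye(5)`.

```python
import numpy as np

A = np.eye(5)                      # 5x5 identity matrix, dtype float64
M = np.arange(25.0).reshape(5, 5)  # arbitrary test matrix (illustrative only)

# Multiplying by the identity on either side leaves M unchanged
left_ok = np.array_equal(A @ M, M)
right_ok = np.array_equal(M @ A, M)

# np.identity(n) builds the same square identity matrix
same = np.array_equal(A, np.identity(5))

print(left_ok, right_ok, same)
```

`np.eye` is the more general of the two constructors: it also accepts a column count `M` and a diagonal offset `k`.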

Problem 2

Task: implement linear regression with one variable.

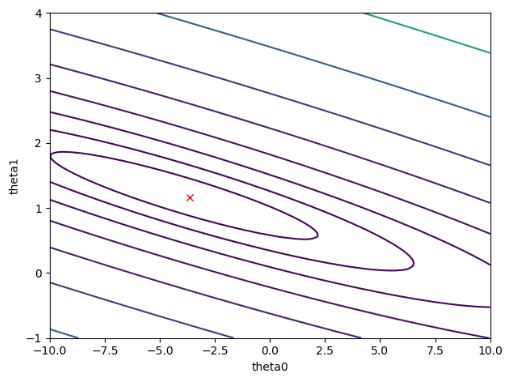
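For reference, these are the standard formulas the code implements, in the matrix form used by `computeCost` and by the update inside the gradient-descent loop. Here $X$ is the $m \times 2$ design matrix whose first column is all ones, $y$ is the $m \times 1$ profit vector, and $\alpha$ is the learning rate (notation chosen by us to match the code):

```latex
\begin{aligned}
h_\theta(x) &= \theta_0 + \theta_1 x \\
J(\theta) &= \frac{1}{2m}\sum_{i=1}^{m}\bigl(h_\theta(x^{(i)}) - y^{(i)}\bigr)^2
           = \frac{1}{2m}\,\lVert X\theta - y\rVert^2 \\
\theta &:= \theta - \frac{\alpha}{m}\,X^{\top}\bigl(X\theta - y\bigr)
\end{aligned}
```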

```python
import numpy as np
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # noqa: F401  registers the 3-D projection on older Matplotlib

# ----------------- Cost function -----------------
def computeCost(X, y, theta):
    m = len(y)
    J = 1 / (2 * m) * np.sum((np.dot(X, theta) - y) ** 2)
    return J


# ----------------- Predict profit from city population -----------------
# Load the data set: column 1 is population, column 2 is profit
data = np.loadtxt('ex1data1.txt', delimiter=",", dtype="float")
m = np.size(data[:, 0])

# ----------------- Plot the training samples -----------------
X = data[:, 0:1]
y = data[:, 1:2]
plt.plot(X, y, "rx")
plt.xlabel('Population of City in 10,000s')
plt.ylabel('Profit in $10,000s')

# ----------------- Gradient descent -----------------
# Prepend a column of ones (intercept term)
one = np.ones(m)
X = np.insert(X, 0, values=one, axis=1)

# Initial theta, number of iterations and learning rate alpha
theta = np.zeros((2, 1))
iterations = 1500
alpha = 0.01

# Run gradient descent, then draw the fitted line over the samples
J_history = np.zeros((iterations, 1))
for it in range(iterations):
    theta = theta - alpha / m * np.dot(X.T, (np.dot(X, theta) - y))
    J_history[it] = computeCost(X, y, theta)
plt.plot(data[:, 0], np.dot(X, theta), '-')
plt.show()

# ----------------- 3-D surface plot of J(theta) -----------------
theta0 = np.linspace(-10, 10, 100)
theta1 = np.linspace(-1, 4, 100)
J_vals = np.zeros((np.size(theta0), np.size(theta1)))
for i in range(np.size(theta0)):
    for j in range(np.size(theta1)):
        t = np.asarray([theta0[i], theta1[j]]).reshape(2, 1)
        J_vals[i, j] = computeCost(X, y, t)
J_vals = J_vals.T  # rows must follow theta1 and columns theta0, otherwise the axes are swapped
T0, T1 = np.meshgrid(theta0, theta1)  # plot_surface needs 2-D coordinate grids
fig1 = plt.figure()
ax = fig1.add_subplot(projection='3d')  # Axes3D(fig) no longer attaches itself to the figure in recent Matplotlib
ax.plot_surface(T0, T1, J_vals, rstride=1, cstride=1, cmap='rainbow')
ax.set_xlabel('theta0')
ax.set_ylabel('theta1')
ax.set_zlabel('J')
plt.show()

# ----------------- Contour plot -----------------
lines = np.logspace(-2, 3, 20)
plt.contour(theta0, theta1, J_vals, levels=lines)
plt.xlabel('theta0')
plt.ylabel('theta1')
plt.plot(theta[0], theta[1], 'rx')  # mark the theta found by gradient descent
plt.show()
```

Output:


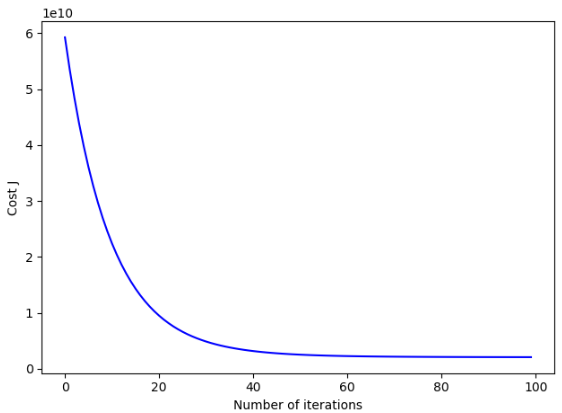
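If `ex1data1.txt` is not at hand, the same batch gradient descent can be exercised on synthetic data. This is a self-contained sketch under stated assumptions: the data-generating line y = -4 + 1.2x + noise is arbitrary (not the exercise data), `compute_cost` and `gradient_descent` are our own helper names, and `np.linalg.lstsq` supplies the closed-form least-squares optimum for comparison. With alpha = 0.01 and 1500 iterations the cost should fall monotonically, though θ may still stop somewhat short of the exact optimum.

```python
import numpy as np


def compute_cost(X, y, theta):
    """Squared-error cost J(theta) = 1/(2m) * sum((X @ theta - y)**2)."""
    m = len(y)
    return float(np.sum((X @ theta - y) ** 2) / (2 * m))


def gradient_descent(X, y, theta, alpha, iterations):
    """Batch gradient descent; returns the final theta and the per-iteration cost."""
    m = len(y)
    J_history = np.zeros(iterations)
    for i in range(iterations):
        theta = theta - (alpha / m) * (X.T @ (X @ theta - y))
        J_history[i] = compute_cost(X, y, theta)
    return theta, J_history


# Hypothetical synthetic data standing in for ex1data1.txt: y = -4 + 1.2 x + noise.
# x is kept in [5, 10] so that alpha = 0.01 stays well inside the stable range.
rng = np.random.default_rng(0)
x = rng.uniform(5, 10, size=(100, 1))
y = -4 + 1.2 * x + rng.normal(0, 1.0, size=(100, 1))

X = np.hstack([np.ones((len(x), 1)), x])  # prepend the intercept column of ones
theta, J_history = gradient_descent(X, y, np.zeros((2, 1)), alpha=0.01, iterations=1500)

# Closed-form least-squares optimum for comparison
theta_ls, *_ = np.linalg.lstsq(X, y, rcond=None)

print("gradient descent:", theta.ravel())
print("least squares:   ", theta_ls.ravel())
```

Printing both vectors side by side makes any remaining gap from the closed-form solution visible; raising `iterations` narrows it.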

Problem 3

Task: implement linear regression with multiple variables.
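For reference, the two methods compared in the code rest on these standard formulas (notation chosen to match `mu`, `sigma`, `X_norm` and the `pinv` call): each feature is z-score normalized before gradient descent, while the normal equation is solved directly on the raw features with a column of ones prepended. `np.linalg.pinv` computes the pseudo-inverse, which equals the ordinary inverse whenever $X^\top X$ is invertible.

```latex
\begin{aligned}
x_j^{\text{norm}} &= \frac{x_j - \mu_j}{\sigma_j}, \qquad j = 1, 2 \\
\theta &:= \theta - \frac{\alpha}{m}\,X_{\text{norm}}^{\top}\bigl(X_{\text{norm}}\theta - y\bigr) \\
\theta &= \bigl(X^{\top}X\bigr)^{-1}X^{\top}y
\end{aligned}
```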

```python
import numpy as np
import matplotlib.pyplot as plt

# ----------------- Cost function -----------------
def computeCost(X, y, theta):
    m = len(y)
    J = 1 / (2 * m) * np.sum((np.dot(X, theta) - y) ** 2)
    return J

# ----------------- Predict house price from size and number of bedrooms -----------------
# Load the data set: column 1 is size, column 2 is number of bedrooms, column 3 is price
data = np.loadtxt('ex1data2.txt', delimiter=",", dtype="float")
m = np.size(data[:, 0])
X = data[:, 0:2]
y = data[:, 2:3]

# ----------------- Feature normalization (subtract mean, divide by std) -----------------
mu = np.mean(X, 0)
sigma = np.std(X, 0)
X_norm = np.divide(np.subtract(X, mu), sigma)
one = np.ones(m)  # prepend a column of ones
X_norm = np.insert(X_norm, 0, values=one, axis=1)

# ----------------- Gradient descent -----------------
alpha = 0.05
num_iters = 100
theta = np.zeros((3, 1))
J_history = np.zeros((num_iters, 1))
for it in range(num_iters):
    theta = theta - alpha / m * np.dot(X_norm.T, (np.dot(X_norm, theta) - y))
    J_history[it] = computeCost(X_norm, y, theta)
x_col = np.arange(num_iters)
plt.plot(x_col, J_history, '-b')
plt.xlabel('Number of iterations')
plt.ylabel('Cost J')
plt.show()

# ----------------- Use the result to predict the price for [1650, 3] -----------------
test1 = [1, 1650, 3]
test1[1:3] = np.divide(np.subtract(test1[1:3], mu), sigma)  # normalize with the training mu and sigma
price = np.dot(test1, theta)
print(price)  # predicted price: [292455.63375132]

# ----------------- Solve with the normal equation -----------------
one = np.ones(m)
X = np.insert(X, 0, values=one, axis=1)
theta = np.dot(np.dot(np.linalg.pinv(np.dot(X.T, X)), X.T), y)
price = np.dot([1, 1650, 3], theta)  # raw, un-normalized features here
print(price)  # predicted price: [293081.46433497]
```

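Output: [An open question: the prices estimated by the two methods differ only slightly, yet the two θ vectors differ considerably. Why?]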
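A likely answer to this question (our own addition, not from the original post): the gradient-descent θ is fitted on the normalized features `X_norm`, while the normal-equation θ is fitted on the raw features, so the two vectors are expressed in different units and are not directly comparable. Because $x_j^{\text{norm}} = (x_j - \mu_j)/\sigma_j$, a normalized θ maps back to raw coefficients via $\theta_j^{\text{raw}} = \theta_j/\sigma_j$ for $j \ge 1$ and $\theta_0^{\text{raw}} = \theta_0 - \sum_j \theta_j\mu_j/\sigma_j$. The sketch below checks this on hypothetical synthetic data (not `ex1data2.txt`); `unnormalize_theta` is our own helper. To isolate the scaling effect, both fits use the exact least-squares solution. The small price gap in the original run presumably comes from stopping gradient descent after only 100 iterations.

```python
import numpy as np


def unnormalize_theta(theta_norm, mu, sigma):
    """Convert theta fitted on z-scored features into raw-feature coefficients.

    theta_norm has shape (n + 1, 1) with the intercept first;
    mu and sigma have shape (n,) and are the training means / std devs.
    """
    theta_raw = np.empty_like(theta_norm)
    theta_raw[1:, 0] = theta_norm[1:, 0] / sigma
    theta_raw[0, 0] = theta_norm[0, 0] - np.sum(theta_norm[1:, 0] * mu / sigma)
    return theta_raw


# Hypothetical synthetic data shaped like ex1data2.txt (size, bedrooms -> price)
rng = np.random.default_rng(1)
area = rng.uniform(800, 4500, size=47)
bedrooms = rng.integers(1, 6, size=47).astype(float)
X = np.column_stack([area, bedrooms])
y = (50000 + 140 * area - 8000 * bedrooms
     + rng.normal(0, 20000, size=47)).reshape(-1, 1)

# Design matrices with a leading column of ones: normalized and raw features
mu, sigma = X.mean(axis=0), X.std(axis=0)
X_norm = np.column_stack([np.ones(len(y)), (X - mu) / sigma])
X_raw = np.column_stack([np.ones(len(y)), X])

# Exact least-squares fits in each feature space (no iteration-count effects)
theta_norm = np.linalg.pinv(X_norm) @ y
theta_raw = np.linalg.pinv(X_raw) @ y

print(theta_norm.ravel())                                # normalized-space theta
print(unnormalize_theta(theta_norm, mu, sigma).ravel())  # mapped back to raw units
print(theta_raw.ravel())                                 # raw-space theta
```

The last two printed vectors should agree up to rounding error, while the first looks very different even though all three describe the same fitted plane and therefore give the same predicted prices.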