http://openclassroom.stanford.edu/MainFolder/DocumentPage.php?course=MachineLearning&doc=exercises/ex2/ex2.html

Exercise 1 assignment:

I used Octave.

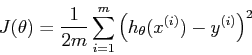

Understanding $J(\theta)$

We'd like to understand better what gradient descent has done, and visualize the relationship between the parameters $\theta$ and $J(\theta)$. In this problem, we'll plot $J(\theta)$ as a 3D surface plot. (When applying learning algorithms, we don't usually try to plot $J(\theta)$, since usually $\theta$ is very high-dimensional, so we don't have any simple way to plot or visualize $J(\theta)$. But because the example here uses a very low-dimensional $\theta$ (here $\theta \in \mathbb{R}^2$), we'll plot $J(\theta)$ to gain more intuition about linear regression.) Recall that the formula for $J(\theta)$ is

$$J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2, \qquad h_\theta(x) = \theta^T x = \theta_0 + \theta_1 x_1$$
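As a quick check of the formula, the sketch below evaluates $J(\theta)$ on a made-up three-sample dataset (not the ex2 data) in two ways: the vectorized form $(X\theta - y)^T (X\theta - y) / (2m)$, which is what the program computes, and the explicit sum over $i$. The anonymous function `compute_cost` and the toy values are hypothetical, introduced only for illustration.

```octave
% Hypothetical toy data (not ex2x.dat / ex2y.dat): three samples, one feature
x = [ones(3,1), [1; 2; 3]];   % design matrix with the intercept column x_0 = 1
y = [1; 2; 2];
theta = [0.5; 0.5];

% Vectorized cost: J = (x*theta - y)' * (x*theta - y) / (2m)
compute_cost = @(x, y, theta) (x*theta - y)' * (x*theta - y) / (2 * length(y));

% The same quantity written as the explicit sum over i = 1..m
m = length(y);
J_loop = 0;
for i = 1:m
  h = x(i,:) * theta;              % h_theta(x^(i)) = theta' * x^(i)
  J_loop = J_loop + (h - y(i))^2;  % squared residual of sample i
end
J_loop = J_loop / (2 * m);

J_vec = compute_cost(x, y, theta);
fprintf('J (vectorized) = %g, J (loop) = %g\n', J_vec, J_loop);
```

Both values come out to 0.25/6 ≈ 0.0416667: the predictions are 1, 1.5 and 2, so only the second sample has a nonzero residual (-0.5).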

The program is as follows:

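```octave
% Load the training data (ages in ex2x.dat, heights in ex2y.dat)
x = load('C:/Users/samsung/Desktop/ex2Data/ex2x.dat');
y = load('C:/Users/samsung/Desktop/ex2Data/ex2y.dat');

m = length(y);          % number of training examples
x = [ones(m,1), x];     % add the intercept column (x_0 = 1)

% Grid of parameter values at which J(theta) is evaluated
J_vals = zeros(100, 100);
theta0_vals = linspace(-3, 3, 100);
theta1_vals = linspace(-1, 1, 100);

for i = 1:length(theta0_vals)
  for j = 1:length(theta1_vals)
    t = [theta0_vals(i); theta1_vals(j)];
    % J(theta) = (X*theta - y)' * (X*theta - y) / (2m)
    J_vals(i,j) = (x*t - y)' * (x*t - y) / (2*m);
  end
end

% surf expects rows to follow the second axis (theta1), so transpose
% J_vals; otherwise the theta0 and theta1 axes come out swapped
J_vals = J_vals';
figure;
surf(theta0_vals, theta1_vals, J_vals);
xlabel('\theta_0'); ylabel('\theta_1');
```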
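The transpose `J_vals = J_vals'` is needed because the loop stores $\theta_0$ along the rows, while `surf(theta0_vals, theta1_vals, Z)` expects `Z(j,i)` to hold the value at `theta0_vals(i)`, `theta1_vals(j)`. A minimal sketch, assuming made-up toy data in place of the `.dat` files, builds the grid with `meshgrid` (which already produces that layout) and checks it against the transposed loop result. The names `J_loop` and `J_grid` are hypothetical.

```octave
% Hypothetical toy data standing in for ex2x.dat / ex2y.dat
x = [ones(4,1), [2; 3; 5; 7]];
y = [0.8; 0.9; 1.0; 1.1];
m = length(y);

theta0_vals = linspace(-3, 3, 100);
theta1_vals = linspace(-1, 1, 100);

% Loop version as in the program: J_loop(i,j) uses theta0_vals(i), theta1_vals(j)
J_loop = zeros(100, 100);
for i = 1:numel(theta0_vals)
  for j = 1:numel(theta1_vals)
    t = [theta0_vals(i); theta1_vals(j)];
    J_loop(i,j) = (x*t - y)' * (x*t - y) / (2*m);
  end
end

% Grid version: T0(j,i) = theta0_vals(i) and T1(j,i) = theta1_vals(j),
% so rows follow theta1 and columns follow theta0, as surf expects
[T0, T1] = meshgrid(theta0_vals, theta1_vals);
J_grid = zeros(size(T0));
for k = 1:m
  % residual of sample k, evaluated at every grid point at once
  r = T0 + T1 * x(k,2) - y(k);
  J_grid = J_grid + r.^2;
end
J_grid = J_grid / (2*m);

% The grid version matches the transposed loop result
fprintf('max difference: %g\n', max(max(abs(J_grid - J_loop'))));
```

Because `J_grid` is already in the orientation `surf` expects, it can be passed directly as `surf(theta0_vals, theta1_vals, J_grid)` with no transpose. The loop runs over the m samples instead of over all 10,000 grid points.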

Exercise 2:

```octave
% Load the training data: x = ages (years), y = heights (meters)
x = load('C:/Users/samsung/Desktop/ex2Data/ex2x.dat');
y = load('C:/Users/samsung/Desktop/ex2Data/ex2y.dat');

figure % open a new figure window
plot(x, y, 'o');
ylabel('Height in meters');
xlabel('Age in years');

theta = [0; 0];
alfa = 0.07;            % learning rate for gradient descent
m = length(y);
x = [ones(m,1), x];     % add the intercept column (x_0 = 1)

% Gradient descent: iterate 1500 times to find theta
% for i = 1:1500
%   theta = theta - x' * (x*theta - y) / m * alfa;
% end

% Normal equation: gives the same theta in closed form, and is faster to compute
theta = inv(x'*x) * x' * y;

hold on % Plot new data without clearing old plot
plot(x(:,2), x*theta, '-')
% remember that x is now a matrix with 2 columns
% and the second column contains the ages
legend('Training data', 'Linear regression')
```
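The commented-out gradient descent loop and the normal equation should agree on theta. The sketch below runs both on hypothetical noise-free data, $y = 0.75 + 0.06 \cdot \text{age}$ (chosen for illustration, not the ex2 data). The learning rate 0.05 and the 5000 iterations are assumptions picked so gradient descent converges on this toy data; the post's 0.07 and 1500 were used with its own data. The normal equation is solved here with Octave's backslash operator instead of `inv`, which solves the same linear system without forming the inverse explicitly.

```octave
% Hypothetical noise-free toy data: height = 0.75 + 0.06 * age exactly
age = (2:0.5:8)';
y = 0.75 + 0.06 * age;
m = length(y);
x = [ones(m,1), age];    % design matrix with the intercept column

% Batch gradient descent, same update rule as the commented-out loop
alfa = 0.05;             % assumed step size for this toy data
theta_gd = [0; 0];
for iter = 1:5000
  theta_gd = theta_gd - alfa * x' * (x*theta_gd - y) / m;
end

% Normal equation: backslash solves (x'*x) * theta = x'*y directly
theta_ne = (x' * x) \ (x' * y);

fprintf('gradient descent: [%.4f %.4f]\n', theta_gd);
fprintf('normal equation:  [%.4f %.4f]\n', theta_ne);
```

Both lines should print `[0.7500 0.0600]`, since the toy data lies exactly on that line. On a problem this small the closed-form solve is effectively instant, while gradient descent needs thousands of iterations to match it.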