From raw text to a TensorFlow 1.4 implementation (1): Linear Regression (LR)

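Preface:

Linear regression is one of the easier machine learning algorithms to understand and implement. The function y = ax + b from middle school is a special case of it, in which the parameters a and b and the variables x and y are all scalars. In practice we generalize it by replacing the scalars with matrices or tensors such as W and Y; in a sense a scalar is also a tensor, just a special case. Put more formally, the goal of linear regression is to find the linear relationship between features and outcomes in a dataset that contains features and classes (assuming the features and classes in the dataset are in fact linearly related).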
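To make the step from scalars to matrices concrete, here is a minimal NumPy sketch. The shapes (5 samples, 4 features, 3 outputs) and the constant values are illustrative assumptions chosen so the result is easy to check by hand.

```python
import numpy as np

# Scalar case: y = a*x + b
a, b = 2.0, 1.0
x = 3.0
y = a * x + b  # 2*3 + 1

# Matrix case: the same form, Y = XW + bias, applied to many samples at once.
X = np.ones((5, 4))            # 5 samples, 4 features each
W = np.full((4, 3), 0.5)       # maps 4 features to 3 outputs
bias = np.zeros((1, 3)) + 0.2  # one bias per output, broadcast over the rows
Y = X @ W + bias               # shape (5, 3); every entry is 4*0.5 + 0.2

print(y, Y.shape)
```

The bias has shape (1, 3) and is broadcast across the 5 rows, which is the same layout the TensorFlow code later in this post uses for `b`.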

References: (1) Converting numeric labels to one-hot labels: https://blog.csdn.net/a_yangfh/article/details/77911126

(2) Shuffling training data randomly with numpy: https://blog.csdn.net/kaizhongwang/article/details/78864090

(3) Storing and loading data (numpy, pickle): https://blog.csdn.net/cfyzcc/article/details/51804466

(4) Linear regression in tensorflow: https://blog.csdn.net/monkey131499/article/details/52145254

Code implementation:

If you just want to run it, skip straight to the full code at the end.

tensorflow: 1.4

python: 3.6

1. First, data processing:

Download the data here, from the UCI machine learning datasets: https://archive.ics.uci.edu/ml/machine-learning-databases/iris/

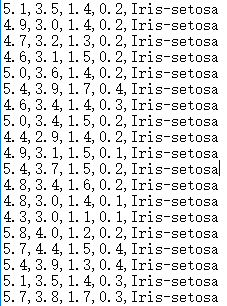

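This is the Iris flower dataset: the full file has 150 samples covering 3 species (50 per species), and each flower has 4 features.

The data looks like this:

Code (data processing: read the txt file and convert it to numbers):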

import tensorflow as tf
import numpy as np
import pickle

filename = 'E:/workspace/dataSet/Classic/sentence/iris.data.txt'

### Convert the iris data labels to numbers
def readChangeIris(filename):
    iris_XY = []
    label_dict = {}
    with open(filename, 'r', encoding='gbk') as file:
        for text in file:  # iterate once per line so that no row is skipped
            text = text.strip()
            if not text:  # skip blank lines, e.g. at the end of the file
                continue
            texts = text.split(',')
            mid_iris_trainX = []
            for i in range(len(texts) - 1):
                wordNumber = float(texts[i])  # note: float, not int
                mid_iris_trainX.append(wordNumber)
            irisY = texts[-1].strip()
            if irisY not in label_dict:  # build a dict mapping each label to its class number
                label_dict[irisY] = len(label_dict) + 1
            irisLabel = label_dict[irisY]
            mid_iris_trainX.append(irisLabel)
            iris_XY.append(mid_iris_trainX)
    return iris_XY, label_dict
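One pitfall when reading a file line by line is calling `readline()` both in the loop condition and in the loop body: each pass then consumes two lines, so every other row is silently dropped. Below is a minimal sketch of the difference, using a four-line in-memory sample instead of the real file. The sample rows and the helper names `read_skipping`, `read_all`, and `parse` are illustrative assumptions, not part of the post's code.

```python
import io

SAMPLE = (
    "5.1,3.5,1.4,0.2,Iris-setosa\n"
    "4.9,3.0,1.4,0.2,Iris-setosa\n"
    "7.0,3.2,4.7,1.4,Iris-versicolor\n"
    "6.3,3.3,6.0,2.5,Iris-virginica\n"
)

def read_skipping(f):
    """Reads with readline() in both the condition and the body."""
    rows = []
    while f.readline():      # consumes one line just to test for end of file
        text = f.readline()  # consumes the following line
        if text.strip():
            rows.append(text.strip())
    return rows

def read_all(f):
    """Iterates over the file object, visiting every line exactly once."""
    return [line.strip() for line in f if line.strip()]

def parse(rows):
    """Converts features to float and maps each label to a 1-based class number."""
    label_dict = {}
    parsed = []
    for row in rows:
        *values, label = row.split(',')
        if label not in label_dict:
            label_dict[label] = len(label_dict) + 1
        parsed.append([float(v) for v in values] + [label_dict[label]])
    return parsed, label_dict

skipped = read_skipping(io.StringIO(SAMPLE))
rows = read_all(io.StringIO(SAMPLE))
parsed, label_dict = parse(rows)
print(len(skipped), len(rows), label_dict)
```

On the four-line sample the first reader returns only two rows, while iterating over the file object returns all four.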

2. Data storage (numpy, pickle):

### Write the data to a file
def writePickle():
    iris_XY, label_dict = readChangeIris(filename)
    iris_XY_numpy = np.array(iris_XY)  # convert the list to a numpy array, which is easier to slice
    print('readchange ok!')
    with open('irisNumber.pkl', 'wb') as irisNumber:
        pickle.dump(iris_XY_numpy, irisNumber)

def loadPickle():
    with open('irisNumber.pkl', 'rb') as pkl_file:
        irisXY = pickle.load(pkl_file)
    # mind the slicing: columns 0-3 are the features, column 4 is the label
    iris_X = irisXY[:, 0:4]
    iris_yy = irisXY[:, 4]
    iris_Y = []
    for y in iris_yy:  # turn class numbers 1, 2, 3 into one-hot rows
        if y == 1:
            iris_Y.append([1, 0, 0])
        elif y == 2:
            iris_Y.append([0, 1, 0])
        elif y == 3:
            iris_Y.append([0, 0, 1])
    iris_Y_numpy = np.array(iris_Y)
    return iris_X, iris_Y_numpy

3. Reading and shuffling the data:
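The two ideas this step depends on are turning class numbers into one-hot rows and shuffling features and labels with the same permutation, so that each row keeps its label, before splitting into non-overlapping train and test sets. A minimal sketch on toy data follows; the helper names `one_hot` and `shuffle_split`, the fixed seed, and the 10-row toy array are assumptions made for illustration only.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Turn 1-based integer labels into one-hot rows."""
    idx = np.asarray(labels, dtype=int) - 1
    return np.eye(num_classes)[idx]

def shuffle_split(X, Y, n_test, seed=0):
    """Shuffle X and Y with one shared permutation, then hold out the last n_test rows."""
    rng = np.random.RandomState(seed)
    indices = rng.permutation(X.shape[0])
    X, Y = X[indices], Y[indices]
    return X[:-n_test], Y[:-n_test], X[-n_test:], Y[-n_test:]

# Toy data: row k is [2k, 2k+1], so the original row index can be recovered.
X = np.arange(20, dtype=float).reshape(10, 2)
labels = np.array([1, 2, 3, 1, 2, 3, 1, 2, 3, 1])
Y = one_hot(labels, 3)

train_X, train_Y, test_X, test_Y = shuffle_split(X, Y, n_test=3)
print(train_X.shape, test_X.shape)
```

Slicing with `[:-n_test]` and `[-n_test:]` guarantees that no test row is also used for training, whatever the total number of rows is.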

writePickle()
iris_X, iris_Y = loadPickle()

# onehotLabels = digit2onehot(iris_yy, 3)
indices = np.random.permutation(iris_X.shape[0])
# indices = numpy.random.permutation(len(data_x))
rand_data_x = iris_X[indices]
rand_data_y = iris_Y[indices]

# hold out the last 20 shuffled rows as the test set so that train and test do not overlap
iris_train_X = rand_data_x[:-20, :]
iris_train_Y = rand_data_y[:-20, :]
iris_test_X = rand_data_x[-20:, :]
iris_test_Y = rand_data_y[-20:, :]

4. Linear regression:
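The model in this step applies a softmax to the linear output XW + b and minimizes the mean cross-entropy with gradient descent, a combination that is often called softmax regression. As a rough, framework-free sketch of the same computation, here is a NumPy version. The random toy data, the function names, the learning rate of 0.1, and the 100 steps are illustrative assumptions, not the settings used in this post.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(pred, y_onehot):
    """Mean over samples of -sum(y * log(pred)); eps guards against log(0)."""
    eps = 1e-12
    return float(np.mean(-np.sum(y_onehot * np.log(pred + eps), axis=1)))

def gradient_step(X, Y, W, b, lr):
    """One full-batch gradient descent step on the mean cross-entropy."""
    n = X.shape[0]
    diff = (softmax(X @ W + b) - Y) / n  # gradient of the loss w.r.t. the logits
    W_new = W - lr * (X.T @ diff)
    b_new = b - lr * diff.sum(axis=0, keepdims=True)
    return W_new, b_new

rng = np.random.RandomState(0)
X = rng.randn(30, 4)                        # 30 toy samples, 4 features
Y = np.eye(3)[rng.randint(0, 3, size=30)]   # random one-hot labels, 3 classes
W = rng.randn(4, 3)
b = np.zeros((1, 3)) + 0.2

loss_before = cross_entropy(softmax(X @ W + b), Y)
for _ in range(100):
    W, b = gradient_step(X, Y, W, b, lr=0.1)
loss_after = cross_entropy(softmax(X @ W + b), Y)
print(loss_before, loss_after)
```

The gradient with respect to the logits is simply `softmax(XW + b) - Y` averaged over the batch, which is the quantity that `GradientDescentOptimizer` derives automatically from the TensorFlow graph.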

in_size = 4
out_size = 3

x = tf.placeholder(tf.float32, [None, in_size])
y = tf.placeholder(tf.float32, [None, out_size])

W = tf.Variable(tf.random_normal([in_size, out_size]), name='W')
b = tf.Variable(tf.zeros([1, out_size]) + 0.2, name='b')  # start the bias at 0.2 rather than 0

WXB = tf.matmul(x, W) + b
pred = tf.nn.softmax(WXB)
cross_entropy = tf.reduce_mean(-tf.reduce_sum(y * tf.log(pred), axis=1))  # loss
train_step = tf.train.GradientDescentOptimizer(0.008).minimize(cross_entropy)

# build the accuracy ops once, outside the loop, so the graph does not grow on every evaluation
correct_prediction = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

init = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init)
    for i in range(1600):
        sess.run(train_step, feed_dict={x: iris_train_X, y: iris_train_Y})
        if i % 100 == 0:
            print("ready go!")
            y_pre = sess.run(pred, feed_dict={x: iris_test_X})  # a tensor has to be run to get its value
            result = sess.run(accuracy, feed_dict={x: iris_test_X, y: iris_test_Y})
            cross_entropy_loss = sess.run(cross_entropy, feed_dict={x: iris_train_X, y: iris_train_Y})
            print('Predictions:', [y_pre, '\n', iris_test_Y], 'Loss:', cross_entropy_loss, '\t\t', 'Accuracy: ', result)

5. Full code:

import tensorflow as tf
import numpy as np
import pickle

filename = 'E:/workspace/dataSet/Classic/sentence/iris.data.txt'

### Convert the iris data labels to numbers
def readChangeIris(filename):
    iris_XY = []
    label_dict = {}
    with open(filename, 'r', encoding='gbk') as file:
        for text in file:  # iterate once per line so that no row is skipped
            text = text.strip()
            if not text:  # skip blank lines, e.g. at the end of the file
                continue
            texts = text.split(',')
            mid_iris_trainX = []
            for i in range(len(texts) - 1):
                wordNumber = float(texts[i])  # note: float, not int
                mid_iris_trainX.append(wordNumber)
            irisY = texts[-1].strip()
            if irisY not in label_dict:  # build a dict mapping each label to its class number
                label_dict[irisY] = len(label_dict) + 1
            irisLabel = label_dict[irisY]
            mid_iris_trainX.append(irisLabel)
            iris_XY.append(mid_iris_trainX)
    return iris_XY, label_dict

# Test
iris_XY, label_dict = readChangeIris(filename)

### Write the data to a file
def writePickle():
    iris_XY, label_dict = readChangeIris(filename)
    iris_XY_numpy = np.array(iris_XY)  # convert the list to a numpy array, which is easier to slice
    print('readchange ok!')
    with open('irisNumber.pkl', 'wb') as irisNumber:
        pickle.dump(iris_XY_numpy, irisNumber)

def loadPickle():
    with open('irisNumber.pkl', 'rb') as pkl_file:
        irisXY = pickle.load(pkl_file)
    # mind the slicing: columns 0-3 are the features, column 4 is the label
    iris_X = irisXY[:, 0:4]
    iris_yy = irisXY[:, 4]
    iris_Y = []
    for y in iris_yy:  # turn class numbers 1, 2, 3 into one-hot rows
        if y == 1:
            iris_Y.append([1, 0, 0])
        elif y == 2:
            iris_Y.append([0, 1, 0])
        elif y == 3:
            iris_Y.append([0, 0, 1])
    iris_Y_numpy = np.array(iris_Y)
    return iris_X, iris_Y_numpy

# Write and read the data
writePickle()
iris_X, iris_Y = loadPickle()

# onehotLabels = digit2onehot(iris_yy, 3)
indices = np.random.permutation(iris_X.shape[0])
# indices = numpy.random.permutation(len(data_x))
rand_data_x = iris_X[indices]
rand_data_y = iris_Y[indices]

# hold out the last 20 shuffled rows as the test set so that train and test do not overlap
iris_train_X = rand_data_x[:-20, :]
iris_train_Y = rand_data_y[:-20, :]
iris_test_X = rand_data_x[-20:, :]
iris_test_Y = rand_data_y[-20:, :]

in_size = 4
out_size = 3

x = tf.placeholder(tf.float32, [None, in_size])
y = tf.placeholder(tf.float32, [None, out_size])

W = tf.Variable(tf.random_normal([in_size, out_size]), name='W')
b = tf.Variable(tf.zeros([1, out_size]) + 0.2, name='b')  # start the bias at 0.2 rather than 0

WXB = tf.matmul(x, W) + b
pred = tf.nn.softmax(WXB)
cross_entropy = tf.reduce_mean(-tf.reduce_sum(y * tf.log(pred), axis=1))  # loss
train_step = tf.train.GradientDescentOptimizer(0.008).minimize(cross_entropy)

# build the accuracy ops once, outside the loop, so the graph does not grow on every evaluation
correct_prediction = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

init = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init)
    for i in range(1600):
        sess.run(train_step, feed_dict={x: iris_train_X, y: iris_train_Y})
        if i % 100 == 0:
            print("ready go!")
            y_pre = sess.run(pred, feed_dict={x: iris_test_X})  # a tensor has to be run to get its value
            result = sess.run(accuracy, feed_dict={x: iris_test_X, y: iris_test_Y})
            cross_entropy_loss = sess.run(cross_entropy, feed_dict={x: iris_train_X, y: iris_train_Y})
            print('Predictions:', [y_pre, '\n', iris_test_Y], 'Loss:', cross_entropy_loss, '\t\t', 'Accuracy: ', result)

6. You don't know until you try it yourself: there are plenty of pitfalls, especially in data processing, with all its different formats and data types.

Hope this helps!