I. Linear regression is the foundation of logistic regression. Put simply, it finds the relationship between an input X and an output Y.
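With a single input feature, the model, the per-sample squared-error loss (Step 5 of the code) and the gradient-descent update (Step 6) can be written as below. The symbol η for the learning rate (0.001 in the code) is notation introduced here, not taken from the original.

$$
\begin{aligned}
\hat{y} &= w x + b \\
L(w, b) &= \left(y - \hat{y}\right)^2 \\
\frac{\partial L}{\partial w} &= -2x\left(y - \hat{y}\right), \qquad
\frac{\partial L}{\partial b} = -2\left(y - \hat{y}\right) \\
w &\leftarrow w - \eta \frac{\partial L}{\partial w}, \qquad
b \leftarrow b - \eta \frac{\partial L}{\partial b}
\end{aligned}
$$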

II. Here is the code:

```python
# coding:utf-8
import numpy as np
import matplotlib.pyplot as plt
# Written against the TensorFlow 1.x graph API (tf.placeholder, tf.Session).
# tf.compat.v1 with v2 behavior disabled lets it run on TensorFlow 2.x too.
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

# Step 1: data construction (each row is [x, y])
data = np.asarray([
    [6.2, 29.],
    [9.5, 44.],
    [10.5, 36.],
    [7.7, 37.],
    [8.6, 53.],
    [34.1, 68.],
    [11., 75.],
    [6.9, 18.],
    [7.3, 31.],
    [15.1, 25.],
    [29.1, 34.],
    [2.2, 14.],
    [5.7, 11.],
    [2., 11.],
    [2.5, 22.],
    [4., 16.],
    [5.4, 27.],
    [2.2, 9.],
    [7.2, 29.],
    [15.1, 30.],
    [16.5, 40.],
    [18.4, 32.],
    [36.2, 41.],
    [39.7, 147.],
    [18.5, 22.],
    [23.3, 29.],
    [12.2, 46.],
    [5.6, 23.],
    [21.8, 4.],
    [21.6, 31.],
    [9., 39.],
    [3.6, 15.],
    [5., 32.],
    [28.6, 27.],
    [17.4, 32.],
    [11.3, 34.],
    [3.4, 17.],
    [11.9, 46.],
    [10.5, 42.],
    [10.7, 43.],
    [10.8, 34.],
    [4.8, 19.]])

n_samples = data.shape[0]

# Step 2: create placeholders for input X and label Y
X = tf.placeholder(tf.float32, name='X')
Y = tf.placeholder(tf.float32, name='Y')

# Step 3: create weight and bias, initialized to 0
w = tf.Variable(0.0, name='weights')
b = tf.Variable(0.0, name='bias')

# Step 4: build the model to predict Y
Y_predicted = X * w + b

# Step 5: use the squared error as the loss function
loss = tf.square(Y - Y_predicted, name='loss')

# Step 6: use gradient descent with a learning rate of 0.001 to minimize the loss
optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.001).minimize(loss)

# Step 7: train the model for 50 epochs, one sample per update
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for i in range(50):
        total_loss = 0
        for x, y in data:
            # Run the training op and fetch the loss for this sample
            _, sub_loss = sess.run([optimizer, loss], feed_dict={X: x, Y: y})
            total_loss += sub_loss
        # Average per-sample loss over the epoch
        print('Epoch {0}: {1}'.format(i, total_loss / n_samples))

    # Step 8: output the learned values of w and b
    w_value, b_value = sess.run([w, b])
    print("w:[", w_value, "] b[", b_value, "]")

# Step 9: draw the result
x_data, y_data = data.T[0], data.T[1]
plt.plot(x_data, y_data, 'bo', label='Real data')
plt.plot(x_data, x_data * w_value + b_value, 'r', label='Predicted data')
plt.legend()
plt.show()
```
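To make explicit what `GradientDescentOptimizer(...).minimize(loss)` does on each `sess.run` call, here is a NumPy-free, TensorFlow-free sketch of the same per-sample update. It assumes the same zero initialization, learning rate 0.001 and 50 epochs as the code above; `sgd_linear_regression` is a hypothetical helper written for illustration, not part of TensorFlow.

```python
def sgd_linear_regression(data, learning_rate=0.001, epochs=50):
    """Per-sample gradient descent on (y - (w*x + b))**2, mirroring the TF graph above.

    Hypothetical helper for illustration; returns (w, b, per-epoch average loss).
    """
    w, b = 0.0, 0.0  # same zero initialization as the TF variables
    history = []
    for _ in range(epochs):
        total_loss = 0.0
        for x, y in data:
            error = y - (w * x + b)      # residual before this sample's update
            total_loss += error ** 2     # loss value reported for this sample
            # Gradients of error**2: d/dw = -2*x*error, d/db = -2*error
            w -= learning_rate * (-2.0 * x * error)
            b -= learning_rate * (-2.0 * error)
        history.append(total_loss / len(data))  # average loss per sample
    return w, b, history
```

Calling `sgd_linear_regression(data)` with the `data` array from Step 1 runs the same 50 epochs of updates in the same order, so its per-epoch averages should track the TensorFlow log closely, although the float64 arithmetic here versus float32 in the graph can introduce small differences.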

III. Output

The console output is:

```
Epoch 0: 2069.6319333978354
Epoch 1: 2117.0123581953535
Epoch 2: 2092.302723001866
Epoch 3: 2068.5080461938464
Epoch 4: 2045.591184088162
Epoch 5: 2023.5146448101316
Epoch 6: 2002.2447619835536
Epoch 7: 1981.748338803649
Epoch 8: 1961.9944411260742
Epoch 9: 1942.9520116143283
Epoch 10: 1924.5930823644712
Epoch 11: 1906.8898800636332
Epoch 12: 1889.8164505837929
Epoch 13: 1873.347133841543
Epoch 14: 1857.4588400604468
Epoch 15: 1842.1278742424079
Epoch 16: 1827.332495119955
Epoch 17: 1813.0520579712022
Epoch 18: 1799.2660847636982
Epoch 19: 1785.9562132299961
Epoch 20: 1773.1024853109072
Epoch 21: 1760.689129482884
Epoch 22: 1748.6984157081515
Epoch 23: 1737.1138680398553
Epoch 24: 1725.920873066732
Epoch 25: 1715.1046249579008
Epoch 26: 1704.6500954309377
Epoch 27: 1694.5447134910141
Epoch 28: 1684.7746311347667
Epoch 29: 1675.328450968245
Epoch 30: 1666.1935385839038
Epoch 31: 1657.3584002084322
Epoch 32: 1648.8122658529207
Epoch 33: 1640.5440742547091
Epoch 34: 1632.5446836102221
Epoch 35: 1624.8043315147183
Epoch 36: 1617.3126799958602
Epoch 37: 1610.0622532456405
Epoch 38: 1603.0433557207386
Epoch 39: 1596.2479176106197
Epoch 40: 1589.668056331575
Epoch 41: 1583.2965242617897
Epoch 42: 1577.126371285745
Epoch 43: 1571.1501190634
Epoch 44: 1565.360979151513
Epoch 45: 1559.7523780798629
Epoch 46: 1554.3184364555138
Epoch 47: 1549.0529469620615
Epoch 48: 1543.950059985476
Epoch 49: 1539.0050282141283
w:[ 1.99747 ] b[ 12.5687 ]
```

The plotted output is:

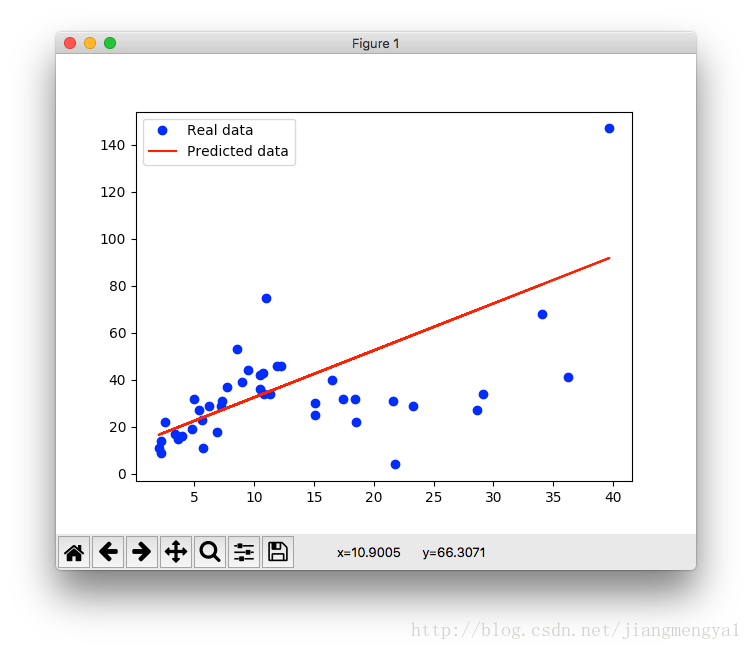

The red line in the figure is our fitted linear model; it follows the overall direction in which the points are distributed.
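Since the reported loss is still falling at epoch 49, the red line after 50 epochs of gradient descent is not necessarily the exact least-squares line. For a single feature that line has a closed form: slope = cov(x, y) / var(x), with the intercept chosen so the line passes through the means. The sketch below computes it; `least_squares_line` is a hypothetical helper name, and the comparison with `np.polyfit` is only a sanity check.

```python
import numpy as np


def least_squares_line(x, y):
    """Return (w, b) minimizing sum((y - (w*x + b))**2) in closed form.

    Hypothetical helper for illustration.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    # Slope = covariance(x, y) / variance(x); the normalizing 1/n factors cancel
    w = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    # The least-squares line passes through the point (x_mean, y_mean)
    b = y_mean - w * x_mean
    return w, b
```

Applied as `least_squares_line(data[:, 0], data[:, 1])`, this gives the line that the training loop is moving toward, which can be compared with the printed `w` and `b`.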

Modeling the distribution of the points more precisely would require a suitably more complex model, with correspondingly more parameters.
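One simple way to add parameters, offered here only as an illustrative swap-in rather than the author's method, is a polynomial of higher degree fitted by least squares with `np.polyfit`: a degree-d polynomial has d + 1 parameters. The hypothetical helper below returns the coefficients and the training mean squared error. Note that training error cannot increase as the degree grows, but a lower training error alone does not mean a better model, because extra parameters can overfit.

```python
import numpy as np


def polynomial_fit_mse(x, y, degree):
    """Least-squares polynomial fit; returns (coefficients, training MSE).

    Hypothetical helper for illustration; degree=1 is the straight line above.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    coeffs = np.polyfit(x, y, degree)       # degree + 1 parameters, highest power first
    predictions = np.polyval(coeffs, x)     # evaluate the fitted polynomial at each x
    mse = np.mean((y - predictions) ** 2)   # mean squared error on the training data
    return coeffs, mse
```

For example, `polynomial_fit_mse(data[:, 0], data[:, 1], 1)` and `polynomial_fit_mse(data[:, 0], data[:, 1], 3)` compare the straight line with a cubic on the article's data.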