1. Auto-Encoders (AE) in Practice

Importing modules

# Image reconstruction
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

# Use the GPU and let memory allocation grow on demand
from tensorflow.compat.v1 import ConfigProto
from tensorflow.compat.v1 import InteractiveSession
config = ConfigProto()
config.gpu_options.allow_growth = True
session = InteractiveSession(config=config)

import numpy as np
import random
import tensorflow as tf
import matplotlib.pyplot as plt
from PIL import Image
from tensorflow import keras
from tensorflow.keras import Sequential, layers

# Set the random seeds
def seed_everything(SEED):
    os.environ['TF_DETERMINISTIC_OPS'] = '1'
    os.environ['PYTHONHASHSEED'] = str(SEED)
    random.seed(SEED)
    np.random.seed(SEED)
    tf.random.set_seed(SEED)

seed_everything(42)
assert tf.__version__.startswith('2.')

Saving images

# Image-saving helper: tiles many images into a single image
def save_images(imgs, name):
    new_im = Image.new('L', (280, 280))
    index = 0
    # Paste 100 images of 28x28 pixels into a 10 x 10 grid
    for i in range(0, 280, 28):
        for j in range(0, 280, 28):
            im = imgs[index]
            im = Image.fromarray(im, mode='L')
            new_im.paste(im, (i, j))
            index += 1
    new_im.save(name)
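To make the grid layout concrete, here is a minimal NumPy-only sketch of the same tiling, assuming a batch of at least 100 grayscale 28x28 `uint8` images. `tile_images` is a hypothetical helper, not part of the original code. Because `paste` takes `(x, y)` offsets and the outer loop steps the x offset, the images fill the grid column by column.

```python
import numpy as np

def tile_images(imgs, grid=10, size=28):
    """Tile the first grid*grid images into one (grid*size, grid*size) array."""
    canvas = np.zeros((grid * size, grid * size), dtype=np.uint8)
    index = 0
    for col in range(grid):        # outer loop: x offset, as in save_images
        for row in range(grid):    # inner loop: y offset
            canvas[row * size:(row + 1) * size,
                   col * size:(col + 1) * size] = imgs[index]
            index += 1
    return canvas

# 100 constant images whose pixel value equals their index
demo = np.stack([np.full((28, 28), k, dtype=np.uint8) for k in range(100)])
grid_img = tile_images(demo)
print(grid_img.shape)    # (280, 280)
print(grid_img[28, 0])   # 1: image 1 sits directly below image 0
print(grid_img[0, 28])   # 10: image 10 starts the second column
```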

Loading and preprocessing the dataset

h_dim = 20  # reduce the original 784-dimensional data to 20 dimensions
batchsz = 512
lr = 1e-3

# Load the dataset and scale pixel values to [0, 1]
(x_train, y_train), (x_test, y_test) = keras.datasets.fashion_mnist.load_data()
x_train, x_test = x_train.astype(np.float32) / 255., x_test.astype(np.float32) / 255.

# This is unsupervised learning, so the labels y_train and y_test are not used
train_db = tf.data.Dataset.from_tensor_slices(x_train)
train_db = train_db.shuffle(batchsz * 5).batch(batchsz)
test_db = tf.data.Dataset.from_tensor_slices(x_test)
test_db = test_db.batch(batchsz)

print(x_train.shape, y_train.shape)
print(x_test.shape, y_test.shape)
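A quick arithmetic check, as a plain-Python sketch: with 60,000 training images and `batchsz = 512`, `batch()` without `drop_remainder` yields 118 batches per epoch, the last one partial. That is why the training log shows only steps 0 and 100 in each epoch when progress is printed every 100 steps. `batches_per_epoch` is a hypothetical helper introduced only for this illustration.

```python
import math

def batches_per_epoch(num_samples, batch_size):
    """Number of batches when the final partial batch is kept."""
    return math.ceil(num_samples / batch_size)

n = batches_per_epoch(60000, 512)
last = 60000 - (n - 1) * 512                    # size of the final, partial batch
logged = [s for s in range(n) if s % 100 == 0]  # steps at which progress is printed
print(n, last, logged)  # 118 96 [0, 100]
```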

Building the network

# Define the model
class AE(keras.Model):
    def __init__(self):
        super(AE, self).__init__()
        # Encoder
        self.encoder = Sequential([
            layers.Dense(256, activation=tf.nn.relu),
            layers.Dense(128, activation=tf.nn.relu),
            layers.Dense(h_dim)
        ])
        # Decoder
        self.decoder = Sequential([
            layers.Dense(128, activation=tf.nn.relu),
            layers.Dense(256, activation=tf.nn.relu),
            layers.Dense(784)
        ])

    def call(self, inputs, training=None):
        # [b, 784] -> [b, h_dim]
        h = self.encoder(inputs)
        # [b, h_dim] -> [b, 784]
        x_hat = self.decoder(h)
        return x_hat

Full code:

# Image reconstruction
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

# Use the GPU and let memory allocation grow on demand
from tensorflow.compat.v1 import ConfigProto
from tensorflow.compat.v1 import InteractiveSession
config = ConfigProto()
config.gpu_options.allow_growth = True
session = InteractiveSession(config=config)

import numpy as np
import random
import tensorflow as tf
import matplotlib.pyplot as plt
from PIL import Image
from tensorflow import keras
from tensorflow.keras import Sequential, layers

# Set the random seeds
def seed_everything(SEED):
    os.environ['TF_DETERMINISTIC_OPS'] = '1'
    os.environ['PYTHONHASHSEED'] = str(SEED)
    random.seed(SEED)
    np.random.seed(SEED)
    tf.random.set_seed(SEED)

seed_everything(42)
assert tf.__version__.startswith('2.')

# Image-saving helper: tiles many images into a single image
def save_images(imgs, name):
    new_im = Image.new('L', (280, 280))
    index = 0
    # Paste 100 images of 28x28 pixels into a 10 x 10 grid
    for i in range(0, 280, 28):
        for j in range(0, 280, 28):
            im = imgs[index]
            im = Image.fromarray(im, mode='L')
            new_im.paste(im, (i, j))
            index += 1
    new_im.save(name)

h_dim = 20  # reduce the original 784-dimensional data to 20 dimensions
batchsz = 512
lr = 1e-3

# Load the dataset and scale pixel values to [0, 1]
(x_train, y_train), (x_test, y_test) = keras.datasets.fashion_mnist.load_data()
x_train, x_test = x_train.astype(np.float32) / 255., x_test.astype(np.float32) / 255.

# This is unsupervised learning, so the labels y_train and y_test are not used
train_db = tf.data.Dataset.from_tensor_slices(x_train)
train_db = train_db.shuffle(batchsz * 5).batch(batchsz)
test_db = tf.data.Dataset.from_tensor_slices(x_test)
test_db = test_db.batch(batchsz)

print(x_train.shape, y_train.shape)
print(x_test.shape, y_test.shape)

# Define the model
class AE(keras.Model):
    def __init__(self):
        super(AE, self).__init__()
        # Encoder
        self.encoder = Sequential([
            layers.Dense(256, activation=tf.nn.relu),
            layers.Dense(128, activation=tf.nn.relu),
            layers.Dense(h_dim)
        ])
        # Decoder
        self.decoder = Sequential([
            layers.Dense(128, activation=tf.nn.relu),
            layers.Dense(256, activation=tf.nn.relu),
            layers.Dense(784)
        ])

    def call(self, inputs, training=None):
        # [b, 784] -> [b, h_dim]
        h = self.encoder(inputs)
        # [b, h_dim] -> [b, 784]
        x_hat = self.decoder(h)
        return x_hat

model = AE()
model.build(input_shape=(None, 784))
# Inspect the network structure
model.summary()

optimizer = tf.optimizers.Adam(learning_rate=lr)

# training
for epoch in range(50):
    for step, x in enumerate(train_db):
        # [b,28,28] -> [b,784]
        x = tf.reshape(x, [-1, 784])
        with tf.GradientTape() as tape:
            x_rec_logits = model(x)
            rec_loss = tf.losses.binary_crossentropy(x, x_rec_logits, from_logits=True)
            rec_loss = tf.reduce_mean(rec_loss)
        grads = tape.gradient(rec_loss, model.trainable_variables)
        optimizer.apply_gradients(zip(grads, model.trainable_variables))
        # Print training progress
        if step % 100 == 0:
            print(epoch, step, float(rec_loss))

    # evaluation, once per epoch
    x = next(iter(test_db))
    # The inputs were flattened during training, so flatten them here as well
    x = tf.reshape(x, [-1, 784])
    logits = model.predict(x)
    x_hat = tf.sigmoid(logits)
    # [b,784] => [b,28,28]
    x_hat = tf.reshape(x_hat, [-1, 28, 28])
    # [b,28,28] => [2b,28,28]
    # x_concat = tf.concat([x, x_hat], axis=0)
    x_concat = x_hat
    x_concat = x_concat.numpy() * 255.
    x_concat = x_concat.astype(np.uint8)
    save_images(x_concat, 'ae_images/rec_epoch_%d.png' % epoch)
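The loop above passes raw decoder outputs to `binary_crossentropy` with `from_logits=True`, so the sigmoid is folded into the loss. As a sketch of what that computes per pixel, the NumPy function below uses the usual numerically stable form `max(z, 0) - z * x + log(1 + exp(-|z|))` for a logit `z` and target `x`. `bce_with_logits` and `bce_naive` are hypothetical names, and this is an illustration rather than TensorFlow's actual implementation.

```python
import numpy as np

def bce_with_logits(targets, logits):
    """Element-wise binary cross-entropy computed directly from logits."""
    targets = np.asarray(targets, dtype=np.float64)
    logits = np.asarray(logits, dtype=np.float64)
    return np.maximum(logits, 0) - logits * targets + np.log1p(np.exp(-np.abs(logits)))

def bce_naive(targets, logits):
    """Reference form: apply the sigmoid first, then the cross-entropy."""
    p = 1.0 / (1.0 + np.exp(-logits))
    return -(targets * np.log(p) + (1 - targets) * np.log(1 - p))

x = np.array([0.0, 0.3, 1.0])
z = np.array([-2.0, 0.5, 3.0])
print(np.allclose(bce_with_logits(x, z), bce_naive(x, z)))  # True
print(bce_with_logits(0.5, 0.0))  # log(2), about 0.693
```

With all logits at zero the loss is log(2) regardless of the target, which is close to the first value in the training log.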

(60000, 28, 28) (60000,)

(10000, 28, 28) (10000,)

Model: "ae"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

sequential (Sequential) multiple 235146

_________________________________________________________________

sequential_1 (Sequential) multiple 235920

=================================================================

Total params: 471,066

Trainable params: 471,066

Non-trainable params: 0

_________________________________________________________________

0 0 0.6929969787597656

0 100 0.3294386863708496

1 0 0.31267669796943665

1 100 0.30951932072639465

2 0 0.2991226315498352

2 100 0.30571430921554565

3 0 0.30124884843826294

3 100 0.29927968978881836

4 0 0.29633498191833496

4 100 0.29800713062286377

5 0 0.28601688146591187

...
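The parameter counts in the summary can be checked by hand: a `Dense` layer with `n_in` inputs and `n_out` units has `n_in * n_out + n_out` parameters. The plain-Python sketch below, with a hypothetical helper `mlp_params`, shows that the printed totals of 235,146 and 235,920 correspond to a 10-dimensional bottleneck. With `h_dim = 20`, as set in the code, the same arithmetic gives 236,436 and 237,200.

```python
def mlp_params(sizes):
    """Weights plus biases of a stack of Dense layers with the given layer sizes."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))

for h in (10, 20):
    enc = mlp_params([784, 256, 128, h])   # encoder: 784 -> 256 -> 128 -> h
    dec = mlp_params([h, 128, 256, 784])   # decoder: h -> 128 -> 256 -> 784
    print(h, enc, dec, enc + dec)
# 10 235146 235920 471066
# 20 236436 237200 473636
```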

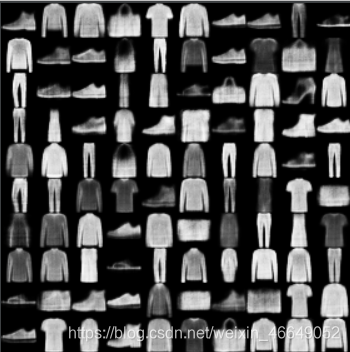

Images shown every 5 epochs

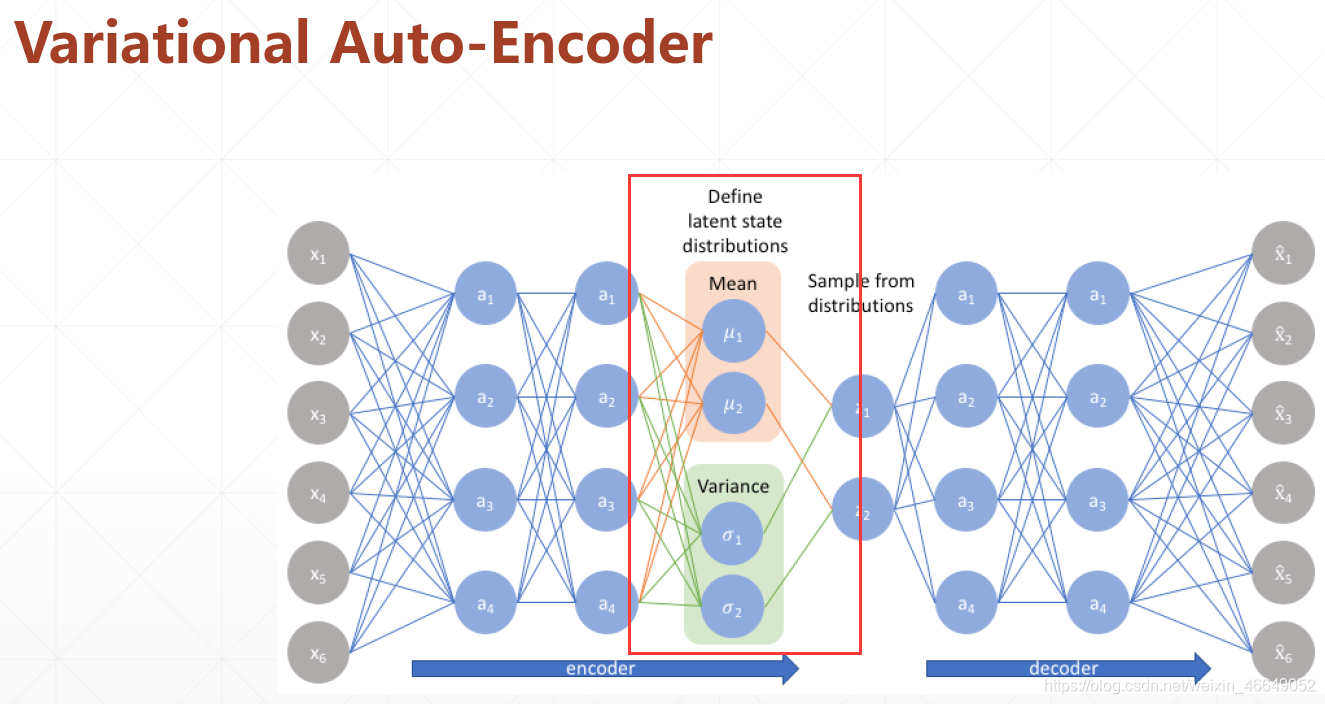

2. Variational Auto-Encoder (VAE) in Practice

Importing modules

# Image reconstruction
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

# Use the GPU and let memory allocation grow on demand
from tensorflow.compat.v1 import ConfigProto
from tensorflow.compat.v1 import InteractiveSession
config = ConfigProto()
config.gpu_options.allow_growth = True
session = InteractiveSession(config=config)

import numpy as np
import random
import tensorflow as tf
import matplotlib.pyplot as plt
from PIL import Image
from tensorflow import keras
from tensorflow.keras import Sequential, layers

# Set the random seeds
def seed_everything(SEED):
    os.environ['TF_DETERMINISTIC_OPS'] = '1'
    os.environ['PYTHONHASHSEED'] = str(SEED)
    random.seed(SEED)
    np.random.seed(SEED)
    tf.random.set_seed(SEED)

seed_everything(42)
assert tf.__version__.startswith('2.')

Saving images

# Image-saving helper: tiles many images into a single image
def save_images(imgs, name):
    new_im = Image.new('L', (280, 280))
    index = 0
    # Paste 100 images of 28x28 pixels into a 10 x 10 grid
    for i in range(0, 280, 28):
        for j in range(0, 280, 28):
            im = imgs[index]
            im = Image.fromarray(im, mode='L')
            new_im.paste(im, (i, j))
            index += 1
    new_im.save(name)

Loading and preprocessing the data

z_dim = 10
batchsz = 256
lr = 1e-3

# Load the dataset and scale pixel values to [0, 1]
(x_train, y_train), (x_test, y_test) = keras.datasets.fashion_mnist.load_data()
x_train, x_test = x_train.astype(np.float32) / 255., x_test.astype(np.float32) / 255.

# This is unsupervised learning, so the labels y_train and y_test are not used
train_db = tf.data.Dataset.from_tensor_slices(x_train)
train_db = train_db.shuffle(batchsz * 5).batch(batchsz)
test_db = tf.data.Dataset.from_tensor_slices(x_test)
test_db = test_db.batch(batchsz)

print(x_train.shape, y_train.shape)
print(x_test.shape, y_test.shape)

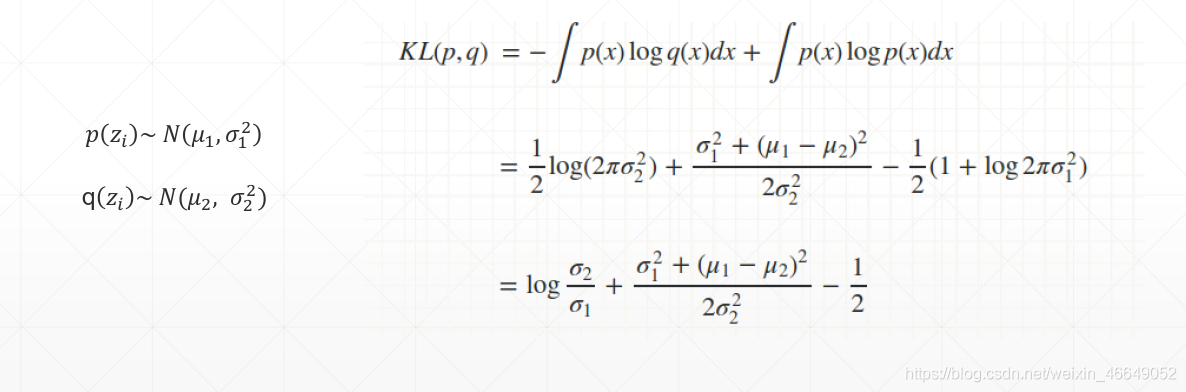

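Defining the loss function

# Reconstruction loss: sigmoid cross-entropy summed over pixels, averaged over the batch
rec_loss = tf.nn.sigmoid_cross_entropy_with_logits(labels=x, logits=x_hat)
rec_loss = tf.reduce_sum(rec_loss) / x.shape[0]

# KL divergence between p ~ N(mu, var) and q ~ N(0, 1), i.e. mu_2 = 0 and sigma_2 = 1.
# log_var is the log of the variance, so it enters the formula without a factor of 2.
kl_div = -0.5 * (log_var + 1 - mu ** 2 - tf.exp(log_var))
# Sum over latent dimensions and average over the batch, matching rec_loss
kl_div = tf.reduce_sum(kl_div) / x.shape[0]

loss = rec_loss + 1. * kl_div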
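For reference, the KL divergence of a diagonal Gaussian N(μ, σ²) from the standard normal has a closed form: per latent dimension it is 0.5 * (μ² + σ² - log σ² - 1), which is algebraically the same as -0.5 * (1 + log σ² - μ² - σ²). The NumPy sketch below evaluates it. `kl_to_standard_normal` is a hypothetical helper, and `log_var` is assumed to hold log σ², as in the model's reparameterization step.

```python
import numpy as np

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, exp(log_var)) || N(0, 1)), summed over latent dims, averaged over the batch."""
    mu = np.asarray(mu, dtype=np.float64)
    log_var = np.asarray(log_var, dtype=np.float64)
    per_dim = 0.5 * (mu ** 2 + np.exp(log_var) - log_var - 1.0)
    return per_dim.sum(axis=-1).mean()

zeros = np.zeros((4, 10))
ones = np.ones((4, 10))
print(kl_to_standard_normal(zeros, zeros))  # 0.0: the posterior already equals the prior
print(kl_to_standard_normal(ones, zeros))   # 5.0: 0.5 per dimension times 10 dimensions
```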

Building the network

class VAE(keras.Model):
    def __init__(self):
        super(VAE, self).__init__()
        # Encoder
        self.fc1 = layers.Dense(128)
        self.fc2 = layers.Dense(z_dim)  # predicts the mean
        self.fc3 = layers.Dense(z_dim)  # predicts the log-variance
        # Decoder
        self.fc4 = layers.Dense(128)
        self.fc5 = layers.Dense(784)

    # Encoding function
    def encoder(self, x):
        h = tf.nn.relu(self.fc1(x))
        # mean
        mu = self.fc2(h)
        # log-variance
        log_var = self.fc3(h)
        return mu, log_var

    # Decoding function
    def decoder(self, z):
        out = tf.nn.relu(self.fc4(z))
        out = self.fc5(out)
        return out

    # Sample z with the reparameterization trick
    def reparameterize(self, mu, log_var):
        eps = tf.random.normal(log_var.shape)
        # standard deviation
        std = tf.exp(log_var) ** 0.5
        # std = tf.exp(log_var * 0.5)
        z = mu + std * eps
        return z

    # Forward pass
    def call(self, inputs, training=None):
        # [b,784] => [b,z_dim],[b,z_dim]
        mu, log_var = self.encoder(inputs)
        # reparameterization trick
        z = self.reparameterize(mu, log_var)
        x_hat = self.decoder(z)
        # Besides the reconstruction, the loss adds a constraint that pulls
        # N(mu, var) towards the standard normal, so mu and log_var are returned too
        return x_hat, mu, log_var
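The reparameterization trick can be checked numerically. The NumPy sketch below mirrors `reparameterize` under the assumption that `log_var` is the log-variance, and confirms that z = mu + std * eps has approximately the requested mean and standard deviation. The generator seed, sample count and target values are arbitrary choices for the illustration.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Draw z ~ N(mu, exp(log_var)) as a deterministic function of external noise eps."""
    eps = rng.standard_normal(np.shape(log_var))
    std = np.exp(0.5 * np.asarray(log_var))
    return mu + std * eps

rng = np.random.default_rng(0)
mu = np.full((200000, 2), 1.5)
log_var = np.full((200000, 2), np.log(0.25))  # variance 0.25, so std 0.5
z = reparameterize(mu, log_var, rng)
print(z.mean(axis=0), z.std(axis=0))          # close to [1.5 1.5] and [0.5 0.5]
```

Because the randomness lives entirely in `eps`, `z` is a differentiable function of `mu` and `log_var`, which is what lets gradients flow back into the encoder.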

Full code

# Image reconstruction
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

# Use the GPU and let memory allocation grow on demand
from tensorflow.compat.v1 import ConfigProto
from tensorflow.compat.v1 import InteractiveSession
config = ConfigProto()
config.gpu_options.allow_growth = True
session = InteractiveSession(config=config)

import numpy as np
import random
import tensorflow as tf
import matplotlib.pyplot as plt
from PIL import Image
from tensorflow import keras
from tensorflow.keras import Sequential, layers

# Set the random seeds
def seed_everything(SEED):
    os.environ['TF_DETERMINISTIC_OPS'] = '1'
    os.environ['PYTHONHASHSEED'] = str(SEED)
    random.seed(SEED)
    np.random.seed(SEED)
    tf.random.set_seed(SEED)

seed_everything(42)
assert tf.__version__.startswith('2.')

def save_images(imgs, name):
    new_im = Image.new('L', (280, 280))
    index = 0
    for i in range(0, 280, 28):
        for j in range(0, 280, 28):
            im = imgs[index]
            im = Image.fromarray(im, mode='L')
            new_im.paste(im, (i, j))
            index += 1
    new_im.save(name)

z_dim = 10
batchsz = 256
lr = 1e-3

# Load the dataset and scale pixel values to [0, 1]
(x_train, y_train), (x_test, y_test) = keras.datasets.fashion_mnist.load_data()
x_train, x_test = x_train.astype(np.float32) / 255., x_test.astype(np.float32) / 255.

# This is unsupervised learning, so the labels y_train and y_test are not used
train_db = tf.data.Dataset.from_tensor_slices(x_train)
train_db = train_db.shuffle(batchsz * 5).batch(batchsz)
test_db = tf.data.Dataset.from_tensor_slices(x_test)
test_db = test_db.batch(batchsz)

print(x_train.shape, y_train.shape)
print(x_test.shape, y_test.shape)

class VAE(keras.Model):
    def __init__(self):
        super(VAE, self).__init__()
        # Encoder
        self.fc1 = layers.Dense(128)
        self.fc2 = layers.Dense(z_dim)  # predicts the mean
        self.fc3 = layers.Dense(z_dim)  # predicts the log-variance
        # Decoder
        self.fc4 = layers.Dense(128)
        self.fc5 = layers.Dense(784)

    # Encoding function
    def encoder(self, x):
        h = tf.nn.relu(self.fc1(x))
        # mean
        mu = self.fc2(h)
        # log-variance
        log_var = self.fc3(h)
        return mu, log_var

    # Decoding function
    def decoder(self, z):
        out = tf.nn.relu(self.fc4(z))
        out = self.fc5(out)
        return out

    # Sample z with the reparameterization trick
    def reparameterize(self, mu, log_var):
        eps = tf.random.normal(log_var.shape)
        # standard deviation
        std = tf.exp(log_var) ** 0.5
        # std = tf.exp(log_var * 0.5)
        z = mu + std * eps
        return z

    # Forward pass
    def call(self, inputs, training=None):
        # [b,784] => [b,z_dim],[b,z_dim]
        mu, log_var = self.encoder(inputs)
        # reparameterization trick
        z = self.reparameterize(mu, log_var)
        x_hat = self.decoder(z)
        # Besides the reconstruction, the loss adds a constraint that pulls
        # N(mu, var) towards the standard normal, so mu and log_var are returned too
        return x_hat, mu, log_var

model = VAE()
model.build(input_shape=(4, 784))  # unlike the usual (None, 784), a concrete batch size is passed here
optimizer = tf.optimizers.Adam(learning_rate=lr)

for epoch in range(50):
    for step, x in enumerate(train_db):
        # [b,28,28] => [b,784]
        x = tf.reshape(x, [-1, 784])
        with tf.GradientTape() as tape:
            x_hat, mu, log_var = model(x)
            rec_loss = tf.nn.sigmoid_cross_entropy_with_logits(labels=x, logits=x_hat)
            rec_loss = tf.reduce_sum(rec_loss) / x.shape[0]
            # KL divergence between p ~ N(mu, var) and q ~ N(0, 1), i.e. mu_2 = 0 and sigma_2 = 1.
            # log_var is the log of the variance, so it enters the formula without a factor of 2.
            kl_div = -0.5 * (log_var + 1 - mu ** 2 - tf.exp(log_var))
            # Sum over latent dimensions and average over the batch, matching rec_loss
            kl_div = tf.reduce_sum(kl_div) / x.shape[0]
            loss = rec_loss + 1. * kl_div
        grads = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(grads, model.trainable_variables))
        if step % 100 == 0:
            print(epoch, step, 'kl div:', float(kl_div), 'rec loss:', float(rec_loss))

    # evaluation: check generation quality by decoding samples from the prior
    z = tf.random.normal((batchsz, z_dim))
    logits = model.decoder(z)
    x_hat = tf.sigmoid(logits)
    x_hat = tf.reshape(x_hat, [-1, 28, 28]).numpy() * 255.
    x_hat = x_hat.astype(np.uint8)
    save_images(x_hat, 'vae_image/sampled_epoch%d.png' % epoch)

    # Reconstruct test images
    x = next(iter(test_db))
    x = tf.reshape(x, [-1, 784])
    x_hat_logits, _, _ = model(x)
    x_hat = tf.sigmoid(x_hat_logits)
    x_hat = tf.reshape(x_hat, [-1, 28, 28]).numpy() * 255.
    x_hat = x_hat.astype(np.uint8)
    save_images(x_hat, 'vae_image_1/sampled_epoch%d.png' % epoch)
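`save_images` writes into `vae_image/` and `vae_image_1/`, and `Image.save` does not create missing directories, so both need to exist before the loop starts. A minimal sketch using only the standard library follows. `ensure_dirs` is a hypothetical helper, and the temporary base directory is used only to keep the example self-contained; in the script above the base would be the working directory.

```python
import os
import tempfile

def ensure_dirs(base, names):
    """Create each output directory under base if it does not exist yet."""
    paths = [os.path.join(base, n) for n in names]
    for p in paths:
        os.makedirs(p, exist_ok=True)  # no error if the directory is already there
    return paths

base = tempfile.mkdtemp()
out_dirs = ensure_dirs(base, ['vae_image', 'vae_image_1'])
print(all(os.path.isdir(p) for p in out_dirs))  # True
```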

(60000, 28, 28) (60000,)

(10000, 28, 28) (10000,)

0 0 kl div: 0.001522199483588338 rec loss: 545.1880493164062

0 100 kl div: 0.12963518500328064 rec loss: 276.12176513671875

0 200 kl div: 0.15164682269096375 rec loss: 247.87600708007812

1 0 kl div: 0.1401350051164627 rec loss: 252.12911987304688

1 100 kl div: 0.14920486509799957 rec loss: 241.8990478515625

1 200 kl div: 0.16304132342338562 rec loss: 233.77679443359375

2 0 kl div: 0.16015133261680603 rec loss: 238.06817626953125

2 100 kl div: 0.15632715821266174 rec loss: 240.16061401367188

2 200 kl div: 0.157673180103302 rec loss: 233.2672882080078

3 0 kl div: 0.1759733110666275 rec loss: 230.8567352294922

3 100 kl div: 0.16381677985191345 rec loss: 230.72335815429688

3 200 kl div: 0.15521030128002167 rec loss: 233.7858123779297

4 0 kl div: 0.16824732720851898 rec loss: 227.0357208251953

4 100 kl div: 0.16316646337509155 rec loss: 235.9604949951172

4 200 kl div: 0.1655232012271881 rec loss: 229.02232360839844

...

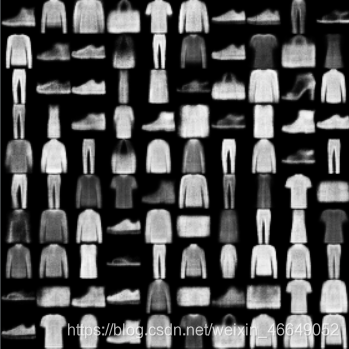

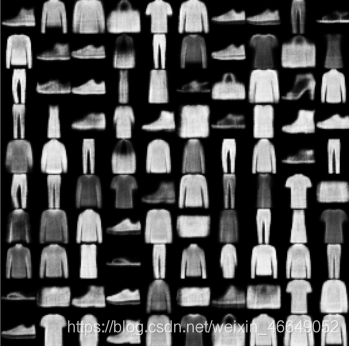

Printed once every 5 epochs: