1.2 Learning Settings

The goal of zero-shot learning is to learn a classifier that labels instances of unseen classes.

During model learning, if information about the testing instances is involved, the learned model is transductive for these specific testing instances.

In zero-shot learning, this transduction can be embodied in two progressive degrees:

- Transductive for specific unseen classes

- Transductive for specific testing instances

This differs from the well-known transductive setting in semi-supervised learning, which is transductive only for the testing instances.

In the setting that is transductive for specific testing instances, the degree of transduction goes further: the testing instances are also involved in model learning, and the model is optimized for these specific testing instances.

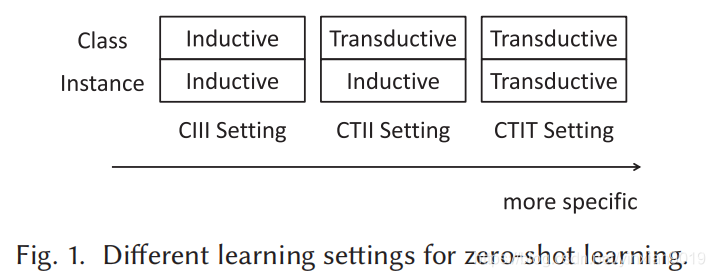

Based on the degree of transduction, we categorize zero-shot learning into three learning settings:

- Class-Inductive Instance-Inductive Setting (CIII)

Only labeled training instances and seen class prototypes are used in model learning.

- Class-Transductive Instance-Inductive Setting (CTII)

Labeled training instances, seen class prototypes, and unseen class prototypes are used in model learning.

- Class-Transductive Instance-Transductive Setting (CTIT)

Labeled training instances, seen class prototypes, unlabeled testing instances, and unseen class prototypes are used in model learning.
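The three settings differ only in which sources of information are allowed during model learning, so they can be written down as a small lookup. The sketch below is one possible encoding, not something taken from the source: the names `TrainingInputs`, `Setting`, and `setting_for` are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


@dataclass(frozen=True)
class TrainingInputs:
    """Which sources of information model learning may use (illustrative)."""
    labeled_training_instances: bool
    seen_class_prototypes: bool
    unseen_class_prototypes: bool
    unlabeled_testing_instances: bool


class Setting(Enum):
    # Each member records the inputs listed for that setting in the text.
    CIII = TrainingInputs(True, True, False, False)
    CTII = TrainingInputs(True, True, True, False)
    CTIT = TrainingInputs(True, True, True, True)


def setting_for(uses_unseen_prototypes: bool, uses_testing_instances: bool) -> Setting:
    """Map the two degrees of transduction to one of the three settings."""
    if uses_testing_instances and not uses_unseen_prototypes:
        # The text describes the degrees as progressive, so this
        # combination is not one of the three settings it defines.
        raise ValueError("instance-transductive without class-transductive is not defined here")
    if uses_testing_instances:
        return Setting.CTIT
    if uses_unseen_prototypes:
        return Setting.CTII
    return Setting.CIII
```

For example, `setting_for(True, False)` returns `Setting.CTII`, and `Setting.CTII.value.unlabeled_testing_instances` is `False`.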

As Fig. 1 shows, from CIII to CTIT, the classifier is learned with increasingly specific information about the testing instances.

In machine learning methods, when the distributions of the training and the testing instances differ, the performance of a model learned with the training instances decreases when it is applied to the testing instances.

This phenomenon is more severe in zero-shot learning, because the classes covered by the training and the testing instances are disjoint. It is usually referred to as domain shift.

Under the CIII setting, no information about the testing instances is involved in model learning, so the problem of domain shift is severe in some methods under this setting.

However, because the models under this setting are not optimized for specific unseen classes and testing instances, they usually generalize better to new unseen classes or testing instances than models learned under the CTII or CTIT settings.
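To make the CIII setting concrete, here is a minimal sketch of a model that is learned only from labeled training instances and seen class prototypes. It is not a method from the source. It assumes a linear projection from feature space to prototype space fitted by ridge regression, followed by nearest-prototype prediction. The toy data, the `mixing` matrix, and all function names are hypothetical.

```python
import numpy as np


def fit_projection(features, targets, lam=1e-2):
    """Ridge regression from feature space to prototype space (illustrative choice)."""
    dim = features.shape[1]
    gram = features.T @ features + lam * np.eye(dim)
    return np.linalg.solve(gram, features.T @ targets)


def predict(features, projection, prototypes):
    """Return, for each instance, the index of the nearest prototype."""
    projected = features @ projection
    dists = np.linalg.norm(projected[:, None, :] - prototypes[None, :, :], axis=2)
    return dists.argmin(axis=1)


rng = np.random.default_rng(0)

# Hypothetical toy data: features are a noisy linear image of the class prototype.
mixing = np.array([[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]])
seen_prototypes = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
unseen_prototypes = np.array([[-1.0, 1.0], [1.0, -1.0]])


def sample(prototypes, labels):
    """Draw one noisy feature vector per label."""
    noise = 0.01 * rng.normal(size=(len(labels), mixing.shape[1]))
    return prototypes[labels] @ mixing + noise


train_labels = np.repeat(np.arange(3), 20)
train_features = sample(seen_prototypes, train_labels)
test_labels = np.repeat(np.arange(2), 10)
test_features = sample(unseen_prototypes, test_labels)

# Model learning: only labeled training instances and seen class prototypes.
projection = fit_projection(train_features, seen_prototypes[train_labels])

# Unseen class prototypes enter only at prediction time.
predictions = predict(test_features, projection, unseen_prototypes)
accuracy = float((predictions == test_labels).mean())

# A new set of unseen classes can be handled without retraining the projection.
new_prototypes = np.array([[2.0, 0.0], [0.0, -2.0]])
new_labels = np.repeat(np.arange(2), 10)
new_features = sample(new_prototypes, new_labels)
new_predictions = predict(new_features, projection, new_prototypes)
new_accuracy = float((new_predictions == new_labels).mean())
```

Because `fit_projection` never sees the unseen prototypes or the testing instances, the same `projection` can be reused for any new set of unseen classes, which is the generalization property described above. The toy data is linear by construction, so it does not exhibit domain shift. On real data, a projection fitted only on seen classes may fit unseen classes noticeably worse.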

Under the CTII setting, because the unseen class prototypes are involved in model learning, the problem of domain shift is less severe.

However, the ability of CTII methods to generalize to new unseen classes is limited.

Under the CTIT setting, because the models are optimized for specific unseen classes and testing instances, the problem of domain shift is the least severe among these three learning settings.

However, the ability to generalize to new unseen classes and testing instances is also the most limited.

We will introduce methods under these three learning settings separately in Section 3.