机器学习(周志华) 西瓜书 第七章课后习题7.3—— Python实现

-

实验题目

试编程实现拉普拉斯修正的朴素贝叶斯分类器,并以西瓜数据集3.0为训练集,对P.151“测1”样本进行判别。

-

实验原理

拉普拉斯修正避免了因训练集样本不充分而导致概率估值为0的问题

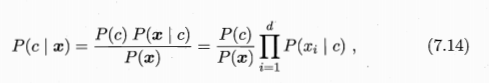

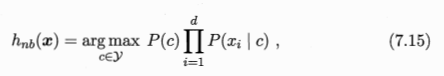

朴素贝叶斯分类器:

朴素贝叶斯分类器表达式

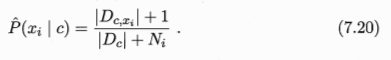

拉普拉斯修正后的类先验概率计算式

拉普拉斯修正后的离散属性条件概率计算式

连续属性条件概率计算式

-

实验过程

数据集获取

获取书中的西瓜数据集3.0,并存为data_3.txt

编号,色泽,根蒂,敲声,纹理,脐部,触感,密度,含糖率,好瓜

1,青绿,蜷缩,浊响,清晰,凹陷,硬滑,0.697,0.46,是

2,乌黑,蜷缩,沉闷,清晰,凹陷,硬滑,0.774,0.376,是

3,乌黑,蜷缩,浊响,清晰,凹陷,硬滑,0.634,0.264,是

4,青绿,蜷缩,沉闷,清晰,凹陷,硬滑,0.608,0.318,是

5,浅白,蜷缩,浊响,清晰,凹陷,硬滑,0.556,0.215,是

6,青绿,稍蜷,浊响,清晰,稍凹,软粘,0.403,0.237,是

7,乌黑,稍蜷,浊响,稍糊,稍凹,软粘,0.481,0.149,是

8,乌黑,稍蜷,浊响,清晰,稍凹,硬滑,0.437,0.211,是

9,乌黑,稍蜷,沉闷,稍糊,稍凹,硬滑,0.666,0.091,否

10,青绿,硬挺,清脆,清晰,平坦,软粘,0.243,0.267,否

11,浅白,硬挺,清脆,模糊,平坦,硬滑,0.245,0.057,否

12,浅白,蜷缩,浊响,模糊,平坦,软粘,0.343,0.099,否

13,青绿,稍蜷,浊响,稍糊,凹陷,硬滑,0.639,0.161,否

14,浅白,稍蜷,沉闷,稍糊,凹陷,硬滑,0.657,0.198,否

15,乌黑,稍蜷,浊响,清晰,稍凹,软粘,0.36,0.37,否

16,浅白,蜷缩,浊响,模糊,平坦,硬滑,0.593,0.042,否

17,青绿,蜷缩,沉闷,稍糊,稍凹,硬滑,0.719,0.103,否算法实现

数据定义,定义属性及其取值种类、类标签种类

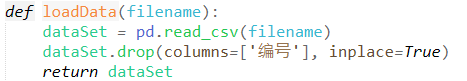

读取数据函数

生成预测数据

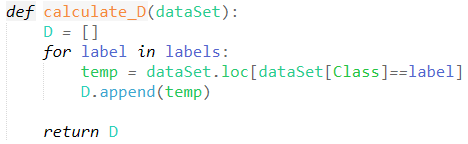

按标签类别生成不同标签类样本组成的集合

计算类先验概率

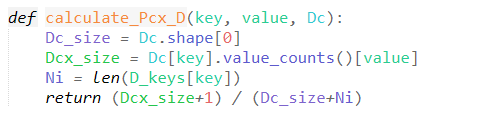

计算离散属性条件概率

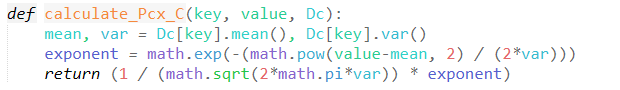

计算连续属性条件概率

计算将测试样本判定为label的概率可信度

预测测试样本的标签

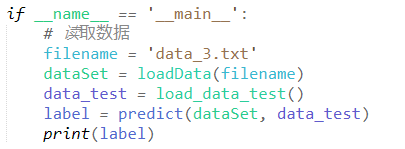

main函数,调用上述功能函数

-

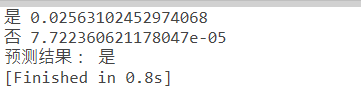

实验结果

-

程序清单:

import math

import numpy as np

import pandas as pd

D_keys = {

'色泽': ['青绿', '乌黑', '浅白'],

'根蒂': ['蜷缩', '硬挺', '稍蜷'],

'敲声': ['清脆', '沉闷', '浊响'],

'纹理': ['稍糊', '模糊', '清晰'],

'脐部': ['凹陷', '稍凹', '平坦'],

'触感': ['软粘', '硬滑'],

}

Class, labels = '好瓜', ['是', '否']

# 读取数据

def loadData(filename):

dataSet = pd.read_csv(filename)

dataSet.drop(columns=['编号'], inplace=True)

return dataSet

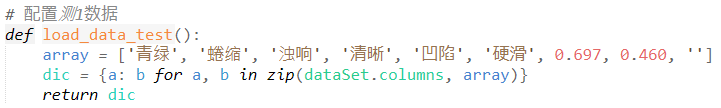

# 配置测1数据

def load_data_test():

array = ['青绿', '蜷缩', '浊响', '清晰', '凹陷', '硬滑', 0.697, 0.460, '']

dic = {a: b for a, b in zip(dataSet.columns, array)}

return dic

def calculate_D(dataSet):

D = []

for label in labels:

temp = dataSet.loc[dataSet[Class]==label]

D.append(temp)

return D

def calculate_Pc(Dc, D):

D_size = D.shape[0]

Dc_size = Dc.shape[0]

N = len(labels)

return (Dc_size+1) / (D_size+N)

def calculate_Pcx_D(key, value, Dc):

Dc_size = Dc.shape[0]

Dcx_size = Dc[key].value_counts()[value]

Ni = len(D_keys[key])

return (Dcx_size+1) / (Dc_size+Ni)

def calculate_Pcx_C(key, value, Dc):

mean, var = Dc[key].mean(), Dc[key].var()

exponent = math.exp(-(math.pow(value-mean, 2) / (2*var)))

return (1 / (math.sqrt(2*math.pi*var)) * exponent)

def calculate_probability(label, Dc, dataSet, data_test):

prob = calculate_Pc(Dc, dataSet)

for key in Dc.columns[:-1]:

value = data_test[key]

if key in D_keys:

prob *= calculate_Pcx_D(key, value, Dc)

else:

prob *= calculate_Pcx_C(key, value, Dc)

return prob

def predict(dataSet, data_test):

# mu, sigma = dataSet.mean(), dataSet.var()

Dcs = calculate_D(dataSet)

max_prob = -1

for label, Dc in zip(labels, Dcs):

prob = calculate_probability(label, Dc, dataSet, data_test)

if prob > max_prob:

best_label = label

max_prob = prob

print(label, prob)

return best_label

if __name__ == '__main__':

# 读取数据

filename = 'data_3.txt'

dataSet = loadData(filename)

data_test = load_data_test()

label = predict(dataSet, data_test)

print('预测结果:', label)