Machine Learning (Zhou Zhihua, the "Watermelon Book"), Chapter 3, Exercise 3.3: Python implementation

Original work by Zetrue_Li. Do not repost.

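Copy the data below, paste it into a text editor such as Notepad, and save it as data.txt. Save the file as UTF-8, which is what pd.read_csv expects by default, and keep the Chinese column names and labels unchanged, because the code refers to them: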

编号,色泽,根蒂,敲声,纹理,脐部,触感,密度,含糖率,好瓜

1,青绿,蜷缩,浊响,清晰,凹陷,硬滑,0.697,0.46,是

2,乌黑,蜷缩,沉闷,清晰,凹陷,硬滑,0.774,0.376,是

3,乌黑,蜷缩,浊响,清晰,凹陷,硬滑,0.634,0.264,是

4,青绿,蜷缩,沉闷,清晰,凹陷,硬滑,0.608,0.318,是

5,浅白,蜷缩,浊响,清晰,凹陷,硬滑,0.556,0.215,是

6,青绿,稍蜷,浊响,清晰,稍凹,软粘,0.403,0.237,是

7,乌黑,稍蜷,浊响,稍糊,稍凹,软粘,0.481,0.149,是

8,乌黑,稍蜷,浊响,清晰,稍凹,硬滑,0.437,0.211,是

9,乌黑,稍蜷,沉闷,稍糊,稍凹,硬滑,0.666,0.091,否

10,青绿,硬挺,清脆,清晰,平坦,软粘,0.243,0.267,否

11,浅白,硬挺,清脆,模糊,平坦,硬滑,0.245,0.057,否

12,浅白,蜷缩,浊响,模糊,平坦,软粘,0.343,0.099,否

13,青绿,稍蜷,浊响,稍糊,凹陷,硬滑,0.639,0.161,否

14,浅白,稍蜷,沉闷,稍糊,凹陷,硬滑,0.657,0.198,否

15,乌黑,稍蜷,浊响,清晰,稍凹,软粘,0.36,0.37,否

16,浅白,蜷缩,浊响,模糊,平坦,硬滑,0.593,0.042,否

17,青绿,蜷缩,沉闷,稍糊,稍凹,硬滑,0.719,0.103,否

Python code:

# -*- coding: utf-8 -*-

# Logistic regression on watermelon dataset 3.0α

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

def loadData(filename):
    # Read the comma-separated file; the header row supplies the column names
    dataSet = pd.read_csv(filename)
    return dataSet

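def processData(dataSet):
    # Append a constant column so that the bias term is folded into beta
    dataSet['b'] = 1
    x = np.array(dataSet[['密度', '含糖率', 'b']])
    # Encode the label column as a numeric column vector: 是 -> 1, 否 -> 0
    y = dataSet['好瓜'].map({'是': 1, '否': 0}).to_numpy().reshape(-1, 1)
    return x, y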

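def p0_function(xi, beta):
    # P(y = 0 | x): probability of the negative class
    return 1 - p1_function(xi, beta)

def p1_function(xi, beta):
    # P(y = 1 | x) = exp(beta^T x) / (1 + exp(beta^T x))
    beta_T_x = np.dot(beta.T, xi)
    exp_beta_T_x = np.exp(beta_T_x)
    return exp_beta_T_x / (1 + exp_beta_T_x)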

def l_function(beta, x, y):
    # Evaluate l(beta) from Eq. (3.27) at the current beta
    result = 0
    for xi, yi in zip(x, y):
        xi = xi.reshape(xi.shape[0], 1)
        # beta_T_x is beta transposed times x
        beta_T_x = np.dot(beta.T, xi)
        exp_beta_T_x = np.exp(beta_T_x)
        result += -yi*beta_T_x + np.log(1+exp_beta_T_x)
    return result

def run(x, y, iterate=100):
    # Initial parameters: beta is a column vector, all zeros except a bias of 1
    beta = np.zeros((x.shape[1], 1))
    beta[-1] = 1
    old_l = 0  # value of l from Eq. (3.27) at the previous iteration
    cur_iter = 0
    while cur_iter < iterate:
        cur_iter += 1
        cur_l = l_function(beta, x, y)
        # Stopping criterion: a change in l of at most 1e-4 (0.0001) counts as converged
        if np.abs(cur_l - old_l) <= 1e-4:
            break
        # print(cur_l)
        old_l = cur_l
        d1_beta, d2_beta = 0, 0
        # Update beta with Newton's method
        for xi, yi in zip(x, y):
            xi = xi.reshape(xi.shape[0], 1)
            p1 = p1_function(xi, beta)
            # First derivative of l with respect to beta
            d1_beta -= np.dot(xi, yi-p1)
            # Second derivative of l with respect to beta
            d2_beta += np.dot(xi, xi.T) * p1 * (1-p1)
        # print(beta)
        try:
            beta = beta - np.dot(np.linalg.inv(d2_beta), d1_beta)
        except np.linalg.LinAlgError:
            # The second-derivative matrix is singular, so stop with the current beta
            break
    return beta

def test(beta, x, y):
    # Print each label next to the fitted odds exp(beta^T x); odds above 1 mean p1 > 0.5
    for xi, yi in zip(x, y):
        xi = xi.reshape(xi.shape[0], 1)
        beta_T_x = np.dot(beta.T, xi)
        y_test = np.exp(beta_T_x)
        print(yi, y_test)

if __name__=="__main__":

# 读取数据

filename = 'data.txt'

dataSet = loadData(filename)

# 预处理数据

x, y = processData(dataSet)

beta = run(x, y)

accuracy = 0

for xi, yi in zip(x, y):

p1 = p1_function(xi, beta)

judge = 0 if p1 < 0.5 else 1

# print(yi[0], judge)

accuracy += (judge == yi[0])

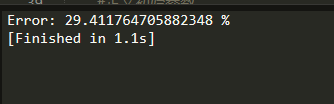

error = 1 - accuracy/dataSet.shape[0]

print('Error:', error*100, '%')实现结果:

错误率:
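As a cross-check on the per-sample loops in the code above, here is a minimal vectorized sketch of the same Newton iteration. It is not the code from this post. The names neg_log_likelihood and newton_logistic, the step-size stopping rule, and the use of np.linalg.solve instead of an explicit inverse are my own assumptions. The density and sugar values are copied from data.txt, so the script runs without the file. The gradient is -X^T (y - p1) and the second-derivative matrix is X^T diag(p1 (1 - p1)) X, matching d1_beta and d2_beta above.

```python
import numpy as np

# Density and sugar content of the 17 melons in data.txt, in row order
density = np.array([0.697, 0.774, 0.634, 0.608, 0.556, 0.403, 0.481, 0.437,
                    0.666, 0.243, 0.245, 0.343, 0.639, 0.657, 0.360, 0.593, 0.719])
sugar = np.array([0.460, 0.376, 0.264, 0.318, 0.215, 0.237, 0.149, 0.211,
                  0.091, 0.267, 0.057, 0.099, 0.161, 0.198, 0.370, 0.042, 0.103])
# Rows 1-8 are labelled 是 (1), rows 9-17 are labelled 否 (0)
labels = np.array([1] * 8 + [0] * 9, dtype=float)


def neg_log_likelihood(beta, X, y):
    # l(beta) from Eq. (3.27); logaddexp(0, z) computes log(1 + exp(z)) stably
    z = X @ beta
    return np.sum(-y * z + np.logaddexp(0.0, z))


def newton_logistic(X, y, max_iter=100, tol=1e-10):
    # Hypothetical helper: same starting point as run(), zeros with a bias of 1
    beta = np.zeros(X.shape[1])
    beta[-1] = 1.0
    for _ in range(max_iter):
        p1 = 1.0 / (1.0 + np.exp(-(X @ beta)))       # P(y = 1 | x) for every row
        grad = -X.T @ (y - p1)                       # first derivative of l
        hess = (X * (p1 * (1 - p1))[:, None]).T @ X  # second derivative of l
        step = np.linalg.solve(hess, grad)           # solves hess @ step = grad
        beta = beta - step
        # Assumed stopping rule: stop once the Newton step is negligible
        if np.linalg.norm(step) < tol:
            break
    return beta


# Design matrix with a trailing column of ones for the bias term
X = np.column_stack([density, sugar, np.ones_like(density)])
beta_hat = newton_logistic(X, labels)
# p1 >= 0.5 is equivalent to beta^T x >= 0
predictions = (X @ beta_hat >= 0).astype(float)
error_rate = np.mean(predictions != labels)
print('beta:', beta_hat)
print('Error:', error_rate * 100, '%')
```

If both versions converge, they should agree on beta up to the stopping tolerance, since they minimize the same l(beta) from the same starting point.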