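An autoencoder is a neural network architecture that is fairly easy to understand. It learns features without supervision: the network is trained to reproduce its own input (mapping the input to itself). The following example illustrates how it works.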
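Concretely, "mapping the input to itself" means the training target is the input. Write $f$ for the encoder and $g$ for the decoder. The reconstruction loss minimised in the code below (`us_cost_function`, a `reduce_mean` of squared differences) is then the mean squared error over $N$ samples and $d$ features ($d = 520$ in this example). No labels appear in it, which is why this stage is unsupervised:

$$
\hat{x}_i = g\big(f(x_i)\big), \qquad
\mathcal{L}_{\text{rec}} = \frac{1}{N d} \sum_{i=1}^{N} \sum_{j=1}^{d} \left( x_{ij} - \hat{x}_{ij} \right)^2
$$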

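Dataset: the [UJIIndoorLoc dataset](https://archive.ics.uci.edu/ml/machine-learning-databases/00310/) from the UCI repository. Download it and unzip it so that the `UJIndoorLoc/` folder read by the code sits in the working directory.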

Here is the complete code first:

```python
# coding=utf-8
# Written for the TensorFlow 1.x graph API. The compat.v1 import also lets it
# run on TensorFlow 2.x (assumption: TensorFlow >= 1.14 is installed).
import numpy as np
import pandas as pd
import tensorflow.compat.v1 as tf
from sklearn.preprocessing import scale  # preprocess the dataset with sklearn

tf.disable_v2_behavior()

# Columns 0-519 hold the 520 WAP signal-strength features
training_data = pd.read_csv("UJIndoorLoc/trainingData.csv", header=0)
training_x = scale(np.asarray(training_data.iloc[:, 0:520], dtype=np.float64))
# Label = building ID and floor joined as a string, then one-hot encoded
training_y = np.asarray(training_data["BUILDINGID"].map(str) + training_data["FLOOR"].map(str))
training_y = np.asarray(pd.get_dummies(training_y), dtype=np.float32)

test_dataset = pd.read_csv("UJIndoorLoc/validationData.csv", header=0)
test_x = scale(np.asarray(test_dataset.iloc[:, 0:520], dtype=np.float64))
test_y = np.asarray(test_dataset["BUILDINGID"].map(str) + test_dataset["FLOOR"].map(str))
test_y = np.asarray(pd.get_dummies(test_y), dtype=np.float32)

output = training_y.shape[1]  # number of building-floor classes

X = tf.placeholder(tf.float32, shape=[None, 520])     # network input
Y = tf.placeholder(tf.float32, shape=[None, output])  # one-hot labels


# Define the neural network
def neural_network():
    # Encoder: 520 -> 256 -> 128 -> 64
    e_w_1 = tf.Variable(tf.truncated_normal([520, 256], stddev=0.1))
    e_b_1 = tf.Variable(tf.constant(0.0, shape=[256]))
    e_w_2 = tf.Variable(tf.truncated_normal([256, 128], stddev=0.1))
    e_b_2 = tf.Variable(tf.constant(0.0, shape=[128]))
    e_w_3 = tf.Variable(tf.truncated_normal([128, 64], stddev=0.1))
    e_b_3 = tf.Variable(tf.constant(0.0, shape=[64]))

    # Decoder: 64 -> 128 -> 256 -> 520
    d_w_1 = tf.Variable(tf.truncated_normal([64, 128], stddev=0.1))
    d_b_1 = tf.Variable(tf.constant(0.0, shape=[128]))
    d_w_2 = tf.Variable(tf.truncated_normal([128, 256], stddev=0.1))
    d_b_2 = tf.Variable(tf.constant(0.0, shape=[256]))
    d_w_3 = tf.Variable(tf.truncated_normal([256, 520], stddev=0.1))
    d_b_3 = tf.Variable(tf.constant(0.0, shape=[520]))

    # DNN classifier on the 64-d code: 64 -> 128 -> 128 -> output
    w_1 = tf.Variable(tf.truncated_normal([64, 128], stddev=0.1))
    b_1 = tf.Variable(tf.constant(0.0, shape=[128]))
    w_2 = tf.Variable(tf.truncated_normal([128, 128], stddev=0.1))
    b_2 = tf.Variable(tf.constant(0.0, shape=[128]))
    w_3 = tf.Variable(tf.truncated_normal([128, output], stddev=0.1))
    b_3 = tf.Variable(tf.constant(0.0, shape=[output]))

    layer_1 = tf.nn.tanh(tf.add(tf.matmul(X, e_w_1), e_b_1))
    layer_2 = tf.nn.tanh(tf.add(tf.matmul(layer_1, e_w_2), e_b_2))
    encoded = tf.nn.tanh(tf.add(tf.matmul(layer_2, e_w_3), e_b_3))
    layer_4 = tf.nn.tanh(tf.add(tf.matmul(encoded, d_w_1), d_b_1))
    layer_5 = tf.nn.tanh(tf.add(tf.matmul(layer_4, d_w_2), d_b_2))
    decoded = tf.nn.tanh(tf.add(tf.matmul(layer_5, d_w_3), d_b_3))
    layer_7 = tf.nn.relu(tf.add(tf.matmul(encoded, w_1), b_1))
    layer_8 = tf.nn.relu(tf.add(tf.matmul(layer_7, w_2), b_2))
    out = tf.nn.softmax(tf.add(tf.matmul(layer_8, w_3), b_3))
    return (decoded, out)


# Train the neural network
def train_neural_networks():
    decoded, predict_output = neural_network()

    # Unsupervised loss: mean squared reconstruction error
    us_cost_function = tf.reduce_mean(tf.pow(X - decoded, 2))
    # Supervised loss: cross-entropy; clipping avoids log(0) = -inf (NaN loss)
    s_cost_function = -tf.reduce_sum(Y * tf.log(tf.clip_by_value(predict_output, 1e-10, 1.0)))
    us_optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(us_cost_function)
    s_optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(s_cost_function)

    correct_prediction = tf.equal(tf.argmax(predict_output, 1), tf.argmax(Y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    training_epochs = 20
    batch_size = 10
    total_batches = training_x.shape[0] // batch_size  # mini-batches per epoch

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())

        # ---------- Phase 1: autoencoder, unsupervised learning ----------
        for epoch in range(training_epochs):
            epoch_costs = []
            for b in range(total_batches):
                offset = (b * batch_size) % (training_x.shape[0] - batch_size)
                batch_x = training_x[offset:(offset + batch_size), :]
                _, c = sess.run([us_optimizer, us_cost_function], feed_dict={X: batch_x})
                epoch_costs.append(c)
            print("Epoch:", epoch, " Loss:", np.mean(epoch_costs))

        print("----------------------------------------------")

        # ---------- Phase 2: classifier, supervised learning ----------
        # s_optimizer updates every variable s_cost_function depends on,
        # so the encoder weights are fine-tuned here as well
        for epoch in range(training_epochs):
            epoch_costs = []
            for b in range(total_batches):
                offset = (b * batch_size) % (training_x.shape[0] - batch_size)
                batch_x = training_x[offset:(offset + batch_size), :]
                batch_y = training_y[offset:(offset + batch_size), :]
                _, c = sess.run([s_optimizer, s_cost_function],
                                feed_dict={X: batch_x, Y: batch_y})
                epoch_costs.append(c)
            accuracy_in_train_set = sess.run(accuracy, feed_dict={X: training_x, Y: training_y})
            accuracy_in_test_set = sess.run(accuracy, feed_dict={X: test_x, Y: test_y})
            print("Epoch:", epoch, " Loss:", np.mean(epoch_costs),
                  " Accuracy:", accuracy_in_train_set, " ", accuracy_in_test_set)


train_neural_networks()
```

(1) Load the dataset:

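```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import scale

training_data = pd.read_csv("UJIndoorLoc/trainingData.csv", header=0)
training_x = scale(np.asarray(training_data.iloc[:, 0:520], dtype=np.float64))
training_y = np.asarray(training_data["BUILDINGID"].map(str) + training_data["FLOOR"].map(str))
training_y = np.asarray(pd.get_dummies(training_y), dtype=np.float32)

test_dataset = pd.read_csv("UJIndoorLoc/validationData.csv", header=0)
test_x = scale(np.asarray(test_dataset.iloc[:, 0:520], dtype=np.float64))
test_y = np.asarray(test_dataset["BUILDINGID"].map(str) + test_dataset["FLOOR"].map(str))
test_y = np.asarray(pd.get_dummies(test_y), dtype=np.float32)
```

Here sklearn handles the preprocessing. `scale` standardizes each of the 520 features to zero mean and unit variance. The building ID and floor are joined into one label string, and `pd.get_dummies` one-hot encodes that label.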
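The sketch below runs the same preprocessing on a tiny made-up table. The signal values are invented; only the `BUILDINGID`/`FLOOR` column names come from the dataset. It also shows one possible refinement that the code above does not use:

- A `StandardScaler` is fitted on the training data only and then reused for the test data, so both sets share one set of statistics.
- The one-hot categories are fixed to the training classes, so both label matrices have the same columns even if a building-floor combination never occurs in the test set.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler


def make_labels(df, classes=None):
    """One-hot encode building + floor, e.g. building 1, floor 2 -> label "12"."""
    labels = df["BUILDINGID"].map(str) + df["FLOOR"].map(str)
    if classes is None:
        classes = sorted(labels.unique())
    # A fixed category list keeps the columns identical for train and test;
    # a label missing from `classes` would become an all-zero row
    onehot = pd.get_dummies(pd.Categorical(labels, categories=classes))
    return np.asarray(onehot, dtype=np.float32), classes


# Hypothetical toy data: two signal columns plus the two label columns
train_df = pd.DataFrame({
    "WAP001": [-70.0, -80.0, -60.0, -90.0],
    "WAP002": [100.0, -50.0, 100.0, -75.0],
    "BUILDINGID": [0, 0, 1, 2],
    "FLOOR": [1, 2, 0, 3],
})
test_df = pd.DataFrame({
    "WAP001": [-65.0, -85.0],
    "WAP002": [100.0, -60.0],
    "BUILDINGID": [0, 2],
    "FLOOR": [1, 3],
})

# Mean and standard deviation are estimated from the training rows only
scaler = StandardScaler().fit(train_df.iloc[:, 0:2])
train_x = scaler.transform(train_df.iloc[:, 0:2])
test_x = scaler.transform(test_df.iloc[:, 0:2])

train_y, classes = make_labels(train_df)
test_y, _ = make_labels(test_df, classes)

print(classes)  # ['01', '02', '10', '23']
print(test_y)
```

With `scale` applied separately, as in the article, each set is standardized with its own mean and variance. That is simpler, but the same raw signal value can map to different inputs in training and testing.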

(2) Define the neural network:

```python
# Excerpt of the complete code above: assumes tf, X and output are already defined there
def neural_network():
    # Encoder (3 layers): 520 -> 256 -> 128 -> 64
    e_w_1 = tf.Variable(tf.truncated_normal([520, 256], stddev=0.1))
    e_b_1 = tf.Variable(tf.constant(0.0, shape=[256]))
    e_w_2 = tf.Variable(tf.truncated_normal([256, 128], stddev=0.1))
    e_b_2 = tf.Variable(tf.constant(0.0, shape=[128]))
    e_w_3 = tf.Variable(tf.truncated_normal([128, 64], stddev=0.1))
    e_b_3 = tf.Variable(tf.constant(0.0, shape=[64]))

    # Decoder (3 layers): 64 -> 128 -> 256 -> 520
    d_w_1 = tf.Variable(tf.truncated_normal([64, 128], stddev=0.1))
    d_b_1 = tf.Variable(tf.constant(0.0, shape=[128]))
    d_w_2 = tf.Variable(tf.truncated_normal([128, 256], stddev=0.1))
    d_b_2 = tf.Variable(tf.constant(0.0, shape=[256]))
    d_w_3 = tf.Variable(tf.truncated_normal([256, 520], stddev=0.1))
    d_b_3 = tf.Variable(tf.constant(0.0, shape=[520]))

    # DNN (3 layers): 64 -> 128 -> 128 -> output
    w_1 = tf.Variable(tf.truncated_normal([64, 128], stddev=0.1))
    b_1 = tf.Variable(tf.constant(0.0, shape=[128]))
    w_2 = tf.Variable(tf.truncated_normal([128, 128], stddev=0.1))
    b_2 = tf.Variable(tf.constant(0.0, shape=[128]))
    w_3 = tf.Variable(tf.truncated_normal([128, output], stddev=0.1))
    b_3 = tf.Variable(tf.constant(0.0, shape=[output]))

    # Encoder and decoder use tanh
    layer_1 = tf.nn.tanh(tf.add(tf.matmul(X, e_w_1), e_b_1))
    layer_2 = tf.nn.tanh(tf.add(tf.matmul(layer_1, e_w_2), e_b_2))
    encoded = tf.nn.tanh(tf.add(tf.matmul(layer_2, e_w_3), e_b_3))
    layer_4 = tf.nn.tanh(tf.add(tf.matmul(encoded, d_w_1), d_b_1))
    layer_5 = tf.nn.tanh(tf.add(tf.matmul(layer_4, d_w_2), d_b_2))
    decoded = tf.nn.tanh(tf.add(tf.matmul(layer_5, d_w_3), d_b_3))
    # The classifier branch starts from the 64-d code, not from the reconstruction
    layer_7 = tf.nn.relu(tf.add(tf.matmul(encoded, w_1), b_1))
    layer_8 = tf.nn.relu(tf.add(tf.matmul(layer_7, w_2), b_2))
    out = tf.nn.softmax(tf.add(tf.matmul(layer_8, w_3), b_3))
    return (decoded, out)
```

The network has three encoder layers, three decoder layers, two fully connected hidden layers and one output layer. The function returns two outputs: the decoder's output (the reconstructed input) and the predicted class probabilities.
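The layer sizes can be checked with a NumPy-only forward pass. This sketch mirrors the structure of `neural_network()`: a tanh encoder and decoder, ReLU hidden layers and a softmax output. The weights are random and untrained. Assumptions: the batch of 4 random inputs is illustrative, `n_classes = 13` is a hypothetical stand-in for `output`, and the weights come from a plain rather than truncated normal distribution.

```python
import numpy as np

rng = np.random.default_rng(0)


def dense(n_in, n_out):
    """Weights ~ N(0, 0.1^2) (plain normal, not truncated) and zero biases."""
    return rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out)


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


n_classes = 13  # hypothetical stand-in for `output`
encoder = [dense(520, 256), dense(256, 128), dense(128, 64)]
decoder = [dense(64, 128), dense(128, 256), dense(256, 520)]
classifier = [dense(64, 128), dense(128, 128), dense(128, n_classes)]

x = rng.normal(size=(4, 520))  # a batch of 4 fake standardized samples

h = x
for w, b in encoder:           # 520 -> 256 -> 128 -> 64, tanh
    h = np.tanh(h @ w + b)
encoded = h

h = encoded
for w, b in decoder:           # 64 -> 128 -> 256 -> 520, tanh
    h = np.tanh(h @ w + b)
decoded = h

h = encoded
for w, b in classifier[:2]:    # 64 -> 128 -> 128, ReLU
    h = np.maximum(h @ w + b, 0.0)
w, b = classifier[2]
out = softmax(h @ w + b)       # 128 -> n_classes, softmax

print(encoded.shape, decoded.shape, out.shape)  # (4, 64) (4, 520) (4, 13)
```

Each row of `out` sums to 1. The decoder is only needed to compute the reconstruction loss; classification never uses `decoded`.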

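Training runs in two phases:

1. The autoencoder is trained on the inputs alone, so that the encoder learns to extract features.
2. The classifier built on the encoder's output is trained with the labels. After each epoch, the network's accuracy is reported on the training and test sets.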
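The two-phase idea can be illustrated without TensorFlow. The sketch below is a deliberately simplified stand-in, not the article's model. It uses a single-layer linear autoencoder and a softmax-regression classifier, trained with full-batch gradient descent on synthetic clustered data.

- Phase 1 uses only the inputs and minimises the same mean-squared form as `us_cost_function`.
- Phase 2 uses the labels and minimises the mean (not, as `s_cost_function` does, the sum) of the cross-entropy.
- Unlike the TensorFlow code, where `s_optimizer` also fine-tunes the encoder, this sketch keeps the encoder frozen in phase 2.

All sizes, learning rates and the data generator are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical synthetic data: 3 well-separated clusters in 10 dimensions
n_per_class, d, k, n_classes = 100, 10, 3, 3
centers = rng.normal(0.0, 2.0, size=(n_classes, d))
labels = np.repeat(np.arange(n_classes), n_per_class)
X = centers[labels] + rng.normal(0.0, 0.5, size=(labels.size, d))
X = (X - X.mean(axis=0)) / X.std(axis=0)   # standardize, like sklearn's scale()
Y = np.eye(n_classes)[labels]              # one-hot labels
N = X.shape[0]
lr, steps = 0.1, 1000

# Phase 1 (unsupervised): linear autoencoder x -> x W_e -> x W_e W_d
W_e = rng.normal(0.0, 0.1, size=(d, k))
W_d = rng.normal(0.0, 0.1, size=(k, d))
rec_losses = []
for _ in range(steps):
    Z = X @ W_e                            # codes
    R = Z @ W_d - X                        # reconstruction error
    rec_losses.append(np.mean(R ** 2))     # mean squared error over all entries
    g_out = 2.0 * R / R.size               # dLoss / d(reconstruction)
    grad_W_d = Z.T @ g_out
    grad_W_e = X.T @ (g_out @ W_d.T)       # uses W_d before its update
    W_d -= lr * grad_W_d
    W_e -= lr * grad_W_e

# Phase 2 (supervised): softmax regression on the frozen codes
Z = X @ W_e
W_c = np.zeros((k, n_classes))
b_c = np.zeros(n_classes)
for _ in range(steps):
    logits = Z @ W_c + b_c
    logits -= logits.max(axis=1, keepdims=True)
    P = np.exp(logits)
    P /= P.sum(axis=1, keepdims=True)
    g_logits = (P - Y) / N                 # gradient of the mean cross-entropy
    W_c -= lr * (Z.T @ g_logits)
    b_c -= lr * g_logits.sum(axis=0)

accuracy = np.mean(np.argmax(Z @ W_c + b_c, axis=1) == labels)
print("reconstruction loss:", rec_losses[0], "->", rec_losses[-1])
print("training accuracy:", accuracy)
```

Freezing the encoder makes the separation between the phases explicit. Letting the supervised loss also update the encoder, as the TensorFlow code does, usually lets the features adapt to the classification task.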

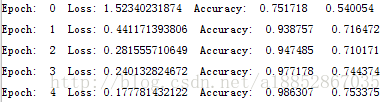

The results are as follows: