Residual Network:https://blog.csdn.net/koala_tree/article/details/78583979

The YOLO part follows:

1. Model details (source: https://blog.csdn.net/koala_tree/article/details/78690396)

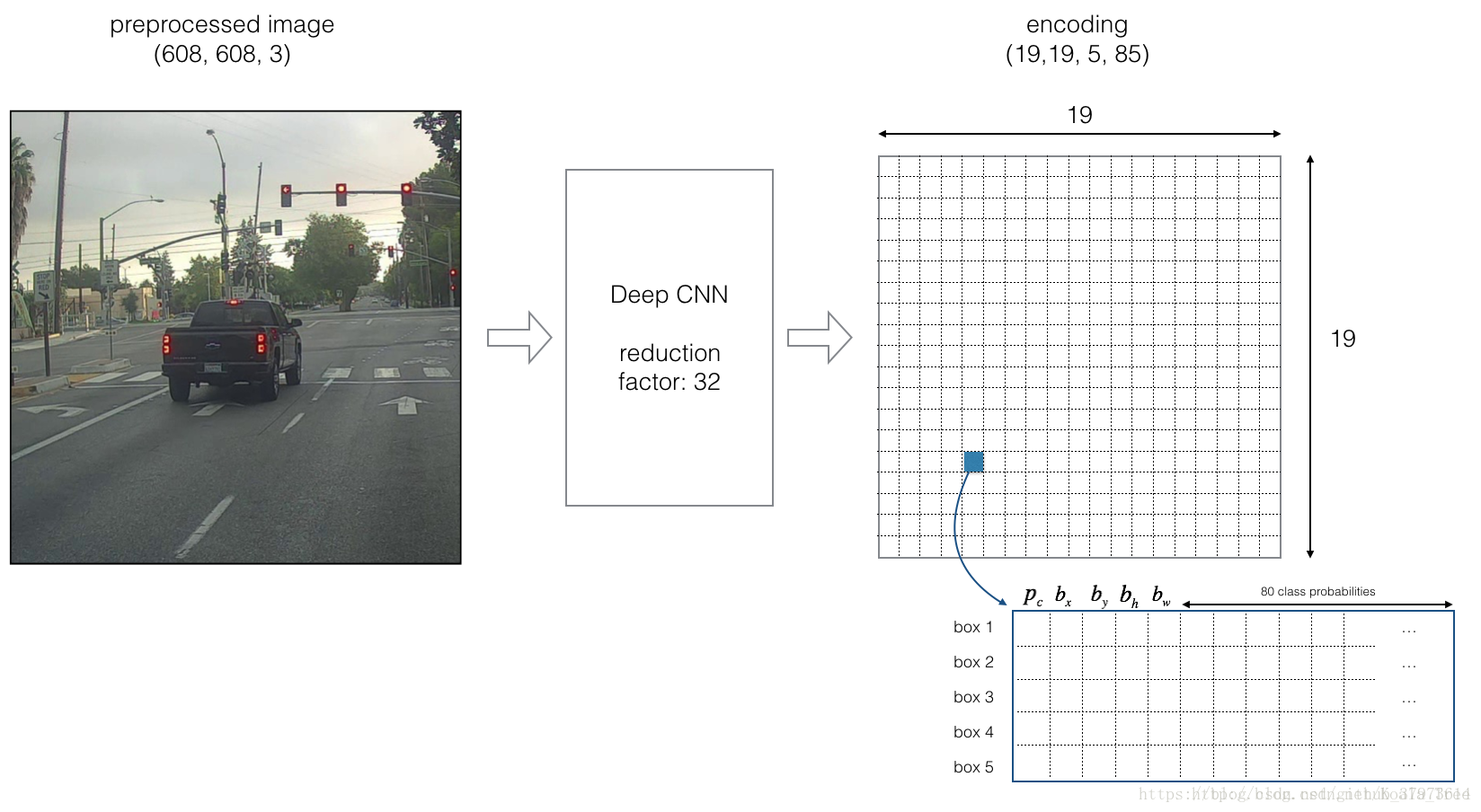

- The input is a batch of images of shape (m, 608, 608, 3)

- The output is a list of bounding boxes along with the recognized classes. Each bounding box is represented by 6 numbers (p_c, b_x, b_y, b_h, b_w, c). If you expand c into an 80-dimensional vector, each bounding box is then represented by 85 numbers. (There are 80 different classes in total here.)

We will use 5 anchor boxes. So you can think of the YOLO architecture as the following: IMAGE (m, 608, 608, 3) -> DEEP CNN -> ENCODING (m, 19, 19, 5, 85).

If the center/midpoint of an object falls into a grid cell, that grid cell is responsible for detecting that object.

Anchor boxes are defined only by their width and height.

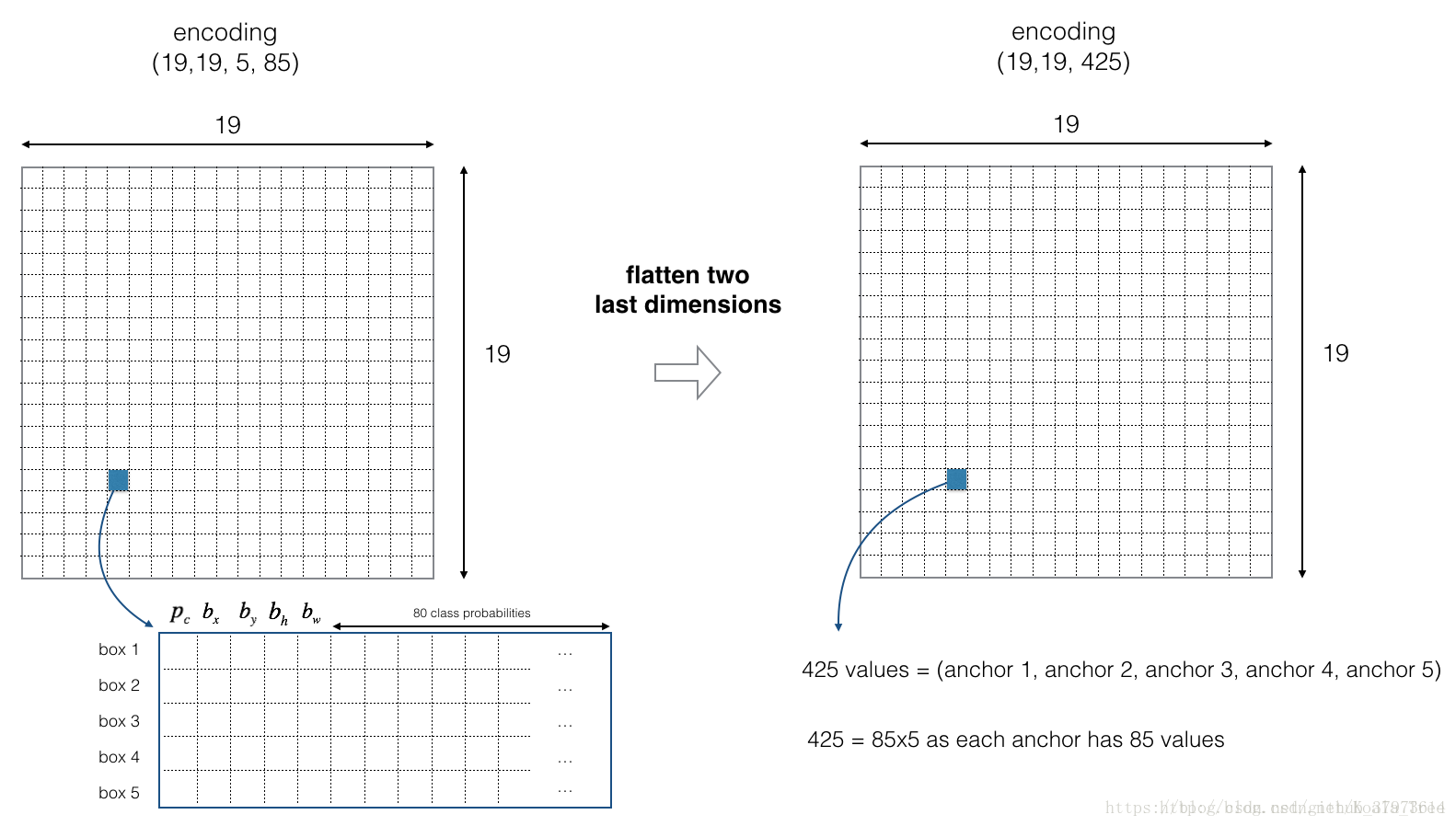

For simplicity, we will flatten the last two dimensions of the (19, 19, 5, 85) encoding, so the output of the Deep CNN is (19, 19, 425).
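The flattening can be checked with a small NumPy sketch. The array here is random data standing in for the network output of a single image (an assumption for illustration, not real YOLO output); it only shows how the 5 x 85 numbers of a cell are laid out in the 425-long axis.

```python
import numpy as np

# Hypothetical stand-in for the CNN output of one image: (19, 19, 5, 85).
rng = np.random.default_rng(0)
encoding = rng.random((19, 19, 5, 85))

# Merge the anchor-box axis (5) and the per-box axis (85) into one axis of 425.
flat = encoding.reshape(19, 19, 5 * 85)

# Anchor k of a cell occupies the slice [85 * k, 85 * (k + 1)) of the 425 numbers.
k = 3
anchor_k = flat[..., 85 * k : 85 * (k + 1)]

print(flat.shape)  # (19, 19, 425)
print(np.array_equal(anchor_k, encoding[..., k, :]))
```

Because the reshape is row-major, the 85 numbers of each anchor box stay contiguous, so the flattened volume can always be reshaped back to (19, 19, 5, 85).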

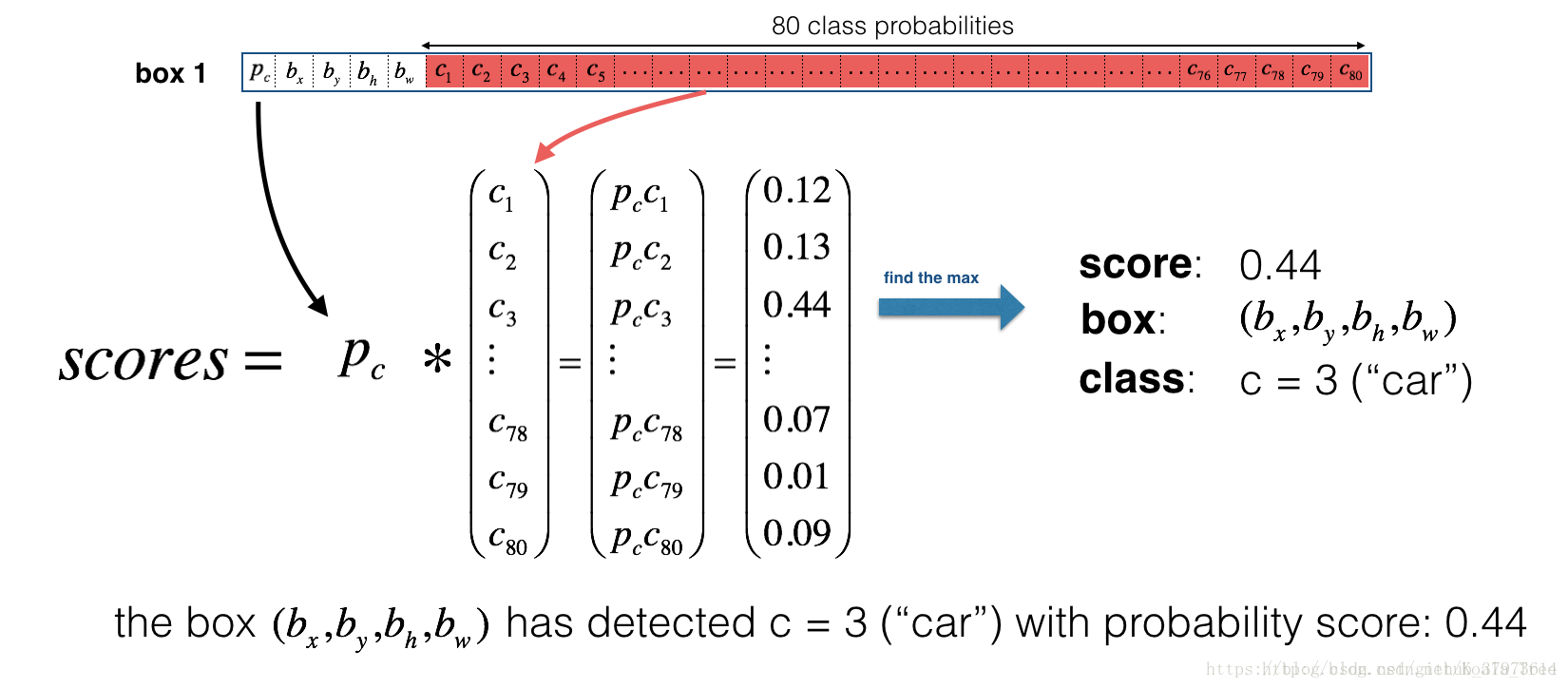

Now, for each box (of each cell), we compute the elementwise product of the box confidence p_c and the class probabilities (c_1, ..., c_80), which gives the probability that the box contains each class. (The class probabilities come from a softmax.)
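A minimal NumPy sketch of this score computation is shown here. The tensors are random placeholders with the shapes used in these notes, and the variable names are chosen for illustration; the real values would come from the trained network.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical predictions for one image (random numbers, not real model output).
box_confidence = rng.random((19, 19, 5, 1))    # p_c for each box
box_class_probs = rng.random((19, 19, 5, 80))  # c_1 ... c_80 for each box

# Elementwise product; the trailing axis of size 1 broadcasts over the 80 classes.
box_scores = box_confidence * box_class_probs  # shape (19, 19, 5, 80)

# For each box, keep the index of the best class and the score of that class.
box_classes = np.argmax(box_scores, axis=-1)     # shape (19, 19, 5)
box_class_scores = np.max(box_scores, axis=-1)   # shape (19, 19, 5)

print(box_scores.shape, box_classes.shape, box_class_scores.shape)
```

Each box ends up with a single class index and a single score, which is what the later filtering steps operate on.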

Here’s one way to visualize what YOLO is predicting on an image:

- For each of the 19x19 grid cells, find the maximum of the probability scores (taking a max across both the 5 anchor boxes and across different classes).

- Color that grid cell according to what object that grid cell considers the most likely.

Doing this results in this picture:

IOU:

- In this exercise only, we define a box using its two corners (upper left and lower right): (x1, y1, x2, y2) rather than the midpoint and height/width.

- To calculate the area of a rectangle you need to multiply its height (y2 - y1) by its width (x2 - x1)

- You’ll also need to find the coordinates (xi1, yi1, xi2, yi2) of the intersection of two boxes. Remember that:

  - xi1 = maximum of the x1 coordinates of the two boxes

  - yi1 = maximum of the y1 coordinates of the two boxes

  - xi2 = minimum of the x2 coordinates of the two boxes

  - yi2 = minimum of the y2 coordinates of the two boxes

We use the convention that (0,0) is the top-left corner of an image, (1,0) is the upper-right corner, and (1,1) is the lower-right corner.
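As a worked example of these rules, take two boxes chosen purely for illustration, box1 = (2, 1, 4, 3) and box2 = (1, 2, 3, 4). The arithmetic goes as follows:

```latex
\[
\mathrm{IoU}(A, B) = \frac{|A \cap B|}{|A \cup B|}
                   = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}
\]
\begin{align*}
x_{i1} &= \max(2, 1) = 2, & y_{i1} &= \max(1, 2) = 2, \\
x_{i2} &= \min(4, 3) = 3, & y_{i2} &= \min(3, 4) = 3, \\
|A \cap B| &= (3 - 2)(3 - 2) = 1, \\
|A| &= (4 - 2)(3 - 1) = 4, & |B| &= (3 - 1)(4 - 2) = 4, \\
\mathrm{IoU} &= \frac{1}{4 + 4 - 1} = \frac{1}{7} \approx 0.143.
\end{align*}
```

If the two boxes did not overlap, x_{i2} - x_{i1} or y_{i2} - y_{i1} would be negative, so the intersection area has to be treated as 0 in that case.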

    def iou(box1, box2):
        """Implement the intersection over union (IoU) between box1 and box2.

        Arguments:
        box1 -- first box, list object with coordinates (x1, y1, x2, y2)
        box2 -- second box, list object with coordinates (x1, y1, x2, y2)
        """
        # Calculate the (x1, y1, x2, y2) coordinates of the intersection of box1 and box2, then its area.
        xi1 = max(box1[0], box2[0])
        yi1 = max(box1[1], box2[1])
        xi2 = min(box1[2], box2[2])
        yi2 = min(box1[3], box2[3])
        # Clamp each side to zero so that non-overlapping boxes give an intersection area of 0.
        inter_area = max(xi2 - xi1, 0) * max(yi2 - yi1, 0)

        # Calculate the union area with the formula: Union(A,B) = A + B - Inter(A,B)
        box1_area = (box1[2] - box1[0]) * (box1[3] - box1[1])
        box2_area = (box2[2] - box2[0]) * (box2[3] - box2[1])
        union_area = box1_area + box2_area - inter_area

        # Compute the IoU
        iou = inter_area / union_area

        return iou

Non-max suppression function:

    # This code relies on the TensorFlow 1.x session API (K.get_session()).
    import tensorflow as tf
    from keras import backend as K

    def yolo_non_max_suppression(scores, boxes, classes, max_boxes = 10, iou_threshold = 0.5):
        """
        Applies non-max suppression (NMS) to a set of boxes.

        Arguments:
        scores -- tensor of shape (None,), output of yolo_filter_boxes()
        boxes -- tensor of shape (None, 4), output of yolo_filter_boxes() that have been scaled to the image size (see later)
        classes -- tensor of shape (None,), output of yolo_filter_boxes()
        max_boxes -- integer, maximum number of predicted boxes you'd like
        iou_threshold -- real value, "intersection over union" threshold used for NMS filtering

        Returns:
        scores -- tensor of shape (None,), predicted score for each box
        boxes -- tensor of shape (None, 4), predicted box coordinates
        classes -- tensor of shape (None,), predicted class for each box

        Note: The "None" dimension of the output tensors is at most max_boxes.
        """
        max_boxes_tensor = K.variable(max_boxes, dtype='int32') # tensor to be used in tf.image.non_max_suppression()
        K.get_session().run(tf.variables_initializer([max_boxes_tensor])) # initialize variable max_boxes_tensor

        # Use tf.image.non_max_suppression() to get the list of indices corresponding to boxes you keep
        ### START CODE HERE ### (≈ 1 line)
        nms_indices = tf.image.non_max_suppression(boxes, scores, max_boxes_tensor, iou_threshold)
        ### END CODE HERE ###

        # Use K.gather() to select only nms_indices from scores, boxes and classes
        ### START CODE HERE ### (≈ 3 lines)
        scores = K.gather(scores, nms_indices)
        boxes = K.gather(boxes, nms_indices)
        classes = K.gather(classes, nms_indices)
        ### END CODE HERE ###

        return scores, boxes, classes

Putting the steps together:

    def yolo_eval(yolo_outputs, image_shape = (720., 1280.), max_boxes=10, score_threshold=.6, iou_threshold=.5):
        """
        Converts the output of YOLO encoding (a lot of boxes) to your predicted boxes along with their scores, box coordinates and classes.

        Arguments:
        yolo_outputs -- output of the encoding model (for image_shape of (608, 608, 3)), contains 4 tensors:
                        box_confidence: tensor of shape (None, 19, 19, 5, 1)
                        box_xy: tensor of shape (None, 19, 19, 5, 2)
                        box_wh: tensor of shape (None, 19, 19, 5, 2)
                        box_class_probs: tensor of shape (None, 19, 19, 5, 80)
        image_shape -- tensor of shape (2,) containing the input shape, in this notebook we use (608., 608.) (has to be float32 dtype)
        max_boxes -- integer, maximum number of predicted boxes you'd like
        score_threshold -- real value, if (highest class probability score < threshold), then get rid of the corresponding box
        iou_threshold -- real value, "intersection over union" threshold used for NMS filtering

        Returns:
        scores -- tensor of shape (None,), predicted score for each box
        boxes -- tensor of shape (None, 4), predicted box coordinates
        classes -- tensor of shape (None,), predicted class for each box
        """
        # Note: yolo_boxes_to_corners, yolo_filter_boxes and scale_boxes are not shown in these notes.
        ### START CODE HERE ###
        # Retrieve outputs of the YOLO model (≈1 line)
        box_confidence, box_xy, box_wh, box_class_probs = yolo_outputs

        # Convert boxes to be ready for filtering functions
        boxes = yolo_boxes_to_corners(box_xy, box_wh)

        # Use one of the functions you've implemented to perform Score-filtering with a threshold of score_threshold (≈1 line)
        scores, boxes, classes = yolo_filter_boxes(box_confidence, boxes, box_class_probs, score_threshold)

        # Scale boxes back to original image shape.
        boxes = scale_boxes(boxes, image_shape)

        # Use one of the functions you've implemented to perform Non-max suppression with a threshold of iou_threshold (≈1 line)
        scores, boxes, classes = yolo_non_max_suppression(scores, boxes, classes, max_boxes, iou_threshold)
        ### END CODE HERE ###

        return scores, boxes, classes

The function finally returns scores, boxes, and classes.

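In other words, we take the outputs of an already trained YOLO network and post-process them with non-max suppression to obtain the boxes we want. We then output them and draw the boxes on the original image.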
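To make the non-max suppression step less of a black box, here is a minimal NumPy sketch of greedy NMS. It is not the implementation behind `tf.image.non_max_suppression`; the function name `nms_numpy` and the toy boxes are made up for illustration, and boxes are assumed to be non-degenerate corner boxes (x1, y1, x2, y2).

```python
import numpy as np

def nms_numpy(boxes, scores, max_boxes=10, iou_threshold=0.5):
    """Greedy NMS sketch. boxes: (N, 4) as (x1, y1, x2, y2); scores: (N,)."""
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    order = np.argsort(-scores)  # box indices sorted by descending score
    keep = []
    while order.size > 0 and len(keep) < max_boxes:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # Intersection of the best box with every remaining box.
        xi1 = np.maximum(boxes[best, 0], boxes[rest, 0])
        yi1 = np.maximum(boxes[best, 1], boxes[rest, 1])
        xi2 = np.minimum(boxes[best, 2], boxes[rest, 2])
        yi2 = np.minimum(boxes[best, 3], boxes[rest, 3])
        inter = np.clip(xi2 - xi1, 0, None) * np.clip(yi2 - yi1, 0, None)
        iou = inter / (areas[best] + areas[rest] - inter)
        # Drop the remaining boxes that overlap the best box too much.
        order = rest[iou <= iou_threshold]
    return keep

# Toy data: the first two boxes overlap heavily, the third is far away.
boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]]
scores = [0.9, 0.8, 0.7]
kept = nms_numpy(boxes, scores)
print(kept)
```

In the toy data the second box has an IoU of 81/119 (about 0.68) with the first, so it is suppressed at a threshold of 0.5, while the third box does not overlap anything and is kept.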

Summary for YOLO:

- Input image (608, 608, 3)

- The input image goes through a CNN, resulting in a (19,19,5,85) dimensional output.

- After flattening the last two dimensions, the output is a volume of shape (19, 19, 425):

  - Each cell in a 19x19 grid over the input image gives 425 numbers.

  - 425 = 5 x 85 because each cell contains predictions for 5 boxes, corresponding to 5 anchor boxes, as seen in lecture.

  - 85 = 5 + 80, where 5 is because (p_c, b_x, b_y, b_h, b_w) has 5 numbers, and 80 is the number of classes we’d like to detect.

- You then select only a few boxes based on:

  - Score-thresholding: throw away boxes that have detected a class with a score less than the threshold

  - Non-max suppression: compute the Intersection over Union and avoid selecting overlapping boxes

- This gives you YOLO’s final output.
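The score-thresholding step is only called in the code above (as `yolo_filter_boxes`), not shown. A rough NumPy sketch of what such a filter could look like follows; the function name `filter_boxes_numpy`, the random inputs, and the choice to keep scores equal to the threshold are assumptions for illustration, not the notebook's actual implementation.

```python
import numpy as np

def filter_boxes_numpy(box_class_scores, box_classes, boxes, threshold=0.6):
    """Keep only the boxes whose best class score reaches the threshold."""
    mask = box_class_scores >= threshold  # boolean array of shape (19, 19, 5)
    # Boolean indexing flattens the grid and anchor axes into one axis.
    return box_class_scores[mask], boxes[mask], box_classes[mask]

# Hypothetical inputs with the shapes used in these notes (random, not model output).
rng = np.random.default_rng(1)
box_class_scores = rng.random((19, 19, 5))
box_classes = rng.integers(0, 80, size=(19, 19, 5))
boxes = rng.random((19, 19, 5, 4))

scores, kept_boxes, classes = filter_boxes_numpy(box_class_scores, box_classes, boxes)
# Shapes are (n,), (n, 4) and (n,), where n is the number of surviving boxes.
print(scores.shape, kept_boxes.shape, classes.shape)
```

The boolean mask is what turns the fixed (19, 19, 5) grid into a variable-length list of boxes, which is why the tensors after filtering have a `None` dimension.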