Convolution

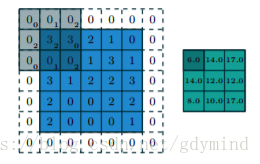

Each *input feature map* is convolved with its own kernel, and the results are summed to produce one *output feature map*.
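The summation over input feature maps can be sketched in NumPy. This is a minimal illustration, not an efficient implementation: the helper names `conv2d_single` and `conv2d_multi_in` are hypothetical, padding is zero and stride is one, and "convolution" is computed as cross-correlation (no kernel flip), which is the usual deep-learning convention.

```python
import numpy as np

def conv2d_single(x, k):
    """Cross-correlate one 2-D feature map with one 2-D kernel (no padding, stride 1)."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

def conv2d_multi_in(x, kernels):
    """x: (C, H, W) input feature maps; kernels: (C, kh, kw), one kernel per input map.
    Returns a single output feature map: the sum of the per-channel results."""
    return sum(conv2d_single(x[c], kernels[c]) for c in range(x.shape[0]))

# Two 4x4 input feature maps, one 3x3 kernel for each of them
x = np.arange(2 * 4 * 4, dtype=float).reshape(2, 4, 4)
kernels = np.ones((2, 3, 3))
y = conv2d_multi_in(x, kernels)
print(y.shape)  # (2, 2)
```

A layer with several output feature maps would repeat this with a separate set of kernels per output map.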

Output feature map’s shape

Convolution layer

The shape of a convolution layer's output feature map depends on the following variables:

- input feature map shape

- kernel size (usually odd)

- zero padding

- stride

| Name | | | | |
|---|---|---|---|---|
| No zero padding & unit stride | | | | |
| Unit strides | | | | |
| Half (or same) padding | | | | |
| Full padding | | | | |
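The named cases above can be checked with a small helper. This sketch assumes the general relation $o = \lfloor (i + 2p - k)/s \rfloor + 1$ along one axis, as given in A guide to convolution arithmetic for deep learning; the function name `conv_output_size` and the symbols `i`, `k`, `p`, `s` (input size, kernel size, zero padding, stride) are choices made for this example.

```python
def conv_output_size(i, k, p=0, s=1):
    """Output size along one axis: floor((i + 2p - k) / s) + 1."""
    return (i + 2 * p - k) // s + 1

i, k = 7, 5
print(conv_output_size(i, k))              # no zero padding, unit stride: 3
print(conv_output_size(i, k, p=1))         # zero padding p=1, unit stride: 5
print(conv_output_size(i, k, p=k // 2))    # half (same) padding, odd k: 7
print(conv_output_size(i, k, p=k - 1))     # full padding: 11
```

With half padding and an odd kernel the output size equals the input size, which is one reason odd kernel sizes are common.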

For example, the figure below illustrates half padding:

Fully-connected layers

The output shape is independent of the input shape.

Note: for more detail on the underlying arithmetic, see the paper A guide to convolution arithmetic for deep learning.

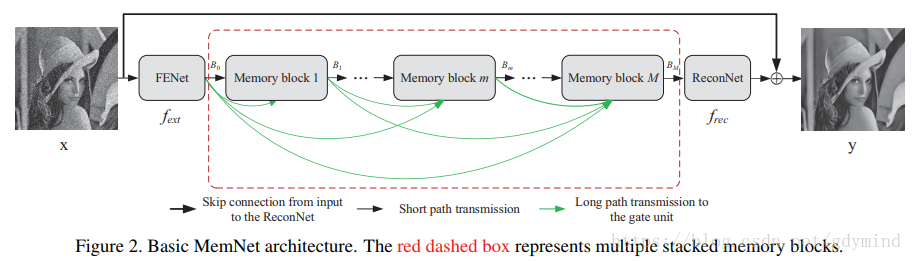

Blocks & Deep neural network

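Thanks to their depth, deep neural networks have strong feature-extraction ability. The main body of such a network can be built by chaining several identical blocks in series.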

Take MemNet in the figure below as an example: the part inside the red dashed box is a chain of basic Memory Blocks.

The blocks discussed below share one key feature: they create paths (skip connections) from early layers to later layers.

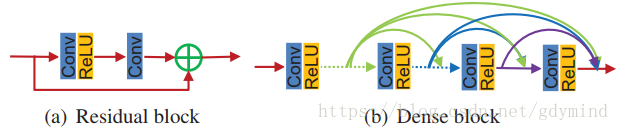

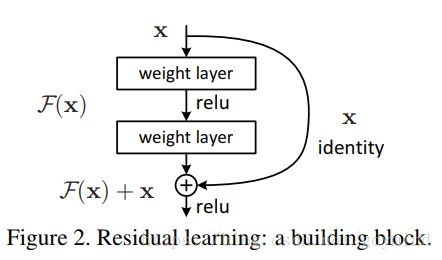

Residual block & skip connection

A residual block uses a skip connection to link the input of a conv layer to its output, i.e., it adds an identity mapping.

The benefits of doing so are:

- easier to learn the expected mapping

- alleviate the gradient vanishing problem
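A residual block can be sketched as `f(x) + x`. In the example below a matrix multiply stands in for the conv layer (an assumption made to keep the sketch short), and the name `residual_block` is hypothetical. With all-zero weights the block reduces exactly to the identity, which suggests why a mapping close to the identity is easy to learn: the layer only has to learn a small residual.

```python
import numpy as np

def residual_block(x, f):
    """Return f(x) + x: the learned residual plus the identity (skip) path."""
    return f(x) + x

# Stand-in for the conv layer: a linear map with zero weights, so f(x) = 0
w = np.zeros((4, 4))
f = lambda v: v @ w

x = np.array([1.0, 2.0, 3.0, 4.0])
y = residual_block(x, f)
print(np.array_equal(y, x))  # True
```

The skip path also contributes an identity term to the derivative of the output with respect to `x`, so the gradient reaching earlier layers does not depend only on the weights of `f`.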

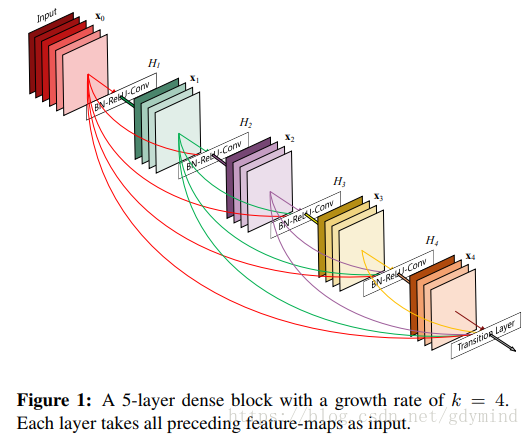

Dense Block

Inside a dense block, every pair of layers is connected in a *feed-forward* direction (from earlier to later).

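In other words, if a block contains $L$ layers, the total number of connections is $L(L+1)/2$ (counting the connections from the block input to each layer).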
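The connection count can be verified by enumeration. This sketch assumes the DenseNet convention that every layer receives the block input plus the outputs of all earlier layers, which gives $L(L+1)/2$ connections for $L$ layers; the function name `dense_connections` is hypothetical.

```python
def dense_connections(num_layers):
    """List (source, target) pairs; index 0 is the block input, 1..L are the layers."""
    return [(src, dst)
            for dst in range(1, num_layers + 1)
            for src in range(dst)]

L = 4
pairs = dense_connections(L)
print(len(pairs))  # 10
```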

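Growth rate: the number of feature maps that each layer produces is called the growth rate.

Denote this number by $k$. Then the $\ell$-th layer inside a block has $k_0 + k(\ell - 1)$ input feature maps, where $k_0$ is the number of feature maps the whole block receives as input.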
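The channel bookkeeping can be written out directly. The sketch assumes the relation $k_0 + k(\ell - 1)$ for the number of input feature maps of the $\ell$-th layer, with growth rate $k$ and $k_0$ block input maps; the function name and the sample values are hypothetical.

```python
def dense_block_input_channels(k0, k, num_layers):
    """Input feature-map count for layers 1..num_layers: k0 + k * (l - 1)."""
    return [k0 + k * (l - 1) for l in range(1, num_layers + 1)]

channels = dense_block_input_channels(k0=64, k=32, num_layers=4)
print(channels)  # [64, 96, 128, 160]
```

Each layer adds only `k` new feature maps, so the input width grows linearly with depth while every individual layer stays narrow.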

The benefits of doing so are:

- alleviate the gradient vanishing problem

- strengthen feature propagation and encourage feature reuse: as information about the input or gradient passes through many layers, it can vanish and “wash out” by the time it reaches the end (or beginning) of the network; dense connections give it short paths between layers

- reduce the number of parameters: because there is no need to relearn redundant feature-maps, DenseNet layers are very narrow

- regularizing effect: it reduces overfitting on tasks with smaller training set sizes

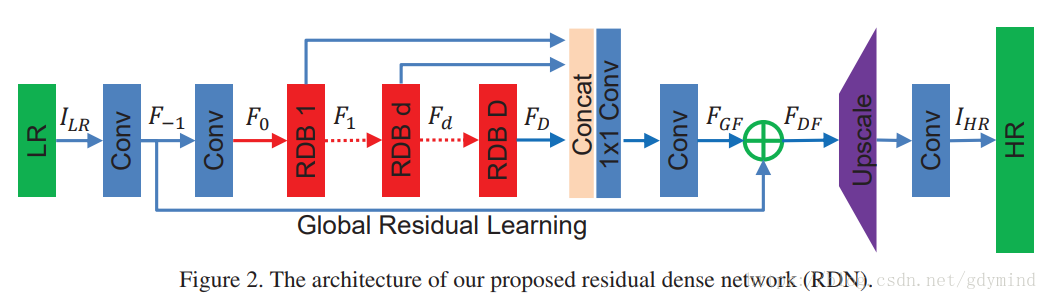

Residual Dense Block

Review of Residual Block and Dense Block

Residual Dense Block

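It first combines the two designs above, i.e., dense connections between the layers inside the block plus a residual skip connection around the block.

It then adds a $1 \times 1$ conv, used for feature fusion.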
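The data flow of such a block can be sketched at the shape level: dense concatenation, then a $1 \times 1$ conv for feature fusion, then a residual add. This is a rough illustration under several assumptions: every conv is replaced by a $1 \times 1$ (pointwise) stand-in with random weights, the sizes `k0`, `k`, and `num_layers` are hypothetical, and the function names are chosen for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1x1(x, w):
    """1x1 convolution: mixes channels at each pixel. x: (C_in, H, W), w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def residual_dense_block(x, layer_weights, fusion_weight):
    """Dense connections, then 1x1 feature fusion, then a residual add."""
    features = [x]
    for w in layer_weights:
        inp = np.concatenate(features, axis=0)            # all earlier feature maps
        features.append(np.maximum(conv1x1(inp, w), 0))   # stand-in conv + ReLU
    fused = conv1x1(np.concatenate(features, axis=0), fusion_weight)
    return x + fused                                      # skip connection

k0, k, num_layers = 8, 4, 3  # hypothetical sizes
x = rng.standard_normal((k0, 5, 5))
layer_weights = [rng.standard_normal((k, k0 + k * i)) * 0.1 for i in range(num_layers)]
fusion_weight = rng.standard_normal((k0, k0 + k * num_layers)) * 0.1

y = residual_dense_block(x, layer_weights, fusion_weight)
print(y.shape)  # (8, 5, 5)
```

The fusion conv maps the concatenated `k0 + k * num_layers` channels back to `k0`, which is what makes the residual addition with `x` shape-compatible.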

Residual Dense Network

The network architecture is shown below. The network performs the SISR (single image super-resolution) task.

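In the figure:

- LR is the input low-resolution image

- the first two conv layers form the shallow feature extraction net (SFENet), which extracts shallow features

- the middle part consists of residual dense blocks and dense feature fusion (DFF)

- the last part is the up-sampling net (UPNet), which upscales the result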