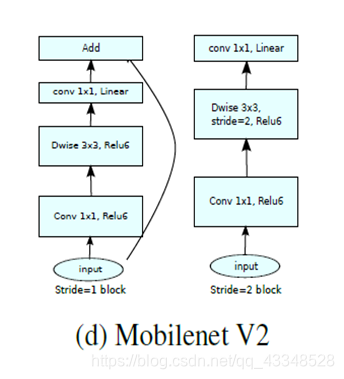

- MobileNet v2 main structure:

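For CIFAR10, the input is not resized to 224x224x3. It keeps its original 32x32x3 size, so the network structure was adjusted to match and slightly trimmed. Compared with the ImageNet configuration in the paper, the 160- and 320-channel bottleneck stages and the 1280-channel 1x1 conv are dropped. After the 96-channel stage, a single 1x1 conv with 160 channels feeds the global average pool, and a dense layer produces the 10 class logits.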
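The sketch below shows how the trimmed stride schedule fits a 32x32 input. It is pure Python and traces the output shape of every stage in `mobilenet_v2()` from the code below. It assumes 'same' padding, so each stage outputs ceil(input / stride), and a global average pool at the end. For each inverted residual block it also reports which shortcut the code's `inverted_residual_block` would use. The stage table is transcribed from that code. `STAGES` and `trace_shapes` are hypothetical helper names used only for illustration.

```python
import math

# (name, kind, output_channels, stride), transcribed from mobilenet_v2():
# "conv" = plain conv + BN + activation, "irb" = inverted residual block.
STAGES = [
    ("block0_0", "conv", 32, 2),
    ("block1_0", "irb", 16, 1),
    ("block2_0", "irb", 24, 2), ("block2_1", "irb", 24, 1),
    ("block3_0", "irb", 32, 2), ("block3_1", "irb", 32, 1),
    ("block3_2", "irb", 32, 1),
    ("block4_0", "irb", 64, 2), ("block4_1", "irb", 64, 1),
    ("block4_2", "irb", 64, 1), ("block4_3", "irb", 64, 1),
    ("block5_0", "irb", 96, 1), ("block5_1", "irb", 96, 1),
    ("block5_2", "irb", 96, 1),
    ("block6_0", "conv", 160, 1),
]


def trace_shapes(height=32, width=32, channels=3):
    """Return (name, (H, W, C), shortcut) for every stage, assuming 'same' padding."""
    rows = []
    for name, kind, out_channels, stride in STAGES:
        # Shortcut rule of inverted_residual_block: only when stride == 1,
        # identity if channels match, otherwise a 1x1 conv on the input.
        if kind == "irb" and stride == 1:
            shortcut = "identity" if channels == out_channels else "1x1 conv"
        else:
            shortcut = "none"
        # With 'same' padding the spatial size becomes ceil(size / stride).
        height, width = math.ceil(height / stride), math.ceil(width / stride)
        channels = out_channels
        rows.append((name, (height, width, channels), shortcut))
    # block7 averages over the whole remaining H x W (global average pooling).
    rows.append(("block7", (1, 1, channels), "none"))
    return rows


for name, shape, shortcut in trace_shapes():
    print(f"{name:9s} {shape}  shortcut={shortcut}")
```

With a 32x32 input, the four stride-2 stages bring the feature map down to 2x2 at block4_0, so the final pool only covers 2x2. Starting from 224x224, the same schedule would still leave 14x14 at that point.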

- Code:

```python
# Feel free to play with this cell
# Requires TensorFlow 1.x (tf.placeholder, tf.layers, tf.contrib).
import tensorflow as tf

tf.reset_default_graph()

X = tf.placeholder(tf.float32, [None, 32, 32, 3])
y = tf.placeholder(tf.int64, [None])
is_training = tf.placeholder(tf.bool)


def mobilenet_v2_func_blocks(is_training):
    """Build the layer helpers; all share the same is_training placeholder."""

    def conv2d(inputs, filters, kernel_size, stride, scope=''):
        # Standard conv -> BN -> ReLU6 (ReLU6 as in the MobileNetV2 paper).
        with tf.variable_scope(scope):
            with tf.variable_scope('conv2d'):
                outputs = tf.layers.conv2d(inputs, filters, kernel_size,
                                           strides=(stride, stride), padding='same')
                outputs = tf.layers.batch_normalization(outputs, training=is_training)
                outputs = tf.nn.relu6(outputs)
        return outputs

    def _1x1_conv2d(inputs, filters, stride):
        # 1x1 conv + BN (no activation), used to match channels on the shortcut.
        with tf.variable_scope('1x1_conv2d'):
            outputs = tf.layers.conv2d(inputs, filters, [1, 1],
                                       strides=(stride, stride), padding='same')
            outputs = tf.layers.batch_normalization(outputs, training=is_training)
        return outputs

    def expansion_conv2d(inputs, expansion, stride):
        # 1x1 conv that widens the channels by `expansion`, then BN + ReLU6.
        filters = inputs.get_shape().as_list()[3] * expansion
        with tf.variable_scope('expansion_1x1_conv2d'):
            outputs = tf.layers.conv2d(inputs, filters, [1, 1],
                                       strides=(stride, stride), padding='same')
            outputs = tf.layers.batch_normalization(outputs, training=is_training)
            outputs = tf.nn.relu6(outputs)
        return outputs

    def projection_conv2d(inputs, filters, stride):
        # Linear bottleneck: 1x1 conv + BN with no activation.
        with tf.variable_scope('projection_1x1_conv2d'):
            outputs = tf.layers.conv2d(inputs, filters, [1, 1],
                                       strides=(stride, stride), padding='same')
            outputs = tf.layers.batch_normalization(outputs, training=is_training)
        return outputs

    def depthwise_conv2d(inputs, kernel_size, stride):
        # num_outputs=None makes separable_conv2d a pure depthwise conv (no extra
        # pointwise step); activation_fn=None because BN + ReLU6 follow.
        with tf.variable_scope('depthwise_conv2d'):
            outputs = tf.contrib.layers.separable_conv2d(
                inputs, None, kernel_size, depth_multiplier=1,
                stride=stride, padding='SAME', activation_fn=None)
            outputs = tf.layers.batch_normalization(outputs, training=is_training)
            outputs = tf.nn.relu6(outputs)
        return outputs

    def avg_pool2d(inputs, scope=''):
        # Global average pooling over the full remaining H x W.
        _, pool_height, pool_width, _ = inputs.get_shape().as_list()
        with tf.variable_scope(scope):
            outputs = tf.layers.average_pooling2d(inputs, [pool_height, pool_width],
                                                  strides=(1, 1), padding='valid')
        return outputs

    def inverted_residual_block(inputs, filters, stride, expansion=6, scope=''):
        # expand (1x1) -> depthwise (3x3, carries the stride) -> project (1x1).
        with tf.variable_scope(scope):
            net = expansion_conv2d(inputs, expansion, stride=1)
            net = depthwise_conv2d(net, [3, 3], stride=stride)
            net = projection_conv2d(net, filters, stride=1)
            if stride == 1:
                # The paper adds the shortcut only when the channels also match;
                # here a 1x1 conv projects the input when they differ.
                if inputs.get_shape().as_list()[3] != filters:
                    inputs = _1x1_conv2d(inputs, filters, stride=1)
                net = net + inputs
        return net

    return {
        'conv2d': conv2d,
        'inverted_residual_block': inverted_residual_block,
        'avg_pool2d': avg_pool2d,
    }


def mobilenet_v2(inputs, is_training):
    blocks = mobilenet_v2_func_blocks(is_training)
    _conv2d = blocks['conv2d']
    _irb = blocks['inverted_residual_block']
    _avg_pool2d = blocks['avg_pool2d']

    # Shapes in the comments are for a 32x32x3 input.
    with tf.variable_scope('mobilenet_v2', values=[inputs]):
        net = _conv2d(inputs, 32, [3, 3], stride=2, scope='block0_0')  # 16x16x32
        net = _irb(net, 16, stride=1, expansion=1, scope='block1_0')   # 16x16x16
        net = _irb(net, 24, stride=2, scope='block2_0')                # 8x8x24
        net = _irb(net, 24, stride=1, scope='block2_1')
        net = _irb(net, 32, stride=2, scope='block3_0')                # 4x4x32
        net = _irb(net, 32, stride=1, scope='block3_1')
        net = _irb(net, 32, stride=1, scope='block3_2')
        net = _irb(net, 64, stride=2, scope='block4_0')                # 2x2x64
        net = _irb(net, 64, stride=1, scope='block4_1')
        net = _irb(net, 64, stride=1, scope='block4_2')
        net = _irb(net, 64, stride=1, scope='block4_3')
        net = _irb(net, 96, stride=1, scope='block5_0')                # 2x2x96
        net = _irb(net, 96, stride=1, scope='block5_1')
        net = _irb(net, 96, stride=1, scope='block5_2')
        net = _conv2d(net, 160, [1, 1], stride=1, scope='block6_0')    # 2x2x160
        net = _avg_pool2d(net, scope='block7')                         # 1x1x160
        flatten = tf.contrib.layers.flatten(net)
        y_out = tf.layers.dense(flatten, 10)                           # 10 logits
    print('logits shape: {}'.format(y_out.get_shape()))
    return y_out


y_out = mobilenet_v2(X, is_training)

# Equivalent alternative: tf.losses.sparse_softmax_cross_entropy(labels=y, logits=y_out)
mean_loss = tf.reduce_mean(
    tf.nn.softmax_cross_entropy_with_logits_v2(labels=tf.one_hot(y, 10), logits=y_out))
optimizer = tf.train.RMSPropOptimizer(1e-3)

# tf.layers.batch_normalization only updates its moving mean/variance when the
# ops in UPDATE_OPS run, so the training step must depend on them.
extra_update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
with tf.control_dependencies(extra_update_ops):
    train_step = optimizer.minimize(mean_loss)
```
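The inverted residual block stays cheap even though it widens the channels 6x, because its 3x3 filtering is depthwise. The rough sketch below illustrates this by counting convolution weights for block3_1 (32 → 32 channels, expansion 6). It compares the block against a hypothetical variant that uses a full 3x3 conv in the expanded space. As a deliberate simplification, it ignores biases and batch-norm parameters. `inverted_residual_weights` is a hypothetical helper, not a TensorFlow API.

```python
def inverted_residual_weights(c_in, c_out, expansion=6, k=3, depthwise=True):
    """Count conv weights (no biases, no BN) of one inverted residual block."""
    c_mid = c_in * expansion
    expand = c_in * c_mid                # 1x1 expansion conv
    if depthwise:
        middle = k * k * c_mid           # one k x k filter per channel
    else:
        middle = k * k * c_mid * c_mid   # full k x k conv, for comparison only
    project = c_mid * c_out              # 1x1 linear projection
    return expand + middle + project


dw = inverted_residual_weights(32, 32)
full = inverted_residual_weights(32, 32, depthwise=False)
print(dw, full, round(full / dw, 1))
```

For block3_1 this gives 14,016 weights versus 344,064, roughly a 24.5x reduction. Nearly all of the difference comes from the middle 3x3 layer: 1,728 depthwise weights versus 331,776 for a full conv. This also shows why the depthwise step should not carry its own pointwise conv, since that would add another c_mid x c_mid layer.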

- Results: