Series index

- See section 4.1 of the blog post 《Paper》, the 【Keras】Classification in CIFAR-10 series

Credits

- github: BIGBALLON/cifar-10-cnn
- Zhihu column: 写给妹子的深度学习教程
- Mobilenet Caffe code: https://github.com/shicai/MobileNet-Caffe/blob/master/mobilenet_deploy.prototxt
- Mobilenet Keras code: https://github.com/Hedlen/Mobilenet-Keras/blob/master/model/mobilenet.py

Code download

- Link: https://pan.baidu.com/s/1UYbkq56IVBB-fHHlG7p3yg

Extraction code: lpjd

Hardware

- TITAN XP

1 Theoretical background

See 【MobileNet】《MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications》.

The takeaway: comparable accuracy with fewer parameters, less computation, and higher speed.
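To make the "fewer parameters, less computation" claim concrete, here is a minimal sketch of the cost comparison given in the MobileNets paper. A standard convolution costs D_K·D_K·M·N·D_F·D_F multiply-adds, while a depth-wise separable one costs D_K·D_K·M·D_F·D_F + M·N·D_F·D_F, a ratio of 1/N + 1/D_K². The function names and the example layer (3×3 kernel, 512 to 512 channels, 14×14 feature map) are illustrative choices, not taken from this post.

```python
def standard_conv_cost(dk, m, n, df):
    """Multiply-adds of a standard dk x dk convolution (m -> n channels, df x df output)."""
    return dk * dk * m * n * df * df


def separable_conv_cost(dk, m, n, df):
    """Multiply-adds of a depth-wise conv followed by a 1x1 point-wise conv."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


dk, m, n, df = 3, 512, 512, 14  # illustrative layer, not from the post
ratio = separable_conv_cost(dk, m, n, df) / standard_conv_cost(dk, m, n, df)
print(f"separable / standard = {ratio:.4f}")
print(f"1/N + 1/Dk^2         = {1 / n + 1 / dk ** 2:.4f}")
```

With a 3×3 kernel the ratio is roughly 1/9 plus a small 1/N term, which is where the paper's figure of 8 to 9 times less computation comes from.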

2 Mobilenet implementation

2.1 mobilenet

1) Import the libraries and set the hyperparameters

import os

os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

import keras
from keras.datasets import cifar10
from keras import backend as K
from keras.layers import Input, Conv2D, GlobalAveragePooling2D, Dense, BatchNormalization, Activation
from keras.models import Model
from keras.layers import DepthwiseConv2D
from keras import optimizers, regularizers
from keras.preprocessing.image import ImageDataGenerator
from keras.initializers import he_normal
from keras.callbacks import LearningRateScheduler, TensorBoard, ModelCheckpoint

num_classes = 10
batch_size = 64  # 64 or 32 or other
epochs = 300
iterations = 782  # batches per epoch: ceil(50000 / 64)
USE_BN = True
DROPOUT = 0.2  # keep 80%
CONCAT_AXIS = 3
weight_decay = 1e-4
DATA_FORMAT = 'channels_last'  # Theano:'channels_first' Tensorflow:'channels_last'
log_filepath = './mobilenet'
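The value `iterations = 782` is the number of batches per epoch. A quick sketch of where it comes from, assuming the standard CIFAR-10 training split of 50,000 images (the variable `num_train` is introduced here for illustration):

```python
import math

num_train = 50000  # size of the CIFAR-10 training set
batch_size = 64

# The last batch is smaller than 64, so round up.
iterations = math.ceil(num_train / batch_size)
print(iterations)
```

If `batch_size` is changed, `iterations` should be recomputed the same way so that one epoch still covers the whole training set.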

2) Preprocess the data and set the learning schedule

def color_preprocessing(x_train, x_test):
    # Normalize each RGB channel with a fixed per-channel mean and std.
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')
    mean = [125.307, 122.95, 113.865]
    std = [62.9932, 62.0887, 66.7048]
    for i in range(3):
        x_train[:, :, :, i] = (x_train[:, :, :, i] - mean[i]) / std[i]
        x_test[:, :, :, i] = (x_test[:, :, :, i] - mean[i]) / std[i]
    return x_train, x_test

def scheduler(epoch):
    # Step schedule: 0.01 for epochs 0-99, 0.001 for 100-199, 0.0001 afterwards.
    if epoch < 100:
        return 0.01
    if epoch < 200:
        return 0.001
    return 0.0001

# load data
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
y_train = keras.utils.to_categorical(y_train, num_classes)
y_test = keras.utils.to_categorical(y_test, num_classes)
x_train, x_test = color_preprocessing(x_train, x_test)

3) Define the building block

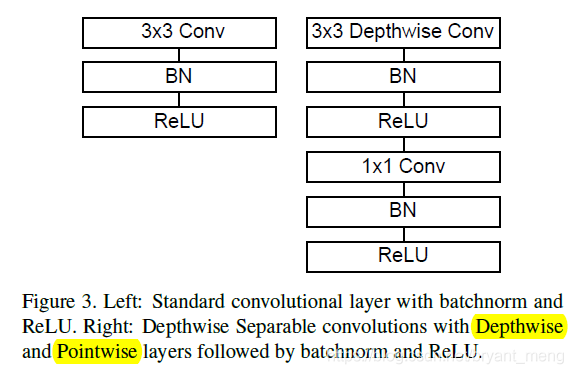

depth-wise separable convolution

Keras official documentation: https://keras.io/layers/convolutional/#depthwiseconv2d

def depthwise_separable(x, params):
    # s1: stride of the depth-wise conv; f2: number of point-wise filters
    (s1, f2) = params
    x = DepthwiseConv2D((3, 3), strides=(s1[0], s1[0]), padding='same')(x)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)
    x = Conv2D(int(f2[0]), (1, 1), strides=(1, 1), padding='same')(x)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)
    return x

- s1 sets the stride of the depth-wise convolution. The channel count is fixed and equals that of the input, but the stride changes the resolution of the output.
- f2 is the number of filters in the point-wise convolution, that is, the number of output channels.

4) Build the network

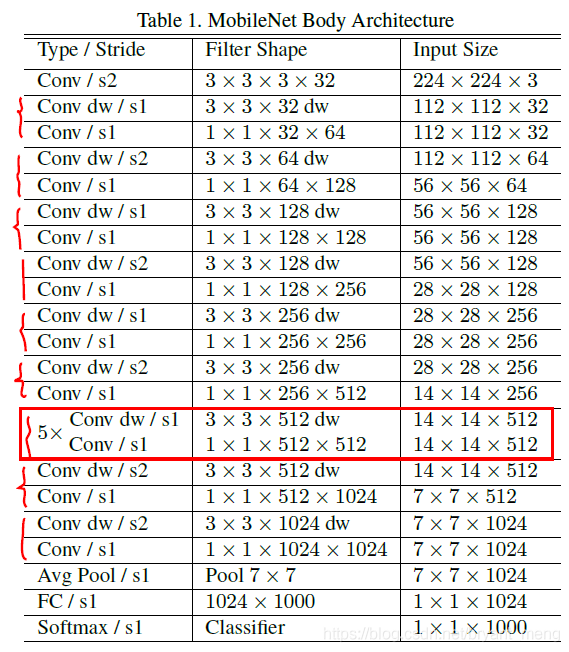

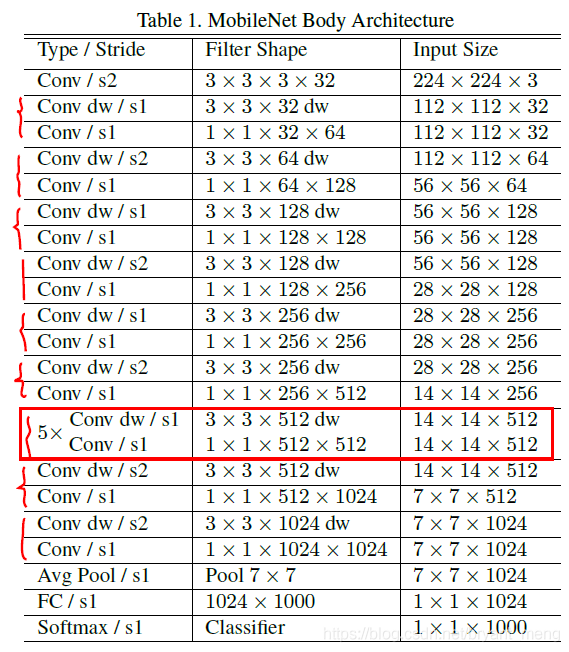

Build the network from the block designed in 3). The overall architecture is as follows:

Parentheses denote a depth-wise separable convolution = depth-wise convolution + point-wise convolution.

Down sampling is handled with strided convolution in the depthwise convolutions as well as in the first layer.

def MobileNet(img_input, shallow=False, classes=10):
    """Instantiates the MobileNet.

    # Arguments
        img_input: input tensor of the network.
        shallow: optional parameter for making network smaller
            (skips the five repeated 512-filter blocks).
        classes: optional number of classes to classify images into.
    """
    x = Conv2D(32, (3, 3), strides=(2, 2), padding='same')(img_input)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)

    x = depthwise_separable(x, params=[(1,), (64,)])
    x = depthwise_separable(x, params=[(2,), (128,)])
    x = depthwise_separable(x, params=[(1,), (128,)])
    x = depthwise_separable(x, params=[(2,), (256,)])
    x = depthwise_separable(x, params=[(1,), (256,)])
    x = depthwise_separable(x, params=[(2,), (512,)])

    if not shallow:
        for _ in range(5):
            x = depthwise_separable(x, params=[(1,), (512,)])

    x = depthwise_separable(x, params=[(2,), (1024,)])
    x = depthwise_separable(x, params=[(1,), (1024,)])

    x = GlobalAveragePooling2D()(x)
    out = Dense(classes, activation='softmax')(x)
    return out

5) Create the model

img_input = Input(shape=(32, 32, 3))
output = MobileNet(img_input)
model = Model(img_input, output)
model.summary()

Total params: 3,250,058

Trainable params: 3,228,170

Non-trainable params: 21,888
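These totals can be checked by hand. The sketch below is plain Python with no Keras dependency. It assumes each convolution has a bias (the Keras default, which the code above does not override) and that each BatchNormalization layer holds four vectors per channel: two trainable (gamma, beta) and two non-trainable (moving mean, moving variance). The helper name `count_params` is hypothetical.

```python
def count_params(classes=10):
    """Return (total, trainable, non_trainable) for the MobileNet defined above."""
    # Output channels of the 13 depth-wise separable blocks.
    widths = [64, 128, 128, 256, 256, 512] + [512] * 5 + [1024, 1024]

    trainable = 0
    non_trainable = 0

    def add_bn(channels):
        nonlocal trainable, non_trainable
        trainable += 2 * channels      # gamma, beta
        non_trainable += 2 * channels  # moving mean, moving variance

    # First layer: 3x3 conv, 3 -> 32 channels, with bias, then BN.
    c_in = 32
    trainable += 3 * 3 * 3 * c_in + c_in
    add_bn(c_in)

    for c_out in widths:
        trainable += 3 * 3 * c_in + c_in   # depth-wise 3x3 kernel + bias
        add_bn(c_in)
        trainable += c_in * c_out + c_out  # point-wise 1x1 kernel + bias
        add_bn(c_out)
        c_in = c_out

    trainable += c_in * classes + classes  # final Dense layer
    return trainable + non_trainable, trainable, non_trainable


total, trainable, non_trainable = count_params()
print(f"Total params: {total:,}")
print(f"Trainable params: {trainable:,}")
print(f"Non-trainable params: {non_trainable:,}")
```

Under these assumptions the non-trainable parameters are exactly the BatchNormalization moving statistics.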

6) Start training

# set optimizer
# lr=.1 is only an initial value: LearningRateScheduler overwrites it at the start of every epoch
sgd = optimizers.SGD(lr=.1, momentum=0.9, nesterov=True)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

# set callback
tb_cb = TensorBoard(log_dir=log_filepath, histogram_freq=0)
change_lr = LearningRateScheduler(scheduler)
cbks = [change_lr, tb_cb]

# set data augmentation
datagen = ImageDataGenerator(horizontal_flip=True,
                             width_shift_range=0.125,
                             height_shift_range=0.125,
                             fill_mode='constant', cval=0.)
datagen.fit(x_train)

# start training
model.fit_generator(datagen.flow(x_train, y_train, batch_size=batch_size),
                    steps_per_epoch=iterations,
                    epochs=epochs,
                    callbacks=cbks,
                    validation_data=(x_test, y_test))
model.save('mobilenet.h5')
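Note that `LearningRateScheduler` sets the optimizer's learning rate at the start of every epoch, so the `lr=.1` passed to `SGD` is replaced by the schedule's value before the first batch. The small sketch below repeats `scheduler` from step 2) so that it runs on its own, and lists the rates that are actually used over the 300 epochs:

```python
def scheduler(epoch):
    """Step schedule from step 2): 0.01, then 0.001, then 0.0001."""
    if epoch < 100:
        return 0.01
    if epoch < 200:
        return 0.001
    return 0.0001


epochs = 300
rates = [scheduler(epoch) for epoch in range(epochs)]

# Epochs (0-based) at which the learning rate changes.
changes = [(e, r) for e, r in enumerate(rates) if e == 0 or r != rates[e - 1]]
print(changes)
```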

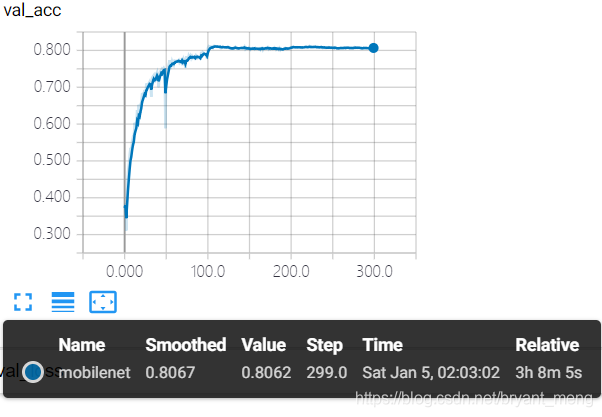

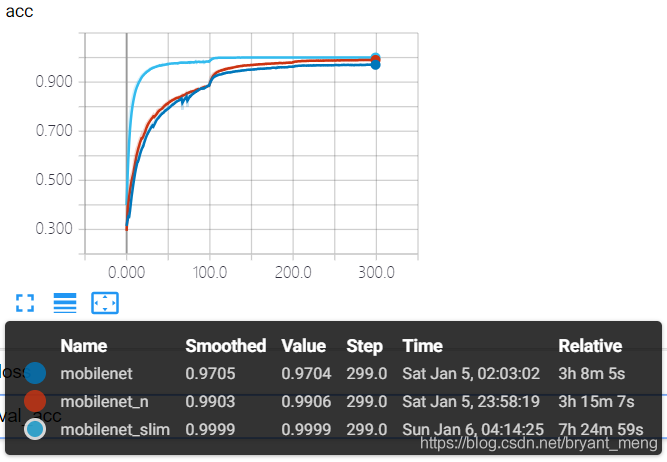

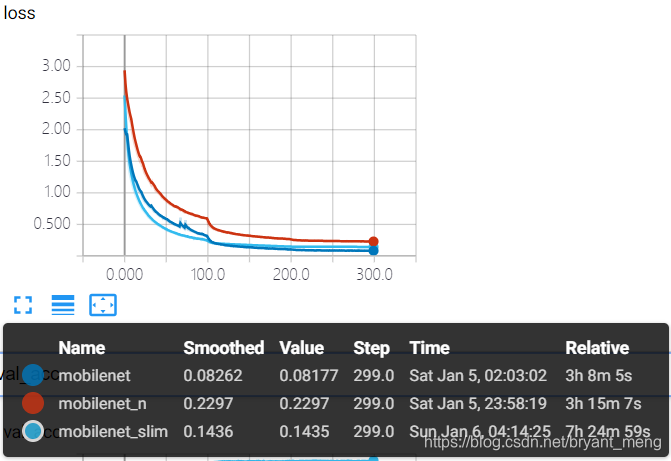

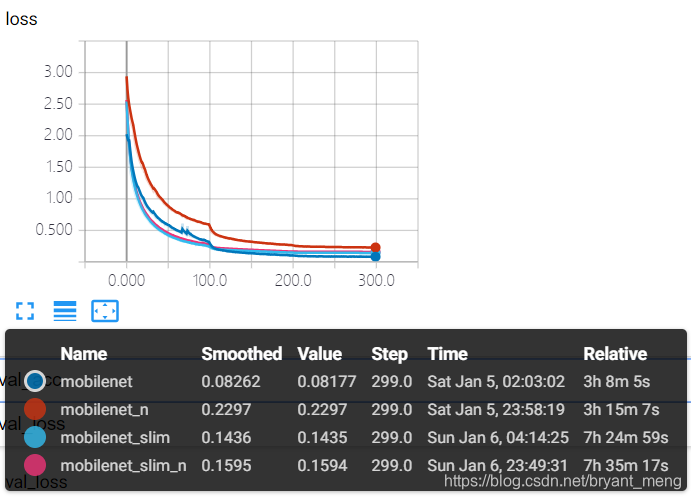

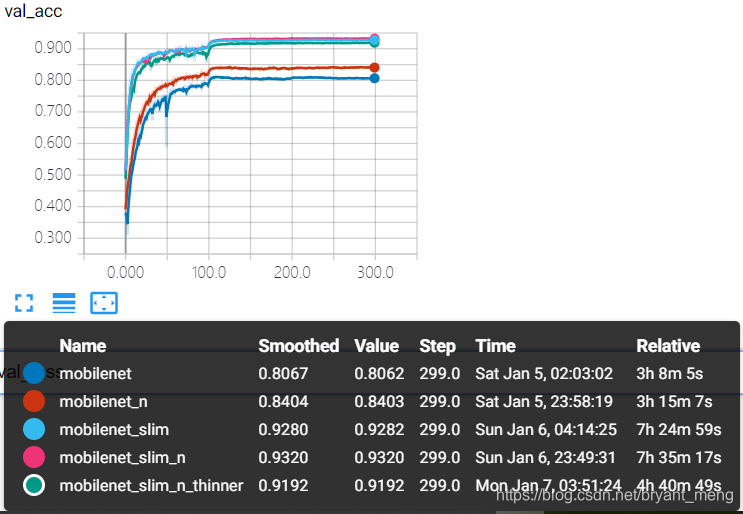

7) Result analysis

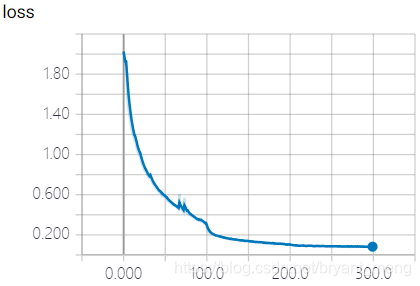

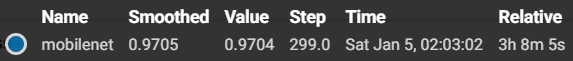

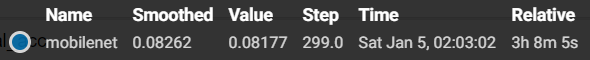

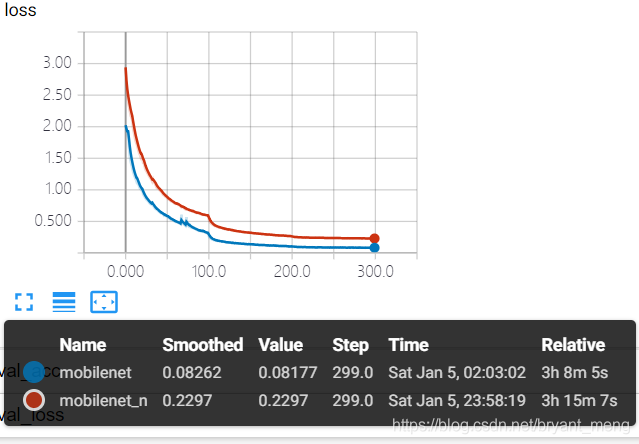

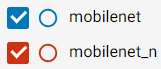

Training accuracy and training loss

- accuracy
- loss

Training accuracy reaches 97%+.

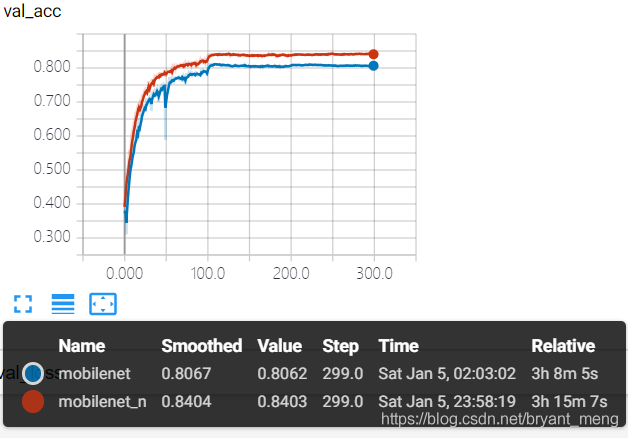

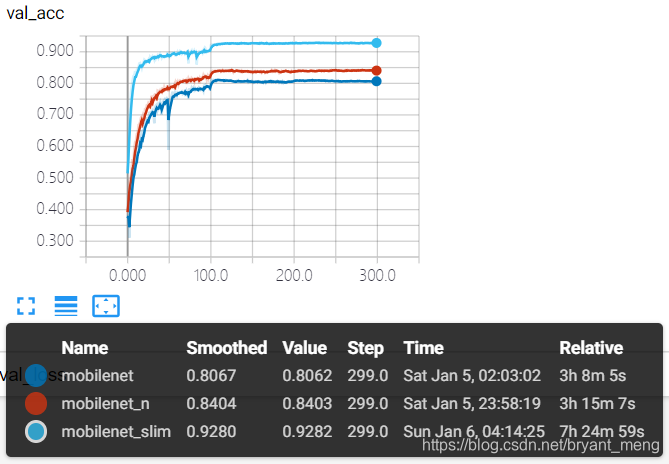

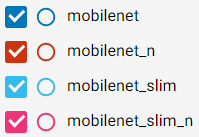

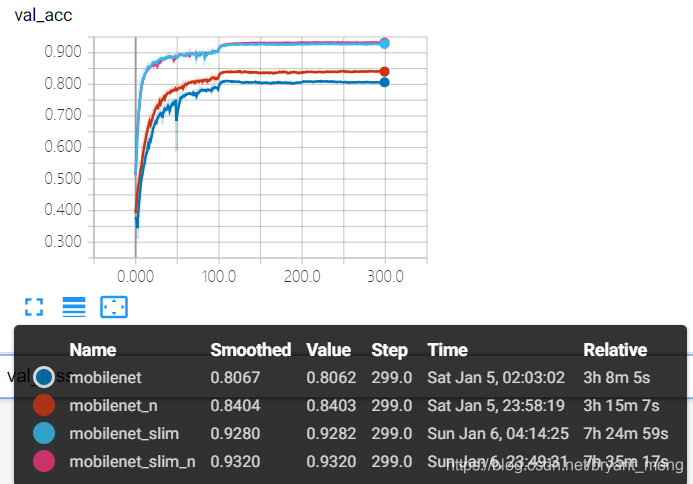

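Test accuracy and test loss

Test accuracy is low, only 80%+. The test loss curve takes on a Nike-swoosh shape (it falls and then rises again), so overfitting is severe. This is easy to understand: on ImageNet the input is 224 and the last convolutions run at a resolution of 7×7 after 5 downsampling steps (a factor of 2^5 = 32). With the 32×32 CIFAR-10 input, the feature map is already down to 2×2 after 4 downsampling steps, so the remaining convolutions can do very little.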
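The argument can be checked with a few lines of arithmetic. The sketch below assumes `padding='same'`, for which a stride-s convolution maps an n×n input to ceil(n/s)×ceil(n/s). `STRIDES` lists the strides of the first convolution and the 13 depth-wise separable blocks from step 4), and `trace_resolution` is a helper name introduced here.

```python
import math

# Strides of the first conv followed by the 13 depth-wise separable blocks.
STRIDES = [2, 1, 2, 1, 2, 1, 2] + [1] * 5 + [2, 1]


def trace_resolution(size, strides=STRIDES):
    """Return the feature-map side length after every layer ('same' padding)."""
    sizes = []
    for s in strides:
        size = math.ceil(size / s)
        sizes.append(size)
    return sizes


print("ImageNet 224:", trace_resolution(224))
print("CIFAR-10  32:", trace_resolution(32))
```

For the 32×32 input, the five repeated 512-filter blocks operate on 2×2 maps and the last two blocks on a single pixel, where a 3×3 depth-wise kernel sees only that pixel and zero padding.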

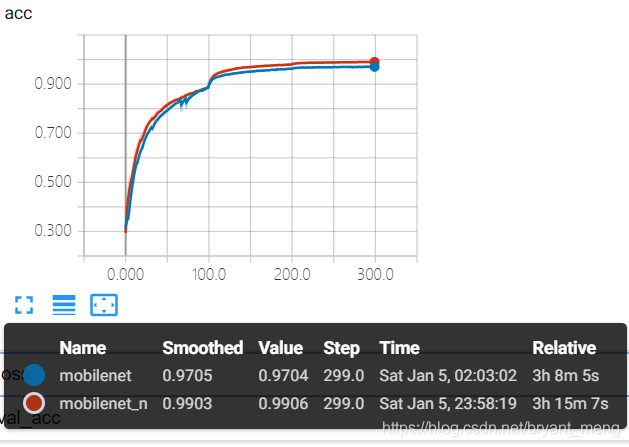

2.2 mobilenet_n

The model in 2.1 clearly overfits, so we make two changes:

- Add regularization to the point-wise convolutions. Why not also apply l2 regularization (weight decay) to the depth-wise convolutions? Because the paper advises putting very little or no weight decay (l2 regularization) on the depthwise filters, since there are so few parameters in them. For now we change only the point-wise convolutions.
- Change the initializer of the depth-wise and point-wise convolutions from the default glorot_uniform to he_normal.
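For reference, here is a minimal sketch of what these two settings do numerically, without Keras. It assumes the definitions given in the Keras documentation: `regularizers.l2(l)` adds `l * sum(w**2)` to the loss, `he_normal` draws from a truncated normal with standard deviation `sqrt(2 / fan_in)`, and `glorot_uniform` draws uniformly from `[-limit, limit]` with `limit = sqrt(6 / (fan_in + fan_out))`. The layer size and the toy weights are illustrative.

```python
import math


def l2_penalty(weights, weight_decay=1e-4):
    """Extra loss term added by an l2 kernel regularizer."""
    return weight_decay * sum(w * w for w in weights)


def he_normal_stddev(fan_in):
    """Standard deviation used by he_normal."""
    return math.sqrt(2.0 / fan_in)


def glorot_uniform_limit(fan_in, fan_out):
    """Half-width of the interval used by glorot_uniform."""
    return math.sqrt(6.0 / (fan_in + fan_out))


# Point-wise (1x1) convolution with 512 input and 512 output channels.
fan_in, fan_out = 512, 512
print("he_normal stddev     :", he_normal_stddev(fan_in))
print("glorot_uniform limit :", glorot_uniform_limit(fan_in, fan_out))

# Toy kernel: the penalty grows with the squared weight magnitude.
toy_weights = [0.5, -1.0, 2.0]
print("l2 penalty           :", l2_penalty(toy_weights))
```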

The modified code is:

def depthwise_separable(x, params):
    # s1: stride of the depth-wise conv; f2: number of point-wise filters
    (s1, f2) = params
    x = DepthwiseConv2D((3, 3), strides=(s1[0], s1[0]), padding='same',
                        depthwise_initializer="he_normal")(x)
    x = BatchNormalization(momentum=0.9, epsilon=1e-5)(x)
    x = Activation('relu')(x)
    x = Conv2D(int(f2[0]), (1, 1), strides=(1, 1), padding='same',
               kernel_initializer="he_normal",
               kernel_regularizer=regularizers.l2(weight_decay))(x)
    x = BatchNormalization(momentum=0.9, epsilon=1e-5)(x)
    x = Activation('relu')(x)
    return x

The rest of the code is the same as mobilenet.

Parameter count (unchanged):

Total params: 3,250,058

Trainable params: 3,228,170

Non-trainable params: 21,888

- mobilenet (Total params: 3,250,058)

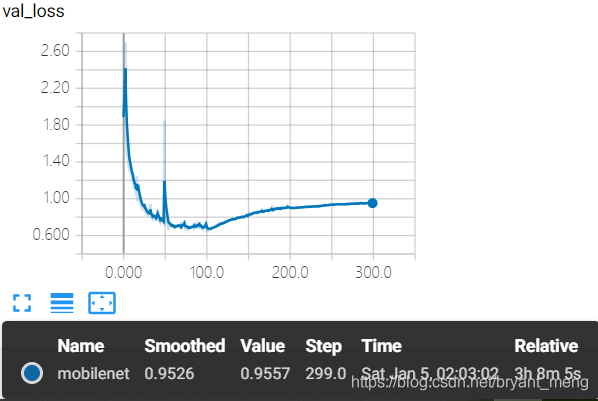

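Result analysis:

Training accuracy and training loss

Test accuracy and test loss

Test accuracy improves to 84%+, but the Nike swoosh still appears and overfitting remains severe. The explanation is the same as in 2.1: on ImageNet the input is 224, and 5 downsampling steps (a factor of 2^5 = 32) leave a 7×7 map for the last convolutions; with the 32×32 CIFAR-10 input, 4 downsampling steps already shrink the map to 2×2, so the later convolutions contribute little.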

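2.3 mobilenet_slim

Building on 2.2, and as in 【Keras-Inception-resnet v1】CIFAR-10 and 【Keras-Inception-resnet v2】CIFAR-10, we remove the first three downsampling steps, that is, we change the first three stride=2 to stride=1, to preserve the resolution of the feature maps.

The modified code is: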

def MobileNet(img_input, shallow=False, classes=10):
    """Instantiates the MobileNet.

    # Arguments
        img_input: input tensor of the network.
        shallow: optional parameter for making network smaller
            (skips the five repeated 512-filter blocks).
        classes: optional number of classes to classify images into.
    """
    # change stride
    x = Conv2D(32, (3, 3), strides=(1, 1), padding='same',
               kernel_initializer="he_normal",
               kernel_regularizer=regularizers.l2(weight_decay))(img_input)  # change stride
    x = BatchNormalization()(x)
    x = Activation('relu')(x)

    x = depthwise_separable(x, params=[(1,), (64,)])
    x = depthwise_separable(x, params=[(1,), (128,)])  # change stride
    x = depthwise_separable(x, params=[(1,), (128,)])
    x = depthwise_separable(x, params=[(1,), (256,)])  # change stride
    x = depthwise_separable(x, params=[(1,), (256,)])
    x = depthwise_separable(x, params=[(2,), (512,)])

    if not shallow:
        for _ in range(5):
            x = depthwise_separable(x, params=[(1,), (512,)])

    x = depthwise_separable(x, params=[(2,), (1024,)])
    x = depthwise_separable(x, params=[(1,), (1024,)])

    x = GlobalAveragePooling2D()(x)
    out = Dense(classes, activation='softmax')(x)
    return out

The rest of the code is the same as mobilenet_n.

Parameter count (unchanged):

Total params: 3,250,058

Trainable params: 3,228,170

Non-trainable params: 21,888

- mobilenet (Total params: 3,250,058)
- mobilenet_n (Total params: 3,250,058)

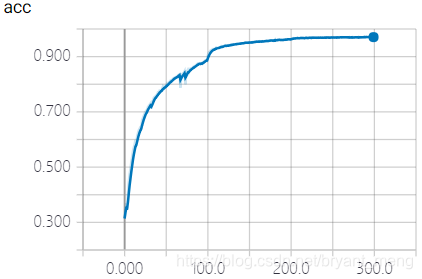

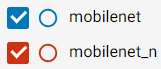

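Result analysis:

Training accuracy and training loss

Training accuracy reaches 99.9%, a good sign. Training takes much longer, which is expected: the feature maps now have a higher resolution, so the amount of computation grows accordingly.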
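A rough estimate of the extra cost, as a sketch: count the multiply-adds of the convolutions for both stride settings on a 32×32 input. It assumes `padding='same'` (output side = ceil(input side / stride)) and ignores BatchNormalization, activations, and the final Dense layer. `conv_mult_adds` and the two configuration tuples are names introduced here.

```python
import math

# Output channels of the 13 depth-wise separable blocks.
WIDTHS = [64, 128, 128, 256, 256, 512] + [512] * 5 + [1024, 1024]

# (first-conv stride, strides of the 13 depth-wise separable blocks)
MOBILENET = (2, [1, 2, 1, 2, 1, 2] + [1] * 5 + [2, 1])
MOBILENET_SLIM = (1, [1, 1, 1, 1, 1, 2] + [1] * 5 + [2, 1])


def conv_mult_adds(config, size=32):
    """Return (multiply-adds of all convolutions, final feature-map side)."""
    first_stride, block_strides = config
    size = math.ceil(size / first_stride)
    c_in = 32
    total = size * size * 3 * 3 * 3 * c_in  # first 3x3 conv on the RGB input
    for stride, c_out in zip(block_strides, WIDTHS):
        size = math.ceil(size / stride)
        total += size * size * 3 * 3 * c_in  # depth-wise 3x3
        total += size * size * c_in * c_out  # point-wise 1x1
        c_in = c_out
    return total, size


base, base_size = conv_mult_adds(MOBILENET)
slim, slim_size = conv_mult_adds(MOBILENET_SLIM)
print(f"mobilenet     : {base:,} mult-adds, final map {base_size}x{base_size}")
print(f"mobilenet_slim: {slim:,} mult-adds, final map {slim_size}x{slim_size}")
print(f"ratio         : {slim / base:.1f}x")
```

Under these assumptions the convolution cost grows by well over an order of magnitude, while the parameter count stays the same because strides do not affect kernel shapes.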

Test accuracy and test loss

Test accuracy reaches 92%+, and overfitting is alleviated.

2.4 mobilenet_slim_n

Building on 2.3, we try applying l2 regularization (weight decay) to the depth-wise convolutions as well.

Recall that the paper advises putting very little or no weight decay (l2 regularization) on the depthwise filters, since there are so few parameters in them. This experiment tests what happens if we apply it anyway.

The modified code is:

def depthwise_separable(x, params):
    # s1: stride of the depth-wise conv; f2: number of point-wise filters
    (s1, f2) = params
    x = DepthwiseConv2D((3, 3), strides=(s1[0], s1[0]), padding='same',
                        depthwise_initializer="he_normal",
                        depthwise_regularizer=regularizers.l2(weight_decay))(x)
    x = BatchNormalization(momentum=0.9, epsilon=1e-5)(x)
    x = Activation('relu')(x)
    x = Conv2D(int(f2[0]), (1, 1), strides=(1, 1), padding='same',
               kernel_initializer="he_normal",
               kernel_regularizer=regularizers.l2(weight_decay))(x)
    x = BatchNormalization(momentum=0.9, epsilon=1e-5)(x)
    x = Activation('relu')(x)
    return x

The rest of the code is the same as mobilenet_slim.

Parameter count (unchanged):

Total params: 3,250,058

Trainable params: 3,228,170

Non-trainable params: 21,888

- mobilenet (Total params: 3,250,058)
- mobilenet_n (Total params: 3,250,058)
- mobilenet_slim (Total params: 3,250,058)

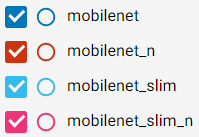

Result analysis:

Training accuracy and training loss

About the same as mobilenet_slim.

Test accuracy and test loss

Test accuracy exceeds 93%, slightly better than mobilenet_slim.

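2.5 mobilenet_slim_n_thinner

Building on 2.4, we remove the part of the architecture table that is boxed in red, that is, the 5× repeated block.

The modified code is:

You can either delete the code for that block, or simply change the default value of the shallow parameter in the function definition from False to True.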

def MobileNet(img_input, shallow=True, classes=10):
    """Instantiates the MobileNet.

    # Arguments
        img_input: input tensor of the network.
        shallow: optional parameter for making network smaller
            (skips the five repeated 512-filter blocks).
        classes: optional number of classes to classify images into.
    """
    # change stride
    x = Conv2D(32, (3, 3), strides=(1, 1), padding='same',
               kernel_initializer="he_normal",
               kernel_regularizer=regularizers.l2(weight_decay))(img_input)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)

    x = depthwise_separable(x, params=[(1,), (64,)])
    x = depthwise_separable(x, params=[(1,), (128,)])  # change stride
    x = depthwise_separable(x, params=[(1,), (128,)])
    x = depthwise_separable(x, params=[(1,), (256,)])  # change stride
    x = depthwise_separable(x, params=[(1,), (256,)])
    x = depthwise_separable(x, params=[(2,), (512,)])

    if not shallow:
        for _ in range(5):
            x = depthwise_separable(x, params=[(1,), (512,)])

    x = depthwise_separable(x, params=[(2,), (1024,)])
    x = depthwise_separable(x, params=[(1,), (1024,)])

    x = GlobalAveragePooling2D()(x)
    out = Dense(classes, activation='softmax')(x)
    return out

The rest of the code is the same as mobilenet_slim_n.

Parameter count (reduced):

Total params: 1,890,698

Trainable params: 1,879,050

Non-trainable params: 11,648

- mobilenet (Total params: 3,250,058)
- mobilenet_n (Total params: 3,250,058)
- mobilenet_slim (Total params: 3,250,058)
- mobilenet_slim_n (Total params: 3,250,058)
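The drop from 3,250,058 to 1,890,698 parameters can be accounted for exactly: it is the five removed 512-to-512 blocks. A sketch of that bookkeeping, assuming biased convolutions (the Keras default) and four parameters per channel in each BatchNormalization layer; `block_params` is a helper name introduced here.

```python
def block_params(c_in, c_out):
    """Parameters of one depth-wise separable block (conv biases and BN included)."""
    depthwise = 3 * 3 * c_in + c_in   # 3x3 kernel per channel + bias
    bn_depthwise = 4 * c_in           # gamma, beta, moving mean, moving variance
    pointwise = c_in * c_out + c_out  # 1x1 kernel + bias
    bn_pointwise = 4 * c_out
    return depthwise + bn_depthwise + pointwise + bn_pointwise


removed = 5 * block_params(512, 512)
full_total = 3250058  # mobilenet_slim_n, from model.summary()
print(f"removed by shallow=True: {removed:,}")
print(f"remaining:               {full_total - removed:,}")
```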

Result analysis:

Test accuracy and test loss

Speed improves substantially, at some cost in accuracy.

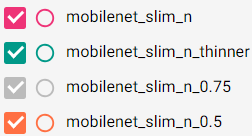

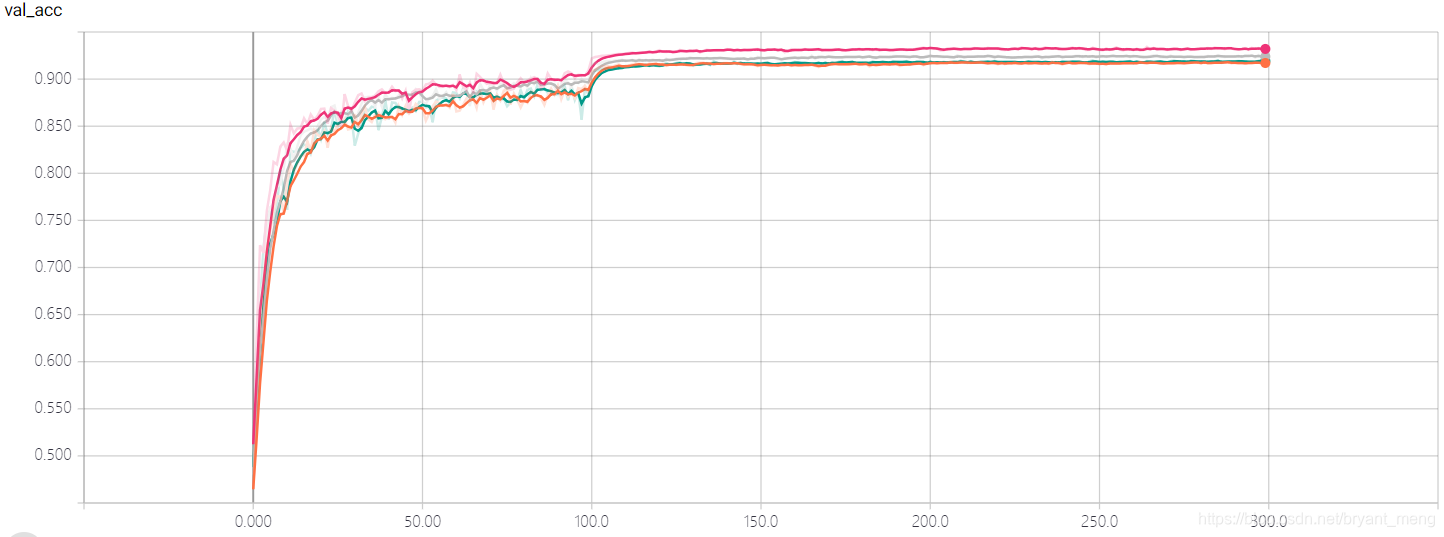

2.6 mobilenet_slim_n_0.75 / 0.5

Building on 2.4, we add the width multiplier hyperparameter from the paper, that is, alpha.

The modified code is:

- Set the hyperparameter (0.75 or 0.5)

alpha = 0.75

- Modify the depth-wise separable convolution

def depthwise_separable(x, params):
    # s1: stride of the depth-wise conv; f2: number of point-wise filters (scaled by alpha)
    (s1, f2) = params
    x = DepthwiseConv2D((3, 3), strides=(s1[0], s1[0]), padding='same',
                        depthwise_initializer="he_normal",
                        depthwise_regularizer=regularizers.l2(weight_decay))(x)
    x = BatchNormalization(momentum=0.9, epsilon=1e-5)(x)
    x = Activation('relu')(x)
    x = Conv2D(int(alpha * f2[0]), (1, 1), strides=(1, 1), padding='same',
               kernel_initializer="he_normal",
               kernel_regularizer=regularizers.l2(weight_decay))(x)
    x = BatchNormalization(momentum=0.9, epsilon=1e-5)(x)
    x = Activation('relu')(x)
    return x

- Modify the first convolution of the network

x = Conv2D(int(alpha * 32), (3, 3), strides=(1, 1), padding='same',
           kernel_initializer="he_normal",
           kernel_regularizer=regularizers.l2(weight_decay))(img_input)

The rest of the code is the same as mobilenet_slim_n.

Parameter count (reduced; the 0.75 model is close to mobilenet_slim_n_thinner):

- mobilenet (Total params: 3,250,058)
- mobilenet_n (Total params: 3,250,058)
- mobilenet_slim (Total params: 3,250,058)
- mobilenet_slim_n (Total params: 3,250,058)
- mobilenet_slim_n_thinner (Total params: 1,890,698)
- mobilenet_slim_n_0.75 (Total params: 1,848,874)
- mobilenet_slim_n_0.5 (Total params: 840,138)
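The paper notes that the width multiplier reduces the number of parameters roughly by a factor of alpha². The sketch below reproduces the totals for alpha = 1, 0.75, and 0.5 from the layer widths, assuming biased convolutions (the Keras default) and four BatchNormalization parameters per channel. `total_params` is a hypothetical helper, and widths are truncated with `int()` as in the modified code.

```python
BASE_WIDTHS = [64, 128, 128, 256, 256, 512] + [512] * 5 + [1024, 1024]


def total_params(alpha=1.0, classes=10):
    """Total parameter count of the network for a given width multiplier."""
    c_in = int(alpha * 32)
    total = 3 * 3 * 3 * c_in + c_in + 4 * c_in  # first conv + bias + BN
    for width in BASE_WIDTHS:
        c_out = int(alpha * width)
        total += 3 * 3 * c_in + c_in + 4 * c_in    # depth-wise conv + bias + BN
        total += c_in * c_out + c_out + 4 * c_out  # point-wise conv + bias + BN
        c_in = c_out
    return total + c_in * classes + classes        # final Dense layer


full = total_params(1.0)
for alpha in (1.0, 0.75, 0.5):
    n = total_params(alpha)
    print(f"alpha={alpha}: {n:,} params, {n / full:.2f} of full, alpha^2={alpha ** 2:.2f}")
```

The measured fraction sits slightly above alpha² because the depth-wise kernels, biases, and BatchNormalization parameters scale linearly with alpha rather than quadratically.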

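Result analysis:

Test accuracy and test loss

The results show that the 0.5 model and the thinner model are close in both accuracy and speed. This section is essentially a trade-off between latency and accuracy.

The paper compares 0.75 MobileNet with Shallow MobileNet (the counterpart of section 2.5 here) on ImageNet.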

3 Summary

mobilenet_slim_n achieves the highest accuracy.

Model size

Parameter count

- mobilenet (Total params: 3,250,058)
- mobilenet_n (Total params: 3,250,058)
- mobilenet_slim (Total params: 3,250,058)
- mobilenet_slim_n (Total params: 3,250,058)
- mobilenet_slim_n_thinner (Total params: 1,890,698)
- mobilenet_slim_n_0.75 (Total params: 1,848,874)
- mobilenet_slim_n_0.5 (Total params: 840,138)