What’s the Problem

- Fully connected layers do not take the spatial structure of an image into account when processing it.

- Complex, multi-channel images call for deeper networks. To improve accuracy, we usually add layers, which lets the network model more complicated functions. This comes at a cost: the number of parameters increases rapidly, which makes the model more prone to overfitting and prolongs training.
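To make the parameter growth concrete, here is a minimal sketch that counts weights and biases for a single fully connected layer and for a single convolutional layer. The helper names and the layer sizes (128 units, a 224x224 RGB input, 32 filters of size 3x3) are assumptions chosen only for illustration.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units


def conv2d_params(kernel_h, kernel_w, in_channels, n_filters):
    """Weights plus biases of a 2D convolutional layer."""
    return kernel_h * kernel_w * in_channels * n_filters + n_filters


# Hypothetical layer sizes, chosen only for illustration.
mnist_dense = dense_params(28 * 28 * 1, 128)   # 28x28 grayscale image
rgb_dense = dense_params(224 * 224 * 3, 128)   # 224x224 RGB image
rgb_conv = conv2d_params(3, 3, 3, 32)          # 3x3 kernel, 32 filters

print(mnist_dense)  # 100480
print(rgb_dense)    # 19267712
print(rgb_conv)     # 896
```

The dense layer's parameter count scales with the number of input pixels, while the convolutional layer's count depends only on the kernel size, the input channels, and the number of filters.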

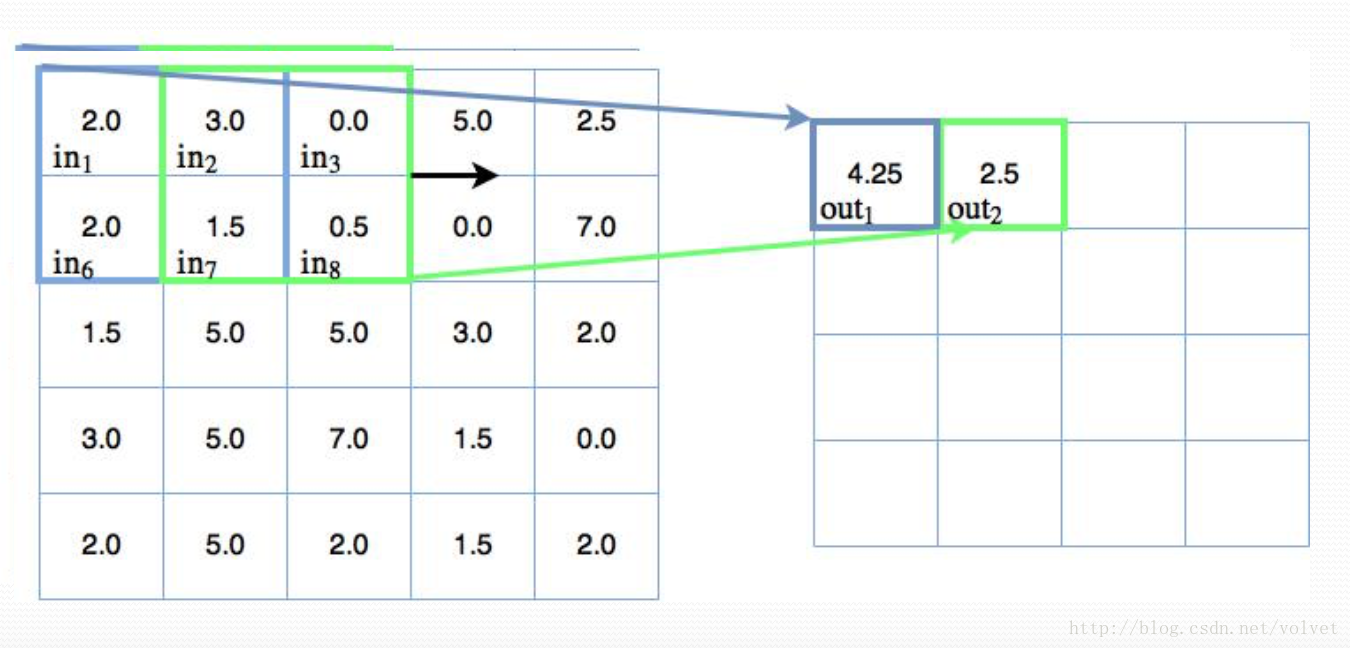

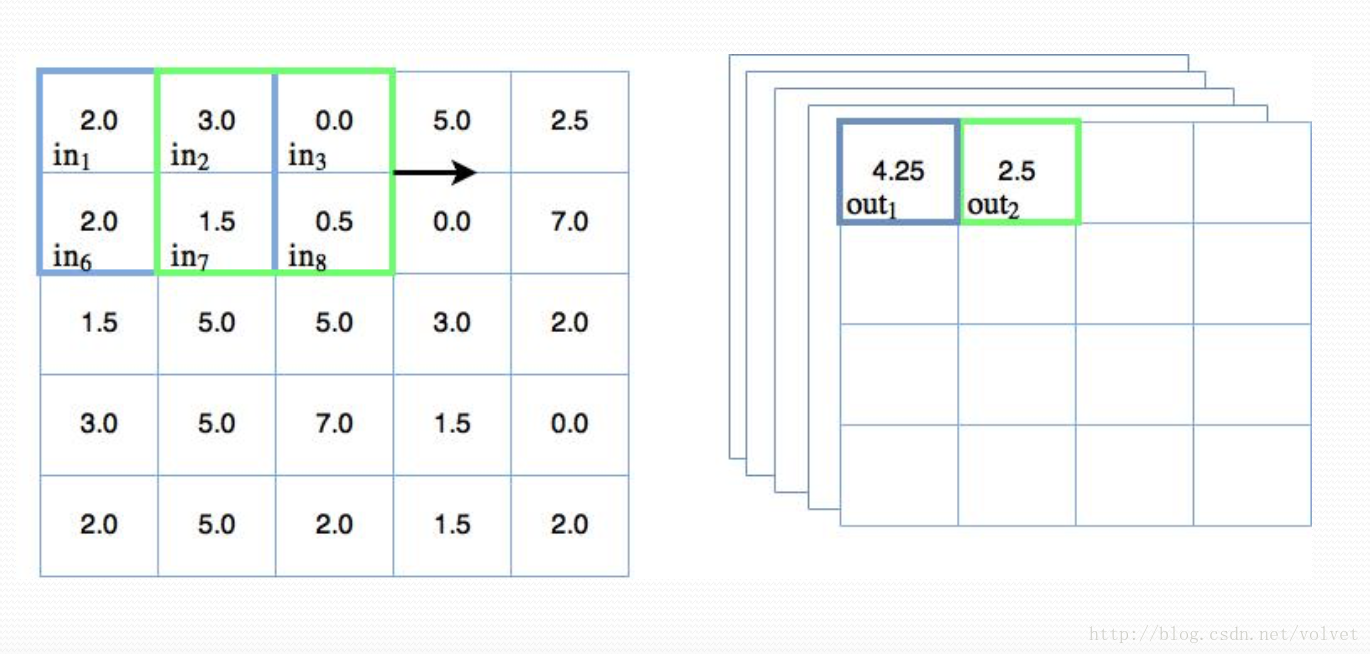

Convolution

Feature Mapping and Multiple Channels

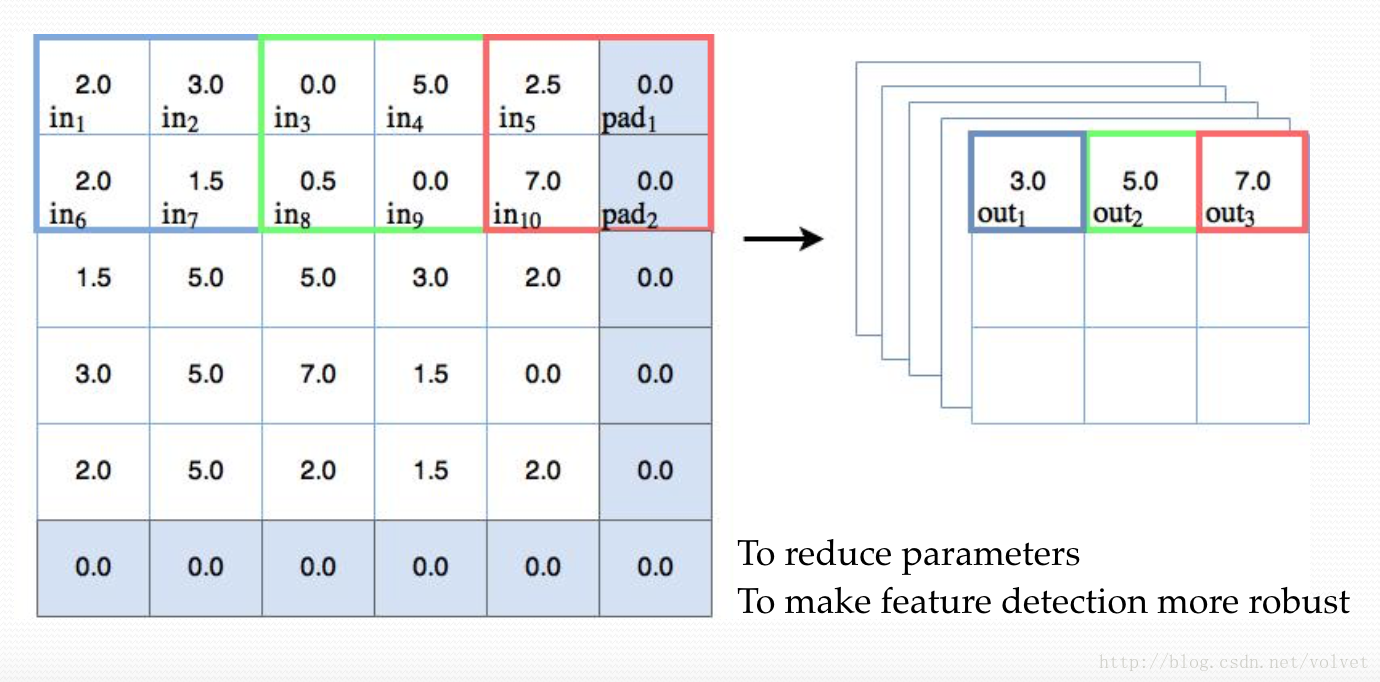
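As a rough illustration of how a filter produces a feature map, the sketch below slides a small kernel over a toy image with NumPy, then stacks the outputs of two filters into a two-channel result. The function name `conv2d_valid`, the toy image, and the kernels are assumptions made up for this example. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# Toy 4x4 single-channel image and two toy 2x2 filters (illustrative values).
image = np.arange(16, dtype=float).reshape(4, 4)
kernels = [
    np.array([[1.0, 0.0], [0.0, -1.0]]),   # diagonal difference
    np.array([[1.0, 1.0], [1.0, 1.0]]),    # local sum
]

# One feature map per filter, stacked along a trailing channel axis.
feature_maps = np.stack([conv2d_valid(image, k) for k in kernels], axis=-1)
print(feature_maps.shape)  # (3, 3, 2)
```

Each filter yields its own feature map, so a layer with 32 filters outputs 32 channels.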

Pooling

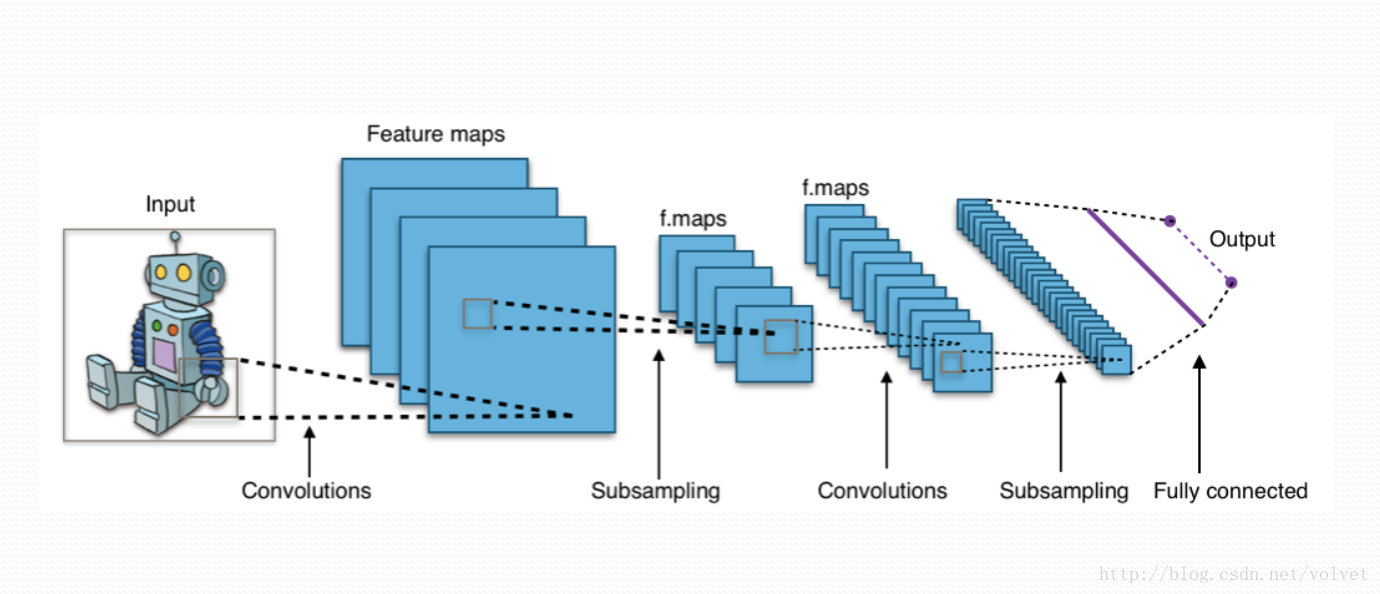
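A minimal NumPy sketch of 2x2 max pooling follows. It assumes non-overlapping windows and drops any trailing rows or columns that do not fill a window. The function name `max_pool2d` and the input values are hypothetical.

```python
import numpy as np


def max_pool2d(feature_map, pool=2):
    """Non-overlapping max pooling; incomplete edge windows are dropped."""
    h, w = feature_map.shape
    h_out, w_out = h // pool, w // pool
    trimmed = feature_map[:h_out * pool, :w_out * pool]
    # Split each axis into (window index, position inside the window).
    windows = trimmed.reshape(h_out, pool, w_out, pool)
    return windows.max(axis=(1, 3))


fm = np.array([[1, 3, 2, 0],
               [4, 2, 1, 5],
               [0, 1, 7, 2],
               [6, 2, 3, 4]])
pooled = max_pool2d(fm)
print(pooled)
# [[4 5]
#  [6 7]]
```

Pooling halves each spatial dimension here, which shrinks the input to later layers while keeping the strongest response in each window.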

The Final Picture
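To see how the pieces fit together, the sketch below traces the spatial size of a 28x28 MNIST image through the layers used in the sample model in this post: two unpadded 3x3 convolutions, one 2x2 max pooling, then flattening. The helper `conv_out` is a hypothetical name, and the trace assumes the Keras defaults of no padding and stride 1 for `Conv2D`.

```python
def conv_out(size, kernel, stride=1):
    """Output length of an unpadded ('valid') convolution or pooling window."""
    return (size - kernel) // stride + 1


side = 28                     # MNIST images are 28x28
side = conv_out(side, 3)      # Conv2D(32, 3x3)  -> 26
side = conv_out(side, 3)      # Conv2D(64, 3x3)  -> 24
side = conv_out(side, 2, 2)   # MaxPooling2D 2x2 -> 12
flat = side * side * 64       # Flatten over 64 channels -> 9216
print(side, flat)  # 12 9216
```

The flattened vector of 9216 values is what the first `Dense` layer receives.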

Sample Code

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

"""

Created on Thu Dec 7 09:43:49 2017

@author: volvetzhang

"""

import keras

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers import Dense, Dropout, Flatten

from keras.layers import Conv2D, MaxPooling2D

from keras import backend as K

import matplotlib.pyplot as plt

batch_size = 128

num_classes = 10

epochs = 12

(x_train, y_train), (x_test, y_test) = mnist.load_data()

img_rows = x_train.shape[1]

img_cols = x_train.shape[2]

# Add a channel axis in the position the backend expects.

if K.image_data_format() == 'channels_first':

    x_train = x_train.reshape(x_train.shape[0], 1, img_rows, img_cols)

    x_test = x_test.reshape(x_test.shape[0], 1, img_rows, img_cols)

    input_shape = (1, img_rows, img_cols)

else:

    x_train = x_train.reshape(x_train.shape[0], img_rows, img_cols, 1)

    x_test = x_test.reshape(x_test.shape[0], img_rows, img_cols, 1)

    input_shape = (img_rows, img_cols, 1)

x_train = x_train.astype('float32')

x_test = x_test.astype('float32')

x_train /= 255

x_test /= 255

y_train = keras.utils.to_categorical(y_train, num_classes)

y_test = keras.utils.to_categorical(y_test, num_classes)

print(x_train.shape[0], 'train samples')

print(x_test.shape[0], 'test samples')

model = Sequential()

model.add(Conv2D(32, kernel_size=(3,3), activation='relu', input_shape=input_shape))

model.add(Conv2D(64, kernel_size=(3,3), activation='relu'))

model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Dropout(0.25))

model.add(Flatten())

model.add(Dense(128, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(num_classes, activation='softmax'))

model.summary()

model.compile(loss=keras.losses.categorical_crossentropy,
              optimizer=keras.optimizers.Adadelta(),
              metrics=['accuracy'])

history = model.fit(x_train, y_train, batch_size=batch_size, epochs=epochs, verbose=1,
                    validation_data=(x_test, y_test))

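# The history key is 'acc' in older Keras releases and 'accuracy' in newer ones.

acc_key = 'acc' if 'acc' in history.history else 'accuracy'

plt.plot(history.history[acc_key])

plt.plot(history.history['val_' + acc_key])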

plt.title('MNIST Training')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

score = model.evaluate(x_test, y_test, verbose=0)

print('Test loss: ', score[0])

print('Test accuracy: ', score[1])

Reference

http://adventuresinmachinelearning.com/convolutional-neural-networks-tutorial-tensorflow/