Fields to scrape: company name, job title, link to the company details page, salary, and required years of work experience.

1. Define the fields to scrape in items.py

    import scrapy


    class ZhilianzhaopinItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        company_name = scrapy.Field()
        jobName = scrapy.Field()
        company_url = scrapy.Field()
        salary = scrapy.Field()
        workingExp = scrapy.Field()

2. The main spider

    # -*- coding: utf-8 -*-
    import json
    from urllib.parse import urlencode

    import scrapy

    from zhilianzhaopin.items import ZhilianzhaopinItem


    class ZlzpSpider(scrapy.Spider):
        name = 'zlzp'
        # allowed_domains = ['www.zhaopin.com']
        start_urls = ['https://fe-api.zhaopin.com/c/i/sou?']
        # Query parameters for the search API
        data = {
            'start': '0',
            'pageSize': '90',
            'cityId': '765',
            'kw': 'python',
            'kt': '3'
        }

        def start_requests(self):
            url = self.start_urls[0] + urlencode(self.data)
            yield scrapy.Request(url=url, callback=self.parse)

        def parse(self, response):
            # The API returns JSON; `count` is the total number of results
            payload = json.loads(response.text)
            total = int(payload['data']['count'])
            for res in payload['data']['results']:
                item = ZhilianzhaopinItem()
                item['company_name'] = res['company']['name']
                item['jobName'] = res['jobName']
                item['company_url'] = res['company']['url']
                item['salary'] = res['salary']
                item['workingExp'] = res['workingExp']['name']
                yield item
            # Request the remaining pages, 90 results at a time. Every response
            # schedules the same URLs again; Scrapy's duplicate filter drops the repeats.
            for start in range(90, total, 90):
                self.data['start'] = str(start)
                url = self.start_urls[0] + urlencode(self.data)
                yield scrapy.Request(url=url, callback=self.parse)
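
The pagination in `parse` steps the `start` parameter from 0 up to the total count in increments of 90. A minimal stand-alone sketch of that URL construction, using only the standard library, is shown here. The helper name `page_urls` and the sample total of 200 are hypothetical and chosen for illustration; the base URL and query parameters are the ones used by the spider.

```python
from urllib.parse import urlencode

BASE_URL = 'https://fe-api.zhaopin.com/c/i/sou?'
PAGE_SIZE = 90


def page_urls(total, page_size=PAGE_SIZE):
    """Return one search URL per page for `total` results (hypothetical helper)."""
    urls = []
    for start in range(0, total, page_size):
        params = {
            'start': str(start),          # offset of the first result on this page
            'pageSize': str(page_size),
            'cityId': '765',
            'kw': 'python',
            'kt': '3',
        }
        urls.append(BASE_URL + urlencode(params))
    return urls


if __name__ == '__main__':
    # 200 is a made-up total; the spider reads the real one from data.count
    for url in page_urls(200):
        print(url)
```

Building a fresh `params` dict per page, rather than mutating a shared one, keeps each URL independent of the order in which requests are generated.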

3. Set the User-Agent request header and enable the item pipeline in settings.py

    USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.71 Safari/537.36'
    ITEM_PIPELINES = {
        'zhilianzhaopin.pipelines.ZhilianzhaopinPipeline': 300,
    }

4. Create the database
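
The post does not show the table definition. As a rough sketch: the pipeline in step 5 inserts a null followed by five string values into a table named `zlzp`, so one possible layout is an auto-increment id plus five text columns. The column names, types, and lengths here are assumptions, as is the helper name `create_table`; only the table name and the column count come from the INSERT statement.

```python
# Column names and types are assumptions; only the table name `zlzp` and the
# column count (an id plus five text values) come from the INSERT statement.
CREATE_TABLE_SQL = """
CREATE TABLE IF NOT EXISTS zlzp (
    id INT PRIMARY KEY AUTO_INCREMENT,
    company_name VARCHAR(255),
    job_name VARCHAR(255),
    company_url VARCHAR(512),
    salary VARCHAR(64),
    working_exp VARCHAR(64)
) DEFAULT CHARSET = utf8mb4
"""


def create_table(conn):
    """Create the zlzp table on an open DB-API connection (hypothetical helper)."""
    cursor = conn.cursor()
    try:
        cursor.execute(CREATE_TABLE_SQL)
        conn.commit()
    finally:
        cursor.close()
```

With pymysql, `conn` would be the object returned by `pymysql.connect(...)` using the same host, user, password, and database as the pipeline. The `scrapy` database itself has to exist before connecting.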

5. Write to the database in pipelines.py

    import pymysql


    # Write items to a MySQL database
    class ZhilianzhaopinPipeline(object):
        conn = None
        mycursor = None

        def open_spider(self, spider):
            print('Connecting to the database...')
            self.conn = pymysql.connect(host='172.16.25.4', user='root', password='root', db='scrapy')
            self.mycursor = self.conn.cursor()

        def process_item(self, item, spider):
            print('Writing to the database...')
            # Pass the values as query parameters so the driver escapes
            # any quote characters in the scraped text
            sql = 'insert into zlzp VALUES (null, %s, %s, %s, %s, %s)'
            values = (
                item['company_name'],
                item['jobName'],
                item['company_url'],
                item['salary'],
                item['workingExp'],
            )
            self.mycursor.execute(sql, values)
            self.conn.commit()
            return item

        def close_spider(self, spider):
            print('Finished writing to the database...')
            self.mycursor.close()
            self.conn.close()
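
Scraped company names and job titles can contain quote characters, which matters when the values end up inside an SQL statement. A small stand-alone sketch of how parameter binding stores such values intact follows. It swaps in the standard-library `sqlite3` module as a stand-in for MySQL so that it runs without a server; `sqlite3` uses `?` placeholders where pymysql uses `%s`. The helper name `insert_job` and the sample row are made up for illustration.

```python
import sqlite3


def insert_job(conn, row):
    """Insert one (company_name, jobName, company_url, salary, workingExp) row."""
    # The values travel separately from the SQL text, so quotes need no escaping
    conn.execute('INSERT INTO zlzp VALUES (NULL, ?, ?, ?, ?, ?)', row)
    conn.commit()


# In-memory database standing in for the MySQL table
conn = sqlite3.connect(':memory:')
conn.execute(
    'CREATE TABLE zlzp (id INTEGER PRIMARY KEY, company_name TEXT, '
    'jobName TEXT, company_url TEXT, salary TEXT, workingExp TEXT)'
)

# Made-up sample data containing both kinds of quote character
sample = (
    'Acme "Labs"',
    "Python developer (O'Reilly team)",
    'https://example.com/acme',
    '10K-15K',
    '1-3 years',
)
insert_job(conn, sample)

stored = conn.execute('SELECT company_name, jobName FROM zlzp').fetchone()
print(stored)
```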

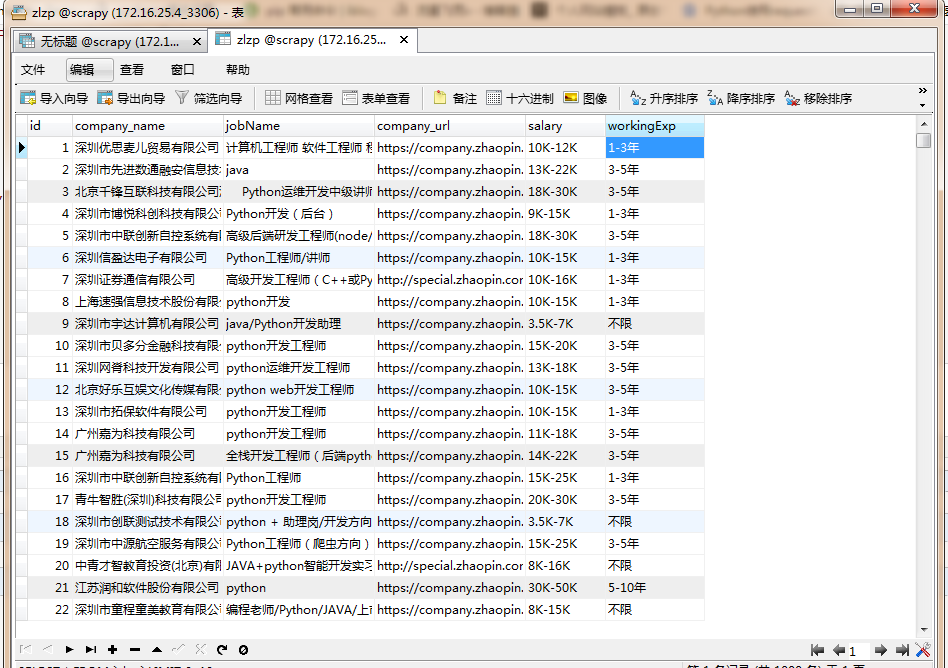

6. Check that the data was written successfully

Done.