Contents

- The GoogLeNet paper

- TensorFlow code analysis

- Summary

The GoogLeNet paper

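GoogLeNet won the classification task of ILSVRC14, the 2014 ImageNet competition. VGG competed in the same year and finished as runner-up. The top-5 error rates of the two networks are in fact close. GoogLeNet's architecture is more complex than VGG's: it is a 22-layer network, and it introduces the Inception structure, which was a major step forward.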

The paper is titled "Going deeper with convolutions". Like VGG, it follows the idea that networks should be made deeper. The name GoogLeNet is a tribute to LeNet, the pioneering CNN.

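1. Introduction

The authors spend much of the paper describing what classification and detection networks had achieved at the time and the problems they faced, and then lay out their own ideas for improving on them.

The most direct way to improve a deep neural network is to make it bigger, in both depth and width. Increasing depth means adding layers; increasing width means adding units to each layer. But a bigger network brings two problems: 1. More parameters make the network more prone to overfitting, which can lower accuracy instead of raising it. 2. The amount of computation grows sharply, and if some of the trained weights end up at zero, that computation is wasted, which matters when computing resources are limited. So the goal is to increase depth and width while reducing the number of parameters and the amount of computation.

In principle, the solution is to replace fully connected structures with sparsely connected ones. However, existing computing infrastructure is very inefficient at non-uniform sparse computation, so the authors propose their own approach.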

2. The Inception module

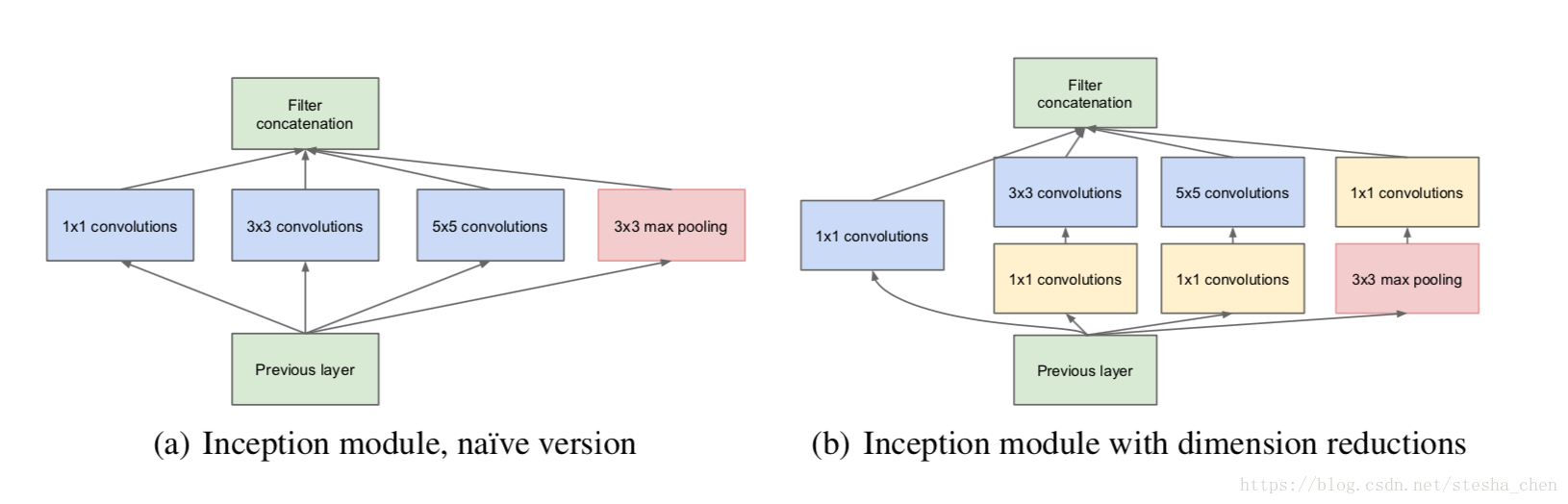

The figure above shows the two versions of the Inception module proposed by the authors; version (b) is an improvement on version (a).

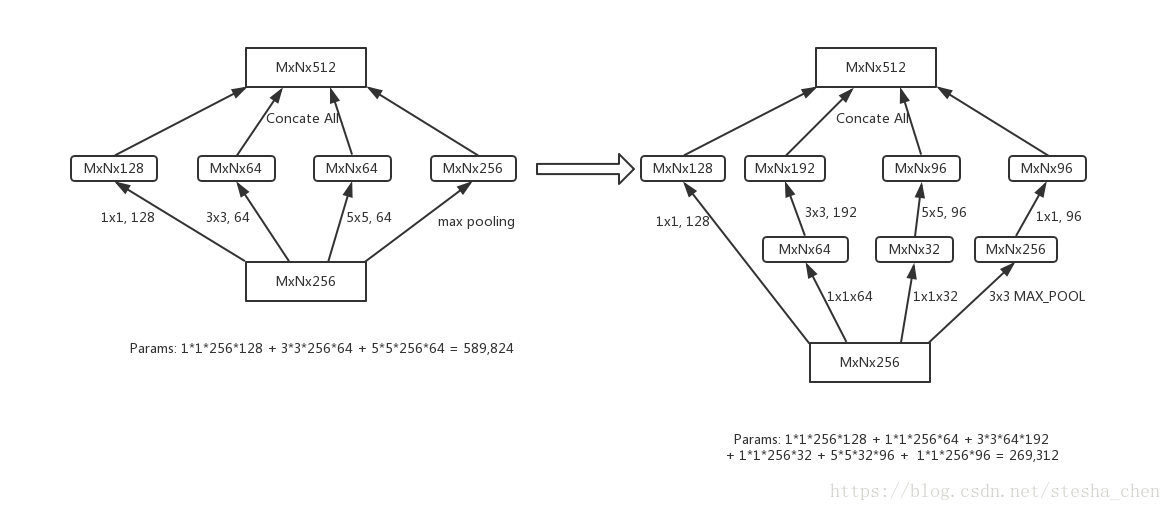

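Start with version (a). The paper describes it as using readily available dense components to approximate and cover an optimal local sparse structure. My understanding is that this module aggregates the information from four parallel paths, and that it needs fewer parameters than connecting everything through a plain 3x3 Conv. Why would the parameter count go down? The accompanying figure gives an example (for illustration only, not the real configuration).

The authors then observed that although the parameter count does drop, it does not drop by much, and when the input has many channels the count is still large. This led to version (b). Compared with version (a), version (b) applies a 1x1 Conv for dimensionality reduction before each 3x3 or 5x5 Conv, which reduces the parameter count again. Because each 1x1 Conv is also followed by a nonlinear activation, the network gains extra nonlinearity as well. The accompanying figure shows how the parameter count changes (for illustration only, not the real configuration).

In short, the Inception module makes it possible to increase both the depth and the width of the network without a blow-up in computation, and its sparse-style connectivity also helps reduce overfitting.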
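The effect of the 1x1 reduction can be checked with simple arithmetic. The sketch below counts convolution weights (biases ignored) for one 3x3 branch, using the channel counts that appear in the first Inception module of the code later in this post (192 input channels, a 96-channel 1x1 reduction, 128 output channels). The helper name `conv_params` is made up for this illustration.

```python
def conv_params(kernel, in_channels, out_channels):
    """Number of weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * in_channels * out_channels


# Channel counts of the 3x3 branch of the first Inception module.
in_ch, reduce_ch, out_ch = 192, 96, 128

# A 3x3 convolution applied directly to the 192-channel input.
direct = conv_params(3, in_ch, out_ch)

# A 1x1 reduction to 96 channels followed by the 3x3 convolution.
reduced = conv_params(1, in_ch, reduce_ch) + conv_params(3, reduce_ch, out_ch)

print("direct 3x3:", direct)             # 221184
print("1x1 reduce then 3x3:", reduced)   # 129024
```

For this branch the reduction removes a little over 40% of the weights, and the saving grows as the input gets more channels.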

3. Network architecture

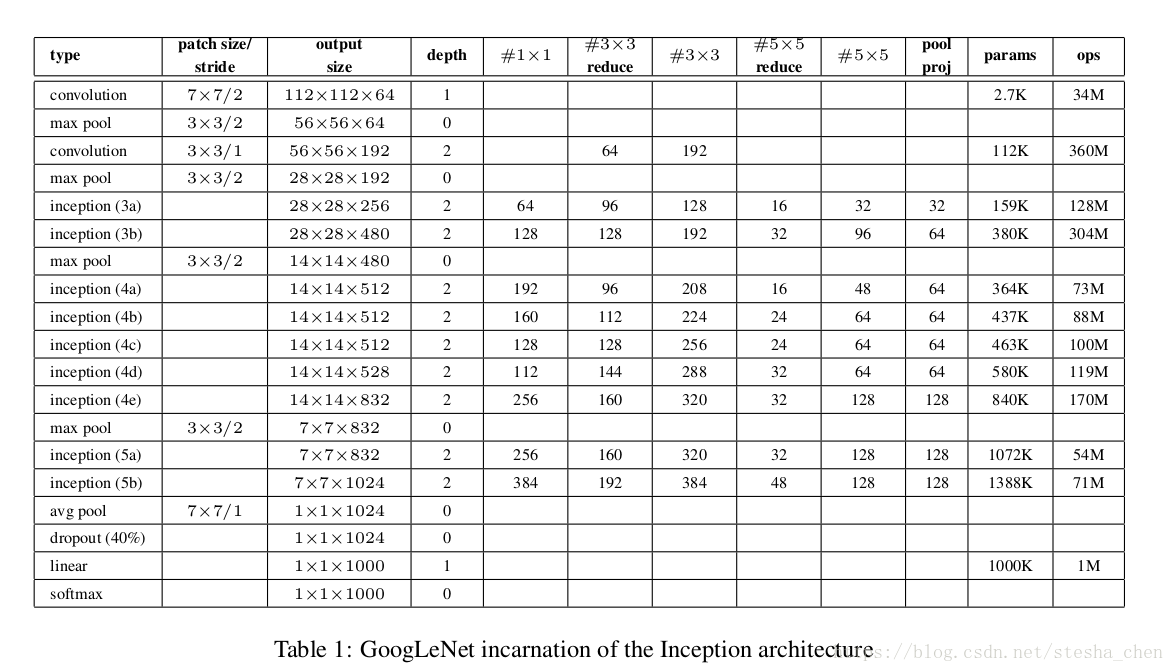

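The network architecture given in the paper is shown in the figure:

- The input is a 224x224x3 RGB image with the per-channel mean subtracted (the same as VGG)

- #3x3 reduce is the number of channels of the 1x1 Conv placed before the 3x3 Conv; likewise, #5x5 reduce is the number of channels of the 1x1 Conv placed before the 5x5 Conv

- pool proj is the number of channels of the 1x1 Conv that follows the max pooling inside an Inception module

- Counting only layers with parameters, the network is 22 layers deep; every convolutional layer uses ReLU activation, including the convolutions inside the Inception modules

- The authors found that removing the final FC layers in favor of average pooling improved accuracy (about 0.6% top-1 in the paper), but the dropout that follows still had to be kept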

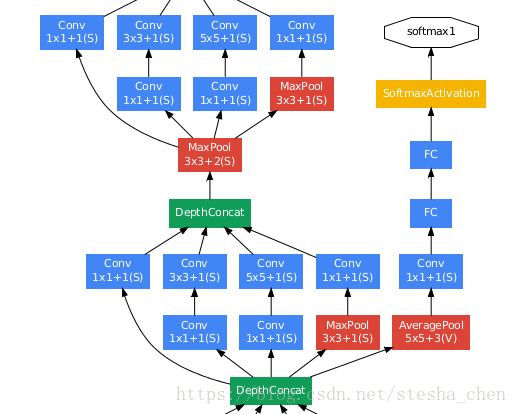

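The authors were also concerned that in a network this deep, gradients would not propagate back effectively through all the layers. Since the middle layers of the network already carry a good deal of discriminative information, they attached a small auxiliary network after inception(4a) and after inception(4d), each with its own softmax output. During training, the losses of these auxiliary classifiers are added to the loss of the final softmax with a weight of 0.3, so the total training loss is loss(softmax2) + 0.3 * loss(softmax1) + 0.3 * loss(softmax0).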
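In the paper the 0.3 weight is applied to the losses of the auxiliary classifiers during training, not to their predictions. The sketch below shows that combination in plain Python. The function names and the three-class softmax outputs are made up for illustration; the real network has 1000 classes.

```python
import math


def cross_entropy(probs, label):
    """Negative log-likelihood of the true class under a softmax output."""
    return -math.log(probs[label])


def total_loss(main_probs, aux_probs_list, label, aux_weight=0.3):
    """Main loss plus each auxiliary loss scaled by aux_weight."""
    loss = cross_entropy(main_probs, label)
    for aux_probs in aux_probs_list:
        loss += aux_weight * cross_entropy(aux_probs, label)
    return loss


# Made-up softmax outputs over 3 classes; the true class is index 0.
softmax2 = [0.7, 0.2, 0.1]   # final classifier
softmax1 = [0.5, 0.3, 0.2]   # auxiliary head after inception(4d)
softmax0 = [0.4, 0.4, 0.2]   # auxiliary head after inception(4a)

print(total_loss(softmax2, [softmax0, softmax1], label=0))
```

Passing an empty list of auxiliary outputs gives the main loss alone, which matches inference, where the auxiliary heads are dropped.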

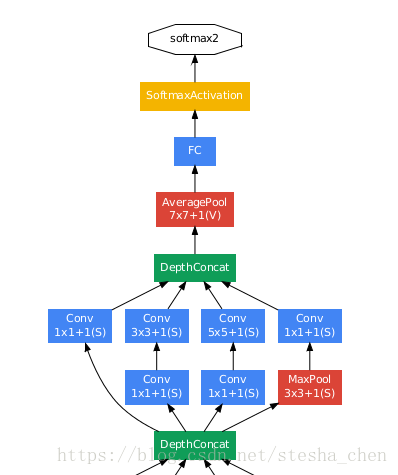

The softmax at inception(4a)

The softmax at inception(4d)

The softmax at the final output

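Notes on the small networks at softmax0 and softmax1:

- The average pooling has a 5x5 filter size and a stride of 3, giving a 4x4x512 output at inception(4a) and a 4x4x528 output at inception(4d)

- The 1x1 Conv has 128 filters and is followed by ReLU activation

- The FC layer has 1024 units and is followed by ReLU activation

- Dropout drops 70% of the units

- The softmax classifies over 1000 classes; the auxiliary networks are removed at prediction time

Training uses stochastic gradient descent with a momentum of 0.9, and the learning rate is decreased by 4% every 8 epochs.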
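The layer list above can be traced shape by shape. This is a minimal sketch, assuming the auxiliary heads receive a 14x14 feature map (the size the paper's table gives for inception(4a) and inception(4d)) and that the average pooling uses no padding. The function name `aux_head_shapes` is hypothetical.

```python
def aux_head_shapes(height, width, channels, num_classes=1000):
    """Trace the output shape of each layer of an auxiliary classifier."""
    # 5x5 average pooling with stride 3 and no padding.
    pooled_h = (height - 5) // 3 + 1
    pooled_w = (width - 5) // 3 + 1
    return [
        ("avg_pool", (pooled_h, pooled_w, channels)),
        ("conv_1x1", (pooled_h, pooled_w, 128)),   # 128 filters, then ReLU
        ("fc", (1024,)),                            # 1024 units, then ReLU
        ("dropout", (1024,)),                       # shape unchanged
        ("softmax", (num_classes,)),
    ]


for name, shape in aux_head_shapes(14, 14, 512):
    print(name, shape)
```

With 528 input channels instead of 512, only the first shape changes, to (4, 4, 528).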
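One way to read "decreased by 4% every 8 epochs" is a staircase schedule that multiplies the rate by 0.96 once every 8 epochs. The sketch below assumes that reading. The base rate of 0.01 is a placeholder, since no starting value is given here.

```python
def learning_rate(epoch, base_lr=0.01, decay=0.96, step=8):
    """Staircase schedule: multiply the rate by `decay` once every `step` epochs."""
    return base_lr * decay ** (epoch // step)


for epoch in (0, 7, 8, 16, 80):
    print(epoch, learning_rate(epoch))
```

The rate is constant within each 8-epoch window and falls to 96% of its previous value at the start of the next one.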

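TensorFlow code analysis

Below we compare the code with the network table shown above to understand the network better. The Inception structure is implemented mainly in the function inception_v1_base, which, like the table in the paper, is organized block by block.

1. The first block corresponds to the first row of the paper's architecture table. The filter size is 7x7, there are 64 filters, the stride is 2, and, as the slim.arg_scope shows, the padding defaults to SAME.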

    end_point = 'Conv2d_1a_7x7'
    net = slim.conv2d(inputs, 64, [7, 7], stride=2, scope=end_point)

With SAME padding, TensorFlow automatically works out how much padding is needed so that the output size is the input size divided by the stride (rounded up), so the output here is 112x112. With VALID padding the output would be 109x109, computed as floor((224 - 7) / 2) + 1 = 109.
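The two padding rules can be written as small helpers. This sketch uses the standard formulas for one spatial dimension; the function names are made up for illustration.

```python
import math


def same_output_size(input_size, stride):
    """Output size along one dimension with SAME padding."""
    return math.ceil(input_size / stride)


def valid_output_size(input_size, kernel, stride):
    """Output size along one dimension with VALID (no) padding."""
    return (input_size - kernel) // stride + 1


print(same_output_size(224, 2))        # 112
print(valid_output_size(224, 7, 2))    # 109
```

With SAME padding the kernel size does not affect the output size, which is why every stride-2 layer in this network simply halves the feature map.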

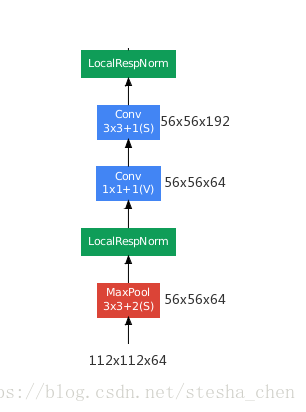

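2. The second block has three parts, a, b and c, corresponding to the second and third rows of the paper's architecture table. The third row has a depth of 2, so it contains two convolution layers: the 1x1 convolution given by #3x3 reduce, which precedes the 3x3 convolution, and the 3x3 convolution itself.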

    end_point = 'MaxPool_2a_3x3'
    net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
    ...
    end_point = 'Conv2d_2b_1x1'
    net = slim.conv2d(net, 64, [1, 1], scope=end_point)
    ...
    end_point = 'Conv2d_2c_3x3'
    net = slim.conv2d(net, 192, [3, 3], scope=end_point)

The implementation appears to skip the LocalRespNorm layers.

3. The third block also has three parts, a, b and c, corresponding to the fourth, fifth and sixth rows of the paper's architecture table.

end_point = 'MaxPool_3a_3x3'

net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)

end_points[end_point] = net

if final_endpoint == end_point: return net, end_points

end_point = 'Mixed_3b'

with tf.variable_scope(end_point):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 96, [1, 1], scope='Conv2d_0a_1x1')

branch_1 = slim.conv2d(branch_1, 128, [3, 3], scope='Conv2d_0b_3x3')

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 16, [1, 1], scope='Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 32, [3, 3], scope='Conv2d_0b_3x3')

with tf.variable_scope('Branch_3'):

branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 32, [1, 1], scope='Conv2d_0b_1x1')

net = tf.concat(

axis=3, values=[branch_0, branch_1, branch_2, branch_3])

end_points[end_point] = net

if final_endpoint == end_point: return net, end_points

end_point = 'Mixed_3c'

with tf.variable_scope(end_point):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch_1 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')

branch_1 = slim.conv2d(branch_1, 192, [3, 3], scope='Conv2d_0b_3x3')

with tf.variable_scope('Branch_2'):

branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')

branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')

with tf.variable_scope('Branch_3'):

branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')

branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')

net = tf.concat(

axis=3, values=[branch_0, branch_1, branch_2, branch_3])

end_points[end_point] = net

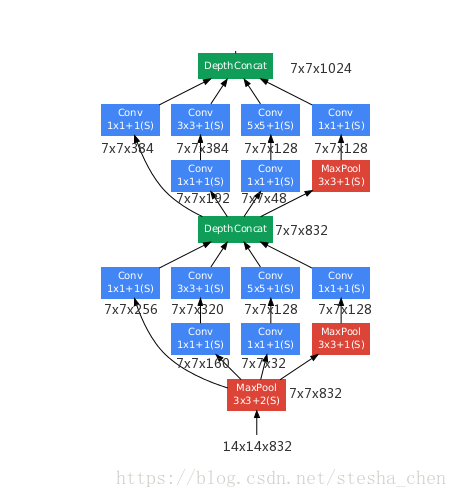

if final_endpoint == end_point: return net, end_points4. 下面是第四个block,有a,b,c,d,e,f这六个部分,对应论文网络结构表的7-12行。

    end_point = 'MaxPool_4a_3x3'
    net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
    end_points[end_point] = net
    if final_endpoint == end_point: return net, end_points

    end_point = 'Mixed_4b'
    with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 96, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 208, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 16, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 48, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(
            axis=3, values=[branch_0, branch_1, branch_2, branch_3])
    end_points[end_point] = net
    if final_endpoint == end_point: return net, end_points

    end_point = 'Mixed_4c'
    with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 112, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 224, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 24, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 64, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(
            axis=3, values=[branch_0, branch_1, branch_2, branch_3])
    end_points[end_point] = net
    if final_endpoint == end_point: return net, end_points

    end_point = 'Mixed_4d'
    with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 256, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 24, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 64, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(
            axis=3, values=[branch_0, branch_1, branch_2, branch_3])
    end_points[end_point] = net
    if final_endpoint == end_point: return net, end_points

    end_point = 'Mixed_4e'
    with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 112, [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 144, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 288, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 64, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(
            axis=3, values=[branch_0, branch_1, branch_2, branch_3])
    end_points[end_point] = net
    if final_endpoint == end_point: return net, end_points

    end_point = 'Mixed_4f'
    with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 256, [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 320, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 128, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 128, [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(
            axis=3, values=[branch_0, branch_1, branch_2, branch_3])
    end_points[end_point] = net
    if final_endpoint == end_point: return net, end_points

5. The fifth block has three parts, a, b and c, corresponding to rows 13-15 of the paper's architecture table.

    end_point = 'MaxPool_5a_2x2'
    net = slim.max_pool2d(net, [2, 2], stride=2, scope=end_point)
    end_points[end_point] = net
    if final_endpoint == end_point: return net, end_points

    end_point = 'Mixed_5b'
    with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 256, [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 320, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 128, [3, 3], scope='Conv2d_0a_3x3')
        with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 128, [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(
            axis=3, values=[branch_0, branch_1, branch_2, branch_3])
    end_points[end_point] = net
    if final_endpoint == end_point: return net, end_points

    end_point = 'Mixed_5c'
    with tf.variable_scope(end_point):
        with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 384, [1, 1], scope='Conv2d_0a_1x1')
        with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 384, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 128, [3, 3], scope='Conv2d_0b_3x3')
        with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 128, [1, 1], scope='Conv2d_0b_1x1')
        net = tf.concat(
            axis=3, values=[branch_0, branch_1, branch_2, branch_3])
    end_points[end_point] = net
    if final_endpoint == end_point: return net, end_points
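Every Mixed_* module ends with a tf.concat along axis 3, which is the channel axis if the tensors are laid out as NHWC (an assumption here). The output depth of a module is therefore the sum of its four branch depths. The sketch below tabulates the branch widths from the slim.conv2d calls above and adds them up; the dictionary and function names are made up for illustration.

```python
# Final output channels of the four branches of each Inception module,
# copied from the slim.conv2d calls in the code above.
BRANCH_CHANNELS = {
    "Mixed_3b": (64, 128, 32, 32),
    "Mixed_3c": (128, 192, 96, 64),
    "Mixed_4b": (192, 208, 48, 64),
    "Mixed_4c": (160, 224, 64, 64),
    "Mixed_4d": (128, 256, 64, 64),
    "Mixed_4e": (112, 288, 64, 64),
    "Mixed_4f": (256, 320, 128, 128),
    "Mixed_5b": (256, 320, 128, 128),
    "Mixed_5c": (384, 384, 128, 128),
}


def output_depth(branches):
    """Concatenating along the channel axis adds up the branch depths."""
    return sum(branches)


for name, branches in BRANCH_CHANNELS.items():
    print(name, output_depth(branches))
```

Mixed_4b comes out at 512 channels and Mixed_4e at 528, matching the 4x4x512 and 4x4x528 auxiliary inputs quoted earlier for inception(4a) and inception(4d). This suggests the code's names are shifted by one letter relative to the paper, because the max pooling layer takes the "a" slot in each block.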

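With the main network in place, inception_v1 implements the final layers, corresponding to the last four rows of the paper's architecture table: avg_pool, dropout, linear (implemented as a convolution) and softmax (prediction_fn is a function argument that defaults to slim.softmax).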

    # Pooling with a fixed kernel size.
    net = slim.avg_pool2d(net, [7, 7], stride=1, scope='AvgPool_0a_7x7')
    end_points['AvgPool_0a_7x7'] = net
    ...
    net = slim.dropout(net, dropout_keep_prob, scope='Dropout_0b')
    logits = slim.conv2d(net, num_classes, [1, 1], activation_fn=None,
                         normalizer_fn=None, scope='Conv2d_0c_1x1')
    ...
    end_points['Predictions'] = prediction_fn(logits, scope='Predictions')

All intermediate outputs are stored in end_points, which makes fine-tuning easier for users.
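The linear layer can be written as a 1x1 convolution because, once the 7x7 average pooling has reduced the feature map to a single spatial position (assuming a 7x7 input to the pooling and no padding), a 1x1 convolution applies the same weight matrix that a fully connected layer would. The pure-Python sketch below illustrates this with toy sizes; the function names and values are made up, and biases are left out.

```python
def linear(features, weights):
    """Fully connected layer: features (C,) times weights (C x K) -> (K,)."""
    num_classes = len(weights[0])
    return [sum(f * w[k] for f, w in zip(features, weights))
            for k in range(num_classes)]


def conv_1x1(feature_map, weights):
    """1x1 convolution over an H x W x C feature map, one position at a time."""
    return [[linear(pixel, weights) for pixel in row] for row in feature_map]


# Toy sizes: 4 channels and 3 classes instead of 1024 and num_classes.
features = [0.5, -1.0, 2.0, 0.0]
weights = [[1.0, 0.0, 2.0],
           [0.0, 1.0, 0.0],
           [0.5, 0.5, 0.5],
           [3.0, 3.0, 3.0]]

feature_map = [[features]]                  # shape 1 x 1 x 4, as after pooling
logits_conv = conv_1x1(feature_map, weights)[0][0]
logits_fc = linear(features, weights)

print(logits_conv)                # [1.5, 0.0, 2.0]
print(logits_conv == logits_fc)   # True
```

One practical consequence of the convolutional form is that the same weights can still be applied if the feature map is larger than 1x1, producing one set of logits per spatial position.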

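Summary

GoogLeNet, also known as Inception V1, introduced the Inception block, which lets the network grow wider and deeper while keeping the number of parameters under control. Using 1x1 Conv for dimensionality reduction and replacing fully connected layers with average pooling are the key design choices of the paper, and they laid the groundwork for the later v2, v3 and v4.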