Model Prediction Calibration: Isotonic Regression

Method overview:

Isotonic Regression: the method used by Zadrozny and Elkan (2002; 2001) to calibrate predictions from boosted Naive Bayes, SVM, and decision tree models.[1]

Zadrozny and Elkan (2002; 2001) successfully used a more general method based on Isotonic Regression (Robertson et al., 1988) to calibrate predictions from SVMs, Naive Bayes, boosted Naive Bayes, and decision trees. This method is more general in that the only restriction is that the mapping function be isotonic (monotonically increasing).[1]

Isotonic regression is a non-parametric approach.

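Let the model's prediction be $f_i$ and the true target be $y_i$. The basic assumption of Isotonic Regression is then:

$$y_i = m(f_i) + \epsilon_i$$

where $m$ is an isotonic (monotonically increasing) function.

Given a training set $(f_i, y_i)$, the Isotonic Regression problem is to find the isotonic function $\hat{m}$ such that [1]

$$\hat{m} = \arg\min_{z} \sum_i \left(y_i - z(f_i)\right)^2$$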

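One algorithm for solving Isotonic Regression is the pair-adjacent violators algorithm (PAV for short). Its time complexity is O(N) once the samples are sorted by predicted value. The main idea is to repeatedly merge and adjust local intervals that violate monotonicity until every remaining interval satisfies it. PAV is also the solver used by the isotonic regression module in scikit-learn. For an animated illustration of the algorithm, see [2].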
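
The merge-and-adjust idea can be sketched in a few lines of pure Python. This is a minimal illustration, not scikit-learn's implementation: the function name `pav` and the toy labels are hypothetical, and the labels are assumed to be already sorted by the model's predicted score.

```python
def pav(y, w=None):
    """Least-squares non-decreasing fit to y (y must be ordered by predicted score)."""
    if w is None:
        w = [1.0] * len(y)
    # Each block stores [weighted mean, total weight, number of points].
    blocks = []
    for yi, wi in zip(y, w):
        blocks.append([float(yi), float(wi), 1])
        # Merge backwards while the last two blocks violate monotonicity.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, n2 = blocks.pop()
            m1, w1, n1 = blocks.pop()
            total = w1 + w2
            blocks.append([(m1 * w1 + m2 * w2) / total, total, n1 + n2])
    # Expand the blocks back to one fitted value per input point.
    fitted = []
    for mean, _, n in blocks:
        fitted.extend([mean] * n)
    return fitted


# Hypothetical binary labels, ordered by increasing predicted score.
labels = [0, 1, 0, 0, 1, 1]
fitted = pav(labels)
# The violating run 1, 0, 0 is pooled into a single block with mean 1/3.
```

Each point is appended once and each merge removes one block, which is why the pass over sorted data is linear in N.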

One algorithm that finds a stepwise constant solution for the Isotonic Regression problem is pair-adjacent violators (PAV) algorithm (Ayer et al., 1955) presented in Table 1.[1]
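
In practice the fit is usually delegated to a library. The sketch below uses scikit-learn's `IsotonicRegression` on synthetic data; the data-generating rule (true probability equal to the squared raw score) and all variable names are assumptions made purely for illustration.

```python
import numpy as np
from sklearn.isotonic import IsotonicRegression

rng = np.random.default_rng(0)

# Synthetic, hypothetical data: the raw score overstates the true
# probability, which is assumed here to be raw_score ** 2.
raw_scores = rng.uniform(0.0, 1.0, size=2000)
labels = (rng.uniform(0.0, 1.0, size=2000) < raw_scores ** 2).astype(float)

# Fit a non-decreasing map from raw score to calibrated probability.
calibrator = IsotonicRegression(y_min=0.0, y_max=1.0, out_of_bounds="clip")
calibrator.fit(raw_scores, labels)

# Apply the learned map to new scores.
grid = np.linspace(0.0, 1.0, 11)
calibrated = calibrator.predict(grid)
```

On the training points the fitted values are stepwise constant; for unseen scores scikit-learn interpolates linearly between the fitted points, so `calibrated` is non-decreasing but not necessarily a pure step function.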

Application workflow:

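For CTR prediction, feature selection may produce many fine-grained features. Each feature value then covers only a few impressions, so the click-through rate computed directly as clicks/impressions can be very inaccurate.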
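
A small numeric sketch shows why sparse buckets are a problem. The click and impression counts below are hypothetical, and the binomial standard error is used only as a rough measure of how noisy each estimate is.

```python
import math


def ctr_with_standard_error(clicks, impressions):
    """Return the empirical CTR and its binomial standard error."""
    ctr = clicks / impressions
    se = math.sqrt(ctr * (1.0 - ctr) / impressions)
    return ctr, se


# Hypothetical buckets with the same empirical CTR of 2%.
coarse = ctr_with_standard_error(clicks=200, impressions=10_000)
fine = ctr_with_standard_error(clicks=1, impressions=50)
```

Both buckets report the same CTR, but the standard error of the fine-grained bucket is roughly as large as the estimate itself, so its raw clicks/impressions ratio carries little information.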

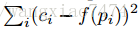

Reference [4] proposes an approximate method for solving for t(), based on the following objective.

The methods by Wang et al. [5] and Meyer [6] find a non-decreasing mapping function t() that minimizes:

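Here $c_i$ denotes the true label and $p_i$ the predicted probability output by the model. M is a parameter that controls the degree of smoothness, and a and b are the lower and upper bounds of the range of the input predictions.

In addition, to preserve the model's discriminative ability, the mapping function t() must be monotonically increasing.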

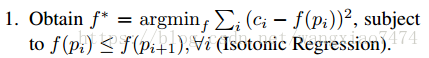

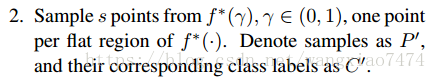

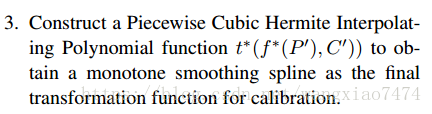

The algorithm proceeds as follows (Algorithm 1: Smooth Isotonic Regression):

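1. Apply isotonic regression to obtain a monotone, non-parametric function f() that minimizes $\sum_i \left(c_i - f(p_i)\right)^2$.

2. From the data mapped by the isotonic regression function, select s representative points and collect their predicted values and corresponding labels into two sets.

3. Interpolate the points sampled in step 2 with a Piecewise Cubic Hermite Interpolating Polynomial (PCHIP) to obtain a smooth monotone curve, and use that curve as the final calibration mapping.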
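
The three steps can be sketched with scikit-learn and SciPy. This is an illustrative sketch under stated assumptions, not the reference implementation from [4]: the function name `fit_smooth_isotonic` is hypothetical, the representative points are assumed to be the midpoints of the constant blocks of the isotonic fit, and the synthetic data are made up.

```python
import numpy as np
from scipy.interpolate import PchipInterpolator
from sklearn.isotonic import IsotonicRegression


def fit_smooth_isotonic(p, c):
    """Return a smooth, monotone calibration map for predictions p and labels c."""
    p = np.asarray(p, dtype=float)
    c = np.asarray(c, dtype=float)
    order = np.argsort(p)
    p, c = p[order], c[order]

    # Step 1: isotonic regression yields a monotone step function f().
    iso = IsotonicRegression(y_min=0.0, y_max=1.0, out_of_bounds="clip")
    fitted = iso.fit(p, c).predict(p)

    # Step 2 (assumed sampling rule): one representative point per constant
    # block, placed at the midpoint of the block's score range.
    starts = np.flatnonzero(np.r_[True, np.diff(fitted) > 1e-12])
    ends = np.r_[starts[1:], len(p)]
    xs = (p[starts] + p[ends - 1]) / 2.0
    ys = fitted[starts]

    # PCHIP needs at least two points; fall back to a constant map otherwise.
    if len(xs) < 2:
        return lambda q: np.full(np.shape(q), ys[0])

    # Step 3: monotonicity-preserving cubic interpolation through the points.
    pchip = PchipInterpolator(xs, ys)
    # Clip queries to the sampled range so the map stays flat outside it.
    return lambda q: pchip(np.clip(q, xs[0], xs[-1]))


# Hypothetical synthetic data: true probability assumed to be score ** 2.
rng = np.random.default_rng(0)
scores = rng.uniform(0.0, 1.0, size=500)
clicks = (rng.uniform(0.0, 1.0, size=500) < scores ** 2).astype(float)

calibrate = fit_smooth_isotonic(scores, clicks)
smoothed = calibrate(np.linspace(0.0, 1.0, 101))
```

PCHIP is a natural fit for step 3 because it does not overshoot monotone data, so the interpolated curve remains non-decreasing and stays within the range of the sampled values.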

In theory, this method is smoother than isotonic regression and more flexible than sigmoid regression.

When to use:

Isotonic Regression is a more powerful calibration method that can correct any monotonic distortion. Unfortunately, this extra power comes at a price. A learning curve analysis shows that Isotonic Regression is more prone to overfitting, and thus performs worse than Platt Scaling, when data is scarce.[1]

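Isotonic regression places no requirements on the characteristics of the model's output beyond the monotonicity of the mapping.

It suits settings with plenty of samples; with few samples, isotonic regression tends to overfit.

Isotonic Regression is often used as an auxiliary step alongside other methods, to repair non-smooth calibration results caused by data sparsity.[7]

Microsoft used Isotonic Regression to calibrate the CTR prediction model described in [3].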

References:

[1] Alexandru Niculescu-Mizil and Rich Caruana. Predicting Good Probabilities With Supervised Learning. ICML 2005.

[2] https://en.wikipedia.org/wiki/Isotonic_regression

[3] Thore Graepel, et al. Web-Scale Bayesian Click-Through Rate Prediction for Sponsored Search Advertising in Microsoft’s Bing Search Engine. ICML 2010.

[4] Jiang X, Osl M, Kim J, Ohno-Machado L. Smooth Isotonic Regression: A New Method to Calibrate Predictive Models. AMIA Summits on Translational Science Proceedings. 2011;2011:16-20.

[5] X. Wang and F. Li. Isotonic smoothing spline regression. J Comput Graph Stat, 17(1):21–37, 2008.

[6] M. C. Meyer. Inference using shape-restricted regression splines. Annals of Applied Statistics, 2(3):1013–1033, 2008.

[7] Prediction Model Result Calibration (预测模型结果校准). https://sensirly.github.io/prediction-model-calibration/