Abstract

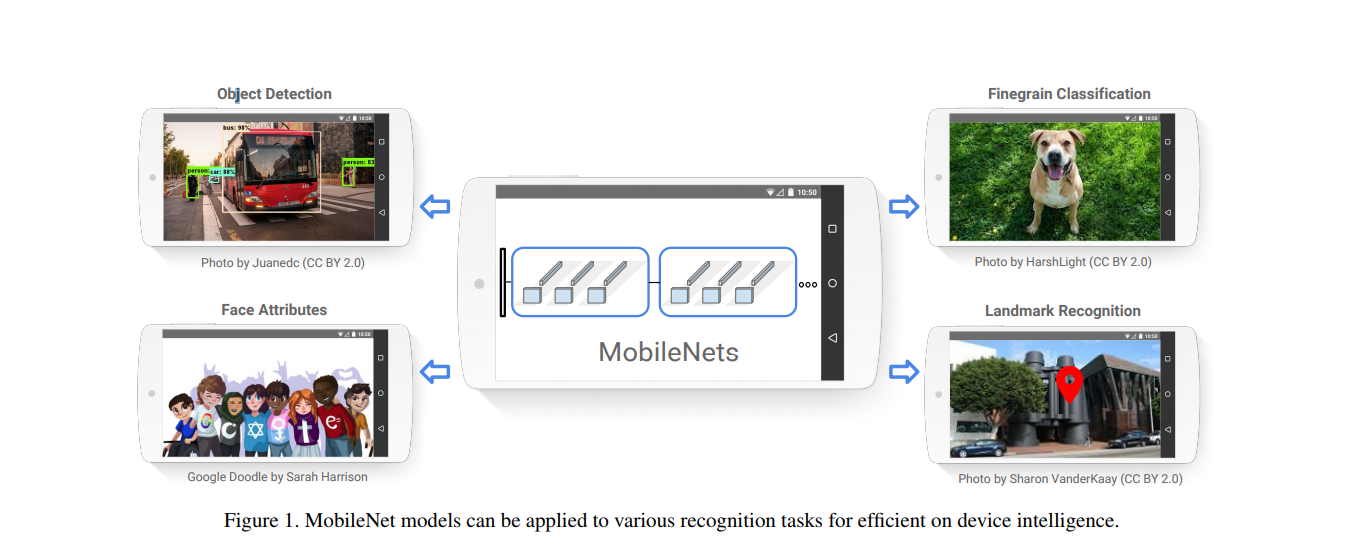

We present a class of efficient models called MobileNets for mobile and embedded vision applications. MobileNets are based on a streamlined architecture that uses depthwise separable convolutions to build lightweight deep neural networks. We introduce two simple global hyper-parameters that efficiently trade off between latency and accuracy. These hyper-parameters allow the model builder to choose the right-sized model for their application based on the constraints of the problem. We present extensive experiments on resource and accuracy tradeoffs and show strong performance compared to other popular models on ImageNet classification. We then demonstrate the effectiveness of MobileNets across a wide range of applications and use cases including object detection, fine-grained classification, face attributes and large-scale geolocalization.

我们提出一种高效的模型叫做移动网络,为移动和嵌入版使用。MobileNets基于一个流线型结构,使用深度可分离的卷积来构建轻权重的深度神经网络。我们引入两个简单的全局参数,有效地权衡了延迟和精度。这个超参数允许模型生成器来根据问题的限制来U型安泽合适大小的应用程序模型。我们提出了大量的资源和准确性权衡实验,和其他流行的模型相比在ImageNet分类上有更强大的性能。然后,我们证明了MobilNets在广泛应用中的有效性,包括物体检测,细粒分类,人脸属性和大尺度的地理定位。

1. Introduction

Convolutional neural networks have become ubiquitous in computer vision ever since AlexNet [19] popularized deep convolutional neural networks by winning the ImageNet Challenge: ILSVRC 2012 [24]. The general trend has been to make deeper and more complicated networks in order to achieve higher accuracy [27, 31, 29, 8]. However, these advances in accuracy do not necessarily make networks more efficient with respect to size and speed. In many real-world applications such as robotics, self-driving cars and augmented reality, recognition tasks need to be carried out in a timely fashion on a computationally limited platform.

自从AlexNet赢得了ImageNet挑战:ILSVRC 2012后深度卷积网络得到普及,现在计算机视觉中,卷积神经网络普遍存在。正常的趋势是把网络变得更深,更复杂为了得到更高的准确率。然而,这些精度的提高并不一定能使网络在规模和速度上更有效。在许多现实应用中,比如机器人学,自动驾驶汽车和增强现实,识别任务需要在及时并计算有限的平台上执行。

This paper describes an efficient network architecture and a set of two hyper-parameters in order to build very small, low-latency models that can be easily matched to the design requirements for mobile and embedded vision applications. Section 2 reviews prior work in building small models. Section 3 describes the MobileNet architecture and two hyper-parameters, width multiplier and resolution multiplier, that define smaller and more efficient MobileNets. Section 4 describes experiments on ImageNet as well as a variety of different applications and use cases. Section 5 closes with a summary and conclusion.

这个论文描述了一个有效的网络结构合一套两个参数为了建立非常小的,低延时的模型能够更容易匹配移动和嵌入式应用的设计需求。第二部分回顾了在建立小模型上的之前的工作。第三部分描述了MobileNet结构和两个超参数宽度系数和分辨率系数来定义更小更高效的MobileNets。第四部分介绍了ImageNet上的实验,同时还有一些不同的应用和使用场景。第五部分做了总结。

2. Prior Work

There has been rising interest in building small and efficient neural networks in the recent literature, e.g. [16, 34, 12, 36, 22]. Many different approaches can be generally categorized into either compressing pretrained networks or training small networks directly. This paper proposes a class of network architectures that allows a model developer to specifically choose a small network that matches the resource restrictions (latency, size) for their application. MobileNets primarily focus on optimizing for latency but also yield small networks. Many papers on small networks focus only on size but do not consider speed.

最近的一些文献越来越对建立小而高效的神经网络感兴趣。许多不同的方法基本分类为,压缩与训练网络或者直接训练小的网络。这些论文提出一种网络结构让模型开发者更专门为他们的应用选择匹配资源(延时,规模)限制的小的网络。MobileNets主要专注于优化延时,但也是小的网络。许多文献在小的网络只聚焦于尺寸,而忽略了速度。

MobileNets are built primarily from depthwise separable convolutions, initially introduced in [26] and subsequently used in Inception models [13] to reduce the computation in the first few layers. Flattened networks [16] build a network out of fully factorized convolutions and showed the potential of extremely factorized networks. Independent of this current paper, Factorized Networks [34] introduces a similar factorized convolution as well as the use of topological connections. Subsequently, the Xception network [3] demonstrated how to scale up depthwise separable filters to outperform Inception V3 networks. Another small network is Squeezenet [12], which uses a bottleneck approach to design a very small network. Other reduced-computation networks include structured transform networks [28] and deep fried convnets [37].

MobileNets主要从深度可分离的卷积建立。深度可分离卷积先是在[26]中提出,然后使用在Inception模型中来减少前几层的计算量。扁平化网络建立的网络一个网络不完全分解卷积,并呈极分解网络的潜力。独立于现存文献,分解网络引入了一个相似的分解卷积和拓扑链接的使用。随后,Xception网络证明如何扩展深度方向可分离滤波器执行Inception V3网络。另一个小型网络是Squeezenet,使用了bottleneck方法来设计一个非常小的网络。其他减少了计算量的网络包括结构化的传递网络和deep fried convnets。

A different approach for obtaining small networks is shrinking, factorizing or compressing pretrained networks. Compression based on product quantization [36], hashing [2], and pruning, vector quantization and Huffman coding [5] have been proposed in the literature. Additionally, various factorizations have been proposed to speed up pretrained networks [14, 20]. Another method for training small networks is distillation [9], which uses a larger network to teach a smaller network. It is complementary to our approach and is covered in some of our use cases in Section 4. Another emerging approach is low-bit networks [4, 22, 11].

另一个获得小网络的方法是收缩,分解或压缩一个预训练模型。基于产品量化,哈希和剪枝,向量量化和霍夫曼编码的压缩在文献里被提出。另外的被提出的多种分解方法也是为了提速预训练网络。另一些训练小网络的方法是蒸馏,使用一个更大的网络来教小的网络。它与我们的方法是互补的,在第四部分我们的使用场景中也被提到。另一种新兴的方法是低比特网络。

3. MobileNet Architecture

In this section we first describe the core layers that MobileNet is built on, which are depthwise separable filters. We then describe the MobileNet network structure and conclude with descriptions of the two model-shrinking hyper-parameters: width multiplier and resolution multiplier.

在这个部分,我们首先介绍核心层,MobileNet建立的深度可分离滤波器。然后我们介绍MobileNet网络结构并描述两个模型收缩超参数,宽度系数和分辨率系数。

3.1 Depthwise Separable Convolution

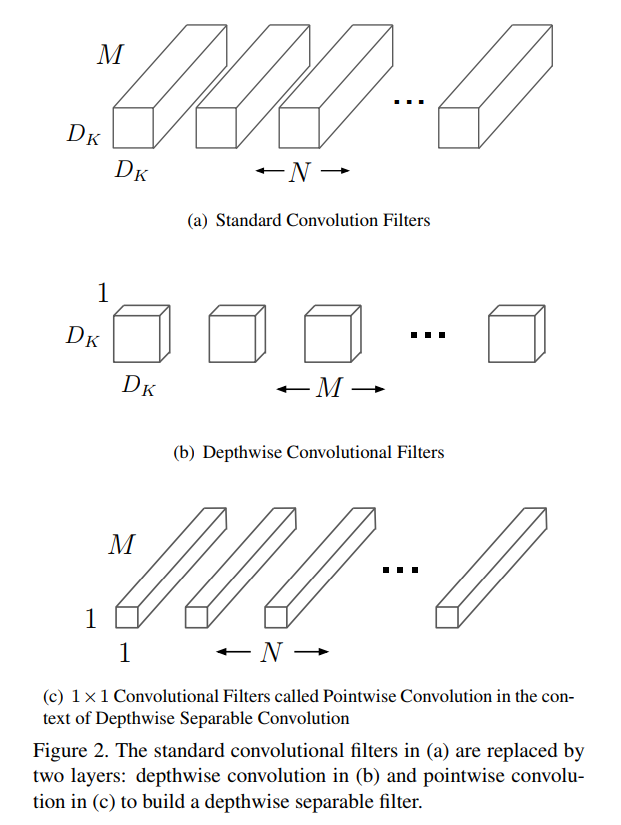

The MobileNet model is based on depthwise separable convolutions, a form of factorized convolution that factorizes a standard convolution into a depthwise convolution and a 1×1 convolution called a pointwise convolution. For MobileNets the depthwise convolution applies a single filter to each input channel. The pointwise convolution then applies a 1×1 convolution to combine the outputs of the depthwise convolution. A standard convolution both filters and combines inputs into a new set of outputs in one step. The depthwise separable convolution splits this into two layers, a separate layer for filtering and a separate layer for combining. This factorization has the effect of drastically reducing computation and model size. Figure 2 shows how a standard convolution 2(a) is factorized into a depthwise convolution 2(b) and a 1 × 1 pointwise convolution 2(c).

MobileNet模型基于深度可分离卷积。深度可分离卷积是一种分解卷积的形式,它把一个标准卷积分解成深度卷积和一个叫做点卷积的1 x 1卷积。对于MobileNets深度卷积对每个输入通道应用一个单独的滤波器。点卷积然后用1 x 1卷积来结合深度卷积的输出。一个标准的卷积滤波器和一步结合输入到一套新的输出。深度可分离卷积把这个分成两层,一个分来的层来滤波,另一个做结合。这种分解具有大幅减少计算量和模型大小的效果。图2显示了一个标准卷积2(a)被分解为深度卷积2(b)和1 x 1点卷积。

A standard convolutional layer takes as input a $D_F × D_F × M$ feature map F and produces a $D_G × D_G × N$ feature map G, where $D_F$ is the spatial width and height of a square input feature map, M is the number of input channels (input depth), $D_G$ is the spatial width and height of a square output feature map and N is the number of output channels (output depth). With stride one and padding, as assumed in the formulas that follow, $D_G = D_F$.

一个标准的卷积层输入是DF x DF x M的特征图生成DF x DF x N的特征图G,其中,DF是空间宽高方形的输入特征图,M是输入通道的个数,DG是方形输出特征图的空间宽高,N是输出的通道数。

The standard convolutional layer is parameterized by a convolution kernel K of size $D_K × D_K × M × N$, where $D_K$ is the spatial dimension of the kernel (assumed to be square), M is the number of input channels and N is the number of output channels, as defined previously.

一个标准卷积层参数化为,尺寸为DK x DK x M x N的卷积核K,DK是假设为方形的核的空间维度,M是输入通道的数量,N是之前定义的输出通道的个数。

The output feature map for standard convolution assuming stride one and padding is computed as:

标准卷积的输出特征图假设步长为1,padding计算为:

$$G_{k,l,n} = \sum_{i,j,m}K_{i,j,m,n} · F_{k+i-1,l+j-1,m}$$

Standard convolutions have the computational cost of:

标准卷积计算量为:

$$D_K·D_K·M·N·D_F·D_F$$

where the computational cost depends multiplicatively on the number of input channels M, the number of output channels N, the kernel size $D_K × D_K$ and the feature map size $D_F × D_F$. MobileNet models address each of these terms and their interactions. First, they use depthwise separable convolutions to break the interaction between the number of output channels and the size of the kernel.

其中,计算成本依赖于输入通道M,输出通道N,核大小Dk x Dk和特征图大小DF x DF的乘积。MobileNet模型解决这些术语以及他们之间的相互作用。首先,它使用了深度可分离卷积来打破输出通道和核大小的相互作用。

The standard convolution operation has the effect of filtering features based on the convolutional kernels and combining features in order to produce a new representation. The filtering and combination steps can be split into two steps via the use of factorized convolutions called depthwise separable convolutions for substantial reduction in computational cost.

标准卷积操作具有基于卷积核的特征滤波和组合特征,为了生成新的表示。滤波和组合的步骤通过使用被称为深度可分离的分解卷积能够分成两步,大幅减少了计算量。

Depthwise separable convolutions are made up of two layers: depthwise convolutions and pointwise convolutions. We use depthwise convolutions to apply a single filter to each input channel (input depth). Pointwise convolution, a simple 1×1 convolution, is then used to create a linear combination of the output of the depthwise layer. MobileNets use both batchnorm and ReLU nonlinearities for both layers.

深度可分离卷积由两层组成,深度卷积核点卷积。我们使用深度方向卷积来对每一个输入通道(输入深度)应用一个单独的滤波器。点卷积,一个简单的额1 x 1卷积,之后被用作创造一个深度层的输出的线性组合。MobileNets对两个层都使用batchnorm和ReLU非线性。

Depthwise convolution with one filter per input channel (input depth) can be written as:

每个输入通道的一个滤波器的深度方向卷积可以写成:

$$\hat{G}_{k,l,m} = \sum_{i,j}\hat{K}_{i,j,m}·F_{k+i-1,l+j-1,m}$$

where $\hat{K}$ is the depthwise convolutional kernel of size $D_K × D_K × M$, in which the m-th filter of $\hat{K}$ is applied to the m-th channel of F to produce the m-th channel of the filtered output feature map $\hat{G}$.

其中K^是尺寸为DK x DK x M的深度卷积核,其中K^应用到F中第m个通道来产生滤波后的特征图G^的第m通道。
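The per-channel filtering in the depthwise formula can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function name `depthwise_conv` and the nested-list layout are assumptions made for illustration, indices are zero-based (so `k + i` replaces `k + i - 1`), and for brevity it uses no padding, whereas the text assumes padding.

```python
def depthwise_conv(F, K):
    """Depthwise convolution with stride 1 and no padding (illustrative only).

    F: input feature map as nested lists, indexed F[row][col][channel].
    K: depthwise kernel, indexed K[i][j][channel] (one filter per channel).
    Returns G with G[k][l][m] = sum over i, j of K[i][j][m] * F[k+i][l+j][m].
    """
    d_f, d_k, channels = len(F), len(K), len(F[0][0])
    d_g = d_f - d_k + 1  # output size shrinks because no padding is applied
    G = [[[0.0] * channels for _ in range(d_g)] for _ in range(d_g)]
    for k in range(d_g):
        for l in range(d_g):
            for m in range(channels):
                # Channel m is filtered only by filter m; channels never mix.
                G[k][l][m] = sum(
                    K[i][j][m] * F[k + i][l + j][m]
                    for i in range(d_k)
                    for j in range(d_k)
                )
    return G


# 3x3 input with 2 channels: channel 0 is all ones, channel 1 is all twos.
F = [[[1.0, 2.0] for _ in range(3)] for _ in range(3)]
# 2x2 kernel: filter 0 sums its window, filter 1 averages its window.
K = [[[1.0, 0.25] for _ in range(2)] for _ in range(2)]
G = depthwise_conv(F, K)
print(G[0][0])  # [4.0, 2.0]
```

Each output channel depends on exactly one input channel, which is why a separate 1 × 1 layer is still needed to combine channels.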

Depthwise convolution has a computational cost of:

深度卷积的计算量为:

$$D_K·D_K·M·D_F·D_F$$

Depthwise convolution is extremely efficient relative to standard convolution. However, it only filters input channels; it does not combine them to create new features. An additional layer that computes a linear combination of the output of the depthwise convolution via a 1 × 1 convolution is therefore needed in order to generate these new features.

深度方向卷积相对标准卷积非常高效。然而,它只对输入通道滤波,并不组合他们来创造新的特征。所以,额外的层计算深度卷积的输出的线性组合,通过1 x 1卷积为了生成这些新的特征。

The combination of depthwise convolution and 1 × 1 (pointwise) convolution is called depthwise separable convolution which was originally introduced in [26].

深度卷积的组合和1 x 1点卷积被叫做可分离卷积,在[26]中最早被引入。

Depthwise separable convolutions cost:

深度可分离卷积成本:

$$D_K·D_K·M·D_F·D_F+M·N·D_F·D_F$$

which is the sum of the depthwise and 1 × 1 pointwise convolutions. By expressing convolution as a two step process of filtering and combining we get a reduction in computation of:

这个是1 x 1点卷积的总和。通过表示卷积为滤波和组合的两个阶段我们得到计算上的减少:

$$\frac{D_K·D_K·M·D_F·D_F+M·N·D_F·D_F}{D_K·D_K·M·N·D_F·D_F} = \frac{1}{N} + \frac{1}{D_K^2}$$

MobileNet uses 3 × 3 depthwise separable convolutions, which use 8 to 9 times less computation than standard convolutions at only a small reduction in accuracy, as seen in Section 4. Additional factorization in the spatial dimension, such as in [16, 31], does not save much additional computation because very little computation is spent in depthwise convolutions.

Figure 2. The standard convolutional filters in (a) are replaced by two layers: depthwise convolution in (b) and pointwise convolution in (c) to build a depthwise separable filter.

MobileNet使用3x3深度可分离卷积,计算量比标准卷积缩减8到9倍,只有很小的准确率的损失,可以再第4部分看到。另外在空间维度上的分解如[16,31]中,并不省太多额外的计算量,小的计算都是在深度方向的卷积。图2。标准卷积滤波器在(a)中被两个层替代:深度卷积在(b)和点卷积在(c)构建深度可分离滤波器。
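As a quick numerical check of the reduction formula, the sketch below evaluates both cost expressions for one layer and compares their ratio with the closed form 1/N + 1/D_K². The function names are hypothetical, and the layer size (3 × 3 kernel, 512 input and output channels, 14 × 14 feature map) is chosen only as an example.

```python
def standard_conv_cost(d_k, m, n, d_f):
    """Mult-adds of a standard convolution: D_K * D_K * M * N * D_F * D_F."""
    return d_k * d_k * m * n * d_f * d_f


def separable_conv_cost(d_k, m, n, d_f):
    """Mult-adds of a depthwise separable convolution (depthwise + pointwise)."""
    depthwise = d_k * d_k * m * d_f * d_f
    pointwise = m * n * d_f * d_f
    return depthwise + pointwise


# Example layer (illustrative values).
d_k, m, n, d_f = 3, 512, 512, 14

ratio = separable_conv_cost(d_k, m, n, d_f) / standard_conv_cost(d_k, m, n, d_f)
closed_form = 1 / n + 1 / d_k ** 2

print(f"{ratio:.4f}")      # 0.1131
print(f"{1 / ratio:.2f}")  # 8.84
```

For a 3 × 3 kernel the 1/D_K² term dominates, so the saving approaches 9 times as N grows, which agrees with the 8 to 9 times stated in the text.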

3.2 Network Structure and Training

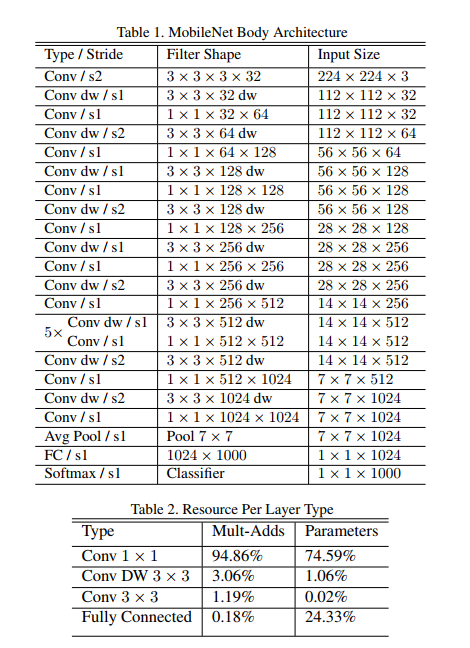

The MobileNet structure is built on depthwise separable convolutions as mentioned in the previous section except for the first layer which is a full convolution. By defining the network in such simple terms we are able to easily explore network topologies to find a good network. The MobileNet architecture is defined in Table 1. All layers are followed by a batchnorm [13] and ReLU nonlinearity with the exception of the final fully connected layer which has no nonlinearity and feeds into a softmax layer for classification. Figure 3 contrasts a layer with regular convolutions, batchnorm and ReLU nonlinearity to the factorized layer with depthwise convolution, 1 × 1 pointwise convolution as well as batchnorm and ReLU after each convolutional layer. Down sampling is handled with strided convolution in the depthwise convolutions as well as in the first layer. A final average pooling reduces the spatial resolution to 1 before the fully connected layer. Counting depthwise and pointwise convolutions as separate layers, MobileNet has 28 layers.

MobileNet结构构造在在之前部分提到的深度可分离卷积,除了第一个全卷积层。通过简单的术语定义网络,我们能够简单探索网络拓扑来找到一个好的网络。MobileNet结构在Table1中定义。所有层都跟着一个batchnorm和ReLu非线性除了最后的没有非线性的全连接层,后面接着softmax层来分类。图3对比了一个正常的卷积层,batchnorm和ReLU非线性和用深度卷积分解的二层,1 x1 点卷积和每个卷积层后的batchnorm,ReLU。降采样通过在深度卷积和第一层做跨步卷积来实现。最后平均池化层减少空间分辨率到1,之后一个全连接层。把深度层和点卷积分开计数,MobileNet一共28层。

It is not enough to simply define networks in terms of a small number of Mult-Adds. It is also important to make sure these operations can be implemented efficiently. For instance, unstructured sparse matrix operations are not typically faster than dense matrix operations until a very high level of sparsity. Our model structure puts nearly all of the computation into dense 1 × 1 convolutions. These can be implemented with highly optimized general matrix multiply (GEMM) functions. Convolutions are often implemented by a GEMM but require an initial reordering in memory, called im2col, in order to map them to a GEMM. For instance, this approach is used in the popular Caffe package [15]. 1 × 1 convolutions do not require this reordering in memory and can be implemented directly with GEMM, which is one of the most optimized numerical linear algebra algorithms. MobileNet spends 95% of its computation time in 1 × 1 convolutions, which also contain 75% of the parameters, as can be seen in Table 2. Nearly all of the additional parameters are in the fully connected layer.

多加了几个小数量并不能简单定义网络。确定这些操作能够被有效实现也很重要。比如未结构化的稀疏矩阵操作并不比密集的矩阵速度快。我们的模型结构几乎把所有计算都花在密集的1 x 1卷积上。高度优化的一般矩阵乘法方程可以来实现。通常,卷积通过一个GEMM来实现,但也需要在内存上初始的重新排序叫做im2col来为GEMM寻址。比如,这个方法用在最流行的Caffe包里。1 x 1卷积不需要这个在内存上重新排序,能够直接实现GEMM,这是一个最优化数值线性代数算法。MobileNet花费了95%的计算时间在1x1卷积上,75%个1x1卷积参数可以再Table2上看到。几乎额外的参数都在全连接层了。
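To make the GEMM argument concrete, here is a small sketch showing that a 1 × 1 convolution is one matrix product between the flattened feature map and the weight matrix. The function name and data layout are assumptions for illustration, and a real implementation would call an optimized GEMM routine rather than Python loops.

```python
def pointwise_conv_as_matmul(F, W):
    """1x1 convolution written as a single matrix product (illustrative only).

    F: feature map indexed F[row][col][m] with M input channels.
    W: weight matrix indexed W[m][n], mapping M input to N output channels.
    """
    height, width = len(F), len(F[0])
    m_in, n_out = len(W), len(W[0])
    # Flatten the spatial positions into rows of a (height * width) x M matrix.
    # This is only a reshape: no patches are gathered or copied, unlike the
    # im2col step that larger kernels need before a GEMM.
    rows = [pixel for row in F for pixel in row]
    # Plain matrix product: (height * width) x M times M x N.
    out_rows = [
        [sum(pixel[m] * W[m][n] for m in range(m_in)) for n in range(n_out)]
        for pixel in rows
    ]
    # Restore the spatial layout: height x width x N.
    return [out_rows[r * width:(r + 1) * width] for r in range(height)]


F = [[[1, 2], [3, 4]],
     [[5, 6], [7, 8]]]       # 2 x 2 feature map with M = 2 channels
W = [[1, 0, 1],
     [0, 1, 1]]              # M = 2 inputs, N = 3 outputs
G = pointwise_conv_as_matmul(F, W)
print(G[1][0])  # [5, 6, 11]
```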

MobileNet models were trained in TensorFlow [1] using RMSprop [33] with asynchronous gradient descent similar to Inception V3 [31]. However, contrary to training large models, we use less regularization and fewer data augmentation techniques because small models have less trouble with overfitting. When training MobileNets we do not use side heads or label smoothing, and we additionally reduce the amount of image distortion by limiting the size of the small crops that are used in large Inception training [31]. Additionally, we found that it was important to put very little or no weight decay (l2 regularization) on the depthwise filters since there are so few parameters in them. For the ImageNet benchmarks in the next section, all models were trained with the same training parameters regardless of the size of the model.

MobileNet模型在TensorFlow上训练使用RMSprop和异步梯度下降类似于Inception V3.然而,相较于训练大模型,我们使用更少的正则化和数据增强技术,因为小模型有更少的过拟合问题。当训练MobileNet,我们不用侧头或标签平滑并通过限制小的用在大Inception训练的crops的尺寸,来减少失真的图像的数量。另外,我们发现在深度发现滤波器上放很少甚至没有的权重衰减(l2 正则化)很重要,因此他们放了很少的参数。对于ImageNet benchmarks在下一部分,所有的模型都用了相同的训练参数,无论模型的大小。

3.3 Width Multiplier: Thinner Models

Although the base MobileNet architecture is already small and low latency, many times a specific use case or application may require the model to be smaller and faster. In order to construct these smaller and less computationally expensive models we introduce a very simple parameter α called width multiplier. The role of the width multiplier α is to thin a network uniformly at each layer. For a given layer and width multiplier α, the number of input channels M becomes αM and the number of output channels N becomes αN.

虽然基本的MobileNet结构已经很小,延时也很少,但很多使用场景和应用都需要更小更快的模型。为了建造这些更小更少计算量的模型,我们引入了一个很简单的参数α叫做宽度系数。宽度系数α的角色就是为了在每一层统一变瘦。给定一个层和宽度系数α,输入通道M的数量变成αM, 输出通道N的数量变成αN。

The computational cost of a depthwise separable convolution with width multiplier α is:

带有宽度系数α的深度可分离卷积计算量为:

$$D_K·D_K·αM·D_F·D_F + αM·αN·D_F·D_F$$

where α ∈ (0, 1] with typical settings of 1, 0.75, 0.5 and 0.25. α = 1 is the baseline MobileNet and α < 1 are reduced MobileNets. Width multiplier has the effect of reducing computational cost and the number of parameters quadratically, by roughly $α^2$. Width multiplier can be applied to any model structure to define a new smaller model with a reasonable accuracy, latency and size trade-off. It is used to define a new reduced structure that needs to be trained from scratch.

其中,α ∈ (0, 1]典型设置为1,0.75,0.5,0.25。α = 1是MobileNet基线α< 1就是减少的MobileNet。宽度系数有减小计算量的作用,大约α平方的参数量。宽度系数能够用在任何模型结构来定义一个新的更小的模型,模型具有合理的准确性,延时,和尺寸权衡。它被用来定义一个需要从零开始训练的新的简化结构。
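The "roughly α²" scaling can be checked numerically. The sketch below applies the width multiplier to one depthwise separable layer and prints cost and parameter counts relative to α = 1. The function names and the example layer (3 × 3 kernel, 512 channels, 14 × 14 feature map) are illustrative assumptions.

```python
def separable_cost(d_k, m, n, d_f, alpha=1.0):
    """Mult-adds of a depthwise separable layer thinned by width multiplier alpha."""
    m_thin, n_thin = int(alpha * m), int(alpha * n)
    return d_k * d_k * m_thin * d_f * d_f + m_thin * n_thin * d_f * d_f


def separable_params(d_k, m, n, alpha=1.0):
    """Parameter count of the same layer (depthwise filters + pointwise weights)."""
    m_thin, n_thin = int(alpha * m), int(alpha * n)
    return d_k * d_k * m_thin + m_thin * n_thin


base_cost = separable_cost(3, 512, 512, 14)
base_params = separable_params(3, 512, 512)

relative = {}
for alpha in (1.0, 0.75, 0.5, 0.25):
    cost_ratio = separable_cost(3, 512, 512, 14, alpha) / base_cost
    param_ratio = separable_params(3, 512, 512, alpha) / base_params
    relative[alpha] = (cost_ratio, param_ratio)
    print(f"alpha={alpha:<4} cost x{cost_ratio:.3f} params x{param_ratio:.3f} "
          f"(alpha^2 = {alpha ** 2:.3f})")
```

The ratios sit slightly above α² because the depthwise term scales only linearly with α, while the dominant pointwise term scales quadratically.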

3.4 Resolution Multiplier: Reduced Representation

The second hyper-parameter to reduce the computational cost of a neural network is a resolution multiplier ρ. We apply this to the input image and the internal representation of every layer is subsequently reduced by the same multiplier. In practice we implicitly set ρ by setting the input resolution.

另一个减少神经网络的计算量的是分辨率系数ρ。我们把这个应用到图片然后每个层的内部表示随后被相同的系数所减少。在实践中我们通过设定输入的分辨率来隐式设置ρ。

We can now express the computational cost for the core layers of our network as depthwise separable convolutions with width multiplier α and resolution multiplier ρ:

我们现在能够表示我们网络的核心层计算量为深度可分离卷积宽度系数α和分辨率系数ρ:

$$D_K·D_K·αM·ρD_F·ρD_F+αM·αN·ρD_F·ρD_F$$

where ρ ∈ (0, 1], which is typically set implicitly so that the input resolution of the network is 224, 192, 160 or 128. ρ = 1 is the baseline MobileNet and ρ < 1 are reduced-computation MobileNets. Resolution multiplier has the effect of reducing computational cost by $ρ^2$.

其中ρ ∈ (0, 1],典型的设定输入分辨率网络为224,192,160,或128。ρ = 1是MobileNet基准线,ρ < 1就是减小计算的MobileNets。分辨率系数可以减少计算量${ρ^2}
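Since ρ is set implicitly through the input resolution, it can help to see the values it takes. The sketch below assumes that ρ is the ratio of the chosen resolution to the 224 baseline, which is how the text describes it, and prints the resulting ρ² cost factor. The variable names are hypothetical.

```python
BASE_RESOLUTION = 224  # resolution of the baseline MobileNet (rho = 1)

table = {}
for resolution in (224, 192, 160, 128):
    rho = resolution / BASE_RESOLUTION
    # Both cost terms contain rho*D_F twice, so cost scales with rho squared.
    table[resolution] = (rho, rho ** 2)
    print(f"resolution {resolution}: rho = {rho:.3f}, cost factor = {rho ** 2:.3f}")
```

Unlike the width multiplier, ρ does not appear in the kernel sizes or channel counts, so it changes the computation but not the number of parameters.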

As an example we can look at a typical layer in MobileNet and see how depthwise separable convolutions, width multiplier and resolution multiplier reduce the cost and parameters. Table 3 shows the computation and number of parameters for a layer as architecture shrinking methods are sequentially applied to the layer. The first row shows the Mult-Adds and parameters for a full convolutional layer with an input feature map of size 14 × 14 × 512 with a kernel K of size 3 × 3 × 512 × 512. We will look in detail in the next section at the trade offs between resources and accuracy.

作为一个例子,我们可以看MobileNet中典型层,同时可以看看深度可分离卷积,宽度系数和分辨率系数减少损失和参数。Table3展示了一层的计算和参数数量,而结构收缩方法随后被用到了层中。第一排显示输入特征图为14 x 14 x 512的,尺寸为3 x 3 x 512 x 512的核K的全卷积层的多加的参数。我们可以在下一部分看到关于权衡资源和准确率的细节。
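The sequential shrinking described above can be reproduced with the cost formulas from this section. The sketch below starts from the layer given in the text (14 × 14 × 512 input, 3 × 3 × 512 × 512 kernel). The specific settings α = 0.75 and input resolution 160 (so the 14 × 14 map becomes 10 × 10) are assumptions chosen for illustration, as are the function names.

```python
def conv_stats(d_k, m, n, d_f):
    """(mult-adds, parameters) of a standard convolutional layer."""
    return d_k * d_k * m * n * d_f * d_f, d_k * d_k * m * n


def separable_stats(d_k, m, n, d_f):
    """(mult-adds, parameters) of a depthwise separable layer."""
    mult_adds = d_k * d_k * m * d_f * d_f + m * n * d_f * d_f
    params = d_k * d_k * m + m * n
    return mult_adds, params


D_K, M, N, D_F = 3, 512, 512, 14   # layer from the text
ALPHA = 0.75                        # assumed width multiplier
RESOLUTION = 160                    # assumed input resolution (rho = 160/224)

thin_m, thin_n = int(ALPHA * M), int(ALPHA * N)   # 384 channels each
small_d_f = D_F * RESOLUTION // 224               # 14 -> 10

rows = [
    ("full convolution", conv_stats(D_K, M, N, D_F)),
    ("depthwise separable", separable_stats(D_K, M, N, D_F)),
    ("+ width multiplier 0.75", separable_stats(D_K, thin_m, thin_n, D_F)),
    ("+ resolution 160", separable_stats(D_K, thin_m, thin_n, small_d_f)),
]
for name, (mult_adds, params) in rows:
    print(f"{name:<26}{mult_adds / 1e6:7.1f}M mult-adds {params / 1e6:5.2f}M params")
```

The last step lowers the mult-adds but leaves the parameter count unchanged, since the resolution multiplier only shrinks feature maps.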

4. Experiments

In this section we first investigate the effects of depthwise convolutions as well as the choice of shrinking by reducing the width of the network rather than the number of layers. We then show the trade offs of reducing the network based on the two hyper-parameters: width multiplier and resolution multiplier and compare results to a number of popular models. We then investigate MobileNets applied to a number of different applications.

在这一部分我们先看了深度方向卷积的效果,通过减少网络宽度而不是层数的收缩的选择。然后会展示基于两个超参数:宽度系数和分辨率系数的网络和比较更多流行模型的结果。MobileNets应用在不同的应用中。

4.1 Model Choices

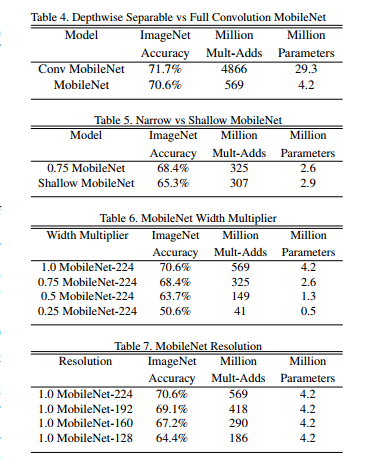

First we show results for MobileNet with depthwise separable convolutions compared to a model built with full convolutions. In Table 4 we see that using depthwise separable convolutions instead of full convolutions only reduces accuracy by 1% on ImageNet while saving tremendously on mult-adds and parameters. We next show results comparing thinner models with width multiplier to shallower models using fewer layers. To make MobileNet shallower, the 5 layers of separable filters with feature size 14 × 14 × 512 in Table 1 are removed. Table 5 shows that at similar computation and number of parameters, making MobileNets thinner is 3% better than making them shallower.

首先我们展示深度可分离卷积的MobileNet结果和全卷积模型的比较。在Table 4中我们可以看到使用深度可分离卷积和只是全卷积比较只减少了1%的准确率在ImageNet上,却节省了巨大的增加和参数。然后我们比较了更瘦的层和更浅的层。为了让MobileNet更浅,Table 1中特征尺寸14 x 14 x 512可分离滤波器的五层被去掉了。Table 5展示了相似的计算量和参数,更瘦的MobileNets比更浅的好3%。

4.2 Model Shrinking Hyperparameters

Table 6 shows the accuracy, computation and size trade offs of shrinking the MobileNet architecture with the width multiplier α. Accuracy drops off smoothly until the architecture is made too small at α = 0.25.

表6显示带有宽度系数α的MobileNet结构的准确率,计算和大小权衡。准确度平滑下降直到结构变为太小的值α=0.25

Table 7 shows the accuracy, computation and size trade offs for different resolution multipliers by training MobileNets with reduced input resolutions. Accuracy drops off smoothly across resolution.

表7显示训练减小输入分辨率的MobileNets的不同分辨率系数的准确率,计算量和大小的权衡。准确率随分辨率平滑下降。

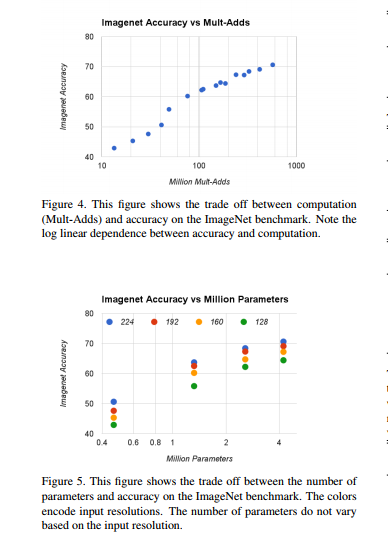

Figure 4 shows the trade off between ImageNet Accuracy and computation for the 16 models made from the cross product of width multiplier α ∈ {1, 0.75, 0.5, 0.25} and resolutions {224, 192, 160, 128}. Results are log linear with a jump when models get very small at α = 0.25.

图4显示宽度系数α ∈ {1, 0.75, 0.5, 0.25}和分辨率 {224, 192, 160, 128}的交叉生成的16个模型的ImageNet准确率和计算量。结果呈log线性,当α=0.25非常小的时候结果会有一个跳跃。
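The grid of 16 models can be enumerated directly. The sketch below estimates each model's relative computation with the approximation α²ρ² from Sections 3.3 and 3.4; this is a rough estimate under that assumption, not the measured Mult-Adds plotted in the figure, and the variable names are hypothetical.

```python
from itertools import product

alphas = (1.0, 0.75, 0.5, 0.25)
resolutions = (224, 192, 160, 128)

models = []
for alpha, resolution in product(alphas, resolutions):
    rho = resolution / 224
    # Approximate cost relative to the alpha = 1, resolution = 224 baseline.
    models.append((alpha, resolution, alpha ** 2 * rho ** 2))

# Largest model first.
models.sort(key=lambda model: model[2], reverse=True)
for alpha, resolution, cost in models:
    print(f"alpha={alpha:<4} resolution={resolution}  relative cost ~ {cost:.3f}")
```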

Figure 5 shows the trade off between ImageNet Accuracy and number of parameters for the 16 models made from the cross product of width multiplier α ∈ {1, 0.75, 0.5, 0.25} and resolutions {224, 192, 160, 128}.

图5显示宽度系数α ∈ {1, 0.75, 0.5, 0.25}和分辨率 {224, 192, 160, 128}的交叉生成的16个模型的ImageNet准确率和计算量。

Table 8 compares full MobileNet to the original GoogleNet [30] and VGG16 [27]. MobileNet is nearly as accurate as VGG16 while being 32 times smaller and 27 times less compute intensive. It is more accurate than GoogleNet while being smaller and more than 2.5 times less computation.

表8比较了整个的MobileNet和原始的GoogleNet和VGG16. MobileNet准确率很接近VGG16,但尺寸小了32倍,计算强度也缩小了27倍。准确率比GoogleNet更高,而得到更小的尺寸和缩小的计算量超过2.5倍。

Table 9 compares a reduced MobileNet with width multiplier α = 0.5 and reduced resolution 160 × 160. Reduced MobileNet is 4% better than AlexNet [19] while being 45× smaller and 9.4× less compute than AlexNet. It is also 4% better than Squeezenet [12] at about the same size and 22× less computation.

图9比较了减少的MobileNet和宽度系数α=0.5,和减少分辨率160 x 160.减小的MobileNet4%好于AlexNet,而尺寸是AlexNet的1/45,,计算量小9.4倍。它也好于Squeezenet4%, 同样的尺寸,22倍更少的计算量。

4.3 Fine Grained Recognition

We train MobileNet for fine grained recognition on the Stanford Dogs dataset [17]. We extend the approach of [18] and collect an even larger but noisy training set than [18] from the web. We use the noisy web data to pretrain a fine grained dog recognition model and then fine tune the model on the Stanford Dogs training set. Results on Stanford Dogs test set are in Table 10. MobileNet can almost achieve the state of the art results from [18] at greatly reduced computation and size.

我们训练MobileNet针对细粒识别再Stanford Dogs数据库。我们延伸了我们[18]的方法并从网上采集了一个比[18]大的多的但有噪声的训练集。我们使用网上有噪声数据来预训练细粒够识别模型然后在Stanford Dogs训练集中微调模型。表10中展示了Stanford Dogs的测试集结果。MobileNet几乎能达到最好结果并极大地减少的计算量和尺寸。

4.4 Large Scale Geolocalization

PlaNet [35] casts the task of determining where on earth a photo was taken as a classification problem. The approach divides the earth into a grid of geographic cells that serve as the target classes and trains a convolutional neural network on millions of geo-tagged photos. PlaNet has been shown to successfully localize a large variety of photos and to outperform Im2GPS [6, 7] that addresses the same task.

PlaNet提出确定图片中某个位置当作分类任务。这个方法把地球分成了地理网格,作为目标类,并在数以百万计的地理标记的照片上训练一个卷积神经网络。PlaNet非常成功的定位了大量的图片,并在解决相同的任务上超越了Im2GPS。

We re-train PlaNet using the MobileNet architecture on the same data. The full PlaNet model based on the Inception V3 architecture [31] has 52 million parameters and 5.74 billion mult-adds, while the MobileNet model has only 13 million parameters (the usual 3 million for the body and 10 million for the final layer) and 0.58 billion mult-adds. As shown in Table 11, the MobileNet version delivers only slightly decreased performance compared to PlaNet despite being much more compact. Moreover, it still outperforms Im2GPS by a large margin.

我们在同样的数据上使用MobileNet结构重新训练了PlaNet。当基于Inception V3结构的全部PlaNet模型有5.2千万个参数和57.4亿个额外值。MobileNet模型仅有1.3千万个参数,其中主体有3百万个,最后的层有1千万个,和58万个额外值。如表11所示,MobileNet在性能上只差一点点,却有很大的压缩。另外,在定位上也大幅度超越Im2GPS。

4.5 Face Attributes

Another use-case for MobileNet is compressing large systems with unknown or esoteric training procedures. In a face attribute classification task, we demonstrate a synergistic relationship between MobileNet and distillation [9], a knowledge transfer technique for deep networks. We seek to reduce a large face attribute classifier with 75 million parameters and 1600 million Mult-Adds. The classifier is trained on a multi attribute dataset similar to YFCC100M [32].

MobileNet的另一个应用是压缩位置或深奥的训练程序的大系统。在人脸属性分类任务,我们验证了MobileNet和提取的协同关系,一个深度网络的知识迁移技术。我们寻找减少大的同游7.5千万参数和16亿额外值得人脸属性分类器。分类器在多属性类似于YFCC100M的数据库中训练。

We distill a face attribute classifier using the MobileNet architecture. Distillation [9] works by training the classifier to emulate the outputs of a larger model instead of the ground-truth labels, hence enabling training from large (and potentially infinite) unlabeled datasets. Marrying the scalability of distillation training and the parsimonious parameterization of MobileNet, the end system not only requires no regularization (e.g. weight decay and early stopping), but also demonstrates enhanced performance. It is evident from Table 12 that the MobileNet-based classifier is resilient to aggressive model shrinking: it achieves a similar mean average precision across attributes (mean AP) as the in-house model while consuming only 1% of the Mult-Adds.

我们使用MobileNet结构来提取一个人脸属性分类器。Distillation工作通过对较大的模型训练分类器代替真实标签的输出,因此能够训练很大的未标签数据集。把提取训练的可扩展性和MobileNet的简化参数相结合,末端系统不仅不需要正则化(比如权重衰减和早期停止),同时也提高了性能。从表12中可以看出基于MobileNet分类器对收缩模型是有弹性的:它内部有类似的平均精度的属性,同时只增加1%的损耗。
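The idea of training against a larger model's outputs rather than labels can be sketched as a loss function. The text does not specify the exact loss, so the version below is an assumption: it treats each attribute as an independent sigmoid output and uses binary cross-entropy against the teacher's probabilities as soft targets. All names and numbers are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def distillation_loss(student_logits, teacher_probs):
    """Mean binary cross-entropy of the student against teacher soft targets.

    No ground-truth labels are involved: the teacher's per-attribute
    probabilities are the training targets (an assumed formulation).
    """
    total = 0.0
    for logit, target in zip(student_logits, teacher_probs):
        p = sigmoid(logit)
        total += -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))
    return total / len(teacher_probs)


# Teacher outputs for three attributes on one unlabeled image.
teacher_probs = [0.9, 0.2, 0.6]

close = distillation_loss([2.2, -1.4, 0.4], teacher_probs)   # student agrees
far = distillation_loss([-2.0, 2.0, -1.0], teacher_probs)    # student disagrees
print(close < far)  # True
```

Because the targets come from the teacher, any unlabeled image can serve as a training example, which is the scalability the paragraph refers to.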

4.6 Object Detection

MobileNet can also be deployed as an effective base network in modern object detection systems. We report results for MobileNet trained for object detection on COCO data based on the recent work that won the 2016 COCO challenge [10]. In Table 13, MobileNet is compared to VGG and Inception V2 [13] under both the Faster-RCNN [23] and SSD [21] frameworks. In our experiments, SSD is evaluated with 300 input resolution (SSD 300) and Faster-RCNN is compared with both 300 and 600 input resolution (Faster-RCNN 300, Faster-RCNN 600). The Faster-RCNN model evaluates 300 RPN proposal boxes per image. The models are trained on COCO train+val excluding 8k minival images and evaluated on minival. For both frameworks, MobileNet achieves comparable results to other networks with only a fraction of the computational complexity and model size.

MobileNet在现代物体检测系统中也是有效的基本模型。我们基于2016赢得COCO挑战的COCO数据训练了物体检测模型。在表13中,MobileNet相比VGG和InceptionV2在同样的Faster-RCNN和SSD框架下。在我们的实验中,SSD输入分辨率300,和Faster-RCNN输入分辨率300和600比较。Faster-RCNN模型每张图300个候选框。我们在COCO train+val上训练,另外还有8k张minival图像,并在minival上验证。对于两个平台,MobileNet相较于其他网络,只占计算复杂度和模型尺寸的一小部分。

4.7 Face Embeddings

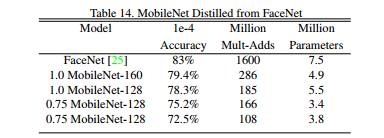

The FaceNet model is a state-of-the-art face recognition model [25]. It builds face embeddings based on the triplet loss. To build a mobile FaceNet model we use distillation, training by minimizing the squared differences between the outputs of FaceNet and MobileNet on the training data. Results for very small MobileNet models can be found in Table 14.

FaceNet模型是人脸识别模型的最佳模型。它基于三元损失建立人脸嵌入。为了建立移动FaceNet模型,我们使用distillation来通过FaceNet和MobileNet在训练数据上输出的最小平方差训练。非常小的MobileNet模型的结果可以在表14中看到.
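The squared-difference objective mentioned above is simple enough to write out. This sketch assumes both models emit embedding vectors of the same length; the function name and the example vectors are hypothetical, not values from the experiments.

```python
def squared_difference_loss(teacher_embedding, student_embedding):
    """Sum of squared differences between two embedding vectors."""
    return sum(
        (t - s) ** 2 for t, s in zip(teacher_embedding, student_embedding)
    )


# Hypothetical 4-dimensional embeddings for one training image.
teacher = [0.5, -0.5, 0.5, 0.5]   # stands in for the FaceNet output
student = [0.5, 0.0, 0.5, 0.0]    # stands in for the MobileNet output

loss = squared_difference_loss(teacher, student)
print(loss)  # 0.5
```

Minimizing this quantity over the training data pulls the small model's embedding toward the large model's embedding for every image.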

5. Conclusion

We proposed a new model architecture called MobileNets based on depthwise separable convolutions. We investigated some of the important design decisions leading to an efficient model. We then demonstrated how to build smaller and faster MobileNets using width multiplier and resolution multiplier by trading off a reasonable amount of accuracy to reduce size and latency. We then compared different MobileNets to popular models, demonstrating superior size, speed and accuracy characteristics. We concluded by demonstrating MobileNet's effectiveness when applied to a wide variety of tasks. As a next step to help adoption and exploration of MobileNets, we plan on releasing models in TensorFlow.

我们提出一种新基于深度可分离卷积的的模型结构叫做MobileNets。我们调查了一些得到高效模型的一些重要设计策略。然后我们解释了如何建立更小更快的MobileNets使用了宽度系数和分辨率系数来做减少尺寸和延时和合理的准确率的权衡。然后我们比较了MobileNets和一些流行的模型,验证了更好的尺寸速度和准确率特征。最后我们阐述了MobileNets在广阔领域任务的有效性。接着,为了帮助采用和体验MobileNets,我们计划发布TensorFlow版模型。