前言

当你在买房子的时候会考虑什么?房子的面积?地理位置?产权年限?是否有地下室?多少楼层?是否学区房?交通是否便利?周围设施是否完整?等等。。。没错,当你想要的要求越来越高时,房子的价格也会越来越高,那么如何根据不同的要求来预测房价呢?这就是该篇博文要讲的内容。感谢kaggle,可以让我们获得那么多的数据来建立模型~~~

好了,其实要求很简单啦,就是根据房子不同的特征(包括面积、位置、产权等)来预测房价!

训练数据

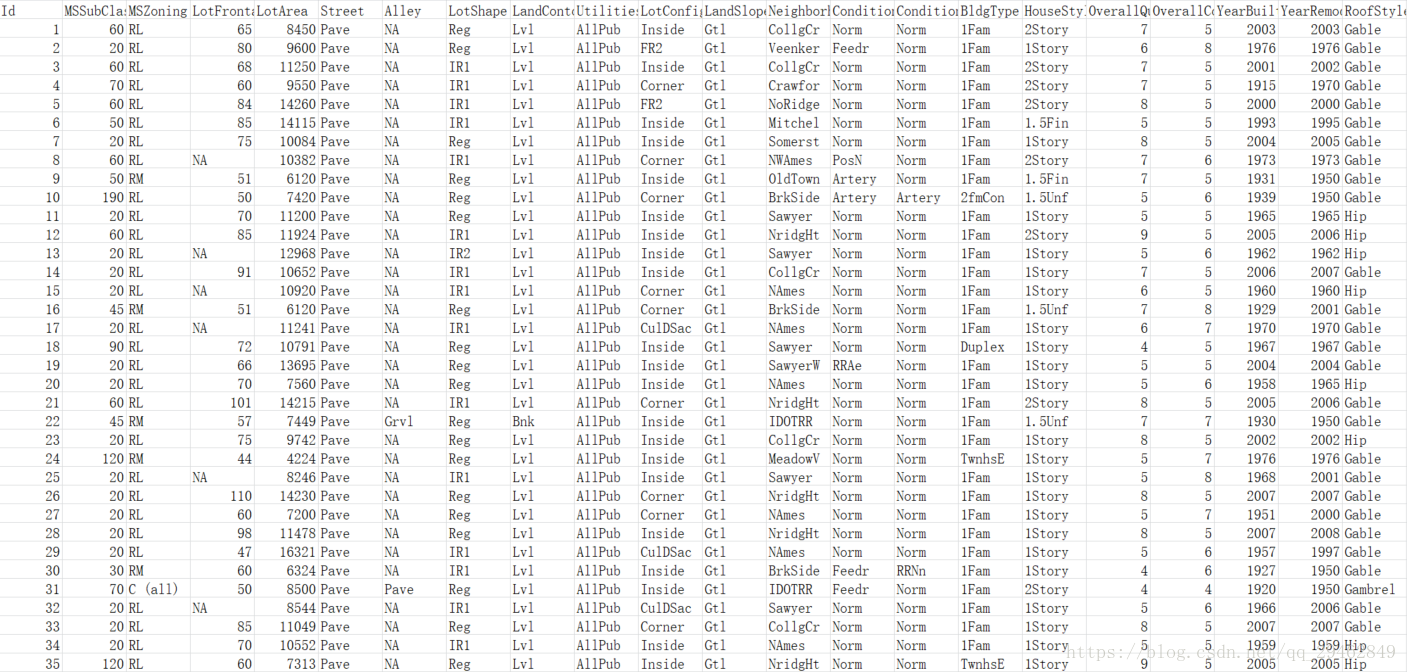

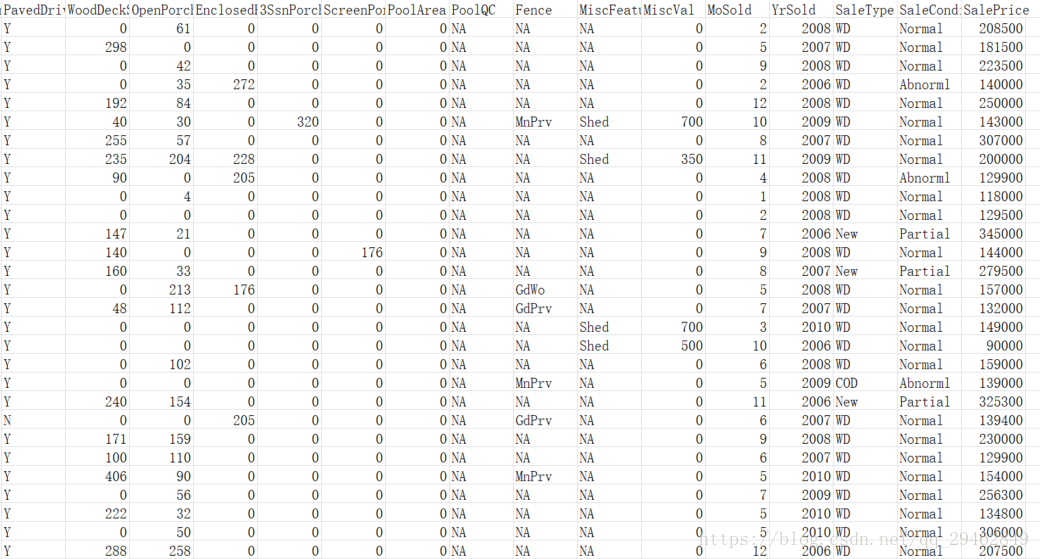

kaggle中的数据大多数是以.csv文件保存,这篇博文所涉及的数据亦是如此,下面来看下训练数据吧:

希望你没有密集恐惧症,一条数据包括房子的各个特征值以及销售价格~这里每条数据一共79个特征,1460个训练数据,1459个测试数据,分别保存在train.csv和test.csv文件中。

实现过程

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

import matplotlib.pyplot as plt # Matlab-style plotting

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_absolute_error

color = sns.color_palette()

sns.set_style('darkgrid')

import warnings

def ignore_warn(*args, **kwargs):

pass

warnings.warn = ignore_warn #ignore annoying warning (from sklearn and seaborn)

from scipy import stats

from scipy.stats import norm, skew #for some statistics

train = pd.read_csv('C:/Users/new/Desktop/data/train.csv')

test = pd.read_csv('C:/Users/new/Desktop/data/test.csv')

print(train.shape,test.shape)

# print(train.head(5))

#在这里存取train和test的ID

train_ID = train['Id']

test_ID = test['Id']

#Now drop the 'Id' colum since it's unnecessary for the prediction process.

train.drop("Id", axis = 1, inplace = True)

test.drop("Id", axis = 1, inplace = True)

#check again the data size after dropping the 'Id' variable

print("\nThe train data size after dropping Id feature is : {} ".format(train.shape))

print("The test data size after dropping Id feature is : {} ".format(test.shape))

#把训练数据和测试数据连接到一起,方便后面的统一处理和编码

#这里是记录train和test各有多少数据,既然有连接,到后面处理结束,必然还会有分开,这是用来分开数据的参照

ntrain = train.shape[0]

ntest = test.shape[0]

#保存训练数据的标签y_train

y_train = train.SalePrice.values

all_data = pd.concat((train, test)).reset_index(drop=True)

#把train_data中的SalePrice数据给剔除掉

all_data.drop(['SalePrice'], axis=1, inplace=True)

print("all_data size is : {}".format(all_data.shape))

#[2919,79]

#在这里输出缺失值最严重的前20个特征

all_data_na = (all_data.isnull().sum() / len(all_data)) * 100

all_data_na = all_data_na.drop(all_data_na[all_data_na == 0].index).sort_values(ascending=False)[:30]

missing_data = pd.DataFrame({'Missing Ratio' :all_data_na})

print(missing_data.head(20))

#缺失值的填充,关于缺失值填充内容,可以参考pandas相关介绍

all_data["PoolQC"] = all_data["PoolQC"].fillna("None")

all_data["MiscFeature"] = all_data["MiscFeature"].fillna("None")

all_data["Alley"] = all_data["Alley"].fillna("None")

all_data["Fence"] = all_data["Fence"].fillna("None")

all_data["FireplaceQu"] = all_data["FireplaceQu"].fillna("None")

all_data["LotFrontage"] = all_data.groupby("Neighborhood")["LotFrontage"].transform(

lambda x: x.fillna(x.median()))

for col in ('GarageType', 'GarageFinish', 'GarageQual', 'GarageCond'):

all_data[col] = all_data[col].fillna('None')

for col in ('GarageYrBlt', 'GarageArea', 'GarageCars'):

all_data[col] = all_data[col].fillna(0)

for col in ('BsmtFinSF1', 'BsmtFinSF2', 'BsmtUnfSF','TotalBsmtSF', 'BsmtFullBath', 'BsmtHalfBath'):

all_data[col] = all_data[col].fillna(0)

for col in ('BsmtQual', 'BsmtCond', 'BsmtExposure', 'BsmtFinType1', 'BsmtFinType2'):

all_data[col] = all_data[col].fillna('None')

all_data["MasVnrType"] = all_data["MasVnrType"].fillna("None")

all_data["MasVnrArea"] = all_data["MasVnrArea"].fillna(0)

all_data['MSZoning'] = all_data['MSZoning'].fillna(all_data['MSZoning'].mode()[0])

#在这里有舍弃了一个特征,原因是这个特征对房价的贡献不大,可以舍弃

all_data = all_data.drop(['Utilities'], axis=1)

all_data["Functional"] = all_data["Functional"].fillna("Typ")

all_data['Electrical'] = all_data['Electrical'].fillna(all_data['Electrical'].mode()[0])

all_data['KitchenQual'] = all_data['KitchenQual'].fillna(all_data['KitchenQual'].mode()[0])

all_data['Exterior1st'] = all_data['Exterior1st'].fillna(all_data['Exterior1st'].mode()[0])

all_data['Exterior2nd'] = all_data['Exterior2nd'].fillna(all_data['Exterior2nd'].mode()[0])

all_data['SaleType'] = all_data['SaleType'].fillna(all_data['SaleType'].mode()[0])

all_data['MSSubClass'] = all_data['MSSubClass'].fillna("None")

#判断还有没有未填补的缺失值

all_data_na = (all_data.isnull().sum() / len(all_data)) * 100

all_data_na = all_data_na.drop(all_data_na[all_data_na == 0].index).sort_values(ascending=False)

missing_data = pd.DataFrame({'Missing Ratio' :all_data_na})

print(missing_data.head())

#对一些类别变量进行编码,使其转换成可供机器学习模型处理的数值变量

from sklearn.preprocessing import LabelEncoder

cols = ('FireplaceQu', 'BsmtQual', 'BsmtCond', 'GarageQual', 'GarageCond',

'ExterQual', 'ExterCond','HeatingQC', 'PoolQC', 'KitchenQual', 'BsmtFinType1',

'BsmtFinType2', 'Functional', 'Fence', 'BsmtExposure', 'GarageFinish', 'LandSlope',

'LotShape', 'PavedDrive', 'Street', 'Alley', 'CentralAir', 'MSSubClass', 'OverallCond',

'YrSold', 'MoSold')

# process columns, apply LabelEncoder to categorical features

for c in cols:

lbl = LabelEncoder()

lbl.fit(list(all_data[c].values))

all_data[c] = lbl.transform(list(all_data[c].values))

# shape

print('Shape all_data: {}'.format(all_data.shape))

#[2919,78]

#拿到所有数据

all_data = pd.get_dummies(all_data)

print(all_data.shape)

#分离开train和test

train = all_data[:ntrain]

test = all_data[ntrain:]

#建立回归模型,决策树回归模型,在这里只使用了两种模型,有兴趣的话可以多测试几个模型,看哪个结果比较好

from sklearn.tree import DecisionTreeRegressor

#划分训练集和测试集

train_X, val_X, train_y, val_y = train_test_split(train, y_train,random_state = 0)

# 定义第一个回归模型,决策树回归

melbourne_model = DecisionTreeRegressor()

# Fit model

melbourne_model.fit(train_X, train_y)

# get predicted prices on validation data

val_predictions = melbourne_model.predict(val_X)

#测试该模型的误差

print(mean_absolute_error(val_y, val_predictions))

#定义第二个回归模型,随机森林回归模型

forest_model = RandomForestRegressor()

forest_model.fit(train_X, train_y)

melb_preds = forest_model.predict(val_X)

print(mean_absolute_error(val_y, melb_preds))

#根据结果,可看出随机森林回归模型要好于决策树回归,故采用随机森林回归模型

_forest = RandomForestRegressor()

_forest.fit(train, y_train)

predictions = _forest.predict(test)

print(predictions)

submission = pd.DataFrame(predictions, columns=['SalePrice'])

submission.insert(0, 'id', test_ID)

# Export Submission

submission.to_csv('C:/Users/new/Desktop/data/submission.csv', index = False)#把结果写进提交文件中

最终结果

对test.csv进行预测,得出结果如下:

id SalePrice

1461 124890

1462 160360

1463 186900

1464 186190

1465 202750

1466 183324

1467 163350

1468 176988

1469 177939

1470 107520

1471 200026

1472 147405

1473 108850

1474 158100

1475 143470

1476 393398

1477 252725

1478 302755

1479 241540

1480 410315

1481 288166

1482 202573

1483 176272

训练数据

本博文训练使用的数据可从SalePrice Data 处下载。