code:

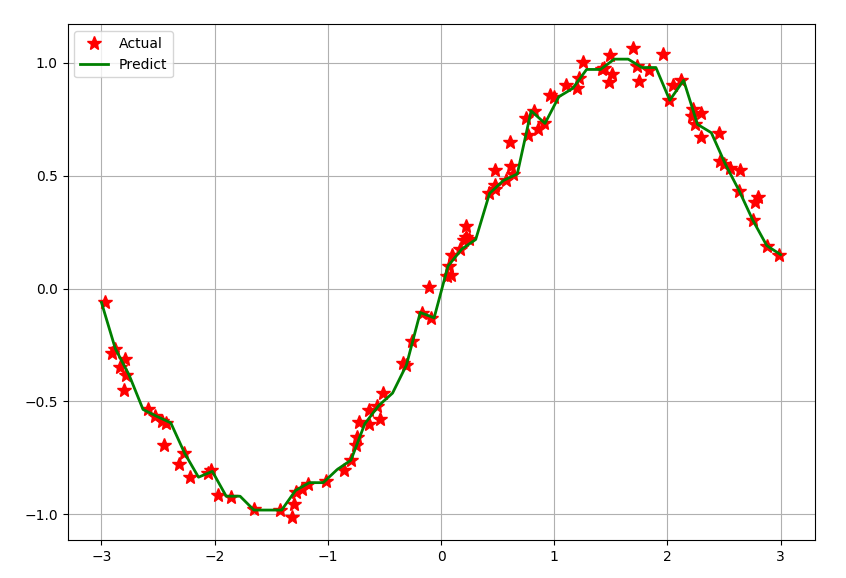

import numpy as np
import matplotlib.pyplot as plt
from sklearn.tree import DecisionTreeRegressor

if __name__ == '__main__':
    N = 100
    # Draw 100 samples uniformly from [-3, 3) and sort them
    x = np.random.rand(N) * 6 - 3
    x.sort()
    # The target is sin(x) plus a small amount of Gaussian noise
    y = np.sin(x) + np.random.randn(N) * 0.05
    print("y:\n", y)
    # scikit-learn expects a 2-D feature matrix of shape (n_samples, n_features)
    x = x.reshape(-1, 1)
    print("x:\n", x)

    # Train a decision tree regressor
    # ('squared_error' replaces 'mse', which was removed in scikit-learn 1.2)
    reg = DecisionTreeRegressor(criterion='squared_error', max_depth=9)
    dt = reg.fit(x, y)

    # Predict on an evenly spaced grid
    x_test = np.linspace(-3, 3, 50).reshape(-1, 1)
    y_hat = dt.predict(x_test)

    plt.plot(x, y, 'r*', ms=10, label='Actual')
    plt.plot(x_test, y_hat, 'g-', lw=2, label='Predict')
    plt.legend(loc='upper left')
    plt.grid()
    plt.show()

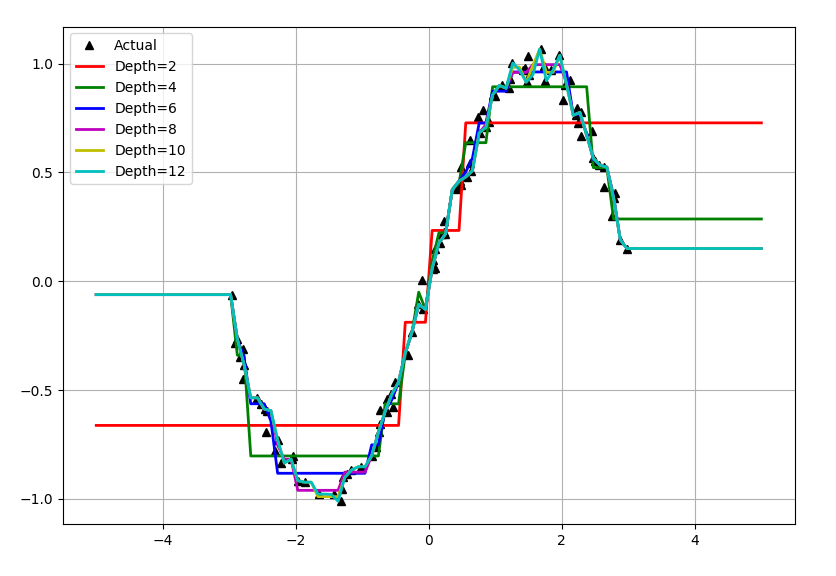

    # Continuing inside the __main__ block: compare trees of different depths
    depth = [2, 4, 6, 8, 10, 12]
    clr = 'rgbmyc'
    # One regressor per depth
    # ('squared_error' replaces 'mse', which was removed in scikit-learn 1.2)
    reg = [DecisionTreeRegressor(criterion='squared_error', max_depth=d) for d in depth]

    plt.plot(x, y, 'k^', lw=2, label='Actual')
    # The test grid extends beyond the training range [-3, 3)
    x_test = np.linspace(-5, 5, 100).reshape(-1, 1)
    for i, r in enumerate(reg):
        dt = r.fit(x, y)
        y_hat = dt.predict(x_test)
        plt.plot(x_test, y_hat, '-', color=clr[i], lw=2, label='Depth=%d' % depth[i])
    plt.legend(loc='upper left')
    plt.grid()
    plt.show()

The deeper the tree, the more closely it fits the training data, but a deeper tree is also more likely to overfit.
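One way to make this visible is to compare the error on the training data with the error on fresh data for each depth. The sketch below is not part of the original code: the helper name `depth_errors`, the held-out sample size, and the fixed random seed are all assumptions chosen for illustration. It reuses the same noisy sin(x) setup and reports the mean squared error on both sets.

```python
import numpy as np
from sklearn.metrics import mean_squared_error
from sklearn.tree import DecisionTreeRegressor


def depth_errors(depths, n_train=100, n_test=200, noise=0.05, seed=0):
    """Return (depth, train_mse, test_mse) for each depth (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    # Training data: noisy sin(x) on [-3, 3), as in the example above
    x_train = rng.uniform(-3, 3, n_train).reshape(-1, 1)
    y_train = np.sin(x_train).ravel() + rng.normal(0, noise, n_train)
    # Held-out data drawn from the same distribution (an assumption of this sketch)
    x_test = rng.uniform(-3, 3, n_test).reshape(-1, 1)
    y_test = np.sin(x_test).ravel() + rng.normal(0, noise, n_test)

    results = []
    for d in depths:
        model = DecisionTreeRegressor(max_depth=d, random_state=0)
        model.fit(x_train, y_train)
        train_mse = mean_squared_error(y_train, model.predict(x_train))
        test_mse = mean_squared_error(y_test, model.predict(x_test))
        results.append((d, train_mse, test_mse))
    return results


if __name__ == '__main__':
    for d, train_mse, test_mse in depth_errors([2, 4, 6, 8, 10, 12]):
        print('depth=%2d  train MSE=%.5f  test MSE=%.5f' % (d, train_mse, test_mse))
```

The training error can only shrink as the depth grows, because each deeper tree refines the leaves of the shallower one. If the held-out error stops improving or rises while the training error keeps falling, the extra depth is fitting noise rather than the underlying curve.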