paper:https://arxiv.org/abs/2303.15433

code: https://github.com/VinAIResearch/Anti-DreamBooth.git.

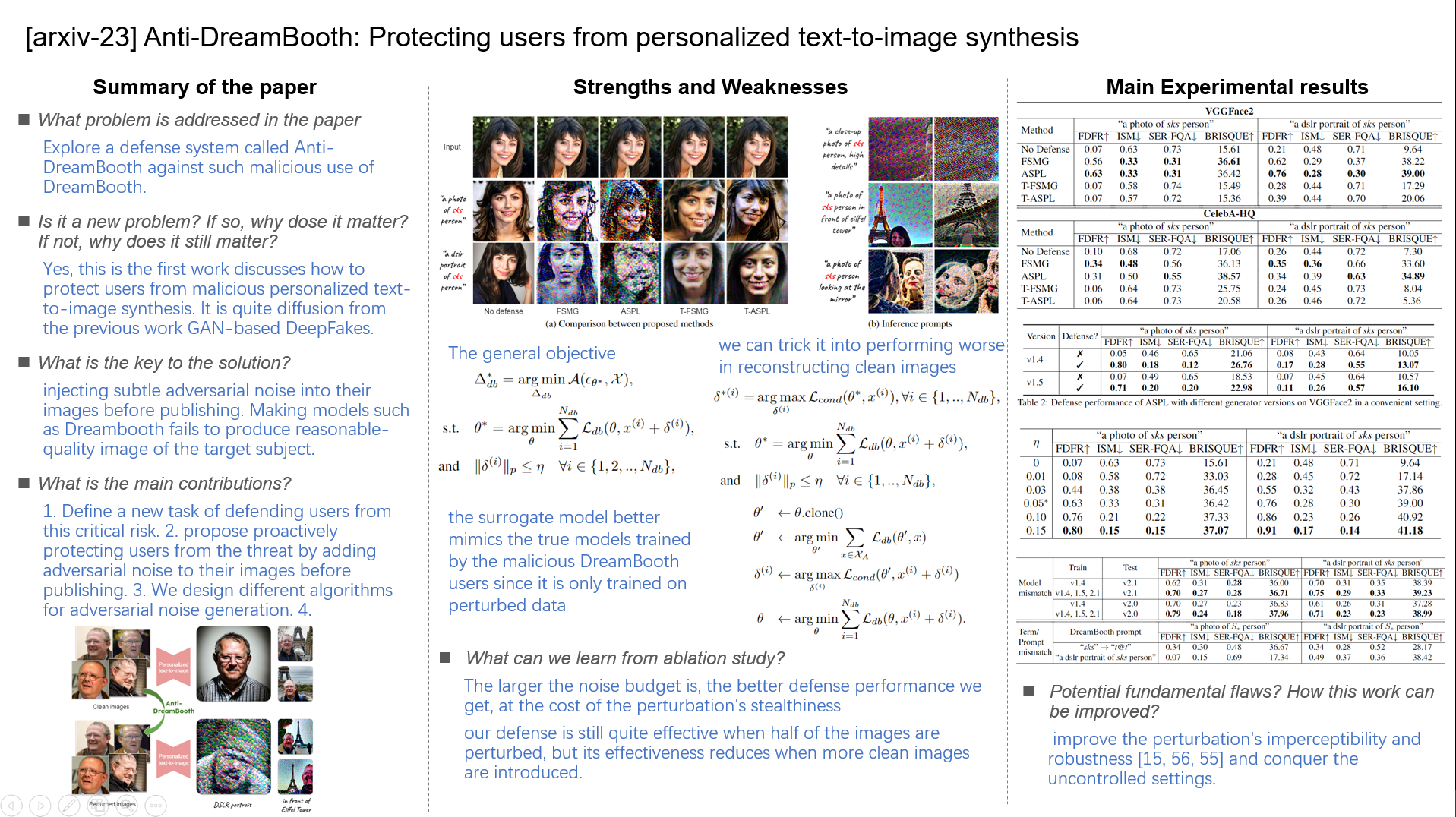

Overview

Abastract

Text-to-image diffusion models are nothing but a revolution, allowing anyone, even without design skills, to create realistic images from simple text inputs. With powerful personalization tools like DreamBooth, they can generate images of a specific person just by learning from his/her few reference images. However, when misused, such a powerful and convenient tool can produce fake news or disturbing content targeting any individual victim, posing a severe negative social impact. In this paper, we explore a defense system called Anti-DreamBooth against such malicious use of DreamBooth. The system aims to add subtle noise perturbation to each user’s image before publishing in order to disrupt the generation quality of any DreamBooth model trained on these perturbed images. We investigate a wide range of algorithms for perturbation optimization and extensively evaluate them on two facial datasets over various text-to-image model versions. Despite the complicated formulation of DreamBooth and Diffusion-based text-to-image models, our methods effectively defend users from the malicious use of those models. Their effectiveness withstands even adverse conditions, such as model or prompt/term mismatching between training and testing. Our code will be available at https://github.com/VinAIResearch/Anti-DreamBooth.git.

Results

Figure 1: A malicious attacker can collect a user’s images to train a personalized text-to-image generator for malicious purposes. Our system, called Anti-DreamBooth, applies imperceptible perturbations to the user’s images before releasing, making any personalized generator trained on these images fail to produce usable images, protecting the user from that threat.

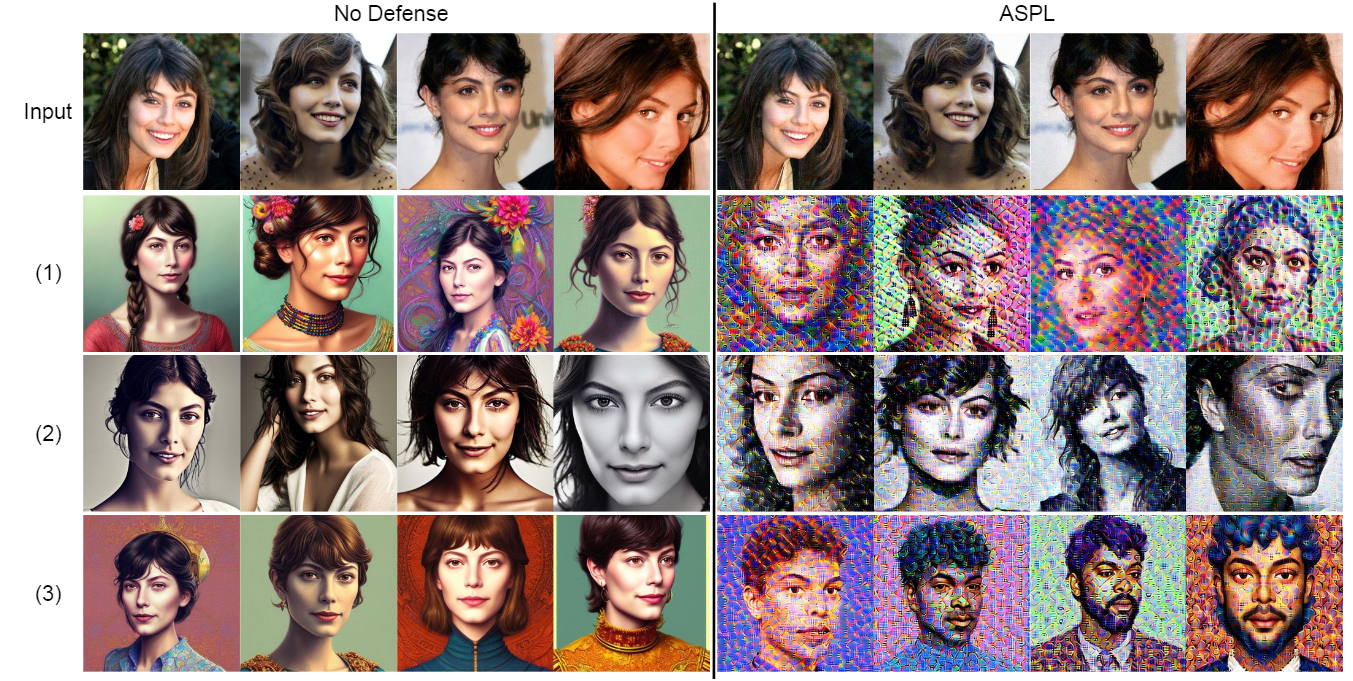

Figure 2: Qualitative defense results for two subjects in VGGFace2 in the convenient setting. Best viewed in zoom.

Figure 3: Disrupting personalized images generated by Astria (SD v1.5 with face detection enabled). The prompts for image generation include: (1) “portrait of sks person portrait wearing fantastic Hand-dyed cotton clothes, embellished beaded feather decorative fringe knots, colorful pigtail, subtropical flowers and plants, symmetrical face, intricate, elegant, highly detailed, 8k, digital painting, trending on pinterest, harper’s bazaar, concept art, sharp focus, illustration, by artgerm, Tom Bagshaw, Lawrence Alma-Tadema, greg rutkowski, alphonse Mucha”, (2) “close up of face of sks person fashion model in white feather clothes, official balmain editorial, dramatic lighting highly detailed”, and (3) “portrait of sks person prince :: by Martine Johanna and Simon St ̊alenhag and Chie Yoshii and Casey Weldon and wlop :: ornate, dynamic, particulate, rich colors, intricate, elegant, highly detailed, centered, artstation, smooth, sharp focus, octane render, 3d”

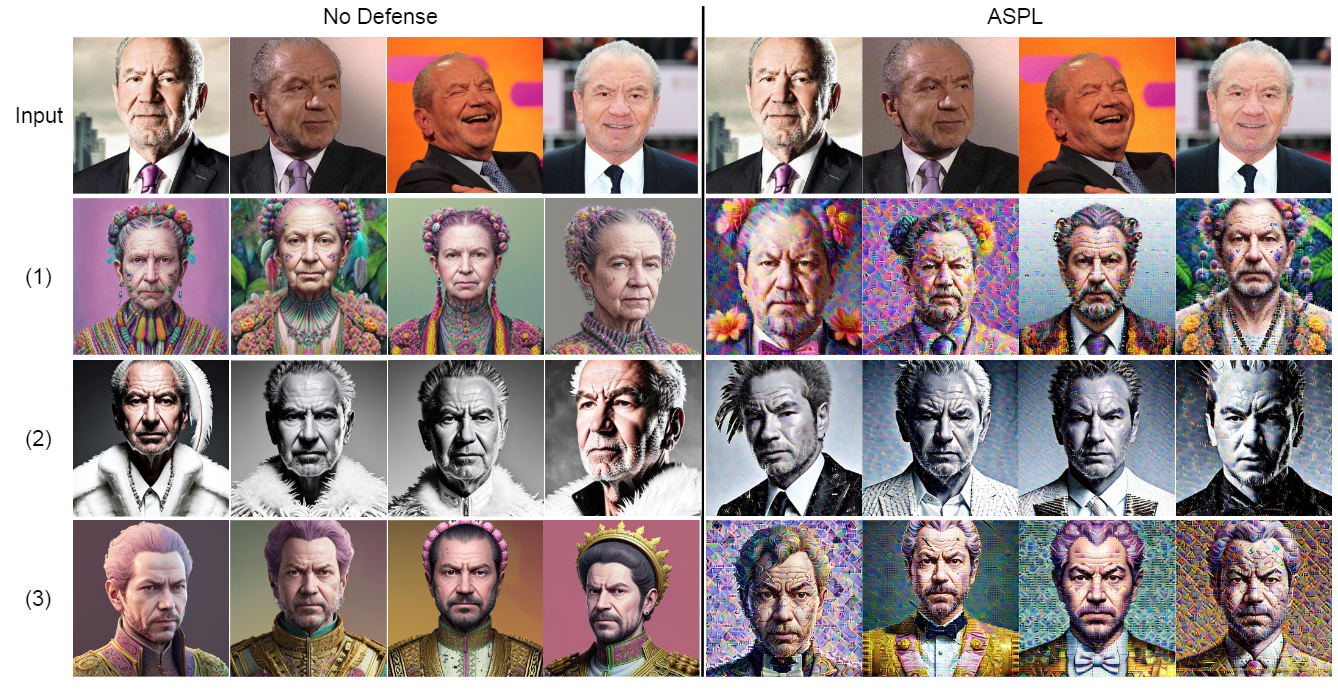

Figure 4: Disrupting personalized images generated by Astria (Protogen with Prism and face detection enabled). The prompts for image generation include: (1) “portrait of sks person portrait wearing fantastic Hand-dyed cotton clothes, embellished beaded feather decorative fringe knots, colorful pigtail, subtropical flowers and plants, symmetrical face, intricate, elegant, highly detailed, 8k, digital painting, trending on pinterest, harper’s bazaar, concept art, sharp focus, illustration, by artgerm, Tom Bagshaw, Lawrence Alma-Tadema, greg rutkowski, alphonse Mucha”, (2) “close up of face of sks person fashion model in white feather clothes, official balmain editorial, dramatic lighting highly detailed”, and (3) “portrait of sks person prince :: by Martine Johanna and Simon St ̊alenhag and Chie Yoshii and Casey Weldon and wlop :: ornate, dynamic, particulate, rich colors, intricate, elegant, highly detailed, centered, artstation, smooth, sharp focus, octane render, 3d”

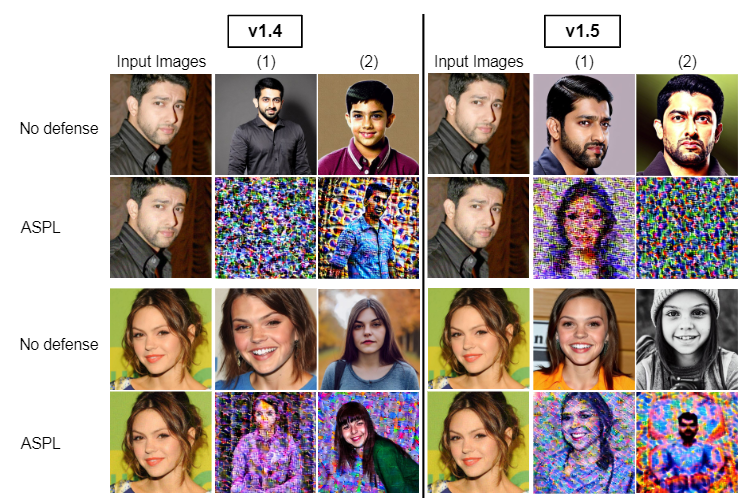

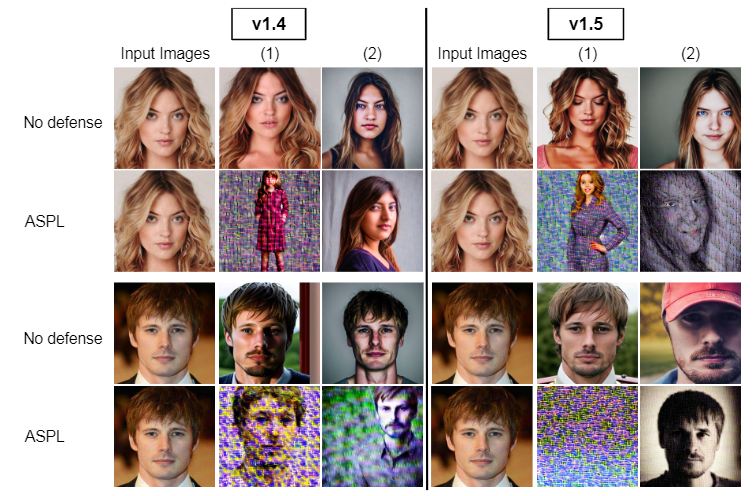

Figure 5: Qualitative results of ASPL with two different versions of SD models (v1.4 and v1.5) on VGGFace2. We provide in each test a single, representative input image. The generation prompts include (1) “a photo of sks person” and (2) “a dslr portrait of sks person”.

Figure 6: Qualitative results of ASPL with two different versions of SD models (v1.4 and v1.5) on CelebA-HQ. We provide in each test a single, representative input image. The generation prompts include (1) “a photo of sks person” and (2) “a dslr portrait of sks person”.

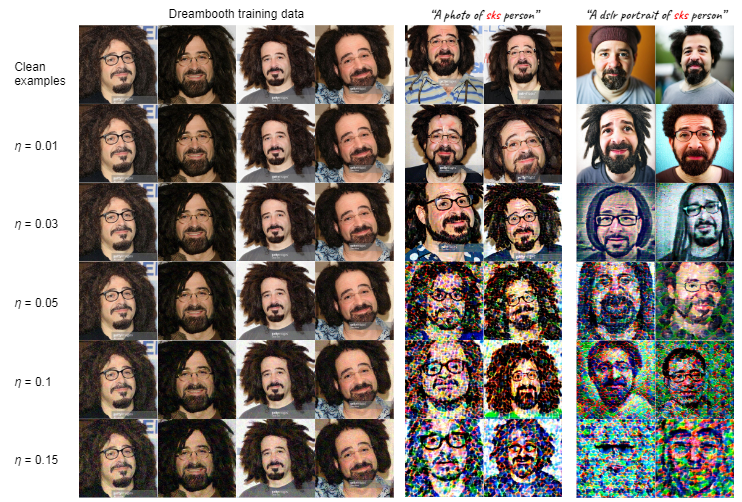

Figure 7: Qualativative results of ASPL with different noise budget on VGGFace2.

Figure 8: Qualitative results of ASPL with different noise budget on CelebA-HQ.

Figure 9: Qualitative results of ASPL in adverse settings on VGGFace2 where the SD model version in perturbation learning mismatches the one used in the DreamBooth finetuning stage (v1.4 → v2.1 and v1.4 → v2.0). We test with two random subjects and denote them in green and red, respectively.

Figure 10: Qualitative results of E-ASPL on VGGFace2, where the ensemble model combines 3 versions of SD models, including v1.4, v1.5, and v2.1. Its performance is validated on two DreamBooth models finetuned on SD v2.1 and v2.0, respectively. We test with two random subjects and denote them in green and red, respectively.

Figure 11: Qualitative results of ASPL on VGGFace2 where the training term and prompt of the target DreamBooth model mismatch the ones in perturbation learning. In the first scenario, the training term is changed from “sks” to “t@t”. In the second scenario, the training prompt is replaced with “a DSLR portrait of sks person” instead of “a photo of sks person”. Here, S∗ is “t@t” for term mismatching and “sks” for prompt mismatching. We test with two random subjects and denote them in green and red, respectively.

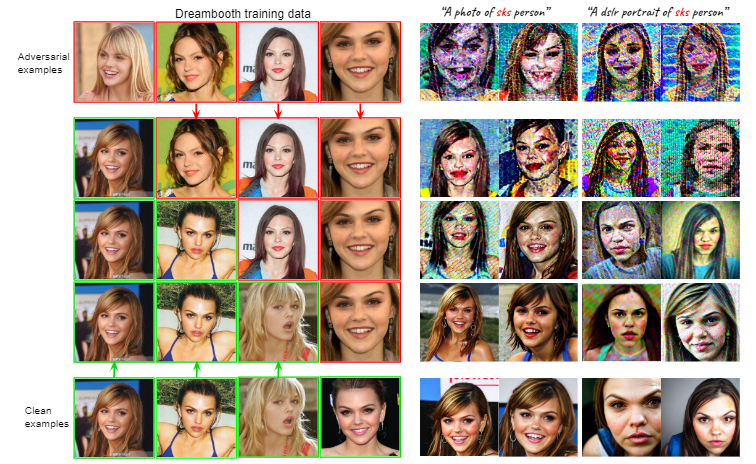

Figure 12: Qualativative results of ASPL in uncontrolled setting on VGGFace2. We denote the perturbed examples and the leaked clean examples in red and green, respectively.

Conclusion

This paper reveals a potential threat of misused DreamBooth models and proposes a framework to counter the threat. Our solution is to perturb users’ images with subtle adversarial noise so that any DreamBooth model trained on those images will produce poor personalized images. The key idea is to mislead the target DreamBooth model to perform poorly in each denoising step on the original, unperturbed images. We designed several algorithms and extensively evaluate them in different settings. Our defense is effective, even in adverse conditions. In the future, we aim to improve the perturbation’s imperceptibility and robustness [15, 56, 55] and conquer the uncontrolled settings.