论文链接:https://arxiv.org/abs/2303.09522

项目主页:https://prompt-plus.github.io/

文章目录

- Overview

-

- What problem is addressed in the paper?

- Is it a new problem? If so, why does it matter? If not, why does it still matter?

- What is the key to the solution?

- What is the results?

- What is the main contribution?

- What can we learn from ablation studies?

- Potential fundamental flaws; how this work can be improved?

- 3. Extended Conditioning Space

- 4. Experiments and Evaluation

- 5. Style Mixing Application

- 6. Conclusions, Limitations, and Future work

Overview

What problem is addressed in the paper?

Textual Conditioning in Text-to-Image Generation

Is it a new problem? If so, why does it matter? If not, why does it still matter?

No, We further introduce Extended Textual Inversion (XTI), where the images are inverted into P+, and represented by per-layer tokens. We show that XTI is more expressive and precise, and converges faster than the original Textual Inversion (TI) space.

What is the key to the solution?

Figure 1. P vs. P+. Standard textual conditioning, where a single text embedding is injected to the network (left), vs. our proposed extended conditioning, where different embeddings are injected into different layers of the U-net (right).

结论:In particular, the coarse layers primarily affect the structure of the image, while the fine layers predominantly influence its appearance.

What is the results?

Figure 2. Shape-Style Mixing in XTI. The extended textual space allows mixing concepts learned from two separate extended textual inversions (XTIs). The inversion of the kitten (right) is injected to the coarse inner layers of the U-net, affecting the shape of the generated image, and the inversion of the cup (left) is injected to the outer layers, affecting the style and appearance.

What is the main contribution?

- We introduce an Extended Textual Conditioning space in text-to-image models, referred to as P+. :This space consists of multiple textual conditions, derived from per-layer prompts, each corresponding to a layer of the denoising U-net of the diffusion model.

- Furthermore, we leverage the distinctive characteristics of P+ to advance the state-of-the-art in object-appearance mixing through text-to-image generation.

What can we learn from ablation studies?

Potential fundamental flaws; how this work can be improved?

3. Extended Conditioning Space

We partitioned the cross-attention layers of the denoising U-net into two subsets: coarse layers with low spatial resolution and fine layers with high spatial resolution.

Figure 3. Per-layer Prompting. We provide different text prompts (a precursor to P+) to different cross-attention layers in the denoising U-net. We see that color (“red”, “green”) is determined by the fine outer layers and content (“cube”, “lizard”) is determined by the coarse inner layers.

3.1. P+ Definition

The process of injecting a text prompt into the network for a particular cross-attention layer is illustrated in Figure 4.

Figure 4. Text-conditioning mechanism of a denoising diffusion model. The prompt “a cat” is processed with a sentence tokenization, by a pretrained textual encoder, and fed into a crossattention layer. Each of the three bars on the left represents a token embedding in P.

the Extended Textual Conditioningspace, denoted by P+, which is defined as follows:

pi represents an individual token embedding corresponding to the i-th cross-attention layer in the denoising U-net.

3.2. Extended Textual Inversion (XTI)

reconstruction objective for the embeddings:

4. Experiments and Evaluation

4.1. P+ Analysis

Cross-Attention Analysis

Figure 5. Object-appearance attention ratio. Mean ratio of attention features of the object token(s) and appearance token(s), per cross-attention layer.

Figure 5 reports the corresponding ratios. The coarse layers (8, 16) attend proportionally more to the object token and fine layers (32, 64) attend more to the appearance token. This experiment gives us the intuition that coarse layers are more responsible for object shape and structure compared to the fine layers.

Attributes Distribution

First, we divide the 16 cross-attention layers into 8 subsets, starting from the the empty set, followed by the middle coarse layer and growing outwards to include the outer fine layers, and finally the full set (see Figure 7 for a visual explanation and the supplementary for the detailed list).

The results averaged over images and prompt pairs are shown in Figure 7.

Figure 7. Relative CLIP similarities for object, color andstyle attributes, by subset of U-net layers. Orange represents the similarity to the first prompt, and blue represents similarity to the second. As we move from left to right, we gradually grow the subset of layers conditioned with the second prompt from coarse to fine.

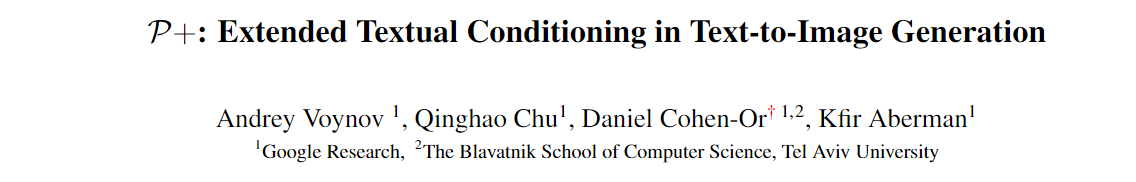

Figure 6 demonstrates this process for a single prompt pair and two image seeds. We start on the left column with all layers conditioned on the first prompt “Blue car, impressionism”. As we move from left to right, we condition more layers from coarse to fine with the other prompt “Red house, graffiti”. Note that even though we already condition some layers on “Red house, graffiti” in the middle column, the house only starts to appear red towards the end when the fine layers are also conditioned on the same prompt

Figure 6. Visualization of mixed conditioning of the U-net cross-attention layers. The rows represent two different starting seeds and the columns represent eight growing subsets of layers, from coarse to fine. We start by conditioning all layers on “Blue car, impressionism” in the left column. As we move right, we gradually condition more layers on “Red house, graffiti”, starting with the innermost coarse layers and then the outer fine layers. Note that shape changes (“house”) take place once we condition the coarse layers, but appearance (“red”) changes only take place after we condition the fine layers.

结论: Thus, coarse layers determine the objectshape and structure of the image, and the fine layers determine the color appearance of the image. style is a more ambiguous descriptor that involves both shape and texture appearance, so every layer has some contribution towards it.

4.2. XTI Evaluation

4.2.2 Quantitative Evaluation

Figure 8. Comparison of Textual Similarity and Subject Similariy. Textual Inversion (TI) [15], Extended Textual Inversion (XTI), DreamBooth [45]. We also evaluate the metrics for both multi-image and single-image inversion setups. For the latter, a subject is represented by a single image. The “Reference” label corresponds to images containing the subject images themselves, while the “Textual description” label used the given text description but replaced the explicit subject’s description (e.g. “a colorful teapot”). The standard error is visualized in the bars.

4.2.3 Human Evaluation

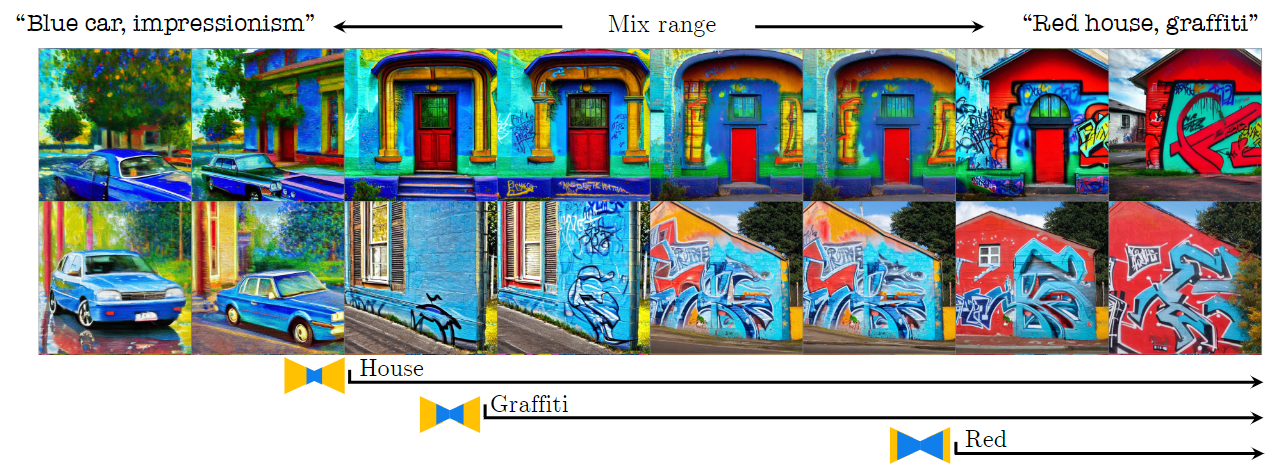

Our method demonstrates less distortion to the original concept and to the target prompt.

Figure 9. Textual Inversion (TI) vs. Extended Textual Inversion (XTI). Column 1: Original concepts. Column 2: TI results. Column 3:XTI results. It can be seen that XTI exhibits superior subject and prompt fidelity, as corroborated by the results of our user study.

To assess the efficacy of our proposed method from a human perspective, we conducted a user study. The study, summarized in Table 1, asked participants to evaluate both Textual Inversion (TI) and Extended Textual Inversion (XTI) based on their fidelity to the original subject and the given prompt. The results show a clear preference for XTI for both subject and text fidelity.

Table 1. User study preferences for subject and text fidelity for TI and XTI. See supplementary material for more details.

4.3. Single Image Inversion

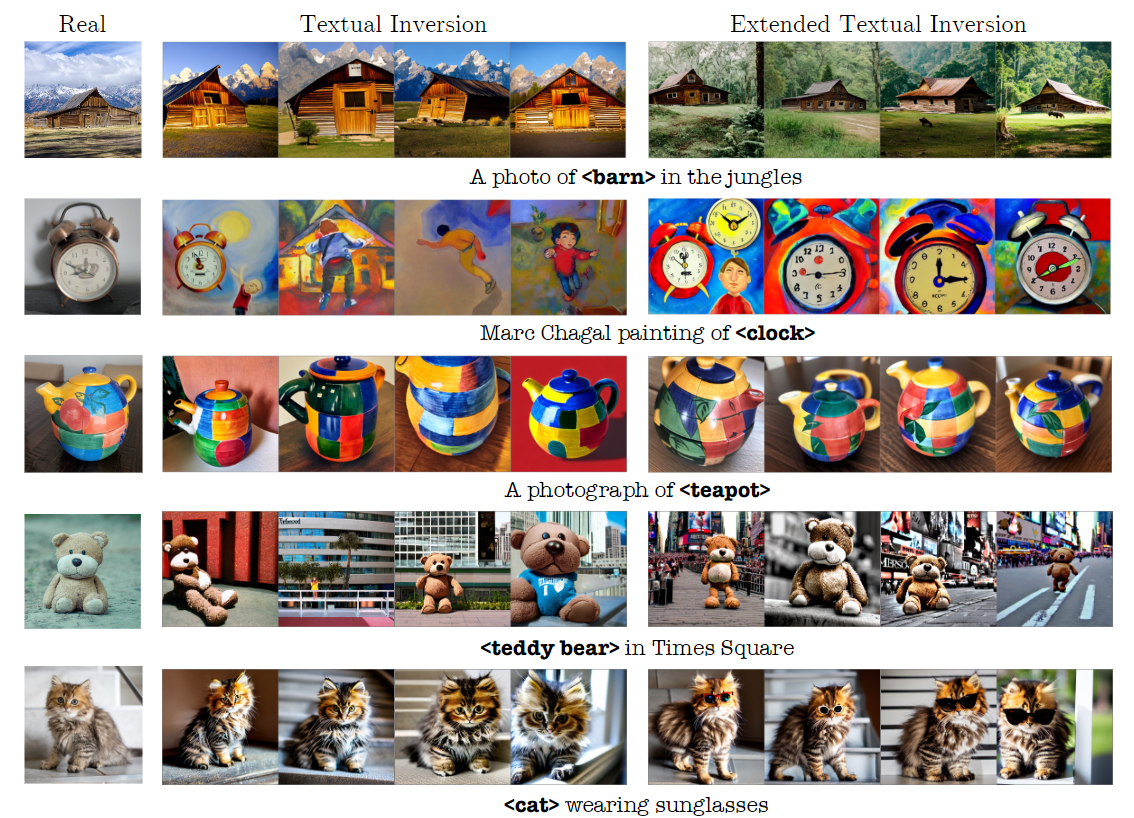

The Extended Textual Inversion also appears to be very effective in a data-hungry setup, when a target subject is represented with a single image.

Figure 10. Single Image Textual Inversion (TI) vs Single Image Extended Textual Inversion (XTI). Column 1: Original concepts.Column 2: TI results. Column 3: XTI results. It can be seen that XTI exhibits superior subject and prompt fidelity and produce plausible results even when trained on a single image.

4.4. Embedding Density

This demonstrates that the proposed approach provides embeddings that are closer to the original distribution, enabling a more natural reconstruction and better editability.

Figure 11. Estimated log-density of the original look-up table token embeddings (gray), embeddings optimized with textual inversion (blue), and embeddings optimized with XTI (orange). Our method demonstrates a more regular representation which is closer to the manifold of natural words

5. Style Mixing Application

Figure 12. Style Mixing in P+. Rows are generated by varying the degree of mixing by adjusting the proportion of layers conditioned on either of the two P+ inversions.

Figure 13. More Style Mixing examples. Top row: Shape source concepts. Left column: Appearance source concepts.

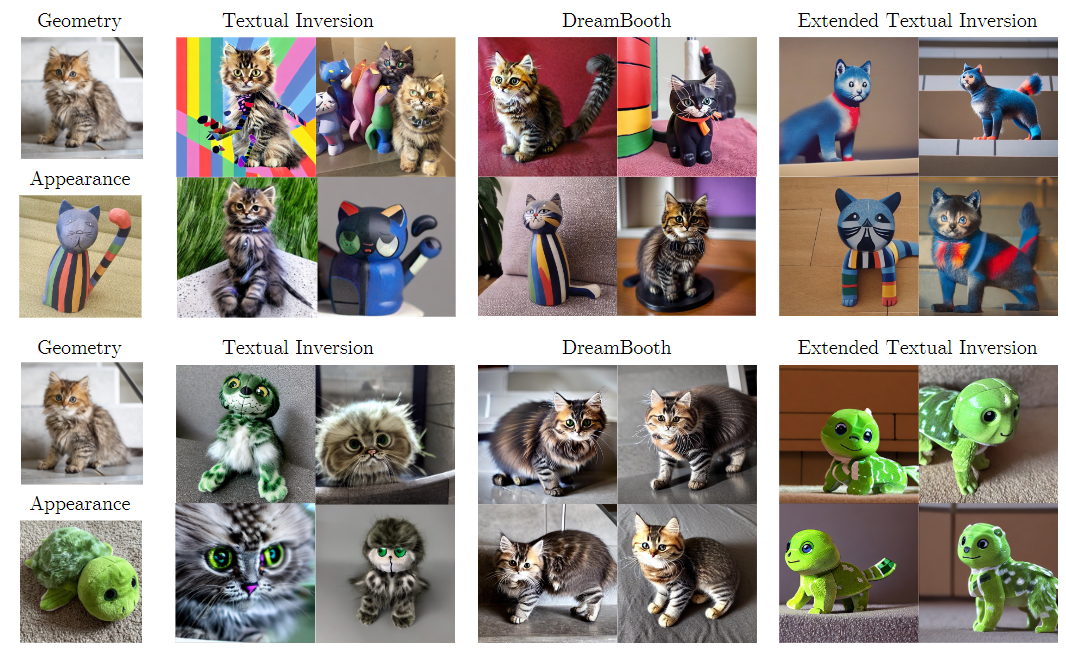

Figure 14. Style Mixing comparison. We compare against Textual Inversion [15] and Dreambooth [45] baselines. For TI we independently invert target subject and target appearance, and generate the images with the sentence “ that looks like”. Prompt variations did not make any remarkable improvements. The style source concept was inverted with style prompts (see [15] for details). DreamBooth was trained with a pair of subjects. Our proposed Extended Textual Inversion clearly outperforms both baselines.

6. Conclusions, Limitations, and Future work

The competence of P+ is demonstrated in the Textual Inversion problem. Our Extended Textual Inversion (XTI) is shown to be more accurate, more expressive, more controllable, and significantly faster. Yet surprisingly, we have not observed any reduction in editability.

The performance of XTI, although impressive, is not flawless. Firstly, it does not perfectly reconstruct the concept in the image, and in that respect, it is still inferior to the reconstruction that can be achieved by fine-tuning the model. Secondly, although XTI is significantly faster than TI, it is a rather slow process. Lastly, the disentanglement among the layers of U-net is not perfect, limiting the degree of control that can be achieved through prompt mixing.

An interesting research avenue is to develop encoders to invert one or a few images into P+, possibly in the spirit of [16], or to study the impact of applying fine-tuning in conjunction of operating in P+.