torch.Tensor is the central class of the package. If you set its .requires_grad attribute to True, it starts to track all operations on it. When you finish your computation, you can call .backward() to have all the gradients computed automatically. The gradient for this tensor is accumulated into its .grad attribute.

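In other words: after creating a tensor, set .requires_grad to True so that operations on it are recorded. Once the computation is finished, call .backward() to backpropagate and obtain all the derivatives automatically. The derivative with respect to an input tensor is stored in that tensor's .grad attribute.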
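Because gradients are accumulated into .grad rather than overwritten, calling .backward() more than once adds the new gradient to the old one. The following is a minimal sketch of that behavior; the tensor `w` and the function `sum(w * w)` are illustrative choices, not part of the original example.

```python
import torch

# A leaf tensor whose operations are tracked (w is a hypothetical example tensor).
w = torch.tensor([1.0, 2.0], requires_grad=True)

# First backward pass: d(sum(w * w))/dw = 2 * w
(w * w).sum().backward()
first = w.grad.clone()

# Second backward pass on a freshly built graph:
# the new gradient is added to the value already stored in w.grad.
(w * w).sum().backward()
second = w.grad.clone()

# Reset the stored gradient in place if accumulation is not wanted.
w.grad.zero_()

print(first, second, w.grad)
```

This is why training loops usually clear the gradients before each backward pass.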

Example:

import torch

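x = torch.ones(2, 2, requires_grad=True)  # track operations on x

print(x)

y = x + 2

z = y * y * 3

out = z.mean()  # scalar, so backward() needs no argument

print(z, out)

out.backward()  # computes d(out)/dx and stores it in x.grad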

print(x.grad)

Output:

tensor([[1., 1.],
        [1., 1.]], requires_grad=True)

tensor([[27., 27.],
        [27., 27.]], grad_fn=<MulBackward0>) tensor(27., grad_fn=<MeanBackward0>)

tensor([[4.5000, 4.5000],
        [4.5000, 4.5000]])

Explanation:

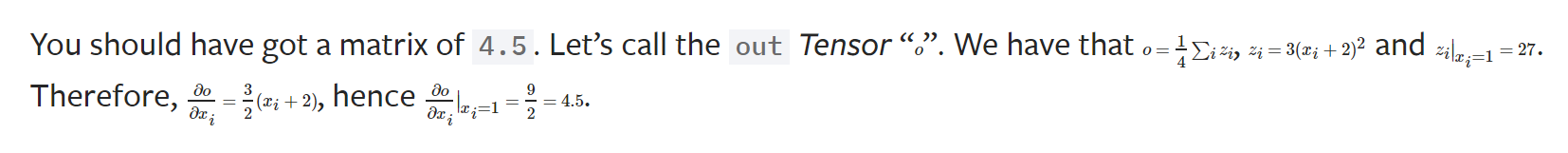

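You should get a matrix in which every entry is 4.5. Call the scalar tensor out "o". From the code, o = (1/4) * sum_i z_i, where z_i = 3 * (x_i + 2)^2. Differentiating with respect to the input x gives do/dx_i = (3/2) * (x_i + 2), which equals 4.5 at x_i = 1.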
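The value 4.5 can also be checked without autograd. The sketch below approximates the derivative with central finite differences in plain Python; the helper names `out_fn` and `numerical_grad` and the step size `eps` are assumptions made for illustration, not PyTorch APIs.

```python
def out_fn(xs):
    """Mean of 3 * (x + 2)**2 over a flat list of inputs (mirrors out = z.mean())."""
    return sum(3 * (x + 2) ** 2 for x in xs) / len(xs)


def numerical_grad(f, xs, eps=1e-6):
    """Central-difference estimate of df/dx_i for every element of xs."""
    grads = []
    for i in range(len(xs)):
        up = xs[:i] + [xs[i] + eps] + xs[i + 1:]
        down = xs[:i] + [xs[i] - eps] + xs[i + 1:]
        grads.append((f(up) - f(down)) / (2 * eps))
    return grads


x_flat = [1.0, 1.0, 1.0, 1.0]  # the 2x2 tensor of ones, flattened
approx = numerical_grad(out_fn, x_flat)
print([round(g, 4) for g in approx])
```

Each estimate should agree with the 4.5 that autograd stores in x.grad, up to floating-point error.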