
Unit 6: How to change the learning algorithm: CartPole

| SUMMARY |

|---|

Estimated time to completion: 2 h

In this unit, we are going to see how to set up the environment in order to be able to use the OpenAI Baselines deepq algorithm. For that, we are going to use a virtual environment. We are going to use the CartPole environment during the Unit.

| END OF SUMMARY |

|---|

OpenAI Baselines is a set of high-quality implementations of reinforcement learning algorithms. These algorithms will make it easier for the research community to replicate, refine, and identify new ideas, and will create good baselines on top of which to build research. According to OpenAI, their DQN implementation and its variants are roughly on par with the scores in published papers. They expect these implementations to be used as a base around which new ideas can be added, and as a tool for comparing a new approach against existing ones.

You can have a look at their work here: https://github.com/openai/baselines

This looks quite interesting, right? But the OpenAI baselines only work for Python 3.5 or higher. This can be a little bit problematic since ROS only supports (completely) Python 2.7. This means that our whole system is based on Python 2.7, too, as the main goal of the Robot Ignite Academy is to teach ROS. Well then… what do we do? It’s quite simple, actually. We’ll use a virtual environment. With this virtual environment, we’ll be able to use Python 3.5 for baselines, without needing to change anything in our system. Sounds great, right?

Let’s see how to set up this virtual environment!

Set up the virtual environment

Download the repo

The first thing you need to do in order to set up the OpenAI baselines is to download them, of course! For that, you can go to the src folder of your workspace and execute the following commands:

Execute in WebShell #1

cd ~/catkin_ws/src

git clone https://github.com/openai/baselines.git

You will now have the baseline repository in your workspace.

Source the virtual environment

As we mentioned previously, the solution to use Python 3 with ROS is to create a virtual environment, where we can install all the Python 3 packages that we need. In the case of Robot Ignite Academy, this virtual environment is already provided to you, so you just have to source it in order to use it.

So, with the virtual environment already created, you can either activate it or deactivate it. To activate it, you will use the following command:

Execute in WebShell #1

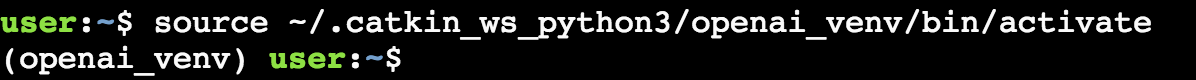

source ~/.catkin_ws_python3/openai_venv/bin/activate

Once the venv is activated, you’ll see that (openai_venv) appears at the left side of the web shell command line.

And for deactivating it:

Execute in WebShell #1

deactivate

When the venv is deactivated, the (openai_venv) prefix will disappear from the command line.
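Besides looking at the shell prompt, you can also ask the Python interpreter itself whether it is running inside a virtual environment. The following is a minimal sketch, not part of the course material: the function names are hypothetical, and it assumes the venv was created with either virtualenv or the standard venv module.

```python
import sys


def in_virtual_environment():
    """Return True if the interpreter is running inside a virtual environment."""
    # Older virtualenv releases set sys.real_prefix. The standard venv module
    # (and newer virtualenv releases) make sys.base_prefix differ from sys.prefix.
    if hasattr(sys, "real_prefix"):
        return True
    return getattr(sys, "base_prefix", sys.prefix) != sys.prefix


def python_is_recent_enough(minimum=(3, 5)):
    """Return True if the running interpreter meets the minimum version."""
    return sys.version_info[:2] >= minimum


if __name__ == "__main__":
    print("Inside a virtual environment:", in_virtual_environment())
    print("Python >= 3.5:", python_is_recent_enough())
```

If you run this with the venv activated, both lines should report True. With the venv deactivated, the system Python 2.7 would be expected to report False for both.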

How to get inside the VENV every time you open a new shell

You will have to do this very often, so here is the command:

Execute in WebShell #1

source ~/.catkin_ws_python3/openai_venv/bin/activate

deepq algorithm

DeepQ is a reinforcement learning algorithm that combines Q-Learning with deep neural networks to let RL work for complex, high-dimensional environments, like video games or robotics. Our goal now will be to make the agent learn in the CartPole environment, as we did in the previous chapter, but using the deepq algorithm instead of qlearn.
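To make the link between Q-Learning and deepq a bit more concrete, here is a minimal sketch of the Q-Learning target value. It is an illustration only, not the baselines implementation: the function names and the gamma and learning_rate values are assumptions chosen for the example. In plain Q-Learning, the target is used to update an entry of a table. In a deep Q-network, roughly the same target is used as the value that the neural network is trained to predict.

```python
def q_learning_target(reward, next_q_values, done, gamma=0.99):
    """Value that Q(state, action) is pushed toward after one transition."""
    if done:
        # No future reward once the episode has ended.
        return reward
    # Immediate reward plus the discounted value of the best next action.
    return reward + gamma * max(next_q_values)


def tabular_update(q_value, target, learning_rate=0.1):
    """Classic Q-Learning step: move the stored value toward the target."""
    return q_value + learning_rate * (target - q_value)


if __name__ == "__main__":
    # Hypothetical transition: reward 1.0, two possible actions in the next state.
    target = q_learning_target(1.0, [0.5, 2.0], done=False, gamma=0.9)
    print("target:", target)
    print("updated Q-value:", tabular_update(0.0, target, learning_rate=0.5))
```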

Also, as you may have learned from the previous chapter, (ideally) you should be able to use the deepq algorithm just by creating a new training script for it, without the need to modify anything in the existing environment structure. Let’s focus on creating this training script for deepq then.

Inside the baselines package you’ve just downloaded, you will see that you have different folders, each one associated with a different learning algorithm. So, inside the deepq folder, you’ll find all the files that are related to this particular learning algorithm. But, as I’ve said before, let’s focus on the training script. Inside the experiments folder, you’ll find a file that is called train_cartpole.py. This is the training script for the deepq algorithm.

As you can see, it’s quite simple. Let’s have a look at it.

| train_cartpole.py |

|---|

import gym

from baselines import deepq


def callback(lcl, _glb):
    # stop training if reward exceeds 199
    is_solved = lcl['t'] > 100 and sum(lcl['episode_rewards'][-101:-1]) / 100 >= 199
    return is_solved


def main():
    env = gym.make("CartPole-v0")
    act = deepq.learn(
        env,
        network='mlp',
        lr=1e-3,
        total_timesteps=100000,
        buffer_size=50000,
        exploration_fraction=0.1,
        exploration_final_eps=0.02,
        print_freq=10,
        callback=callback
    )
    print("Saving model to cartpole_model.pkl")
    act.save("cartpole_model.pkl")


if __name__ == '__main__':
    main()

| train_cartpole.py |

|---|

Let’s analyze a little bit of the above code.

Code Analysis

First of all, in the imports section, we can see that we are importing the deepq algorithm from the baselines module:

from baselines import deepq

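Next, we have a callback function. This function is used by deepq in order to know when to stop the training. deepq calls it during training, passing in its local and global variables, and stops as soon as the function returns True. Here, it returns True once more than 100 timesteps have elapsed and the mean reward of the last 100 completed episodes is at least 199. When that happens, we consider that the agent has finished learning.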

def callback(lcl, _glb):
    # stop training if reward exceeds 199
    is_solved = lcl['t'] > 100 and sum(lcl['episode_rewards'][-101:-1]) / 100 >= 199
    return is_solved
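To see how this stopping condition behaves, you can call the callback by hand. In the sketch below, the callback is copied from the script, while the three dictionaries are hypothetical snapshots of the variables that deepq would pass in. Only the 't' and 'episode_rewards' keys are used, and the last entry of 'episode_rewards' stands for the episode still in progress.

```python
def callback(lcl, _glb):
    # stop training if reward exceeds 199
    is_solved = lcl['t'] > 100 and sum(lcl['episode_rewards'][-101:-1]) / 100 >= 199
    return is_solved


# Hypothetical snapshots of deepq's local variables (made-up values).
early = {'t': 50, 'episode_rewards': [200.0] * 101}
struggling = {'t': 5000, 'episode_rewards': [20.0] * 150}
solved = {'t': 30000, 'episode_rewards': [20.0] * 50 + [200.0] * 100 + [0.0]}

for name, snapshot in [('early', early), ('struggling', struggling), ('solved', solved)]:
    print(name, callback(snapshot, {}))
# early False       (not enough timesteps yet)
# struggling False  (mean of the last 100 completed episodes is 20)
# solved True       (mean of the last 100 completed episodes is 200)
```

Note that the slice [-101:-1] skips the last entry, so the episode in progress never counts toward the mean.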

Next, we have the main code. We can see the function where deepq is actually learning here.

act = deepq.learn(
    env,
    network='mlp',
    lr=1e-3,
    total_timesteps=100000,
    buffer_size=50000,
    exploration_fraction=0.1,
    exploration_final_eps=0.02,
    print_freq=10,
    callback=callback
)

Here, we are calling the learn() function of deepq, passing it the environment we have just initialized and some other parameters that the deepq algorithm needs in order to learn. As you can see, we are also indicating the callback function to call.
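Two of these parameters, exploration_fraction and exploration_final_eps, control how the exploration rate (epsilon) decays over time. The sketch below shows how they interact with total_timesteps, assuming epsilon is annealed linearly from 1.0 down to exploration_final_eps during the first exploration_fraction of the training. The function name exploration_rate and the initial_eps parameter are hypothetical and only used for illustration.

```python
def exploration_rate(t, total_timesteps=100000, exploration_fraction=0.1,
                     exploration_final_eps=0.02, initial_eps=1.0):
    """Probability of taking a random action at timestep t (linear decay)."""
    # Number of timesteps over which epsilon is annealed.
    decay_steps = int(exploration_fraction * total_timesteps)
    # Progress through the decay phase, capped at 1.0 once it is over.
    fraction = min(float(t) / decay_steps, 1.0)
    return initial_eps + fraction * (exploration_final_eps - initial_eps)


if __name__ == "__main__":
    for t in (0, 5000, 10000, 50000):
        print(t, round(exploration_rate(t), 2))
```

With the values used in the script, epsilon would reach 0.02 after 10000 timesteps and stay there for the remaining 90000.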

Once deepq finishes learning, the script saves the trained model to a file using the following line of code:

act.save("cartpole_model.pkl")

And that’s all! Quite simple, right? But, of course, this won’t work for our case. Can you guess why?

Well, it’s because this training script is not connected to either ROS or Gazebo. This means that it’s not connected to the whole environments structure that you learned about in the previous unit. So, our main work now will be to connect this deepq training script to our environments structure. Let’s go for it!

| Exercise 6.1 |

|---|

a) The first thing you’ll need to do is import the environment you want to use for learning. In this case, we’re going to use the CartPole environment. So, add the following to the imports of your code:

from openai_ros.task_envs.cartpole_stay_up import stay_up

b) Also, in order to be able to use ROS with Python, you’ll need to import the rospy module.

import rospy

c) Now, as always when working with ROS, you’ll need to initiate a new ROS node. This will be done at the beginning of the main code. You can add the following line there:

rospy.init_node('cartpole_training', anonymous=True, log_level=rospy.WARN)

d) Finally, we will initialize our CartPole environment so that the deepq algorithm uses it for learning. You can add the following code below the ROS node initialization.

env = gym.make("CartPoleStayUp-v0")

e) And that’s it! Our training script is ready to start working. Execute the training script now and see if your agent starts learning.

python train_cartpole.py

**NOTE: Bear in mind that, in order to train your model, you will need to have the training parameters loaded. If your parameters are not loaded, you will get errors when launching the training. You can load the same parameters you used in the Qlearn example, in Unit 1.**

| End of Exercise 6.1 |

|---|

While the deepq algorithm learns, you’ll see a status box printed periodically in the console.

This box is telling you how the training is going. If your agent is learning, you should see how the mean 100 episode reward value is increasing. This box basically tells you:

- % time spent exploring: The percentage of actions the agent currently chooses at random in order to explore, that is, without using the knowledge it already has.

- episodes: The accumulated number of episodes.

- mean 100 episode reward: The mean of the total reward per episode, computed over the last 100 completed episodes.

- steps: The accumulated number of timesteps.
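As a rough illustration of how two of these values can be derived, here is a minimal sketch. The function names are hypothetical, and the handling of the case with no completed episodes is an assumption made for the example. The slice mirrors the one used in the callback function of the training script.

```python
def mean_100_episode_reward(episode_rewards):
    """Mean total reward of the last 100 completed episodes."""
    # The last entry belongs to the episode in progress, so it is skipped.
    completed = episode_rewards[-101:-1]
    if not completed:
        # Assumption for this sketch: report 0.0 before any episode finishes.
        return 0.0
    return round(sum(completed) / len(completed), 1)


def percent_time_exploring(epsilon):
    """Exploration rate expressed as a whole percentage."""
    return int(100 * epsilon)


if __name__ == "__main__":
    # Hypothetical reward history: 100 poor episodes, then 100 better ones.
    rewards = [10.0] * 100 + [50.0] * 100 + [3.0]
    print("mean 100 episode reward:", mean_100_episode_reward(rewards))
    print("% time spent exploring:", percent_time_exploring(0.02))
```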

Using the saved model

As you have already seen, once the training has finished, the trained model is saved into a file named cartpole_model.pkl. This file, then, can be used to decide which actions to take so that the CartPole achieves its goal (in this case, staying upright).

In order to use this saved model, you can use a very simple Python script like the one provided by the baselines package. You can find this script in the same folder as the one for training (baselines/deepq/experiments). Here you can have a look at the file, which is named enjoy_cartpole.py.

| enjoy_cartpole.py |

|---|

import gym

from baselines import deepq


def main():
    env = gym.make("CartPole-v0")
    act = deepq.learn(env, network='mlp', total_timesteps=0, load_path="cartpole_model.pkl")

    while True:
        obs, done = env.reset(), False
        episode_rew = 0
        while not done:
            env.render()
            obs, rew, done, _ = env.step(act(obs[None])[0])
            episode_rew += rew
        print("Episode reward", episode_rew)


if __name__ == '__main__':
    main()

| enjoy_cartpole.py |

|---|

As you can see, the code is very simple. Basically, it loads the saved (and trained) model into the variable act:

act = deepq.learn(env, network='mlp', total_timesteps=0, load_path="cartpole_model.pkl")

And then, it uses it to decide what to do.

obs, rew, done, _ = env.step(act(obs[None])[0])

There are a couple of things we will need to modify in this code, though, in order to make it work. As you already did in the previous exercise, you will need to create the connections to ROS through your own environment (CartPoleStayUp-v0). So, as you should already basically know what to do, I’ll let you do it in the following exercise!

| Exercise 6.2 |

|---|

a) Modify the previous script so that it now uses your own environment and connects to the simulation.

**NOTE: Besides the modifications you already know, you’ll need to make a few extra changes for the code to work. First, replace the line where the step function is called with the following line:**

obs, rew, done, _ = env.step(act(np.array(obs)[None])[0])

Also, you will need to import the numpy module. You can use the following line:

import numpy as np

Finally, you will also need to comment out the line where the environment is rendered.

#env.render()

b) Execute the modified script and visualize what your CartPole has learned!

| End of Exercise 6.2 |

|---|
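If you are wondering why the np.array(obs)[None] conversion is needed in Exercise 6.2, the following sketch may help. It assumes that the environment returns its observation as a plain Python list, which is an assumption and not something stated above. The observation values are made up for the example.

```python
import numpy as np

# Hypothetical observation with 4 values, returned as a plain Python list.
obs = [0.01, -0.02, 0.03, 0.04]

# Indexing a list with None is not allowed, so obs[None] fails.
try:
    obs[None]
    list_indexing_works = True
except TypeError:
    list_indexing_works = False

# Converting to a NumPy array first makes [None] add a leading batch axis.
batch = np.array(obs)[None]

print(list_indexing_works)  # False
print(batch.shape)          # (1, 4)
```

The act function is called here with a batch of observations, so a single observation is wrapped into a batch of size one.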

Congratulations! You are now capable of creating your own environments structure and making it learn using the deepq algorithm from baselines.