文章目录

- Hopper Robot

- Tasks to accomplish

- 1. Create all the environments needed in order to be able to train the Hopper robot. First, generate the Robot Environment. You can start with the below templates.

- 2. Generate the Task Environment. You can start with the below template.

- 3. Create a training script that will use the Qlearn algorithm in order to train the Hopper robot.

- 4. Create a training script that will use the deepq algorithm in order to train the Hopper robot.

- 5. Compare the results between the two learning algorithms, and check which one perpforms better. Try to improve the larning script.

Unit 4: Project: Training a Hopper robot

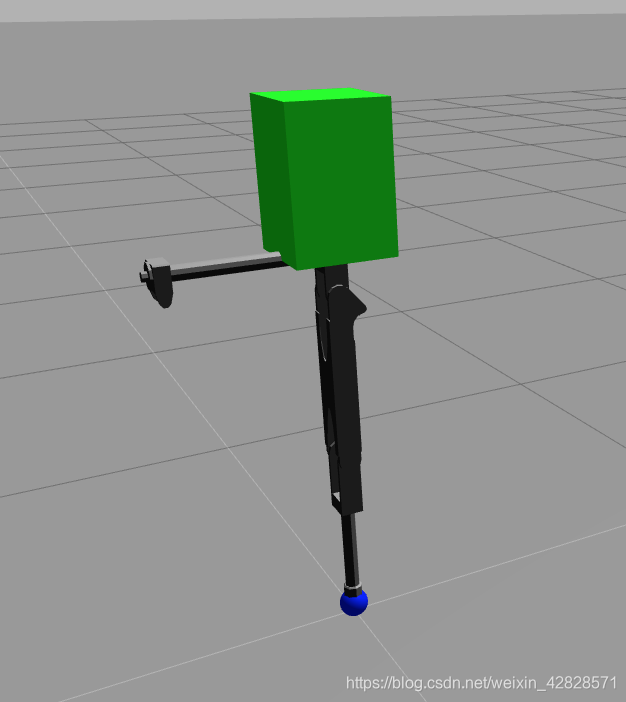

Hopper Robot

For this part of the project, you will use the Hopper robot. The Hopper robot is a one-legged robot whose biggest challenge is being able to stand up.

Tasks to accomplish

1. Create all the environments needed in order to be able to train the Hopper robot. First, generate the Robot Environment. You can start with the below templates.

| hopper_env.py |

|---|

from openai_ros import robot_gazebo_env

class HopperEnv(robot_gazebo_env.RobotGazeboEnv):

"""Superclass for all Robot environments.

"""

def __init__(self):

"""Initializes a new Robot environment.

"""

# Variables that we give through the constructor.

# Internal Vars

self.controllers_list = ['my_robot_controller1','my_robot_controller2', ..., 'my_robot_controllerX']

self.robot_name_space = "my_robot_namespace"

reset_controls_bool = True or False

# We launch the init function of the Parent Class robot_gazebo_env.RobotGazeboEnv

super(MyRobotEnv, self).__init__(controllers_list=self.controllers_list,

robot_name_space=self.robot_name_space,

reset_controls=reset_controls_bool)

# Methods needed by the RobotGazeboEnv

# ----------------------------

def _check_all_systems_ready(self):

"""

Checks that all the sensors, publishers and other simulation systems are

operational.

"""

# TODO

return True

# Methods that the TrainingEnvironment will need to define here as virtual

# because they will be used in RobotGazeboEnv GrandParentClass and defined in the

# TrainingEnvironment.

# ----------------------------

def _set_init_pose(self):

"""Sets the Robot in its init pose

"""

raise NotImplementedError()

def _init_env_variables(self):

"""Inits variables needed to be initialized each time we reset at the start

of an episode.

"""

raise NotImplementedError()

def _compute_reward(self, observations, done):

"""Calculates the reward to give based on the observations given.

"""

raise NotImplementedError()

def _set_action(self, action):

"""Applies the given action to the simulation.

"""

raise NotImplementedError()

def _get_obs(self):

raise NotImplementedError()

def _is_done(self, observations):

"""Checks if episode done based on observations given.

"""

raise NotImplementedError()

# Methods that the TrainingEnvironment will need.

# ----------------------------

2. Generate the Task Environment. You can start with the below template.

| hopper_stay_up_env.py |

|---|

from gym import spaces

import hopper_env

from gym.envs.registration import register

import rospy

# The path is __init__.py of openai_ros, where we import the MovingCubeOneDiskWalkEnv directly

timestep_limit_per_episode = 1000 # Can be any Value

register(

id='HopperStayUp-v0',

entry_point='hopper_stay_up_env:HopperStayUpEnv',

timestep_limit=timestep_limit_per_episode,

)

class HopperStayUpEnv(hopper_env.HopperEnv):

def __init__(self):

# Only variable needed to be set here

number_actions = rospy.get_param('/my_robot_namespace/n_actions')

self.action_space = spaces.Discrete(number_actions)

# This is the most common case of Box observation type

high = numpy.array([

obs1_max_value,

obs12_max_value,

...

obsN_max_value

])

self.observation_space = spaces.Box(-high, high)

# Variables that we retrieve through the param server, loded when launch training launch.

# Here we will add any init functions prior to starting the MyRobotEnv

super(MyTrainingEnv, self).__init__()

def _set_init_pose(self):

"""Sets the Robot in its init pose

"""

# TODO

def _init_env_variables(self):

"""

Inits variables needed to be initialised each time we reset at the start

of an episode.

:return:

"""

# TODO

def _set_action(self, action):

"""

Move the robot based on the action variable given

"""

# TODO: Move robot

def _get_obs(self):

"""

Here we define what sensor data of our robots observations

To know which Variables we have access to, we need to read the

MyRobotEnv API DOCS

:return: observations

"""

# TODO

return observations

def _is_done(self, observations):

"""

Decide if episode is done based on the observations

"""

# TODO

return done

def _compute_reward(self, observations, done):

"""

Return the reward based on the observations given

"""

# TODO

return reward

# Internal TaskEnv Methods