Chain Rule(链式法则)

Case 1

如果有:

y=g(x) z=h(y)

那么“变量影响链”就有:

Δx→ΔyΔz

因此就有:

dxdz=dydzdxdy

Case 2

如果有:

y=g(s) y=h(s) z=k(x,y)

那么“变量影响链”就有:

因此就有:

dsdz=∂x∂zdsdx+∂y∂zdsdy

Backpropagation(反向传播算法)——实例讲解

定义

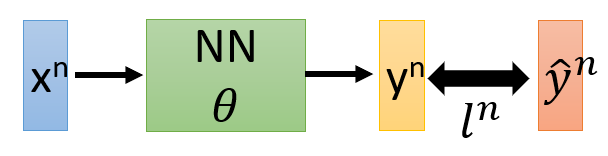

反向传播(英语:Backpropagation,缩写为BP)是“误差反向传播”的简称,是一种与最优化方法(如梯度下降法)结合使用的,用来训练人工神经网络的常见方法。 该方法对网络中所有权重计算损失函数的梯度。 这个梯度会反馈给最优化方法,用来更新权值以最小化损失函数。(误差的反向传播)——维基百科

说明

假设现在有N个样本数据,那么实际上损失函数可以表示为:

L(θ)=n=1∑Nln(θ)

其中

θ为需要学习的参数。

那么现在

ω对

L进行偏微分,实际上是对每个样本数据的损失函数

l(θ)进行偏微分后再求和:

∂ω∂L(θ)=n=1∑N∂ω∂ln(θ)

用代数表示为:

z1=ω11x1+ω12x2+b1 a1=σ(z1)

z2=ω21x1+ω22x2+b2 a2=σ(z2)

z3=ω31a1+ω32a2+b3 a3=σ(z3)

z4=ω41a1+ω42a2+b4 a4=σ(z4)

z5=ω51a3+ω52a4+b5 y1=σ(z5)

z6=ω61a3+ω62a4+b6 y2=σ(z6)

那么我们实际要计算的是:

∂ω∂l=∂ω∂z∂z∂l

即分别计算出

∂ω∂z和

∂z∂l:

Step 1:Forward Pass

这个过程实际上是计算Neural Network的所有

∂ωi1∂zi和

∂ωi2∂zi,即:

∂ω11∂z1=x1 ∂ω12∂z1=x2

∂ω21∂z2=x1 ∂ω22∂z2=x2

∂ω31∂z3=a1 ∂ω32∂z3=a2

∂ω41∂z4=a1 ∂ω42∂z4=a2

∂ω51∂z5=a3 ∂ω52∂z5=a4

∂ω61∂z6=a3 ∂ω62∂z6=a4

如果用具体数值表示的话,那就是下图所示:

因为这个过程必须从输入

x1、

x2开始到输出,否则无法计算出之后的

a1、

a2、

a3、

a4,所以这个过程叫做Forward Pass。

Step 2:Backward Pass

这个过程是计算

∂z∂l的过程,如果我们按照Step 1中的过程来计算的话,就会有如下过程:

∂z1∂l=∂z1∂a1∂a1∂l

其中,

∂z1∂a1=σ′(z1)

∂a1∂l=∂a1∂z3∂z3∂l+∂a1∂z4∂z4∂l=ω31∂z3∂l+ω41∂z4∂l

即:

∂z1∂l=σ′(z1)(ω31∂z3∂l+ω41∂z4∂l)

同理,有:

∂z2∂l=σ′(z2)(ω32∂z3∂l+ω42∂z4∂l)

因此,如果我们要计算出

∂z1∂l和

∂z2∂l,我们还要先计算

∂z3∂l和

∂z4∂l,可以想象出来,我们再计算

∂z3∂l和

∂z4∂l的过程中,肯定还要计算

∂z5∂l和

∂z6∂l…

没错,这是一个递归过程!这还只是个比较简单的例子,如果是比较复杂的深度神经网络的话,时间复杂度必然是很高的,所以说,不能用Forward Pass的方法计算

∂z∂l!

(重点来了!!!)

现在,如果你仔细端详

∂z1∂l=σ′(z1)(ω31∂z3∂l+ω41∂z4∂l) 这个式子,你会发现,式子的形式是不是很像神经元的形式:

∂z3∂l和

∂z4∂l作为输入,

ω31和

ω41作为权重,而

σ′(z1)可以看作是一个数值放大器,放大了

ω31∂z3∂l+ω41∂z4∂l的结果!如下图:

因此,计算

∂z∂l的过程可以用如下图来表示:

这种方法就是Backward Pass,这样就不会出现刚才所说的递归了!

summary

通过Forward Pass计算得到的

∂ω∂z以及Backward Pass计算得到的

∂z∂l,就可以得到

∂ω∂l

至此,“反向传播算法”及公式推导的过程总算是结束啦!我觉得这种思路还是比较好接受的,毕竟是受了“大木博士”的熏陶哈哈。