Machine learning system design

Prioritizing what to work on: Spam classification example

在设计复杂的机器学习系统时将会遇到的主要问题,以及给出一些如何巧妙构建一个复杂的机器学习系统的建议。

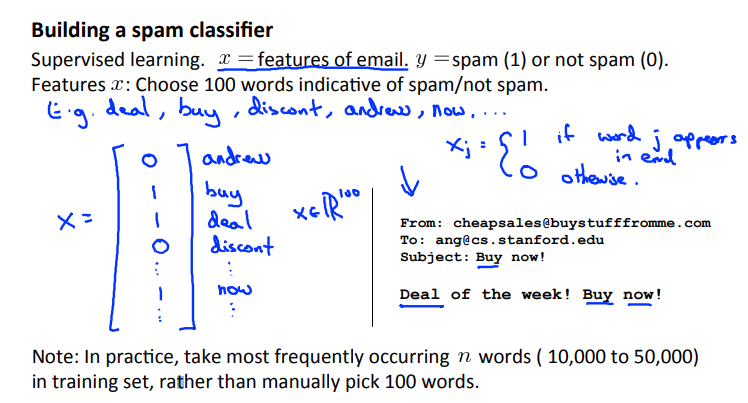

Building a spam classifier 垃圾邮件分类器

思想:通过分词,将一封邮件转化为一个向量,从而将实际生活问题转化为了数学问题。

具体:\(x\)是对应单词是否出现:出现为1,不出现为0;\(y\)表示邮件是否为垃圾邮件,是为1,否为0。

注意:实际应用中,我们更多情况下是选择最常出现的\(n\)个单词,而不是手动挑选100个单词。

How to spend your time to make it have low error? 如何使你的垃圾邮件分类器具有较高准确度?

- Collect lots of data 收集大量数据

- E.g. "honeypot" project.

- E.g. "honeypot" project.

- Develop sophisticated features based on email routing information (from email header). 用更复杂的特征变量。如:邮件的路径信息(通常出现在标题中)

- Develop sophisticated features for message body, e.g. should "discount" and "discounts" be treated as the same word? How about "deal" and "Dealer"? Features about punctuation? 从邮件的正文出发寻找复杂的特征。如:"discount" 和 "discounts"是否该视为同一个单词……

- Develop sophisticated algotithm to detece misspellings (e.g. m0rtgage, med1cine, w4tches.) 构造更加复杂的算法来检测或纠正那些故意的拼写错误,如:"m0rtgage", "med1cine", "w4tches"。

Error analysis

Recommended approach 推荐的方法

- Start with a simple algorithm that you can implement quickly. Implement it and test it on your cross-validation data. 快速实现一个简单的算法,通过交叉验证集进行验证。

- Plot learning curves to decide if more data, more features, ect. are likely to help. 画出学习曲线,观察算法需要做哪方面的改进。

- Error analysis: Manually examine the examples (in cross validation set) that your algorithm made erroes on. See if you spot any systematic trend in what type of examples it is making errors on. 误差分析:手动挑出交叉验证集中计算结果错误的数据,看它们可能犯了什么错误。

例子:

\(m_{CV} = 500\) examples in cross validation set. Algorithm misclassifies 100 emails. 一个垃圾邮件分类器,它的交叉验证集中有500个实例,它错误分类了一百个交叉验证实例。

Manually examine the 100 errors, and categorize them based on: 人工检查这100个错误实例,并将它们分类

- What type of email it is. 这是什么类型的邮件

- What cues (features) you think would have helped the algorithm classify them correctly. 哪些变量能帮助这个算法来正确分类它们

如果区分后发现算法总是在某一方面表现的很差/对于某种特征处理的不好,那就重点处理这种情况。

The importance of numerical evaluation

Should discount/discounts/discounted/discounting be treated as the same word? Can use "stemming" software (E.g. "Porter stemmer")? --> university, universe

假设我们正在决定是否应该将这些单词视为等同,一种方法是检查这些单词开头几个字母。在NLP中,这种方法是通过一种叫词干提取的软件实现的。因为这种方法只会检测单词的前几个字母,所以也会产生相应的问题,如将"university, universe"这两个单词视为同一个单词。

Error analysis may not be helpful for deciding if this is likely to improve performance. Only solution is to try it and see if it works.

误差分析也不能帮助你决定词干提取是否是一个好方法。最好的方法是着手一试并看它表现如何。

Need numerical evalution (e.g., cross validation error) of algorithm's performance with and without stemming.

需要量化的数值评估(如交叉验证集的误差):使用词干提取和不使用词干提取算法的误差分别是多少。

其它方面也同样适用。如,是否要区分单词大小写。

Error metrics for skewed classes

偏斜类问题的错误指标

Cancer classification example

Train logistic regression model \(h_\theta(x)\). (\(y = 1\) if cancer, \(y = 0\) otherwise) 训练逻辑回归模型来预测病人是否得了癌症

Find that you got 1% error on test set. (99% correct diagnoses) 用测试集检查后发现它只有1%的错误

Only 0.50% of patients have cancer. 但是在测试集中只有0.50%的患者真正得了癌症,此时用下面的代码将会得出预测误差更小的结果,但它是一个非机器学习代码。

function y = predictCancer(x)

y = 0; %ignore x!

return当样本中正例和负例比率非常接近于一个极端时,通过总是预测\(y = 1\)或\(y = 0\),算法可能表现得非常好,这种情况就叫做偏斜类。

总结:假设有个算法,精确度是99.2%,对算法进行一定改动后得到了99.5%的精确度,但这是否就可以认为是算法的一个提升?用某个实数来作为评估度量值的一个好处就是,它可以帮助我们迅速决定是否需要对算法做出一些改进。而使用分类误差或分类精确度来作为评估度量,可能会产生上面出现的那种局面,所以当遇到这样一个偏斜类时,我们希望有一个不同的误差度量值。

Precision/Recall 查准率/召回率

\(y = 1\) in presence of rare class that we want to detect

Precision

Of all patients where we predicted \(y = 1\), what fraction actually has cancer? 我们预测得病的人中,有多少是真正得病的?

\[ Precision = \frac{True\ positive}{\#Predicted\ positive} = \frac{True\ positive}{True\ positive + False\ positive} \tag1 \]Recall

Of all patients that actually have cancer, what fraction did we correctly detece as having cancer? 真正得病的人中,我们预测出了多少?

\[ Recall = \frac{True\ positive}{\#Actual\ positive} = \frac{True\ positive}{True\ positive + False\ negative} \tag2 \]

此例列表结果如下

| Actual class 1 | Actual class 0 | |

|---|---|---|

| Predicted class 1 | True positive | False positive |

| Predicted class 0 | False negative | True negative |

备注:在定义查准率和召回率时,习惯性的使用\(y = 1\)表示出现的很少的类,如检测癌症的例子。

Trading off precision and recall

Relation between precision and recall

Logistic regression: \(0 \leq h_\theta(x) \leq 1\)

Predict \(1\) if \(h_\theta(x) \ge 0.5\)

Predict \(0\) if \(h_\theta(x) \lt 0.5\)

Suppose we want to predict \(y = 1\) (cancer) only if very confident.

--> High precision, low recall.

--> 将0.5改为0.7,将会提高查准率,但是会降低召回率。

Suppose we want to avoid missing too many cases of cancer (avoid false negatives).

--> High recall, low precision.

--> 将0.5改为0.3,将会提高召回率,但是会降低查准率。

More generally: Predict \(1\) if \(h_\theta(x) \ge\) thershold. 当\(h_\theta(x)\)大于某个临界值时就可预测值为1,临界值取决于我们的目的(提高查准率还是召回率)。

\(F_1\) Score (F score)

How to compare precision/recall numbers? 我们该如何比较不同的查准率和召回率呢?

| Precision(P) | Recall(R) | Average | \(F_1\) Score | |

|---|---|---|---|---|

| Algorithm 1 | 0.5 | 0.4 | 0.45 | 0.444 |

| Algorithm 2 | 0.7 | 0.1 | 0.4 | 0.175 |

| Algorithm 3 | 0.02 | 1.0 | 0.51 | 0.0392 |

法一: Average: \(\frac{P+R}{2}\),不一定是个好的方法,如上面的例子中Algorithm 3效果并不好,但它通过此方法得到的值是最高的。

法二: \(F_1\) Score: \(2\frac{PR}{P+R}\),此方法会给查找率和召回率中较低的值一个更高的权重,因此此方法要想有个较大的权值,必须两者相差不大。

Data for machine learning

Designing a high accutancy learning system

真正能够提高算法性能的,是你能够给予一个算法大量的训练数据。

"It's not who has the best algorithm that wins. It's who has the most data."

这在很多情况下是适用的。

Large data rationale 大数据基础

Assume feature \(x \in R^{(n+1)}\) has sufficient information to predict \(y\) accurately. 在特征值有足够的信息来准确预测\(y\)的情况下。

- Example: For breakfast I ate ____ eggs. 此时可给出正确答案,因答案唯一。

- Counterexample: Predict housing price from only size (\(feet^2\)) and no other features. 这种情况下无法给出精确的答案,除了房子尺寸还需考虑其他特征。

- Useful test: Given the input \(x\), can a human expert confidently predict \(y\)? 对于要预测的问题,如果是个相应领域的人类专家,那他是否能准确预测\(y\)的值呢?

分析:

Use a learning algorithm with many parameters (e.g. logistic regression/linear regression with many features; neural network with many hidden units).

使用多参数的学习算法(如:有很多参数的逻辑回归/线性回归算法;有许多隐藏单元的神经网络),此时会有低偏差(low bias) --> \(J_{train}(\Theta)\)将会很小。

Use a very large training set (unlikely to overfit)

使用很大的训练集(不太可能过拟合), --> \(J_{train}(\Theta) \approx J_{test}(\Theta)\)。

上面两者结合 --> \(J_{test}(\Theta)\)将会很小。