Lab environment:

Operating system: RHEL 6.5

server1 IP: 172.25.80.1

server2 IP: 172.25.80.2

Client host IP: 172.25.80.250

1. Configure the yum repositories, including the add-on channels

vim /etc/yum.repos.d/rhel-source.repo

[rhel-source]

name=Red Hat Enterprise Linux $releasever - $basearch - Source

baseurl=http://172.25.80.250/rhel6.5

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.80.250/rhel6.5/HighAvailability

gpgcheck=0

[LoadBalancer]

name=LoadBalancer

baseurl=http://172.25.80.250/rhel6.5/LoadBalancer

gpgcheck=0

[ResilientStorage]

name=ResilientStorage

baseurl=http://172.25.80.250/rhel6.5/ResilientStorage

gpgcheck=0

[ScalableFileSystem]

name=ScalableFileSystem

baseurl=http://172.25.80.250/rhel6.5/ScalableFileSystem

gpgcheck=0
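The four add-on stanzas above differ only in the repository name, so they could also be generated from a loop. This is a minimal sketch, assuming the same mirror URL as in this article; `gen_addon_repos` and `BASE` are hypothetical names introduced here for illustration.

```shell
#!/bin/sh
# Sketch: print the four add-on repo stanzas from a loop.
# BASE is the lab mirror used in this article; adjust it for your environment.
BASE="http://172.25.80.250/rhel6.5"

# gen_addon_repos is a hypothetical helper, not a yum command.
gen_addon_repos() {
    for repo in HighAvailability LoadBalancer ResilientStorage ScalableFileSystem; do
        printf '[%s]\nname=%s\nbaseurl=%s/%s\ngpgcheck=0\n\n' \
            "$repo" "$repo" "$BASE" "$repo"
    done
}

gen_addon_repos
```

The output can be appended to /etc/yum.repos.d/rhel-source.repo after checking it by eye.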

[root@server1 ~]# yum clean all

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Cleaning repos: HighAvailability LoadBalancer ResilientStorage

: ScalableFileSystem rhel-source

Cleaning up Everything

[root@server1 ~]# yum repolist

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

HighAvailability | 3.9 kB 00:00

HighAvailability/primary_db | 43 kB 00:00

LoadBalancer | 3.9 kB 00:00

LoadBalancer/primary_db | 7.0 kB 00:00

ResilientStorage | 3.9 kB 00:00

ResilientStorage/primary_db | 47 kB 00:00

ScalableFileSystem | 3.9 kB 00:00

ScalableFileSystem/primary_db | 6.8 kB 00:00

rhel-source | 3.9 kB 00:00

rhel-source/primary_db | 3.1 MB 00:00

repo id repo name status

HighAvailability HighAvailability 56

LoadBalancer LoadBalancer 4

ResilientStorage ResilientStorage 62

ScalableFileSystem ScalableFileSystem 7

rhel-source Red Hat Enterprise Linux 6Server - x86_64 - Source 3,690

repolist: 3,819

2. Install the required software on server1 and server2

[root@server1 ~]# yum install pacemaker pssh-2.3.1-2.1.x86_64.rpm crmsh-1.2.6-0.rc2.2.1.x86_64.rpm httpd -y

vim /var/www/html/index.html

server1

[root@server2 ~]# yum install pacemaker pssh-2.3.1-2.1.x86_64.rpm crmsh-1.2.6-0.rc2.2.1.x86_64.rpm httpd -y

vim /var/www/html/index.html

server2
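Each node gets a different index.html so that it is obvious later which node is serving the page. Instead of editing the file by hand, the node name could be written automatically. A minimal sketch, assuming the short hostname is an acceptable page body; `write_index` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: write this node's short hostname into index.html.
# write_index is a hypothetical helper; $1 is the document root
# (defaults to the httpd document root used in this article).
write_index() {
    docroot=${1:-/var/www/html}
    uname -n | cut -d. -f1 > "$docroot/index.html"
}

# Example (run as root on each node):
#   write_index /var/www/html
```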

3. Edit the configuration file

cd /etc/corosync/

ls

corosync.conf.example corosync.conf.example.udpu service.d uidgid.d

cp corosync.conf.example corosync.conf

vim corosync.conf

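totem {

version: 2

secauth: off

threads: 0

interface {

ringnumber: 0

# bindnetaddr is normally the network address of the cluster subnet (172.25.80.0 for a /24)

bindnetaddr: 172.25.80.100

mcastaddr: 226.94.1.1

mcastport: 5405

ttl: 1

}

}

# with ver: 0, corosync starts pacemaker itself as a plugin

service {

name: pacemaker

ver: 0

}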
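The corosync.conf man page describes bindnetaddr as a network address, that is, the interface address with the host bits cleared. The following is a minimal sketch of that calculation in plain shell arithmetic, assuming an IPv4 address and a prefix length; `net_addr` is a hypothetical helper and not part of corosync.

```shell
#!/bin/sh
# Sketch: derive the IPv4 network address from an address and a prefix length.
# net_addr is a hypothetical helper, not a corosync tool.
net_addr() {
    ip=$1
    prefix=$2
    # Split the dotted quad into $1..$4.
    old_ifs=$IFS
    IFS=.
    set -- $ip
    IFS=$old_ifs
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    net=$(( addr & mask ))
    printf '%d.%d.%d.%d\n' \
        $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
        $(( (net >> 8) & 255 )) $(( net & 255 ))
}

# The two node addresses of this lab, assuming a /24 netmask:
net_addr 172.25.80.1 24
net_addr 172.25.80.2 24
```

Both calls print 172.25.80.0, which is the value usually placed in bindnetaddr for this subnet.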

Copy the edited configuration file to server2 (172.25.80.2)

[root@server1 corosync]# scp corosync.conf [email protected]:/etc/corosync/

[email protected]'s password:

corosync.conf 100% 483 0.5KB/s 00:00

4. Start the corosync service on both server1 and server2

[root@server1 corosync]# /etc/init.d/corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@server2 ~]# /etc/init.d/corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

5. Configure the cluster resources

On server2:

[root@server2 corosync]# crm

Enter configure to switch to configuration mode

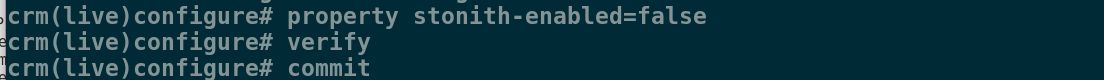

1. Disable the fence device (STONITH)

crm(live)configure# property stonith-enabled=false

crm(live)configure# verify

crm(live)configure# commit

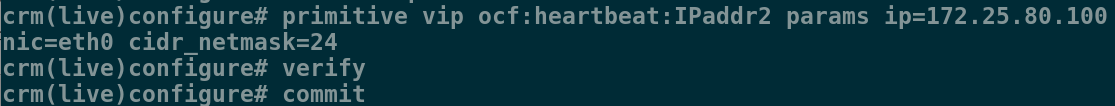

2. Configure the VIP cluster resource first, then the apache service

crm(live)configure# primitive vip ocf:heartbeat:IPaddr2 params ip=172.25.80.100 nic=eth1 cidr_netmask=24

crm(live)configure# verify

crm(live)configure# commit

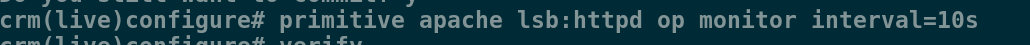

3. Add a resource: the httpd init script

crm(live)configure# primitive apache lsb:httpd op monitor interval=10s

crm(live)configure# verify

crm(live)configure# commit

At this point you can watch the cluster live from server1.

[root@server1 corosync]# crm_mon

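The live monitor shows that the VIP is currently on server2 while httpd is on server1. The two resources are not yet tied together, so the cluster is free to place them on different nodes.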

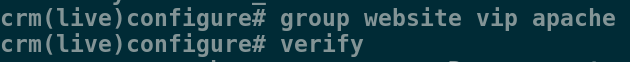

6. Configure a resource group

crm(live)configure# group website vip apache

crm(live)configure# verify

crm(live)configure# commit

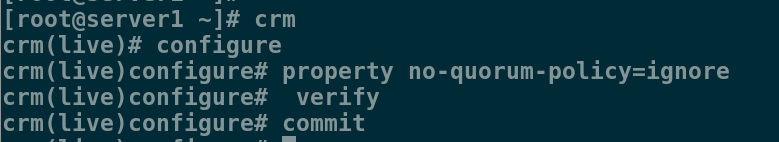

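On server1, tell the cluster to ignore loss of quorum, so that in this two-node cluster the surviving node keeps running the resources:

[root@server1 corosync]# crm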

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# verify

crm(live)configure# commit
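The settings entered interactively in steps 5 and 6 can also be collected in one file, which is easier to review and to reuse. This is a minimal sketch, assuming the same resource names and parameters as above; `write_cluster_cfg` is a hypothetical helper, and the script only writes and prints the file.

```shell
#!/bin/sh
# Sketch: collect the crm settings from steps 5 and 6 into one file.
# write_cluster_cfg is a hypothetical helper; $1 is the output path.
write_cluster_cfg() {
    cat > "$1" <<'EOF'
property stonith-enabled=false
property no-quorum-policy=ignore
primitive vip ocf:heartbeat:IPaddr2 params ip=172.25.80.100 nic=eth1 cidr_netmask=24
primitive apache lsb:httpd op monitor interval=10s
group website vip apache
EOF
}

cfg=$(mktemp)
write_cluster_cfg "$cfg"
cat "$cfg"

# On a cluster node, the file could then be applied with crmsh
# (not executed here, since it needs a running cluster):
#   crm configure load update "$cfg"
```

Disabling STONITH and ignoring quorum is acceptable for a two-node lab like this one; a production cluster would normally keep fencing enabled.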

7. Test