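Problem:

The image size is known (1000×900, width × height). The same camera was calibrated twice, and the two calibrations give different results. Compute how far apart each pixel ends up under the two calibrations, and visualize the result.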
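Written as a formula (the symbols $f_1$, $f_2$ are introduced here only for illustration): if $f_n(u, v) = (u_n, v_n)$ is the corrected pixel position that calibration $n$ assigns to pixel $(u, v)$, the value shown in the error image is

$$
e(u, v) = \left\lVert f_1(u, v) - f_2(u, v) \right\rVert_2 = \sqrt{(u_1 - u_2)^2 + (v_1 - v_2)^2},
$$

evaluated for all $1000 \times 900 = 900\,000$ pixels.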

Implementation with OpenCV's undistortPoints function

- Implementation 1

```cpp
// Fragment: needs the same includes and using-directives as implementation 2,
// plus these globals (e.g. with the values from implementation 2):
//   double image_size[2];                     // width, height = {1000, 900}
//   double intrinsic_1[12], intrinsic_2[12];  // fx, cx, fy, cy, k1, k2, p1, p2, k3, k4, k5, k6
//   double max_error;                         // error (in pixels) shown at full brightness

// Normalized image-plane coordinates -> pixel coordinates (no skew term).
// Defined before use so the compiler sees it in opencv_compute_error().
inline void camera2pixel(const Mat &k, const Point2d &point_cam, Point2d &point_uv) {
    point_uv.x = k.at<double>(0, 0) * point_cam.x + k.at<double>(0, 2);
    point_uv.y = k.at<double>(1, 1) * point_cam.y + k.at<double>(1, 2);
}

void opencv_compute_error()
{
    Mat k_1 = (Mat_<double>(3, 3) <<
        intrinsic_1[0], 0, intrinsic_1[1],
        0, intrinsic_1[2], intrinsic_1[3],
        0, 0, 1);
    Mat Distort_Coefficients_1 = (Mat_<double>(8, 1) <<
        intrinsic_1[4], intrinsic_1[5], intrinsic_1[6], intrinsic_1[7],
        intrinsic_1[8], intrinsic_1[9], intrinsic_1[10], intrinsic_1[11]);
    Mat k_2 = (Mat_<double>(3, 3) <<
        intrinsic_2[0], 0, intrinsic_2[1],
        0, intrinsic_2[2], intrinsic_2[3],
        0, 0, 1);
    Mat Distort_Coefficients_2 = (Mat_<double>(8, 1) <<
        intrinsic_2[4], intrinsic_2[5], intrinsic_2[6], intrinsic_2[7],
        intrinsic_2[8], intrinsic_2[9], intrinsic_2[10], intrinsic_2[11]);

    const int width = (int)image_size[0], height = (int)image_size[1];
    Mat error_gray(height, width, CV_8UC1);   // Mat(rows, cols): rows = height
    Mat error_color;

    for (int j = 0; j < height; j++) {        // j: row
        for (int i = 0; i < width; i++) {     // i: column
            // One distorted pixel coordinate, wrapped in a vector for undistortPoints.
            vector<Point2d> pixel_undis_array(1, Point2d(i, j));
            vector<Point2d> pixel_array_1, pixel_array_2;
            undistortPoints(pixel_undis_array, pixel_array_1, k_1, Distort_Coefficients_1); // calibration 1
            undistortPoints(pixel_undis_array, pixel_array_2, k_2, Distort_Coefficients_2); // calibration 2

            // The results are undistorted normalized-plane coordinates; they must be
            // multiplied by the camera matrix to get back to pixel coordinates.
            Point2d pixel_1, pixel_2;
            camera2pixel(k_1, pixel_array_1.front(), pixel_1);
            camera2pixel(k_2, pixel_array_2.front(), pixel_2);

            double error = sqrt((pixel_1.x - pixel_2.x) * (pixel_1.x - pixel_2.x) +
                                (pixel_1.y - pixel_2.y) * (pixel_1.y - pixel_2.y));
            double error_ = 255 * error / max_error;   // same mapping as implementation 2
            if (error_ > 255) error_ = 255;
            error_gray.at<uchar>(j, i) = (uchar)error_;
        }
    }

    /* applyColorMap turns the gray image into a color image (COLORMAP_JET) */
    applyColorMap(error_gray, error_color, COLORMAP_JET);
    imshow("error", error_color);
    waitKey(0);
}
```
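undistortPoints called without its optional P argument returns normalized image-plane coordinates, which is why implementation 1 needs camera2pixel afterwards. The sketch below shows both directions of that mapping without OpenCV, so the round trip can be checked in isolation. `Intrinsics`, `Point2`, `pixel2camera` and `camera2pixel_plain` are hypothetical names, and skew is assumed to be zero, as in the camera matrices above. (Passing the camera matrix as P to undistortPoints should return pixel coordinates directly, which would make this step unnecessary.)

```cpp
#include <cassert>

// Hypothetical types: pinhole intrinsics without skew,
// K = [fx 0 cx; 0 fy cy; 0 0 1].
struct Intrinsics {
    double fx, fy, cx, cy;
};

struct Point2 {
    double x, y;
};

// Pixel -> normalized image plane (the step undistortPoints performs
// before removing distortion).
inline Point2 pixel2camera(const Intrinsics& k, Point2 uv) {
    assert(k.fx != 0.0 && k.fy != 0.0);
    return { (uv.x - k.cx) / k.fx, (uv.y - k.cy) / k.fy };
}

// Normalized image plane -> pixel; same arithmetic as camera2pixel above.
inline Point2 camera2pixel_plain(const Intrinsics& k, Point2 xy) {
    return { k.fx * xy.x + k.cx, k.fy * xy.y + k.cy };
}
```

The principal point (cx, cy) maps to the origin of the normalized plane, and applying the two functions in sequence returns the original pixel up to rounding.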

- Implementation 2

```cpp
#include <iostream>
#include <vector>
#include <cmath>
#include <opencv2/core.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/calib3d.hpp>   // undistortPoints is declared here in OpenCV 4

using namespace std;
using namespace cv;

// Normalized image-plane coordinates -> pixel coordinates (no skew term).
inline void camera2pixel(const Mat &k, const Point2d &point_cam, Point2d &point_uv) {
    point_uv.x = k.at<double>(0, 0) * point_cam.x + k.at<double>(0, 2);
    point_uv.y = k.at<double>(1, 1) * point_cam.y + k.at<double>(1, 2);
}

int main()
{
    int image_size[2] = { 1000, 900 };   // width, height (columns, rows)
    double max_error = 1;                // error (in pixels) shown at full brightness
    // fx, cx, fy, cy, k1, k2, p1, p2, k3, k4, k5, k6
    double intrinsic_1[12] = { 1188.54836163, 483.05793425, 1188.48899218, 447.84525074,
        -0.09028194, -0.05511487, 0.00040356, -0.00020461,
        0.40233945, 0.09325488, -0.16500713, 0.46888250 };
    double intrinsic_2[12] = { 1188.66314290, 483.07098789, 1188.59981177, 447.85142940,
        -0.10511596, 0.05610084, 0.00040631, -0.00020022,
        -0.12873313, 0.07768071, -0.04612981, -0.09521232 };

    Mat k_1 = (Mat_<double>(3, 3) <<
        intrinsic_1[0], 0, intrinsic_1[1],
        0, intrinsic_1[2], intrinsic_1[3],
        0, 0, 1);
    Mat Distort_Coefficients_1 = (Mat_<double>(8, 1) <<
        intrinsic_1[4], intrinsic_1[5], intrinsic_1[6], intrinsic_1[7],
        intrinsic_1[8], intrinsic_1[9], intrinsic_1[10], intrinsic_1[11]);
    Mat k_2 = (Mat_<double>(3, 3) <<
        intrinsic_2[0], 0, intrinsic_2[1],
        0, intrinsic_2[2], intrinsic_2[3],
        0, 0, 1);
    Mat Distort_Coefficients_2 = (Mat_<double>(8, 1) <<
        intrinsic_2[4], intrinsic_2[5], intrinsic_2[6], intrinsic_2[7],
        intrinsic_2[8], intrinsic_2[9], intrinsic_2[10], intrinsic_2[11]);

    Mat error_gray(image_size[1], image_size[0], CV_8UC1);   // Mat(rows, cols)
    Mat error_color;

    // 1. Collect all distorted pixel coordinates, row by row.
    vector<Point2d> pixel_undis;
    vector<Point2d> pixel_1, pixel_2;
    pixel_undis.reserve((size_t)image_size[0] * image_size[1]);
    for (int j = 0; j < image_size[1]; j++)        // j: row
        for (int i = 0; i < image_size[0]; i++)    // i: column
            pixel_undis.push_back(Point2d(i, j));

    // 2. Undistort all points at once; the results are normalized-plane coordinates.
    undistortPoints(pixel_undis, pixel_1, k_1, Distort_Coefficients_1);   // calibration 1
    undistortPoints(pixel_undis, pixel_2, k_2, Distort_Coefficients_2);   // calibration 2

    // 3. Back to pixels, distance between the two results, gray value.
    int i = 0, j = 0, count = 0;
    for (size_t k = 0; k < pixel_1.size(); k++) {
        Point2d uv_1, uv_2;
        camera2pixel(k_1, pixel_1[k], uv_1);
        camera2pixel(k_2, pixel_2[k], uv_2);
        double x = uv_1.x - uv_2.x;
        double y = uv_1.y - uv_2.y;
        double error = sqrt(x * x + y * y);
        if (count > 0 && count % image_size[0] == 0) {   // start of the next row
            i = 0;
            j++;
        }
        double error_ = 255 * error / max_error;
        if (error_ > 255) error_ = 255;
        error_gray.at<uchar>(j, i) = (uchar)error_;
        count++;
        i++;
    }

    /* applyColorMap turns the gray image into a color image (COLORMAP_JET) */
    applyColorMap(error_gray, error_color, COLORMAP_JET);
    imshow("error", error_color);
    waitKey(0);
    return 0;
}
```
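In implementation 2 the points are pushed row by row, so the k-th result belongs to row `k / width` and column `k % width`. The sketch below (hypothetical helper names `RowCol`, `index_to_rowcol`, `counter_walk`) computes this directly and, for comparison, replays the `count`/`i`/`j` bookkeeping used in the loop above, which only works if the modulus equals the image width.

```cpp
#include <cassert>
#include <cstddef>

struct RowCol {
    int row;
    int col;
};

// Points were pushed with the outer loop over rows and the inner loop over
// columns, so flat index k maps back to (row, col) with one division.
inline RowCol index_to_rowcol(std::size_t k, int width) {
    assert(width > 0);
    const std::size_t w = static_cast<std::size_t>(width);
    return { static_cast<int>(k / w), static_cast<int>(k % w) };
}

// Replays the counter logic of implementation 2 up to index k:
// i resets to 0 and j advances every `width` points.
inline RowCol counter_walk(std::size_t k, int width) {
    assert(width > 0);
    int i = 0, j = 0;
    for (std::size_t count = 0; count <= k; ++count) {
        if (count > 0 && count % static_cast<std::size_t>(width) == 0) {
            i = 0;
            ++j;
        }
        if (count == k) break;   // (j, i) is the position used for point k
        ++i;
    }
    return { j, i };
}
```

With `index_to_rowcol`, the third loop could write to `error_gray.at<uchar>(rc.row, rc.col)` without the extra `i`, `j` and `count` variables.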


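Analysis of implementation 1:

- undistortPoints can correct all the points of an image in a single call, but here I wanted the corrected coordinate of each individual pixel, so the function is called once per pixel.

- Before each call the pixel has to be wrapped in a vector; afterwards it has to be taken out again and projected back to pixel coordinates before the error between the two results can be computed. With two calls per pixel (1 800 000 calls for a 1000×900 image), the code ends up very slow.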


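Analysis of implementation 2

- Procedure:

  - push all not-yet-undistorted pixel coordinates into a vector;

  - obtain the undistorted coordinates with undistortPoints;

  - iterate over the result vectors to read out the normalized-plane coordinates from both calibrations;

  - compute pixel coordinates from them with the intrinsics;

  - compute the distance between the two pixels;

  - map the distance to a gray level and write it at the corresponding position.

- This is still somewhat cumbersome: there are three loops (two nested loops to fill the vector, one to read the results back). The total work is still proportional to the number of pixels, but the extra passes and copies, on top of the iterative solver inside undistortPoints, make it slow in practice.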
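The last two steps of the procedure (distance between the two corrected pixels, then mapping to a gray level) reduce to a few lines. A minimal sketch with hypothetical function names `pixel_error` and `error_to_gray`; unlike the `(uchar)` casts in the listings, it rounds to the nearest gray level instead of truncating, which is a choice made here and not what the original code does.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Euclidean distance between the two corrected positions of one pixel.
inline double pixel_error(double u1, double v1, double u2, double v2) {
    return std::hypot(u1 - u2, v1 - v2);
}

// Linear map error -> 8-bit gray level, saturating at max_error.
// max_error is a user-chosen display range (1 pixel in implementation 2).
inline unsigned char error_to_gray(double error, double max_error) {
    assert(max_error > 0.0);
    double g = 255.0 * error / max_error;
    g = std::min(std::max(g, 0.0), 255.0);
    return static_cast<unsigned char>(std::lround(g));
}
```

Choosing `max_error` trades resolution for range: a smaller value makes sub-pixel differences visible but saturates larger ones at 255.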

- Implementation 3:

Writing my own distortion-correction function

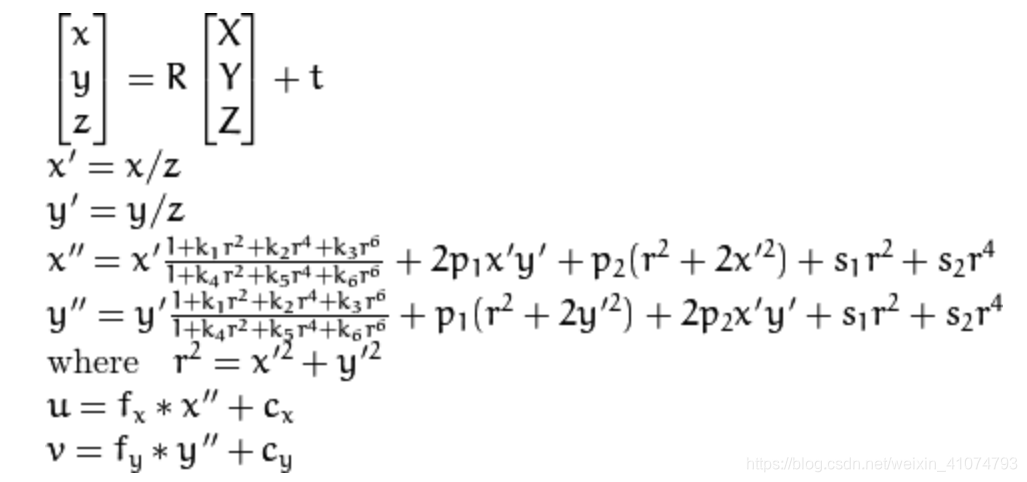

- Distortion formula (from the OpenCV documentation):

- Adapted from the OpenCV source code (located at `C:\opencv\sources\modules\imgproc\src\undistort.cpp`)

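- Some notes on the distortion formula:

  - The coordinates must first be converted to normalized image-plane coordinates (two cases):

    - world coordinates → camera coordinates → normalized-plane coordinates (the case shown in the formula above);

    - pixel coordinates → normalized plane (back-project the pixel coordinates with the camera intrinsics).

  - k1, k2, k3, k4, k5, k6 are the radial distortion coefficients;

  - p1, p2 are the tangential distortion coefficients;

  - s1, s2, s3, s4 are the thin-prism distortion coefficients.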

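For reference, the model from the figure written out, with the pixel → normalized step (the second case above) included. This follows the form given in the OpenCV documentation. The 12-value arrays used below store $k_1, k_2$ in `k[4]`, `k[5]`, $p_1, p_2$ in `k[6]`, `k[7]` and $k_3$ to $k_6$ in `k[8]` to `k[11]`; they contain no thin-prism terms, so $s_1$ to $s_4$ are effectively zero there.

$$
\begin{aligned}
x' &= \frac{u - c_x}{f_x}, \qquad y' = \frac{v - c_y}{f_y}, \qquad r^2 = x'^2 + y'^2 \\
x'' &= x'\,\frac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6} + 2p_1 x' y' + p_2\,(r^2 + 2x'^2) + s_1 r^2 + s_2 r^4 \\
y'' &= y'\,\frac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6} + p_1\,(r^2 + 2y'^2) + 2p_2 x' y' + s_3 r^2 + s_4 r^4 \\
u' &= f_x x'' + c_x, \qquad v' = f_y y'' + c_y
\end{aligned}
$$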

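```cpp
// Uses the same includes and using-directives as implementation 2.
// Forward declarations of the helpers defined below.
inline void my_undistort_point(double pixel_undis[2], double k[12], double pixel[2]);
inline double cos_value(double pixel_undis[2], double pixel_1[2], double pixel_2[2]);

void my_compute_error()
{
    int image_size[2] = { 1000, 900 };   // width, height
    // The 12 known values (fx, cx, fy, cy, k1, k2, p1, p2, k3, k4, k5, k6),
    // here the two calibrations from implementation 2.
    double k1[12] = { 1188.54836163, 483.05793425, 1188.48899218, 447.84525074,
        -0.09028194, -0.05511487, 0.00040356, -0.00020461,
        0.40233945, 0.09325488, -0.16500713, 0.46888250 };
    double k2[12] = { 1188.66314290, 483.07098789, 1188.59981177, 447.85142940,
        -0.10511596, 0.05610084, 0.00040631, -0.00020022,
        -0.12873313, 0.07768071, -0.04612981, -0.09521232 };
    double max_error = 1;                 // error (in pixels) shown at full brightness
    double enlarge = 255 / max_error;
    // Zero-initialized: pixels whose direction is undefined stay black.
    Mat error_color = Mat::zeros(image_size[1], image_size[0], CV_8UC3);

    for (int j = 0; j < image_size[1]; j++) {       // j: row
        for (int i = 0; i < image_size[0]; i++) {   // i: column
            double pixel_undis[2] = { (double)i, (double)j };
            double pixel_1[2];
            double pixel_2[2];
            my_undistort_point(pixel_undis, k1, pixel_1);
            my_undistort_point(pixel_undis, k2, pixel_2);

            double error = sqrt((pixel_1[0] - pixel_2[0]) * (pixel_1[0] - pixel_2[0]) +
                                (pixel_1[1] - pixel_2[1]) * (pixel_1[1] - pixel_2[1]));
            double error_ = error * enlarge;
            if (error_ > 255) error_ = 255;

            /* Custom color coding (OpenCV stores pixels as B, G, R) */
            // red:   the two displacements point in opposite directions
            // green: the two displacements point in the same direction
            double error_cos = cos_value(pixel_undis, pixel_1, pixel_2);   // cosine between the two vectors
            if (error_cos <= 0)
                error_color.at<Vec3b>(j, i) = Vec3b(0, 0, (uchar)error_);   // red
            else if (error_cos > 0)
                error_color.at<Vec3b>(j, i) = Vec3b(0, (uchar)error_, 0);   // green
            // error_cos is NaN when a vector has zero length: neither branch runs, pixel stays black
        }
    }
    imshow("image", error_color);   // show the image
    waitKey(0);
}
```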

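```cpp
/* "Undistort" one pixel with the 12-value calibration
   k = {fx, cx, fy, cy, k1, k2, p1, p2, k3, k4, k5, k6}.
   Note: this evaluates the forward distortion model (ideal pixel -> its position
   in the distorted image), i.e. the mapping initUndistortRectifyMap builds with no
   rectification and the same camera matrix; it is not the inverse that
   undistortPoints computes. */
inline void my_undistort_point(double pixel_undis[2], double k[12], double pixel[2]) {
    double x = (pixel_undis[0] - k[1]) / k[0];   // normalized coordinates [x, y]^T
    double y = (pixel_undis[1] - k[3]) / k[2];
    double r2 = x * x + y * y;
    double r4 = r2 * r2;
    double r6 = r4 * r2;
    double a1 = 2 * x * y;
    double a2 = r2 + 2 * x * x;
    double a3 = r2 + 2 * y * y;
    double cdist = 1 + k[4] * r2 + k[5] * r4 + k[8] * r6;              // 1 + k1 r^2 + k2 r^4 + k3 r^6
    double icdist2 = 1. / (1 + k[9] * r2 + k[10] * r4 + k[11] * r6);   // 1 / (1 + k4 r^2 + k5 r^4 + k6 r^6)
    double xd = x * cdist * icdist2 + k[6] * a1 + k[7] * a2;            // + 2 p1 x y + p2 (r^2 + 2 x^2)
    double yd = y * cdist * icdist2 + k[6] * a3 + k[7] * a1;            // + p1 (r^2 + 2 y^2) + 2 p2 x y
    pixel[0] = xd * k[0] + k[1];   // back to pixel coordinates
    pixel[1] = yd * k[2] + k[3];
}
```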

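```cpp
/* Cosine of the angle between AB and BC, with A = pixel_undis (original point),
   B = pixel_1 (calibration 1) and C = pixel_2 (calibration 2).
   Assuming A, B, C are roughly collinear: positive means C lies beyond B
   (larger displacement), negative means C falls short of B.
   Returns NaN if B == A or B == C. */
inline double cos_value(double pixel_undis[2], double pixel_1[2], double pixel_2[2]) {
    double d_1[2] = { pixel_1[0] - pixel_undis[0], pixel_1[1] - pixel_undis[1] };   // AB
    double d_2[2] = { pixel_2[0] - pixel_undis[0], pixel_2[1] - pixel_undis[1] };   // AC
    double d[2] = { d_2[0] - d_1[0], d_2[1] - d_1[1] };                             // BC = AC - AB
    double dot_product = d_1[0] * d[0] + d_1[1] * d[1];
    double length_product = sqrt((d_1[0] * d_1[0] + d_1[1] * d_1[1]) * (d[0] * d[0] + d[1] * d[1]));
    double error_cos = dot_product / length_product;
    return error_cos;
}
```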
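my_undistort_point evaluates the forward model: it takes an ideal pixel and returns where it lands in the distorted image. undistortPoints, used in implementations 1 and 2, solves the opposite problem, which has no closed-form solution for this model; OpenCV's undistortPoints uses an iterative scheme of the kind sketched below. This is a dependency-free sketch under stated assumptions (thin-prism terms omitted, a fixed iteration count instead of a convergence test; `distort_normalized` and `undistort_pixel_iterative` are hypothetical names), mainly useful to see that the two directions are inverses of each other.

```cpp
#include <cassert>

// Same 12-value layout as my_undistort_point:
// {fx, cx, fy, cy, k1, k2, p1, p2, k3, k4, k5, k6}.

// Forward model: ideal normalized (x, y) -> distorted normalized (xd, yd).
inline void distort_normalized(const double k[12], double x, double y,
                               double& xd, double& yd) {
    double r2 = x * x + y * y, r4 = r2 * r2, r6 = r4 * r2;
    double radial = (1 + k[4] * r2 + k[5] * r4 + k[8] * r6) /
                    (1 + k[9] * r2 + k[10] * r4 + k[11] * r6);
    xd = x * radial + 2 * k[6] * x * y + k[7] * (r2 + 2 * x * x);
    yd = y * radial + k[6] * (r2 + 2 * y * y) + 2 * k[7] * x * y;
}

// Inverse direction (what undistortPoints computes): distorted pixel ->
// undistorted pixel, via the fixed-point iteration
// x <- (xd - tangential(x)) / radial(x), starting from x = xd.
inline void undistort_pixel_iterative(const double uv[2], const double k[12],
                                      double out[2], int iterations = 20) {
    assert(iterations > 0);
    double xd = (uv[0] - k[1]) / k[0];
    double yd = (uv[1] - k[3]) / k[2];
    double x = xd, y = yd;   // initial guess: no distortion
    for (int it = 0; it < iterations; ++it) {
        double r2 = x * x + y * y, r4 = r2 * r2, r6 = r4 * r2;
        double inv_radial = (1 + k[9] * r2 + k[10] * r4 + k[11] * r6) /
                            (1 + k[4] * r2 + k[5] * r4 + k[8] * r6);
        double dx = 2 * k[6] * x * y + k[7] * (r2 + 2 * x * x);
        double dy = k[6] * (r2 + 2 * y * y) + 2 * k[7] * x * y;
        x = (xd - dx) * inv_radial;
        y = (yd - dy) * inv_radial;
    }
    out[0] = x * k[0] + k[1];   // back to pixel coordinates
    out[1] = y * k[2] + k[3];
}
```

Because implementation 3 compares the calibrations in the forward direction while implementations 1 and 2 compare them in the inverse direction, their error maps should look similar but need not match pixel for pixel.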

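Analysis

The two calibrations describe the same type of distortion, only with different strength. The working assumption is therefore that the two vectors from the original point to the two corrected points point in the same direction.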

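Let the original point be $A$, and the coordinates after correction with the first and second calibration be $B$ and $C$. Determine whether $\overrightarrow{AB}$ and $\overrightarrow{BC}$ point in the same or in opposite directions: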

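Same direction: C is more strongly distorted than B;

Opposite direction: C is less strongly distorted than B;

In the program, same direction is shown in green and opposite direction in red; the brighter the pixel, the larger the difference.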
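The direction rule can be checked on concrete points. A minimal sketch, assuming the reading of the rule above (compare AB with BC), with hypothetical names `DistortionTrend`, `compare_trend`, `Bgr` and `trend_color`. Only the sign of the dot product matters, so the cosine's normalization is skipped, and a zero-length vector is reported as undefined instead of producing NaN. The color triple uses OpenCV's B, G, R channel order.

```cpp
#include <cassert>

enum class DistortionTrend { CStronger, CWeaker, Undefined };

// A: original pixel, B: result with calibration 1, C: result with calibration 2.
// Same direction of AB and BC -> C is displaced further than B (green);
// otherwise C is displaced less (red), matching the `cos <= 0` branch.
inline DistortionTrend compare_trend(const double a[2], const double b[2], const double c[2]) {
    double ab_x = b[0] - a[0], ab_y = b[1] - a[1];
    double bc_x = c[0] - b[0], bc_y = c[1] - b[1];
    bool ab_zero = (ab_x == 0.0 && ab_y == 0.0);
    bool bc_zero = (bc_x == 0.0 && bc_y == 0.0);
    if (ab_zero || bc_zero) return DistortionTrend::Undefined;
    double dot = ab_x * bc_x + ab_y * bc_y;
    return dot > 0.0 ? DistortionTrend::CStronger : DistortionTrend::CWeaker;
}

// Pixel value in B, G, R order, as stored in a CV_8UC3 image.
struct Bgr { unsigned char b, g, r; };

inline Bgr trend_color(DistortionTrend t, unsigned char intensity) {
    switch (t) {
        case DistortionTrend::CStronger: return { 0, intensity, 0 };   // green
        case DistortionTrend::CWeaker:   return { 0, 0, intensity };   // red
        default:                         return { 0, 0, 0 };           // black
    }
}
```

For example, with A = (0, 0) and B = (1, 0), the point C = (2, 0) gives `CStronger` (green) and C = (0.5, 0) gives `CWeaker` (red).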