Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/qzqanzc/article/details/86713731

Requirements

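The data stored in Kafka is JSON that already conforms to the OpenTSDB format. The goal is to replace the existing Kafka consumer with Flink, which reads the data from Kafka and sends it to OpenTSDB. Statistics and AI-related analysis algorithms are not needed for now.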
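
For reference, OpenTSDB's `/api/put` endpoint expects each data point as a JSON object with `metric`, `timestamp`, `value` and `tags` fields (several points can also be sent as a JSON array). The sketch below builds one such object with plain string concatenation. The class name `TsdbPoint`, the metric `rainfall.mm` and the tag values are hypothetical, and the code does no JSON escaping, so it only suits simple ASCII metric and tag names.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical helper: renders one OpenTSDB data point as a JSON object.
// No escaping is done, so metric and tag names must be simple ASCII strings.
public class TsdbPoint {
    public static String toJson(String metric, long timestampSeconds, double value, Map<String, String> tags) {
        // copying into a TreeMap sorts the tags, which keeps the output deterministic
        String tagJson = new TreeMap<>(tags).entrySet().stream()
                .map(e -> "\"" + e.getKey() + "\":\"" + e.getValue() + "\"")
                .collect(Collectors.joining(",", "{", "}"));
        return "{\"metric\":\"" + metric + "\",\"timestamp\":" + timestampSeconds
                + ",\"value\":" + value + ",\"tags\":" + tagJson + "}";
    }

    public static void main(String[] args) {
        Map<String, String> tags = new TreeMap<>();
        tags.put("station", "s01");
        tags.put("region", "north");
        // prints {"metric":"rainfall.mm","timestamp":1546300800,"value":12.5,"tags":{"region":"north","station":"s01"}}
        System.out.println(toJson("rainfall.mm", 1546300800L, 12.5, tags));
    }
}
```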

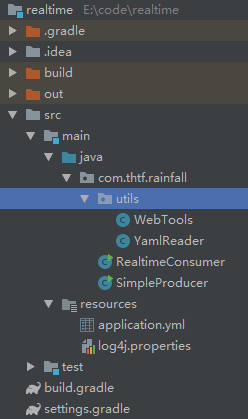

Project structure

build.gradle

    plugins {
        id 'java'
    }

    group 'com.kteckl'
    version '1.0-SNAPSHOT'

    sourceCompatibility = 1.8

    repositories {
        mavenCentral()
    }

    dependencies {
        compile('org.apache.flink:flink-java:1.7.0')
        compile('org.apache.flink:flink-streaming-java_2.11:1.7.0')
        compile('org.apache.flink:flink-clients_2.11:1.7.0')
        compile('org.apache.flink:flink-connector-kafka-0.11_2.11:1.7.0')
        compile('org.yaml:snakeyaml:1.23')
        compile group: 'org.slf4j', name: 'slf4j-api', version: '1.7.25'
        compile group: 'org.apache.kafka', name: 'kafka-clients', version: '2.1.0'
        compile group: 'org.slf4j', name: 'slf4j-log4j12', version: '1.7.25'
        compile group: 'org.apache.httpcomponents', name: 'httpclient', version: '4.5.6'
        testCompile group: 'junit', name: 'junit', version: '4.12'
    }

    jar {
        manifest {
            attributes("Main-Class": "com.kteck.RealtimeConsumer",
                       "Implementation-Title": "Gradle")
        }
        into('lib') {
            from configurations.runtime
        }
    }

Configuration files

Main configuration file: application.yml

    kafka:
      brokers: 192.168.0.100:9092,192.168.0.101:9092,192.168.0.102:9092
      group: topic
      topic: test
      partitions: 15
      consumer.interval.seconds: 5 # default value, in seconds
      tsdb.retry.times: 3
      tsdb.url: http://192.168.0.250:4242/api/put
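
The `brokers` value is passed unchanged to Kafka's `bootstrap.servers` property, which expects a comma-separated list of `host:port` pairs, so a missing comma (for example `192.168.0.100:9092192.168.0.101:9092`) quietly turns two brokers into one invalid address. A small pre-flight check such as the hypothetical `BrokerListCheck` below can catch this before the job is submitted. It is only a sketch: it checks the shape of each entry (IPv4 address or hostname plus a port), not whether the broker is reachable.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical pre-flight check for a comma-separated "host:port" broker list.
// Only IPv4 addresses and hostnames are handled; IPv6 literals would be flagged.
public class BrokerListCheck {
    /** Returns the entries that are not of the form host:port with a port in 1..65535. */
    public static List<String> invalidEntries(String brokers) {
        List<String> bad = new ArrayList<>();
        for (String raw : brokers.split(",")) {
            String entry = raw.trim();
            int colon = entry.lastIndexOf(':');
            // require exactly one colon, a non-empty host and a non-empty port
            if (colon <= 0 || colon == entry.length() - 1 || entry.indexOf(':') != colon) {
                bad.add(entry);
                continue;
            }
            String port = entry.substring(colon + 1);
            if (!port.matches("\\d{1,5}") || Integer.parseInt(port) < 1 || Integer.parseInt(port) > 65535) {
                bad.add(entry);
            }
        }
        return bad;
    }

    public static void main(String[] args) {
        // prints [192.168.0.100:9092192.168.0.101:9092]
        System.out.println(invalidEntries("192.168.0.100:9092192.168.0.101:9092,192.168.0.102:9092"));
    }
}
```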

log4j configuration file

    log4j.rootLogger=ERROR, console
    log4j.appender.console=org.apache.log4j.ConsoleAppender
    log4j.appender.console.layout=org.apache.log4j.PatternLayout
    log4j.appender.console.layout.ConversionPattern=%d{HH:mm:ss,SSS} %-5p %-60c %x - %m%n

util package

YamlReader.java

    package com.thtf.rainfall.utils;

    import org.yaml.snakeyaml.Yaml;

    import java.io.IOException;
    import java.io.InputStream;
    import java.util.HashMap;
    import java.util.Map;

    public class YamlReader {

        private static Map<String, Map<String, Object>> properties = new HashMap<>();

        private YamlReader() {
            // guard against a second instance being created via reflection
            if (SingletonHolder.instance != null) {
                throw new IllegalStateException();
            }
        }

        /**
         * Singleton via a static nested holder class (initialization-on-demand holder idiom).
         */
        private static class SingletonHolder {
            private static final YamlReader instance = new YamlReader();
        }

        public static YamlReader getInstance() {
            return SingletonHolder.instance;
        }

        // load application.yml from the classpath when the class is initialized
        static {
            try (InputStream in = YamlReader.class.getClassLoader().getResourceAsStream("application.yml")) {
                if (in == null) {
                    throw new IllegalStateException("application.yml not found on the classpath");
                }
                properties = new Yaml().loadAs(in, HashMap.class);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        /**
         * Get a property from application.yml.
         *
         * @param root top-level key, e.g. "kafka"
         * @param key  key under the root, e.g. "topic"
         * @return the value, or "" if the root or the key is missing
         */
        public Object getValueByKey(String root, String key) {
            Map<String, Object> rootProperty = properties == null ? null : properties.get(root);
            if (rootProperty == null) {
                return "";
            }
            Object value = rootProperty.get(key);
            return value == null ? "" : value;
        }
    }
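
`YamlReader` relies on the initialization-on-demand holder idiom: the JVM initializes the nested `SingletonHolder` class only on the first call to `getInstance()`, and class initialization is thread-safe, so no explicit locking is needed. The stand-alone sketch below (class name `LazySingleton` is hypothetical, no YAML involved) shows the lazy, single-instance behaviour with a creation counter. Note that in `YamlReader` the file itself is read in the outer class's static block, i.e. when `YamlReader` is first initialized; only the instance creation is deferred to the holder.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Stand-alone illustration of the initialization-on-demand holder idiom.
public class LazySingleton {
    // counts how many instances have ever been constructed
    public static final AtomicInteger CREATED = new AtomicInteger();

    private LazySingleton() {
        CREATED.incrementAndGet();
    }

    // Holder is not initialized until Holder.INSTANCE is first accessed
    private static class Holder {
        private static final LazySingleton INSTANCE = new LazySingleton();
    }

    public static LazySingleton getInstance() {
        return Holder.INSTANCE;
    }

    public static void main(String[] args) {
        System.out.println("created before first call: " + CREATED.get()); // 0
        LazySingleton a = getInstance();
        LazySingleton b = getInstance();
        System.out.println("same instance: " + (a == b) + ", created: " + CREATED.get()); // true, 1
    }
}
```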

WebTools.java

    package com.thtf.rainfall.utils;

    import org.apache.http.HttpEntity;
    import org.apache.http.HttpEntityEnclosingRequest;
    import org.apache.http.HttpRequest;
    import org.apache.http.NoHttpResponseException;
    import org.apache.http.client.HttpRequestRetryHandler;
    import org.apache.http.client.methods.CloseableHttpResponse;
    import org.apache.http.client.methods.HttpPost;
    import org.apache.http.client.protocol.HttpClientContext;
    import org.apache.http.conn.ConnectTimeoutException;
    import org.apache.http.entity.ContentType;
    import org.apache.http.entity.StringEntity;
    import org.apache.http.impl.client.CloseableHttpClient;
    import org.apache.http.impl.client.HttpClients;
    import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;
    import org.apache.http.util.EntityUtils;

    import java.io.IOException;
    import java.io.InterruptedIOException;
    import java.net.UnknownHostException;
    import java.util.HashMap;
    import java.util.Map;

    /**
     * web tool
     */
    public class WebTools {

        public static final String KEY_STATUS_CODE = "statusCode";
        public static final String KEY_CONTENT = "content";

        // connection pool manager
        private static final PoolingHttpClientConnectionManager poolConnManager = new PoolingHttpClientConnectionManager();

        private static final HttpRequestRetryHandler httpRequestRetryHandler = (exception, executionCount, context) -> {
            if (executionCount >= 5) {                              // give up after 5 attempts
                return false;
            }
            if (exception instanceof NoHttpResponseException) {     // server dropped the connection: retry
                return true;
            }
            if (exception instanceof InterruptedIOException         // timeouts
                    || exception instanceof UnknownHostException
                    || exception instanceof ConnectTimeoutException) {
                return false;
            }
            // retry only requests without a body (treated as idempotent)
            HttpRequest request = HttpClientContext.adapt(context).getRequest();
            return !(request instanceof HttpEntityEnclosingRequest);
        };

        static {
            // when the class is loaded, set the maximum total connections and the maximum connections per route
            poolConnManager.setMaxTotal(2000);
            poolConnManager.setDefaultMaxPerRoute(1000);
        }

        // one shared client; every request reuses the pooled connections
        private static final CloseableHttpClient httpClient = HttpClients.custom()
                .setConnectionManager(poolConnManager)
                .setRetryHandler(httpRequestRetryHandler)
                .build();

        /**
         * Collect the status code and, if present, the response body.
         */
        private static Map<String, Object> buildResultMap(CloseableHttpResponse response, HttpEntity entity)
                throws IOException {
            Map<String, Object> result = new HashMap<>(2);
            result.put(KEY_STATUS_CODE, response.getStatusLine().getStatusCode()); // status code
            if (entity != null) {
                result.put(KEY_CONTENT, EntityUtils.toString(entity, "UTF-8"));    // message content
            }
            return result;
        }

        /**
         * Send a JSON string with a POST request.
         *
         * @param url     target URL, e.g. the OpenTSDB /api/put endpoint
         * @param message JSON body
         * @return map holding the status code and the response body
         * @throws IOException if the request fails
         */
        public static Map<String, Object> postJson(String url, String message) throws IOException {
            HttpPost httpPost = new HttpPost(url);
            httpPost.setHeader("Accept", "application/json;charset=UTF-8");
            // encode the body as UTF-8; new StringEntity(message) alone would encode it as ISO-8859-1
            httpPost.setEntity(new StringEntity(message, ContentType.APPLICATION_JSON));
            try (CloseableHttpResponse response = httpClient.execute(httpPost)) {
                Map<String, Object> result = buildResultMap(response, response.getEntity());
                EntityUtils.consume(response.getEntity()); // release the connection back to the pool
                return result;
            }
        }
    }
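
A detail worth checking in `postJson`: in HttpClient 4.x, `new StringEntity(String)` encodes the text using the default `text/plain; charset=ISO-8859-1` content type, and a later `setContentType(...)` only changes the header, not the bytes already produced. Any non-Latin-1 character in a tag value (for example a Chinese station name) therefore reaches OpenTSDB as `?`. The stdlib-only sketch below reproduces the effect with `String.getBytes`; the class `CharsetDemo` is purely illustrative. Passing `ContentType.APPLICATION_JSON` (which carries UTF-8) to the `StringEntity` constructor avoids the problem.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

// Illustrates what happens to non-Latin-1 text encoded as ISO-8859-1.
public class CharsetDemo {
    /** Encodes text with the given charset and decodes it again with the same charset. */
    public static String roundTrip(String text, Charset charset) {
        return new String(text.getBytes(charset), charset);
    }

    public static void main(String[] args) {
        // the two escaped characters spell "Beijing" in Chinese
        String json = "{\"station\":\"\u5317\u4eac\"}";
        // unmappable characters are replaced by '?': prints {"station":"??"}
        System.out.println(roundTrip(json, StandardCharsets.ISO_8859_1));
        // UTF-8 preserves the text: prints true
        System.out.println(roundTrip(json, StandardCharsets.UTF_8).equals(json));
    }
}
```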

Core code

RealtimeConsumer.java

    package com.kteck;

    import com.thtf.rainfall.utils.WebTools;
    import com.thtf.rainfall.utils.YamlReader;
    import org.apache.flink.api.common.serialization.SimpleStringSchema;
    import org.apache.flink.streaming.api.datastream.DataStream;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import java.util.Map;
    import java.util.Properties;

    public class RealtimeConsumer {

        private static final Logger LOG = LoggerFactory.getLogger(RealtimeConsumer.class);

        public static void main(String[] args) throws Exception {
            final String topic = YamlReader.getInstance().getValueByKey("kafka", "topic").toString();
            final String tsdburl = YamlReader.getInstance().getValueByKey("kafka", "tsdb.url").toString();
            Properties properties = buildKafkaProperties();

            final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

            // each Kafka record is a JSON string that is already in OpenTSDB format
            FlinkKafkaConsumer011<String> rainConsumer =
                    new FlinkKafkaConsumer011<>(topic, new SimpleStringSchema(), properties);

            // forward every record to OpenTSDB; map() is used only for its side effect
            DataStream<String> dataStream = env.addSource(rainConsumer)
                    .map(value -> {
                        Map<String, Object> result = WebTools.postJson(tsdburl, value);
                        Object status = result.get(WebTools.KEY_STATUS_CODE);
                        // OpenTSDB answers 204 No Content on success; log any non-2xx response
                        if (!(status instanceof Integer) || (Integer) status / 100 != 2) {
                            LOG.error("OpenTSDB rejected {}: {}", value, result);
                        }
                        return value;
                    }).setBufferTimeout(100);

            env.execute("RealtimeConsumer");
        }

        private static Properties buildKafkaProperties() {
            final String brokers = YamlReader.getInstance().getValueByKey("kafka", "brokers").toString();
            final String group = YamlReader.getInstance().getValueByKey("kafka", "group").toString();
            Properties properties = new Properties();
            properties.setProperty("bootstrap.servers", brokers);
            properties.setProperty("group.id", group);
            return properties;
        }
    }
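
The job above issues one HTTP request per Kafka record. Since OpenTSDB's `/api/put` also accepts a JSON array of data points, one possible refinement (not part of the original design) is to group records and post them together. The hypothetical `TsdbBatcher` below covers only the payload side, assuming each Kafka record is a single JSON object: it packs already-serialized points into arrays of at most `maxPoints` entries. How records are grouped in Flink (for example a count window, or a buffering sink) is left open here.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: packs serialized OpenTSDB data points into JSON arrays
// of at most maxPoints entries each, one array per /api/put request.
public class TsdbBatcher {
    public static List<String> toBatches(List<String> pointJsons, int maxPoints) {
        if (maxPoints <= 0) {
            throw new IllegalArgumentException("maxPoints must be positive");
        }
        List<String> batches = new ArrayList<>();
        for (int start = 0; start < pointJsons.size(); start += maxPoints) {
            int end = Math.min(start + maxPoints, pointJsons.size());
            // each element is assumed to be one complete JSON object
            batches.add("[" + String.join(",", pointJsons.subList(start, end)) + "]");
        }
        return batches;
    }

    public static void main(String[] args) {
        List<String> points = new ArrayList<>();
        for (int i = 0; i < 5; i++) {
            points.add("{\"metric\":\"m\",\"timestamp\":" + (1546300800 + i) + ",\"value\":" + i + ",\"tags\":{\"t\":\"a\"}}");
        }
        // prints 3 arrays holding 2, 2 and 1 points
        toBatches(points, 2).forEach(System.out::println);
    }
}
```

Each resulting string can then be sent with the existing `WebTools.postJson(tsdburl, batch)`.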