1.SVM

(1)这里我选择惩罚系数 做实验,不同的惩罚系数 可能导致结果不同。

- 核函数kernel.m

function K = kernel(X,Y,type,gamma)

switch type

case 'linear' %线性核

K = X*Y';

case 'rbf' %高斯核

m = size(X,1);

K = zeros(m,m);

for i = 1:m

for j = 1:m

K(i,j) = exp(-gamma*norm(X(i,:)-Y(j,:))^2);

end

end

end

end

- 训练函数svmTrain.m

function svm = svmTrain(X,Y,kertype,gamma,C)

%二次规划问题,使用quadprog,详细help quadprog

n = length(Y);

H = (Y*Y').*kernel(X,X,kertype,gamma);

f = -ones(n,1);

A = [];

b = [];

Aeq = Y';

beq = 0;

lb = zeros(n,1);

ub = C*ones(n,1);

a = quadprog(H,f,A,b,Aeq,beq,lb,ub);

epsilon = 3e-5; %阈值可以根据自身需求选择

%找出支持向量

svm_index = find(abs(a)> epsilon);

svm.sva = a(svm_index);

svm.Xsv = X(svm_index,:);

svm.Ysv = Y(svm_index);

svm.svnum = length(svm_index);

svm.a = a;

end

- 预测函数predict1.m

function test = predict1(train_data_name,test_data_name,kertype,gamma,C)

%(1)-------------------training data ready-------------------

train_data = load(train_data_name);

n = size(train_data,2); %data column

train_x = train_data(:,1:n-1);

train_y = train_data(:,n);

%find the position of positive label and negtive label

pos = find ( train_y == 1 );

neg = find ( train_y == -1 );

figure('Position',[400 400 1000 400]);

subplot(1,2,1);

plot(train_x(pos,1),train_x(pos,2),'k+');

hold on;

plot(train_x(neg,1),train_x(neg,2),'bs');

hold on;

%(2)-----------------decision boundary-------------------

train_svm = svmTrain(train_x,train_y,kertype,gamma,C);

%plot the support vector

plot(train_svm.Xsv(:,1),train_svm.Xsv(:,2),'ro');

train_a = train_svm.a;

train_w = [sum(train_a.*train_y.*train_x(:,1));sum(train_a.*train_y.*train_x(:,2))];

train_b = sum(train_svm.Ysv-train_svm.Xsv*train_w)/size(train_svm.Xsv,1);

train_x_axis = 0:1:200;

plot(train_x_axis,-train_b-train_w(1,1)*train_x_axis/train_w(2,1),'-');

legend('1','-1','suport vector','decision boundary');

title('training data')

hold on;

%(3)-------------------testing data ready----------------------

test_data = load(test_data_name);

m = size(test_data,2); %data column

test_x = test_data(:,1:m-1);

test_y = test_data(:,m);

%find the test data positive label and negtive label

test_label = sign(test_x*train_w + train_b);

subplot(1,2,2);

test_pos = find ( test_y == 1 );

test_neg = find ( test_y == -1 );

plot(test_x(test_pos,1),test_x(test_pos,2),'k+');

hold on;

plot(test_x(test_neg,1),test_x(test_neg,2),'bs');

hold on;

%(4)------------------decision boundary -----------------------

test_x_axis = 0:1:200;

plot(test_x_axis,-train_b-train_w(1,1)*test_x_axis/train_w(2,1),'-');

legend('1','-1','decision boundary');

title('testing data');

%print the detail

fprintf('--------------------------------------------\n');

fprintf('training_data: %s\n',train_data_name);

fprintf('testing_data: %s\n',test_data_name);

fprintf('C = %d\n',C);

fprintf('number of test data label: %d\n',size(test_data,1));

fprintf('predict corret number of test data label: %d\n',length(find(test_label==test_y)));

fprintf('Success rate: %.4f\n',length(find(test_label==test_y))/size(test_data,1));

fprintf('--------------------------------------------\n');

end

- 主函数part1.m

kertype = 'linear';

gamma = 0; C = 1;

predict1('training_1.txt','test_1.txt',kertype,gamma,C);

predict1('training_2.txt','test_2.txt',kertype,gamma,C);

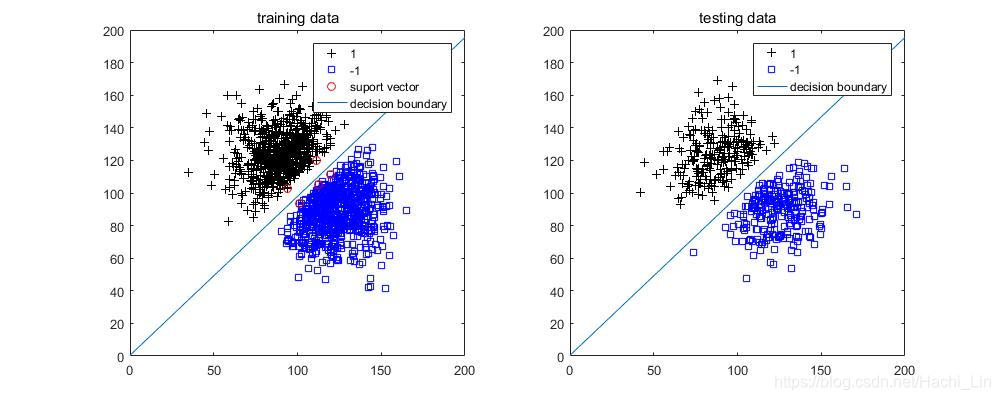

做出图像如下

- training_1.txt 和 test_1.txt

- training_2.txt 和 test_2.txt

(2)测试数据的预测结果如下,可以由上面第(1)问中的代码获得

training_data: training_1.txt

testing_data: test_1.txt

C = 1

number of test data label: 500

predict corret number of test data label: 500

Success rate: 1.0000

training_data: training_2.txt

testing_data: test_2.txt

C = 1

number of test data label: 500

predict corret number of test data label: 500

Success rate: 1.0000

可以发现测试数据的正确率是百分之百,说明决策边界刚好可以把正负样本给完全分隔开。

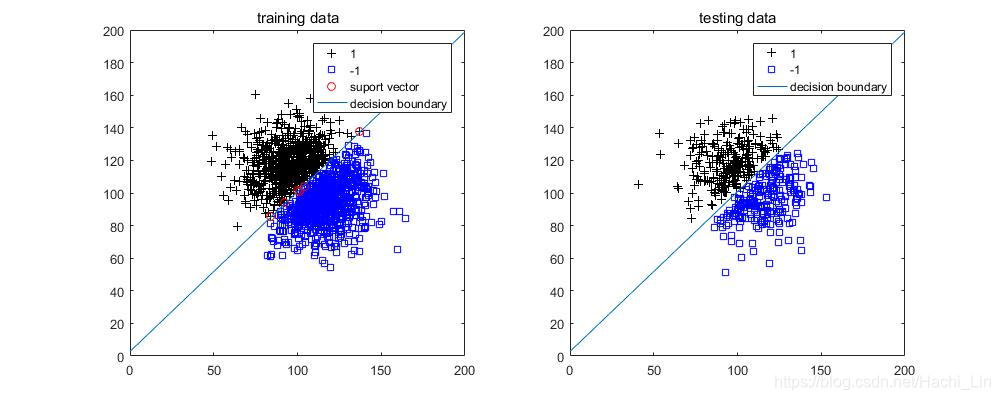

(3)更改第(1)问中的主函数,其余的代码不变

- part1_3.m

kertype = 'linear';

gamma = 0;

C = [0.01,0.1,1,10,100];

for i=1:size(C,2)

predict1('training_1.txt','test_1.txt',kertype,gamma,C(i));

end

for i=1:size(C,2)

predict1('training_2.txt','test_2.txt',kertype,gamma,C(i));

end

观察结果

- training_1.txt 和 test_1.txt

| C | success rate |

|---|---|

| 0.01 | 1.0000 |

| 0.1 | 1.0000 |

| 1 | 1.0000 |

| 10 | 1.0000 |

| 100 | 1.0000 |

- training_2.txt 和 test_2.txt

| C | success rate |

|---|---|

| 0.01 | 1.0000 |

| 0.1 | 1.0000 |

| 1 | 1.0000 |

| 10 | 1.0000 |

| 100 | 1.0000 |

从上面的结果看似乎没差别,这是因为我们的数据集太好了,所以预测正确率都是1。正常的情况下,C比较大的时候表示我们想要犯更少的错误,但margin会稍微小一点;C比较的小的时候表示我们想要更大的margin,划分错误多一点没关系。也就是说C比较大时正确率会高一些,而C比较小正确率会低一些。

2. 手写字体识别

实验给的strimage.m的作用是从train-01-images.svm中选定指定行以图片的形式显示0或1,其中图片的像素是28×28。

(1)以下两个m文件处理train-01-images.svm和test-01-images.svm,其功能是参照strimage.m将数据集每一行先转换为28×28像素的图像,图像的每一点有确定的值。然后将所有的点展开成一行全部存储于hand_digists_train.dat和hand_digists_test.dat。转换后的hand_digists_train.dat大小为12665×785,hand_digists_test.dat的大小为2115×785,每一行中的最后一个数字对应着标签,即第785列。

- re_hand_digits.m

function svm = re_hand_digits(filename,n)

fidin = fopen(filename);

i = 1;

apres = [];

while ~feof(fidin)

tline = fgetl(fidin); % 从文件读行

apres{i} = tline;

i = i+1;

end

grid = zeros(n,784);

label = zeros(n,1);

for k = 1:n

a = char(apres(k));

lena = size(a,2);

xy = sscanf(a(4:lena), '%d:%d');

label(k,1) = sscanf(a(1:3),'%d');

lenxy = size(xy,1);

for i=2:2:lenxy %% 隔一个数

if(xy(i)<=0)

break

end

grid(k,xy(i-1)) = xy(i) * 100/255; %转为有颜色的图像

end

end

svm.grid = grid;

svm.label = label;

end

- save_data.m

svm1 = re_hand_digits('train-01-images.svm',12665);

svm2 = re_hand_digits('test-01-images.svm',2115);

train_x = svm1.grid; train_y = svm1.label;

test_x = svm2.grid; test_y = svm2.label;

train = [train_x,train_y];

test = [test_x,test_y];

[row,col] = size(test);

fid=fopen('hand_digits_test.dat','wt');

for i=1:1:row

for j=1:1:col

if(j==col)

fprintf(fid,'%g\n',test(i,j));

else

fprintf(fid,'%g\t',test(i,j));

end

end

end

fclose(fid);

[row,col] = size(train);

fid=fopen('hand_digits_train.dat','wt');

for i=1:1:row

for j=1:1:col

if(j==col)

fprintf(fid,'%g\n',train(i,j));

else

fprintf(fid,'%g\t',train(i,j));

end

end

end

fclose(fid);

实验虽然要求使用全部的训练集进行训练,但你会发现使用全部训练集的时候,使用quadprog函数会出现内存不足的情况,当然好配置的电脑可以跑完,但我们班的大多数人都是跑内存爆炸的,所以这里我只采用大小为3000的训练集,测试集数量不变,你可以根据自身需求选择,当然这对实验的结果是没多大影响的。

- strimage.m

实验虽然给了strimage.m,但我们还是有必要更改一下这个代码,因为这个代码只能查看训练集的0或1的图像,也就是train-01-images.svm中的图像,我们的目的是它也能查看test-01-images.svm的0或1图像,这个代码其实就加了一个参数filename而已。

function strimage(filename,n)

fidin = fopen(filename);

i = 1;

apres = [];

while ~feof(fidin)

tline = fgetl(fidin); % 从文件读行

apres{i} = tline;

i = i+1;

end

%选中我们选定的第n张图片

a = char(apres(n));

lena = size(a);

lena = lena(2);

%xy存储像素的索引和相应的灰度值

xy = sscanf(a(4:lena), '%d:%d');

lenxy = size(xy);

lenxy = lenxy(1);

grid = [];

grid(784) = 0; %28*28网格,0代表黑色背景

for i=2:2:lenxy %% 隔一个数

if(xy(i)<=0)

break

end

grid(xy(i-1)) = xy(i) * 100/255; %转为有颜色的图像

end

%显示手写数字图像

grid1 = reshape(grid,28,28);

grid1 = fliplr(diag(ones(28,1)))*grid1;

grid1 = rot90(grid1,3);

image(grid1);

hold on;

end

- 预测函数predict2.m

function [test_miss,train_miss] = predict2(train_data_name,test_data_name,kertype,gamma,C)

%(1)-------------------training data ready-------------------

train_data = train_data_name;

n = size(train_data,2);

train_x = train_data(:,1:n-1);

train_y = train_data(:,n);

%(2)-----------------training model-------------------

%二次规划用来求解问题,使用quadprog

n = length(train_y);

H = (train_y*train_y').*kernel(train_x,train_x,kertype,gamma);

f = -ones(n,1); %f'为1*n个-1

A = [];

b = [];

Aeq = train_y';

beq = 0;

lb = zeros(n,1);

if C == 0 %无正则项

ub = [];

else %有正则项

ub = C.*ones(n,1);

end

train_a = quadprog(H,f,A,b,Aeq,beq,lb,ub);

epsilon = 2e-7;

%找出支持向量

sv_index = find(abs(train_a)> epsilon);

Xsv = train_x(sv_index,:);

Ysv = train_y(sv_index);

svnum = length(sv_index);

train_w(1:784,1) = sum(train_a.*train_y.*train_x(:,1:784));

train_b = sum(Ysv-Xsv*train_w)/svnum;

train_label = sign(train_x*train_w+train_b);

train_miss = find(train_label~=train_y);

%(3)-------------------testing data ready----------------------

test_data = test_data_name;

m = size(test_data,2);

test_x = test_data(:,1:m-1);

test_y = test_data(:,m);

test_label = sign(test_x*train_w+train_b);

test_miss = find(test_label~=test_y);

%(4)------------------detail -----------------------;

%print the detail

fprintf('--------------------------------------------\n');

fprintf('C = %d\n',C);

fprintf('number of test data label: %d\n',size(test_data,1));

fprintf('number of train data label: %d\n',size(train_data,1));

fprintf('predict corret number of test data label: %d\n',length(find(test_label==test_y)));

fprintf('predict corret number of train data label: %d\n',length(find(train_label==train_y)));

fprintf('Success rate of test data: %.4f\n',length(find(test_label==test_y))/size(test_data,1));

fprintf('Success rate of train data: %.4f\n',length(find(train_label==train_y))/size(train_data,1));

fprintf('--------------------------------------------\n');

end

- 主函数part2.m

train_data = load('hand_digits_train.dat');

test_data = load('hand_digits_test.dat');

train_len = size(train_data,1); test_len = size(test_data,1);

train_index = randperm(train_len,3000);

test_index = randperm(test_len,2115);

train_select = zeros(3000,785);

test_select = zeros(2115,785);

for i = 1:3000

train_select(i,:) = train_data(train_index(i),:);

end

for i = 1:2115

test_select(i,:) = test_data(test_index(i),:);

end

%无正则项

[train_miss_index,test_miss_index] = predict2(train_select,test_select,'linear',0,0);

%查看被错误分类的手写字体

%训练错误手写字体

for i = 1:length(train_miss_index)

strimage('train-01-images.svm',i);

figure;

end

%测试错误手写字体

for i = 1:length(test_miss_index)

strimage('test-01-images.svm',i);

if i~=length(test_miss_index)

figure;

end

end

- 打印训练错误和测试错误

C = 0

number of test data label: 2115

number of train data label: 3000

predict corret number of test data label: 2108

predict corret number of train data label: 2991

Success rate of test data: 0.9967

Success rate of train data: 0.9970

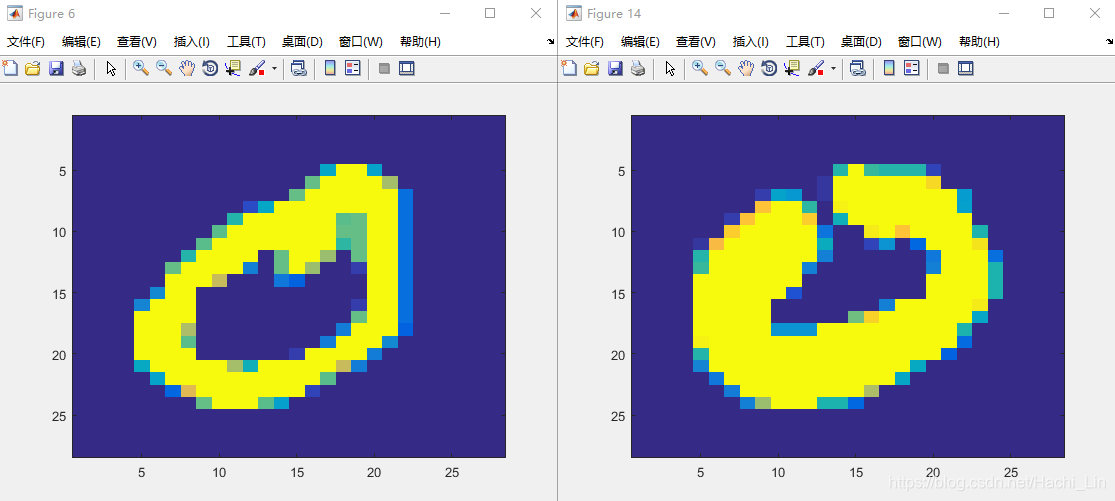

- 附上一张训练错误图像和测试错误图像(左边是训练错误图像,右边是测试错误图像)

可以发现,错误的和书写不规范有很大的关系。

(2)这里主要改的代码是主函数part2.m,其余的不用变。

train_data = load('hand_digits_train.dat');

test_data = load('hand_digits_test.dat');

train_len = size(train_data,1); test_len = size(test_data,1);

train_index = randperm(train_len,3000);

test_index = randperm(test_len,2115);

train_select = zeros(3000,785);

test_select = zeros(2115,785);

for i = 1:3000

train_select(i,:) = train_data(train_index(i),:);

end

for i = 1:2115

test_select(i,:) = test_data(test_index(i),:);

end

C = [0.01,0.1,1,10,100];

%有正则项

for i=1:size(C,2)

predict2(train_select,test_select,'linear',0,C(i));

end

观察结果如下

- 训练误差

| C | success rate |

|---|---|

| 0.01 | 0.9963 |

| 0.1 | 0.9973 |

| 1 | 0.9947 |

| 10 | 0.9993 |

| 100 | 0.9920 |

- 测试误差

| C | success rate |

|---|---|

| 0.01 | 0.9939 |

| 0.1 | 0.9957 |

| 1 | 0.9910 |

| 10 | 0.9991 |

| 100 | 0.9960 |

回答下列问题

(i)从上述结果发现C=100时训练误差最大。

(ii)问题(i)中对应的测试误差为1-0.9960 = 0.0040,在matlab显示2115个测试数据错了17个。

(iii)由表中可以看C在10时测试误差和训练误差都很小,所以我推测C=10左右会使得测试误差很小,当然只是我个人推测。

3. 非线性SVM

- 预测函数predict3.m

function test = predict3(train_name,type,gamma,C)

%-----------------------training data ready------------------------

train = train_name;

[m,n] = size(train);

train_x = train(:,1:n-1);

train_y = train(:,n);

pos = find(train_y == 1);

neg = find(train_y == -1);

plot(train_x(pos,1),train_x(pos,2),'k+');

hold on;

plot(train_x(neg,1),train_x(neg,2),'bs');

hold on;

%-----------------------training model--------------------------

%二次规划用来求解问题,使用quadprog

K = kernel(train_x,train_x,type,gamma);

H = (train_y*train_y').*K;

f = -ones(m,1);

A = [];

b = [];

Aeq = train_y';

beq = 0;

lb = zeros(m,1);

if C == 0

ub = [];

else

ub = C*ones(m,1);

end

a = quadprog(H,f,A,b,Aeq,beq,lb,ub);

epsilon = 1e-5;

%查找支持向量

sv_index = find(abs(a)> epsilon);

Xsv = train_x(sv_index,:);

Ysv = train_y(sv_index);

svnum = length(sv_index);

%make classfication predictions over a grid of values

xplot = linspace(min(train_x(:,1)),max(train_x(:,1)),100)';

yplot = linspace(min(train_x(:,2)),max(train_x(:,2)),100)';

[X,Y] = meshgrid(xplot,yplot);

vals = zeros(size(X));

%calculate decision value

train_a = a;

sum_b = 0;

for k = 1:svnum

sum = 0;

for i = 1:m

sum = sum + train_a(i,1)*train_y(i,1)*K(i,k);

end

sum_b = sum_b + Ysv(k) - sum;

end

train_b = sum_b/svnum;

for i = 1:100

for j = 1:100

x_y = [X(i,j),Y(i,j)];

sum = 0;

for k = 1:m

sum = sum + train_a(k,1)*train_y(k,1)*exp(-gamma*norm(train_x(k,:)-x_y)^2);

end

vals(i,j) = sum + train_b;

end

end

%plot the SVM boundary

colormap bone;

contour(X,Y,vals,[0 0],'LineWidth',2);

title(['\gamma = ',num2str(gamma)]);

end

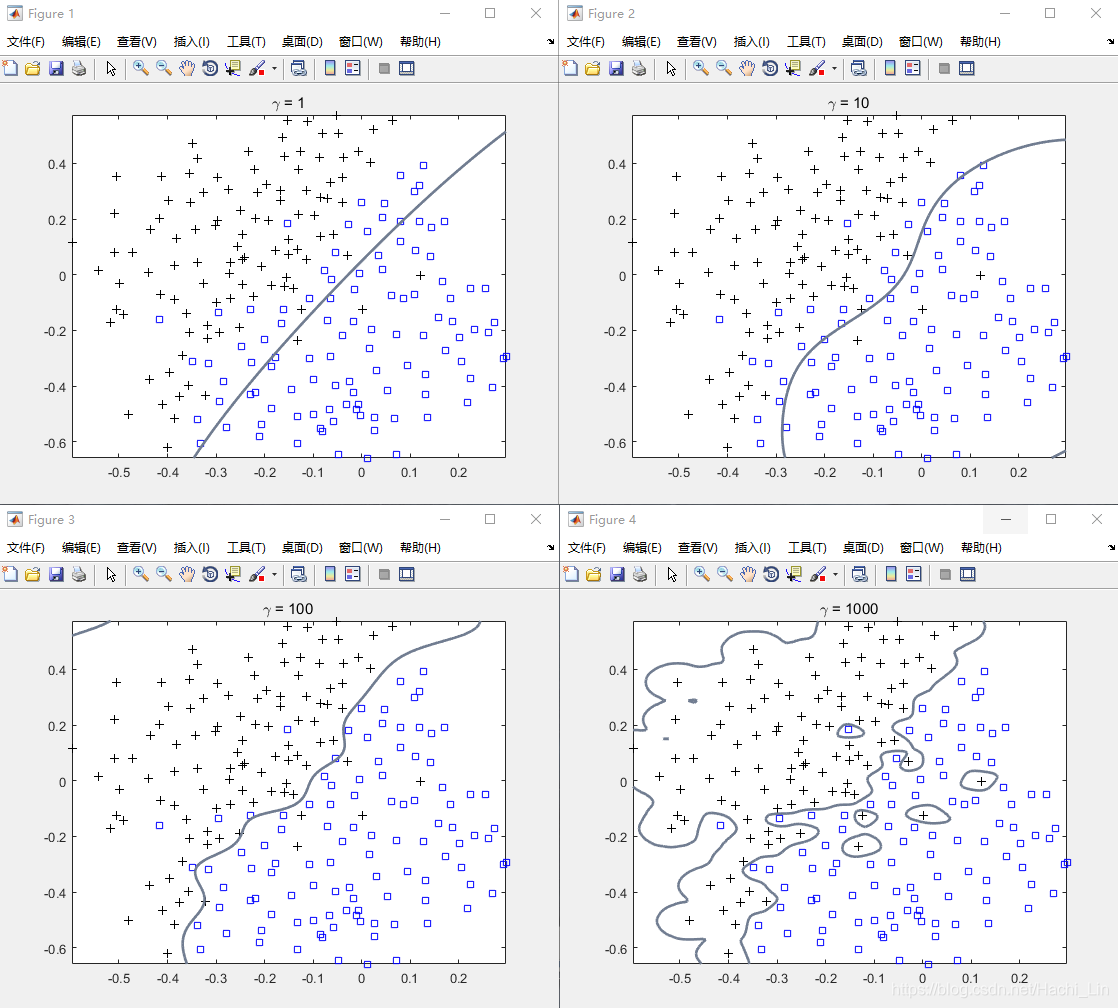

- 主函数part3.m

type = 'rbf';

train_name = load('training_3.text');

gamma = [1,10,100,1000];

C = 1;

for i = 1:length(gamma)

predict3(train_name,type,gamma(i),C);

if i ~= length(gamma)

figure;

end

end

- 实验结果

可以发现γ越大,会出现过拟合现象,而γ越小,会出现欠拟合现象。