Deep Residual Learning for Image Recognition

Introduction

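This is a classic neural network paper from Kaiming He and colleagues: the famous ResNet (residual network). The paper builds a new network structure to address the problem that, once a network gets very deep, the deeper network performs worse than a somewhat shallower one. It offers an explanation for this behavior and shows that ResNet handles the problem well.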

Background

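Deep convolutional neural networks already excel at image classification, and recent work shows that depth plays a crucial role in accuracy. But as networks get deeper, one question deserves attention: does stacking more and more layers keep improving the result? The first obstacle is vanishing or exploding gradients, which can largely be handled by normalized initialization and intermediate normalization layers. Yet once such a deep network does converge, a "degradation" problem appears: accuracy drops as depth increases, and this is not overfitting, because the training error rises as well. So even though we can keep stacking layers and still get the network to train and converge, degradation remains unsolved. The authors argue that fixing this with training tricks is far less thorough than fixing it by changing the network structure. The figure shows a 56-layer network with higher error than a 20-layer one.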

Deep Residual Learning

Residual Learning

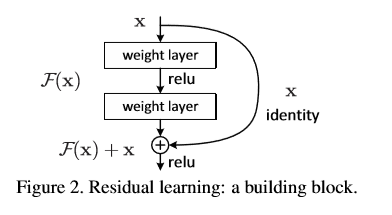

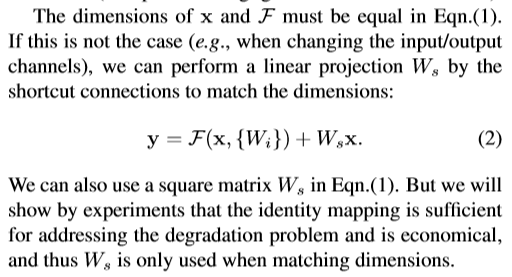

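ResNet builds each block so that the block's input is added to the output of the block's layers. Suppose x is the input to a block made of two layers. x first passes through a convolutional layer with ReLU activation and then through a second convolutional layer; call the result of these layers F(x). The input x is then added to F(x), and the sum is passed through ReLU to give the block's output. In a plain convolutional network the stacked layers have to produce the desired mapping H(x) directly. In ResNet the block outputs H(x) = F(x) + x, so the layers only have to fit the residual F(x) = H(x) - x. What is the benefit? The learning target changes: instead of fitting the full mapping, the layers fit the difference between output and input, and when the best mapping is the identity they only need to drive that residual to 0. As a result, the residual mapping is more sensitive to changes in the output.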
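To make the block structure concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the paper's implementation: small fully connected layers stand in for the convolutions, and the names `linear`, `residual_block`, and `plain_block` are hypothetical. It shows that with all weights at zero the residual block passes a non-negative input through unchanged, while the plain block outputs zeros.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, a) for a in v]


def linear(weights, v):
    """Multiply a weight matrix (list of rows) by the vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in weights]


def plain_block(x, w1, w2):
    """Plain two-layer block: the layers must produce H(x) themselves."""
    return relu(linear(w2, relu(linear(w1, x))))


def residual_block(x, w1, w2):
    """Residual block: H(x) = relu(F(x) + x), where F(x) = w2 * relu(w1 * x)."""
    f = linear(w2, relu(linear(w1, x)))             # residual branch F(x)
    return relu([fi + xi for fi, xi in zip(f, x)])  # the shortcut adds x back


zeros = [[0.0, 0.0], [0.0, 0.0]]  # all-zero weights, so F(x) = 0
x = [1.5, 2.0]

print(residual_block(x, zeros, zeros))  # [1.5, 2.0]  (identity mapping)
print(plain_block(x, zeros, zeros))     # [0.0, 0.0]
```

With F(x) = 0 the block reduces to the identity, which is why pushing the residual toward zero is enough whenever the identity is the best mapping.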

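For example, suppose we want to map 5 to 5.1. Without the residual, the mapping is F'(5) = 5.1. With the residual, H(5) = 5.1 and H(5) = F(5) + 5, so F(5) = 0.1. Here F' and F both denote mappings defined by the network parameters. The residual mapping is more sensitive to changes in the output. If the output moves from 5.1 to 5.2, the output of F' grows by 0.1/5.1 = 1/51 ≈ 2%, whereas in the residual structure F goes from 0.1 to 0.2, an increase of 100%. The same change in output therefore has a much larger effect on the weight adjustment in the residual case, which is the intuition for why it works better. The idea of a residual is to remove the shared main part so that the small changes stand out.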
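The arithmetic in this example is easy to check. The short sketch below just recomputes the two relative changes; the helper name `relative_change` is hypothetical.

```python
def relative_change(old, new):
    """Relative change when a value moves from old to new."""
    return (new - old) / old


x = 5.0
plain_old, plain_new = 5.1, 5.2                   # outputs of the plain mapping F'
res_old, res_new = plain_old - x, plain_new - x   # outputs of the residual F = H - x

print(f"{relative_change(plain_old, plain_new):.1%}")  # 2.0%
print(f"{relative_change(res_old, res_new):.1%}")      # 100.0%
```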

The figure below helps to illustrate this:

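In practice, the input x and the output of the layers may have different dimensions, in which case they cannot be added directly. To solve this, we transform x with a convolution (the paper uses a 1×1 convolution for this projection) so that it has the same dimensions as the output.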
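As a rough sketch of this projection idea: at a single spatial position, a 1×1 convolution is just a linear map over channels, so it can be modeled as a matrix applied to the channel vector. The function names and the weight values below are hypothetical and chosen only for illustration.

```python
def project(weights, v):
    """Linear map over channels (what a 1x1 convolution does at one position)."""
    return [sum(w * a for w, a in zip(row, v)) for row in weights]


def add_shortcut(f_x, x, w_s=None):
    """Return F(x) + x, projecting x with w_s first when the sizes differ."""
    shortcut = x if w_s is None else project(w_s, x)
    if len(shortcut) != len(f_x):
        raise ValueError("shortcut and residual branch must have the same size")
    return [f + s for f, s in zip(f_x, shortcut)]


x = [1.0, 2.0]          # block input with 2 channels
f_x = [0.5, 0.5, 0.5]   # residual branch output with 3 channels
w_s = [[1.0, 0.0],      # hypothetical 3x2 projection weights
       [0.0, 1.0],
       [1.0, 1.0]]

print(add_shortcut(f_x, x, w_s))  # [1.5, 2.5, 3.5]
```

Calling `add_shortcut(f_x, x)` without the projection raises a `ValueError`, which mirrors the shape mismatch described above.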

A block can be made of several layers; it does not have to be exactly two or three.

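The path that carries x around the layers is what the authors call a shortcut connection: like a short circuit, x is added to F(x) to produce the output H(x).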

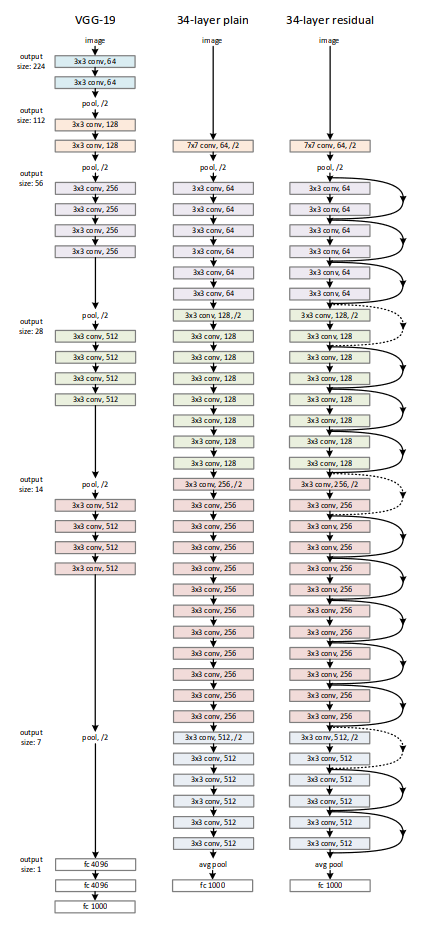

Network Architectures

Experiments

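I originally wanted to build a ResNet in Keras and run it on MNIST to see how it does, then remembered that MNIST images are only 28*28. Never mind. I worked through code I found online, wrote my own comments for it, and did not train it (how would I train this on my CPU?).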

# coding=utf-8
from keras.models import Model
from keras.layers import (Input, Dense, Dropout, BatchNormalization, Conv2D,
                          MaxPooling2D, AveragePooling2D, Activation, ZeroPadding2D)
# concatenate: merges channels, so the channel count grows while each channel's data is unchanged;
# add: keeps the channel count and sums the data of each channel element-wise
from keras.layers import add, Flatten
import numpy as np


# Described as a ResNet V2 implementation, with ReLU placed on the residual branch.
# Note: ReLU is applied inside each Conv2D (before BN) and there is no ReLU after the addition,
# whereas the ResNet V2 paper orders the branch as BN -> ReLU -> conv.
def Conv2d_BN(x, nb_filter, kernel_size, strides=(1, 1), padding='same', name=None):
    if name is not None:
        bn_name = name + '_bn'
        conv_name = name + '_conv'
    else:
        bn_name = None
        conv_name = None
    # Convolve the input and apply ReLU
    x = Conv2D(nb_filter, kernel_size, padding=padding, strides=strides, activation='relu', name=conv_name)(x)
    # Batch normalization over the channel axis (channels-last)
    x = BatchNormalization(axis=3, name=bn_name)(x)
    return x


# with_conv_shortcut=True is for blocks that change the shape: inpt can no longer be added
# directly, so it is also passed through a conv to match the shape of x
def Conv_Block(inpt, nb_filter, kernel_size, strides=(1, 1), with_conv_shortcut=False):
    x = Conv2d_BN(inpt, nb_filter=nb_filter, kernel_size=kernel_size, strides=strides, padding='same')  # conv + BN
    x = Conv2d_BN(x, nb_filter=nb_filter, kernel_size=kernel_size, padding='same')  # conv + BN again
    if with_conv_shortcut:  # the shape changes
        # bring the input to the same shape as x
        shortcut = Conv2d_BN(inpt, nb_filter=nb_filter, strides=strides, kernel_size=kernel_size)
        x = add([x, shortcut])  # add
        return x
    else:  # the shape does not change
        x = add([x, inpt])  # add directly
        return x


inpt = Input(shape=(224, 224, 3))
x = ZeroPadding2D((3, 3))(inpt)  # (230,230,3)
x = Conv2d_BN(x, nb_filter=64, kernel_size=(7, 7), strides=(2, 2), padding='valid')  # (112,112,64)
x = MaxPooling2D(pool_size=(3, 3), strides=(2, 2), padding='same')(x)  # (56,56,64)

x = Conv_Block(x, nb_filter=64, kernel_size=(3, 3))  # (56,56,64)
x = Conv_Block(x, nb_filter=64, kernel_size=(3, 3))
x = Conv_Block(x, nb_filter=64, kernel_size=(3, 3))

x = Conv_Block(x, nb_filter=128, kernel_size=(3, 3), strides=(2, 2), with_conv_shortcut=True)  # (28,28,128)
x = Conv_Block(x, nb_filter=128, kernel_size=(3, 3))
x = Conv_Block(x, nb_filter=128, kernel_size=(3, 3))
x = Conv_Block(x, nb_filter=128, kernel_size=(3, 3))

x = Conv_Block(x, nb_filter=256, kernel_size=(3, 3), strides=(2, 2), with_conv_shortcut=True)  # (14,14,256)
x = Conv_Block(x, nb_filter=256, kernel_size=(3, 3))
x = Conv_Block(x, nb_filter=256, kernel_size=(3, 3))
x = Conv_Block(x, nb_filter=256, kernel_size=(3, 3))

x = Conv_Block(x, nb_filter=512, kernel_size=(3, 3), strides=(2, 2), with_conv_shortcut=True)  # (7,7,512)
x = Conv_Block(x, nb_filter=512, kernel_size=(3, 3))
x = Conv_Block(x, nb_filter=512, kernel_size=(3, 3))
x = Conv_Block(x, nb_filter=512, kernel_size=(3, 3))

x = AveragePooling2D(pool_size=(7, 7))(x)
x = Flatten()(x)
x = Dense(1000, activation="softmax")(x)

model = Model(inputs=inpt, outputs=x)
model.compile(loss="categorical_crossentropy", optimizer="rmsprop", metrics=["accuracy"])
model.summary()

'''
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            (None, 224, 224, 3)  0
__________________________________________________________________________________________________
zero_padding2d_1 (ZeroPadding2D (None, 230, 230, 3)  0           input_1[0][0]
__________________________________________________________________________________________________
conv2d_1 (Conv2D)               (None, 112, 112, 64) 9472        zero_padding2d_1[0][0]
__________________________________________________________________________________________________
batch_normalization_1 (BatchNor (None, 112, 112, 64) 256         conv2d_1[0][0]
__________________________________________________________________________________________________
max_pooling2d_1 (MaxPooling2D)  (None, 56, 56, 64)   0           batch_normalization_1[0][0]
__________________________________________________________________________________________________
conv2d_2 (Conv2D)               (None, 56, 56, 64)   36928       max_pooling2d_1[0][0]
__________________________________________________________________________________________________
batch_normalization_2 (BatchNor (None, 56, 56, 64)   256         conv2d_2[0][0]
__________________________________________________________________________________________________
conv2d_3 (Conv2D)               (None, 56, 56, 64)   36928       batch_normalization_2[0][0]
__________________________________________________________________________________________________
batch_normalization_3 (BatchNor (None, 56, 56, 64)   256         conv2d_3[0][0]
__________________________________________________________________________________________________
add_1 (Add)                     (None, 56, 56, 64)   0           batch_normalization_3[0][0]
                                                                 max_pooling2d_1[0][0]
__________________________________________________________________________________________________
conv2d_4 (Conv2D)               (None, 56, 56, 64)   36928       add_1[0][0]
__________________________________________________________________________________________________
batch_normalization_4 (BatchNor (None, 56, 56, 64)   256         conv2d_4[0][0]
__________________________________________________________________________________________________
conv2d_5 (Conv2D)               (None, 56, 56, 64)   36928       batch_normalization_4[0][0]
__________________________________________________________________________________________________
batch_normalization_5 (BatchNor (None, 56, 56, 64)   256         conv2d_5[0][0]
__________________________________________________________________________________________________
add_2 (Add)                     (None, 56, 56, 64)   0           batch_normalization_5[0][0]
                                                                 add_1[0][0]
__________________________________________________________________________________________________
conv2d_6 (Conv2D)               (None, 56, 56, 64)   36928       add_2[0][0]
__________________________________________________________________________________________________
batch_normalization_6 (BatchNor (None, 56, 56, 64)   256         conv2d_6[0][0]
__________________________________________________________________________________________________
conv2d_7 (Conv2D)               (None, 56, 56, 64)   36928       batch_normalization_6[0][0]
__________________________________________________________________________________________________
batch_normalization_7 (BatchNor (None, 56, 56, 64)   256         conv2d_7[0][0]
__________________________________________________________________________________________________
add_3 (Add)                     (None, 56, 56, 64)   0           batch_normalization_7[0][0]
                                                                 add_2[0][0]
__________________________________________________________________________________________________
conv2d_8 (Conv2D)               (None, 28, 28, 128)  73856       add_3[0][0]
__________________________________________________________________________________________________
batch_normalization_8 (BatchNor (None, 28, 28, 128)  512         conv2d_8[0][0]
__________________________________________________________________________________________________
conv2d_9 (Conv2D)               (None, 28, 28, 128)  147584      batch_normalization_8[0][0]
__________________________________________________________________________________________________
conv2d_10 (Conv2D)              (None, 28, 28, 128)  73856       add_3[0][0]
__________________________________________________________________________________________________
batch_normalization_9 (BatchNor (None, 28, 28, 128)  512         conv2d_9[0][0]
__________________________________________________________________________________________________
batch_normalization_10 (BatchNo (None, 28, 28, 128)  512         conv2d_10[0][0]
__________________________________________________________________________________________________
add_4 (Add)                     (None, 28, 28, 128)  0           batch_normalization_9[0][0]
                                                                 batch_normalization_10[0][0]
__________________________________________________________________________________________________
conv2d_11 (Conv2D)              (None, 28, 28, 128)  147584      add_4[0][0]
__________________________________________________________________________________________________
batch_normalization_11 (BatchNo (None, 28, 28, 128)  512         conv2d_11[0][0]
__________________________________________________________________________________________________
conv2d_12 (Conv2D)              (None, 28, 28, 128)  147584      batch_normalization_11[0][0]
__________________________________________________________________________________________________
batch_normalization_12 (BatchNo (None, 28, 28, 128)  512         conv2d_12[0][0]
__________________________________________________________________________________________________
add_5 (Add)                     (None, 28, 28, 128)  0           batch_normalization_12[0][0]
                                                                 add_4[0][0]
__________________________________________________________________________________________________
conv2d_13 (Conv2D)              (None, 28, 28, 128)  147584      add_5[0][0]
__________________________________________________________________________________________________
batch_normalization_13 (BatchNo (None, 28, 28, 128)  512         conv2d_13[0][0]
__________________________________________________________________________________________________
conv2d_14 (Conv2D)              (None, 28, 28, 128)  147584      batch_normalization_13[0][0]
__________________________________________________________________________________________________
batch_normalization_14 (BatchNo (None, 28, 28, 128)  512         conv2d_14[0][0]
__________________________________________________________________________________________________
add_6 (Add)                     (None, 28, 28, 128)  0           batch_normalization_14[0][0]
                                                                 add_5[0][0]
__________________________________________________________________________________________________
conv2d_15 (Conv2D)              (None, 28, 28, 128)  147584      add_6[0][0]
__________________________________________________________________________________________________
batch_normalization_15 (BatchNo (None, 28, 28, 128)  512         conv2d_15[0][0]
__________________________________________________________________________________________________
conv2d_16 (Conv2D)              (None, 28, 28, 128)  147584      batch_normalization_15[0][0]
__________________________________________________________________________________________________
batch_normalization_16 (BatchNo (None, 28, 28, 128)  512         conv2d_16[0][0]
__________________________________________________________________________________________________
add_7 (Add)                     (None, 28, 28, 128)  0           batch_normalization_16[0][0]
                                                                 add_6[0][0]
__________________________________________________________________________________________________
conv2d_17 (Conv2D)              (None, 14, 14, 256)  295168      add_7[0][0]
__________________________________________________________________________________________________
batch_normalization_17 (BatchNo (None, 14, 14, 256)  1024        conv2d_17[0][0]
__________________________________________________________________________________________________
conv2d_18 (Conv2D)              (None, 14, 14, 256)  590080      batch_normalization_17[0][0]
__________________________________________________________________________________________________
conv2d_19 (Conv2D)              (None, 14, 14, 256)  295168      add_7[0][0]
__________________________________________________________________________________________________
batch_normalization_18 (BatchNo (None, 14, 14, 256)  1024        conv2d_18[0][0]
__________________________________________________________________________________________________
batch_normalization_19 (BatchNo (None, 14, 14, 256)  1024        conv2d_19[0][0]
__________________________________________________________________________________________________
add_8 (Add)                     (None, 14, 14, 256)  0           batch_normalization_18[0][0]
                                                                 batch_normalization_19[0][0]
__________________________________________________________________________________________________
conv2d_20 (Conv2D)              (None, 14, 14, 256)  590080      add_8[0][0]
__________________________________________________________________________________________________
batch_normalization_20 (BatchNo (None, 14, 14, 256)  1024        conv2d_20[0][0]
__________________________________________________________________________________________________
conv2d_21 (Conv2D)              (None, 14, 14, 256)  590080      batch_normalization_20[0][0]
__________________________________________________________________________________________________
batch_normalization_21 (BatchNo (None, 14, 14, 256)  1024        conv2d_21[0][0]
__________________________________________________________________________________________________
add_9 (Add)                     (None, 14, 14, 256)  0           batch_normalization_21[0][0]
                                                                 add_8[0][0]
__________________________________________________________________________________________________
conv2d_22 (Conv2D)              (None, 14, 14, 256)  590080      add_9[0][0]
__________________________________________________________________________________________________
batch_normalization_22 (BatchNo (None, 14, 14, 256)  1024        conv2d_22[0][0]
__________________________________________________________________________________________________
conv2d_23 (Conv2D)              (None, 14, 14, 256)  590080      batch_normalization_22[0][0]
__________________________________________________________________________________________________
batch_normalization_23 (BatchNo (None, 14, 14, 256)  1024        conv2d_23[0][0]
__________________________________________________________________________________________________
add_10 (Add)                    (None, 14, 14, 256)  0           batch_normalization_23[0][0]
                                                                 add_9[0][0]
__________________________________________________________________________________________________
conv2d_24 (Conv2D)              (None, 14, 14, 256)  590080      add_10[0][0]
__________________________________________________________________________________________________
batch_normalization_24 (BatchNo (None, 14, 14, 256)  1024        conv2d_24[0][0]
__________________________________________________________________________________________________
conv2d_25 (Conv2D)              (None, 14, 14, 256)  590080      batch_normalization_24[0][0]
__________________________________________________________________________________________________
batch_normalization_25 (BatchNo (None, 14, 14, 256)  1024        conv2d_25[0][0]
__________________________________________________________________________________________________
add_11 (Add)                    (None, 14, 14, 256)  0           batch_normalization_25[0][0]
                                                                 add_10[0][0]
__________________________________________________________________________________________________
conv2d_26 (Conv2D)              (None, 7, 7, 512)    1180160     add_11[0][0]
__________________________________________________________________________________________________
batch_normalization_26 (BatchNo (None, 7, 7, 512)    2048        conv2d_26[0][0]
__________________________________________________________________________________________________
conv2d_27 (Conv2D)              (None, 7, 7, 512)    2359808     batch_normalization_26[0][0]
__________________________________________________________________________________________________
conv2d_28 (Conv2D)              (None, 7, 7, 512)    1180160     add_11[0][0]
__________________________________________________________________________________________________
batch_normalization_27 (BatchNo (None, 7, 7, 512)    2048        conv2d_27[0][0]
__________________________________________________________________________________________________
batch_normalization_28 (BatchNo (None, 7, 7, 512)    2048        conv2d_28[0][0]
__________________________________________________________________________________________________
add_12 (Add)                    (None, 7, 7, 512)    0           batch_normalization_27[0][0]
                                                                 batch_normalization_28[0][0]
__________________________________________________________________________________________________
conv2d_29 (Conv2D)              (None, 7, 7, 512)    2359808     add_12[0][0]
__________________________________________________________________________________________________
batch_normalization_29 (BatchNo (None, 7, 7, 512)    2048        conv2d_29[0][0]
__________________________________________________________________________________________________
conv2d_30 (Conv2D)              (None, 7, 7, 512)    2359808     batch_normalization_29[0][0]
__________________________________________________________________________________________________
batch_normalization_30 (BatchNo (None, 7, 7, 512)    2048        conv2d_30[0][0]
__________________________________________________________________________________________________
add_13 (Add)                    (None, 7, 7, 512)    0           batch_normalization_30[0][0]
                                                                 add_12[0][0]
__________________________________________________________________________________________________
conv2d_31 (Conv2D)              (None, 7, 7, 512)    2359808     add_13[0][0]
__________________________________________________________________________________________________
batch_normalization_31 (BatchNo (None, 7, 7, 512)    2048        conv2d_31[0][0]
__________________________________________________________________________________________________
conv2d_32 (Conv2D)              (None, 7, 7, 512)    2359808     batch_normalization_31[0][0]
__________________________________________________________________________________________________
batch_normalization_32 (BatchNo (None, 7, 7, 512)    2048        conv2d_32[0][0]
__________________________________________________________________________________________________
add_14 (Add)                    (None, 7, 7, 512)    0           batch_normalization_32[0][0]
                                                                 add_13[0][0]
__________________________________________________________________________________________________
conv2d_33 (Conv2D)              (None, 7, 7, 512)    2359808     add_14[0][0]
__________________________________________________________________________________________________
batch_normalization_33 (BatchNo (None, 7, 7, 512)    2048        conv2d_33[0][0]
__________________________________________________________________________________________________
conv2d_34 (Conv2D)              (None, 7, 7, 512)    2359808     batch_normalization_33[0][0]
__________________________________________________________________________________________________
batch_normalization_34 (BatchNo (None, 7, 7, 512)    2048        conv2d_34[0][0]
__________________________________________________________________________________________________
add_15 (Add)                    (None, 7, 7, 512)    0           batch_normalization_34[0][0]
                                                                 add_14[0][0]
__________________________________________________________________________________________________
average_pooling2d_1 (AveragePoo (None, 1, 1, 512)    0           add_15[0][0]
__________________________________________________________________________________________________
flatten_1 (Flatten)             (None, 512)          0           average_pooling2d_1[0][0]
__________________________________________________________________________________________________
dense_1 (Dense)                 (None, 1000)         513000      flatten_1[0][0]
==================================================================================================
Total params: 25,558,760
Trainable params: 25,541,736
Non-trainable params: 17,024
__________________________________________________________________________________________________
'''
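The output shapes and parameter counts in this summary can be reproduced by hand, which is a useful sanity check on the architecture. The sketch below uses plain Python and hypothetical helper names. It assumes the usual Keras conventions: 'valid' padding gives floor((n - k) / s) + 1, 'same' padding gives ceil(n / s), a convolution has k*k*c_in*c_out weights plus c_out biases, and each BatchNormalization layer holds 4 values per channel.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a conv/pool layer along one spatial axis."""
    if padding == "same":
        return -(-size // stride)           # ceil(size / stride)
    return (size - kernel) // stride + 1    # 'valid' padding


def conv_params(kernel, c_in, c_out):
    """Weights plus biases of a kernel x kernel convolution."""
    return kernel * kernel * c_in * c_out + c_out


def bn_params(channels):
    """gamma, beta, moving mean and moving variance for each channel."""
    return 4 * channels


def stage_params(c_in, c_out, blocks, projection):
    """Parameters of one stage built from two-conv blocks as in Conv_Block."""
    total = 0
    for i in range(blocks):
        first_in = c_in if i == 0 else c_out
        total += conv_params(3, first_in, c_out) + bn_params(c_out)
        total += conv_params(3, c_out, c_out) + bn_params(c_out)
        if projection and i == 0:           # 3x3 conv on the shortcut
            total += conv_params(3, c_in, c_out) + bn_params(c_out)
    return total


# Spatial sizes: zero padding, 7x7 stem conv, max pooling, three strided stages
size = 224 + 2 * 3                       # ZeroPadding2D((3,3)) -> 230
size = conv_out(size, 7, 2, "valid")     # stem conv
sizes = [size]
for _ in range(4):                       # max pool, then stages 128, 256, 512
    size = conv_out(size, 3, 2, "same")
    sizes.append(size)
print(sizes)  # [112, 56, 28, 14, 7]

# Total parameter count
total = conv_params(7, 3, 64) + bn_params(64)   # stem conv + BN
total += stage_params(64, 64, 3, False)
total += stage_params(64, 128, 4, True)
total += stage_params(128, 256, 4, True)
total += stage_params(256, 512, 4, True)
total += 512 * 1000 + 1000                      # final Dense layer
print(total)  # 25558760
```

The computed total matches the "Total params" line in the summary, and the individual helpers reproduce rows such as 9472 for the first convolution and 36928 for each 64-channel 3×3 convolution.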