Contents

2.2.1 Transformation on DStreams

2.2.2 Output Operations on DStream

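3.1 Real-time WordCount with Spark Streaming

3.3 Pulling data from Flume (FlumePollWordCount, the common approach)

3.4 Flume pushing data to Spark (FlumePush, rarely used; only one machine can receive the data)

3.5 Integrating Spark Streaming with Kafka (Receiver approach)

3.6 Integrating Spark Streaming with Kafka (Direct approach)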

1 Introduction to Spark Streaming

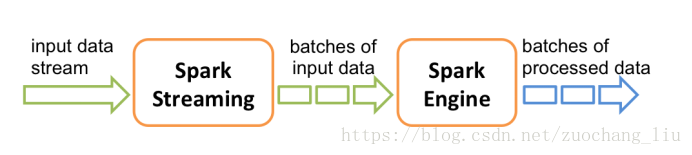

1.1 What is Spark Streaming

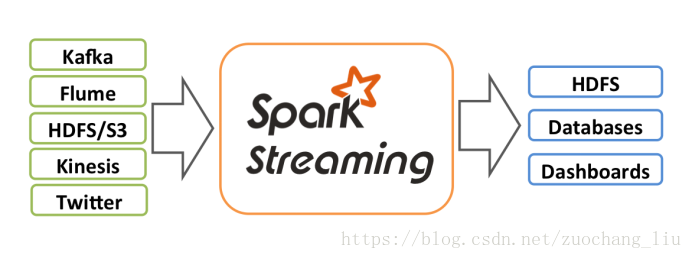

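Spark Streaming is similar to Apache Storm and is used to process streaming data. According to its official documentation, Spark Streaming offers high throughput and strong fault tolerance. It supports many input sources, for example Kafka, Flume, Twitter, ZeroMQ and plain TCP sockets. Once data has arrived, it can be processed with Spark's high-level operations such as map, reduce, join and window. The results can be stored in many places, such as HDFS or a database. Spark Streaming also integrates with MLlib (machine learning) and GraphX.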

1.2 Why learn Spark Streaming

- Easy to use

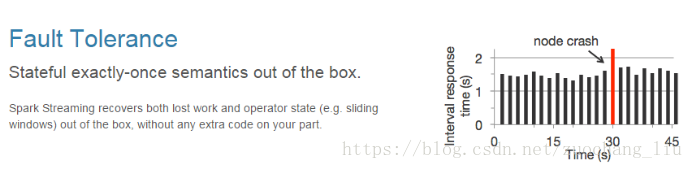

- Fault tolerant

- Integrates easily with the rest of the Spark stack

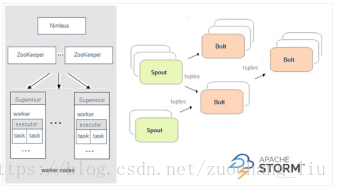

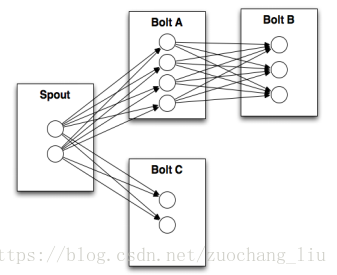

1.3 Spark compared with Storm

| Spark | Storm |
| --- | --- |
| Language: Scala | Language: Clojure |
| Programming model: DStream | Programming model: Spout/Bolt |

2 DStream

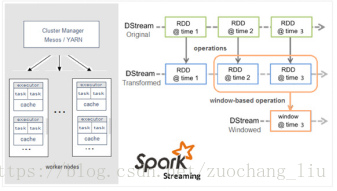

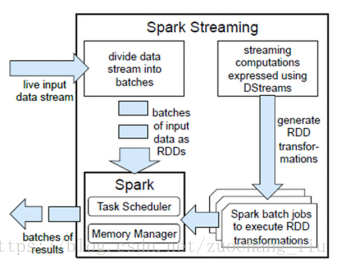

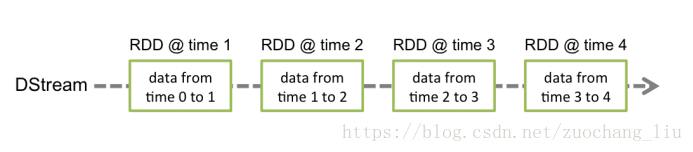

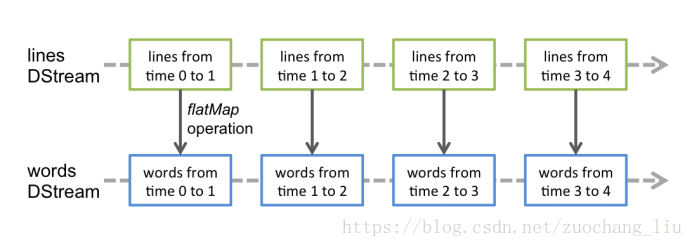

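2.1 What is a DStream

A Discretized Stream (DStream) is the basic abstraction of Spark Streaming. It represents a continuous stream of data: either the input stream itself or the stream that results from applying Spark operations to it. Internally, a DStream is represented as a continuous series of RDDs, each holding the data of one time interval, as the figures in this section show.

Operations on the data are likewise applied RDD by RDD.

The computation itself is carried out by the Spark engine.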
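To make the "series of RDDs" idea concrete, here is a minimal sketch in plain Scala (no Spark dependency) that cuts a list of timestamped events into fixed batch intervals. The `Event` type and the `toBatches` helper are hypothetical names introduced only for illustration; they are not part of the Spark API.

```scala
object MicroBatchSketch {
  // Hypothetical event: arrival time in milliseconds plus a payload.
  final case class Event(timeMs: Long, value: String)

  // Group events into consecutive batches of `batchMs` milliseconds.
  // Each returned batch plays the role of one RDD in the DStream.
  def toBatches(events: Seq[Event], batchMs: Long): Seq[(Long, Seq[String])] =
    events
      .groupBy(e => e.timeMs / batchMs) // index of the batch the event falls into
      .toSeq
      .sortBy(_._1)
      .map { case (idx, es) => (idx * batchMs, es.sortBy(_.timeMs).map(_.value)) }

  def main(args: Array[String]): Unit = {
    val events = Seq(Event(100, "a"), Event(4900, "b"), Event(5100, "c"), Event(12000, "d"))
    // With a 5000 ms interval, "a" and "b" share the first batch.
    toBatches(events, 5000).foreach(println)
  }
}
```

One simplification to keep in mind: this sketch only returns batches that contain data, whereas Spark Streaming produces an RDD for every interval, even an empty one.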

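2.2 DStream operations

The operations available on a DStream resemble those on an RDD and fall into two groups: Transformations and Output Operations. The transformations also include some special operations, such as updateStateByKey(), transform() and the various window operations.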

2.2.1 Transformation on DStreams

| Transformation | Meaning |
| --- | --- |
| map(func) | Return a new DStream by passing each element of the source DStream through a function func. |
| flatMap(func) | Similar to map, but each input item can be mapped to 0 or more output items. |
| filter(func) | Return a new DStream by selecting only the records of the source DStream on which func returns true. |
| repartition(numPartitions) | Changes the level of parallelism in this DStream by creating more or fewer partitions. |
| union(otherStream) | Return a new DStream that contains the union of the elements in the source DStream and otherStream. |
| count() | Return a new DStream of single-element RDDs by counting the number of elements in each RDD of the source DStream. |
| reduce(func) | Return a new DStream of single-element RDDs by aggregating the elements in each RDD of the source DStream using a function func (which takes two arguments and returns one). The function should be associative so that it can be computed in parallel. |
| countByValue() | When called on a DStream of elements of type K, return a new DStream of (K, Long) pairs where the value of each key is its frequency in each RDD of the source DStream. |
| reduceByKey(func, [numTasks]) | When called on a DStream of (K, V) pairs, return a new DStream of (K, V) pairs where the values for each key are aggregated using the given reduce function. Note: By default, this uses Spark's default number of parallel tasks (2 for local mode, and in cluster mode the number is determined by the config property spark.default.parallelism) to do the grouping. You can pass an optional numTasks argument to set a different number of tasks. |
| join(otherStream, [numTasks]) | When called on two DStreams of (K, V) and (K, W) pairs, return a new DStream of (K, (V, W)) pairs with all pairs of elements for each key. |
| cogroup(otherStream, [numTasks]) | When called on a DStream of (K, V) and (K, W) pairs, return a new DStream of (K, Seq[V], Seq[W]) tuples. |
| transform(func) | Return a new DStream by applying a RDD-to-RDD function to every RDD of the source DStream. This can be used to do arbitrary RDD operations on the DStream. |
| updateStateByKey(func) | Return a new "state" DStream where the state for each key is updated by applying the given function on the previous state of the key and the new values for the key. This can be used to maintain arbitrary state data for each key. |

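Special transformations

- UpdateStateByKey Operation

The updateStateByKey operation keeps a record of history; the cumulative WordCount example in section 3.2 relies on it. Without updateStateByKey, each batch is analysed and its result is output, but nothing is retained afterwards.

- Transform Operation

The transform operation allows arbitrary RDD-to-RDD functions to be applied to a DStream, which makes it easy to use RDD operations that the DStream API does not expose directly. MLlib (machine learning) and GraphX are also combined with streaming through this function.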

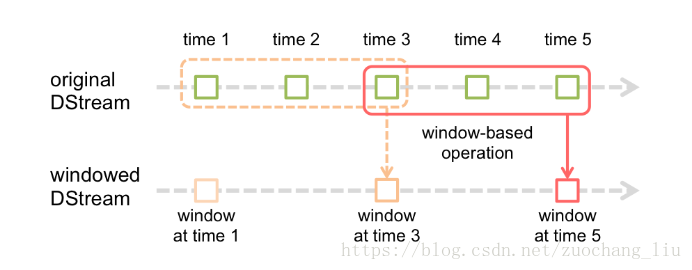

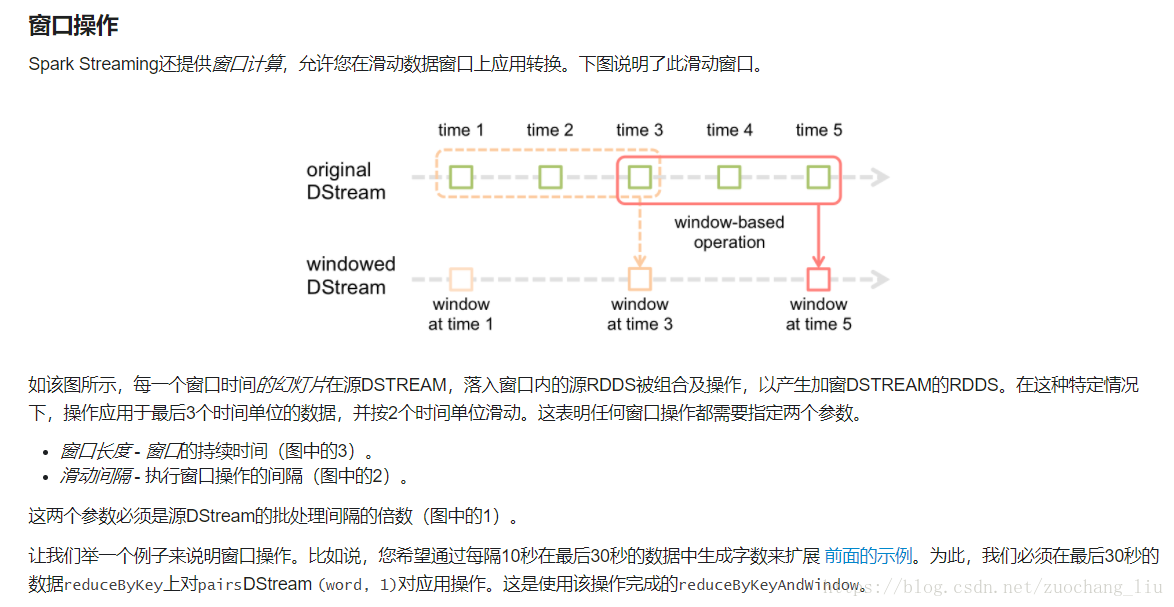

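- Window Operations

Window operations are somewhat similar to State in Storm: by setting the window length and the sliding interval, you can dynamically obtain the current running state of the stream.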
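As a rough illustration of what "keeping history" means for updateStateByKey, the following plain-Scala sketch folds successive batches into a running state. It runs without Spark; `step` is a hypothetical helper that imitates, in a single process, what the operation does per key, and the `update` function has the same shape as the simple update function shown commented out in section 3.2.

```scala
object RunningStateSketch {
  // New values of one key in the current batch + previous state => new state.
  def update(newValues: Seq[Int], previous: Option[Int]): Option[Int] =
    Some(newValues.sum + previous.getOrElse(0))

  // Apply one batch of (word, count) pairs to the state kept from earlier batches.
  def step(state: Map[String, Int], batch: Seq[(String, Int)]): Map[String, Int] = {
    val grouped = batch.groupBy(_._1).map { case (k, kvs) => k -> kvs.map(_._2) }
    // Every key seen so far is updated, including keys absent from this batch.
    val keys = state.keySet ++ grouped.keySet
    keys.flatMap { k =>
      update(grouped.getOrElse(k, Seq.empty), state.get(k)).map(k -> _)
    }.toMap
  }

  def main(args: Array[String]): Unit = {
    val batches = Seq(
      Seq("hello" -> 1, "tom" -> 1, "hello" -> 1),
      Seq("hello" -> 1, "jerry" -> 1)
    )
    println(batches.foldLeft(Map.empty[String, Int])(step))
  }
}
```

Without the state carried from one `step` to the next, the second batch would report hello only once; with it, the total for hello is 3.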

2.2.2 Output Operations on DStream

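Output operations write the data of a DStream to an external database or file system. Like RDD actions, they trigger execution: the streaming program only starts its actual computation once an output operation has been called.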

| Output Operation | Meaning |
| --- | --- |
| print() | Prints the first ten elements of every batch of data in a DStream on the driver node running the streaming application. This is useful for development and debugging. |
| saveAsTextFiles(prefix, [suffix]) | Save this DStream's contents as text files. The file name at each batch interval is generated based on prefix and suffix: "prefix-TIME_IN_MS[.suffix]". |
| saveAsObjectFiles(prefix, [suffix]) | Save this DStream's contents as SequenceFiles of serialized Java objects. The file name at each batch interval is generated based on prefix and suffix: "prefix-TIME_IN_MS[.suffix]". |
| saveAsHadoopFiles(prefix, [suffix]) | Save this DStream's contents as Hadoop files. The file name at each batch interval is generated based on prefix and suffix: "prefix-TIME_IN_MS[.suffix]". |
| foreachRDD(func) | The most generic output operator that applies a function, func, to each RDD generated from the stream. This function should push the data in each RDD to an external system, such as saving the RDD to files, or writing it over the network to a database. Note that the function func is executed in the driver process running the streaming application, and will usually have RDD actions in it that will force the computation of the streaming RDDs. |

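3 Hands-on examples

3.1 Real-time WordCount with Spark Streaming

Install and start the producer

First, install the nc tool with YUM on a Linux machine (IP: 192.168.10.101):

yum install -y nc

Start a server that listens on port 9999: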

nc -lk 9999

package com.zgcbank.sparkstreaming

import org.apache.log4j.{Level, Logger}

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.streaming.{Seconds, StreamingContext}

object StreamingWordCount {

// Suppress verbose Spark logging

Logger.getLogger("org.apache").setLevel(Level.ERROR)

def main(args: Array[String]) {

//StreamingContext

val conf = new SparkConf()

.setAppName("StreamingWordCount")

.setMaster("local[2]")

val sc = new SparkContext(conf)

val ssc = new StreamingContext(sc, Seconds(5))

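// Receive data from the nc server started above

val ds = ssc.socketTextStream("192.168.10.101", 9999)

// A DStream is a sequence of RDDs, one per batch interval

// Sample input: hello tom hello jerry

val result = ds.flatMap(_.split(" ")).map((_, 1)).reduceByKey(_+_)

// Print the result of each batch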

result.print()

ssc.start()

ssc.awaitTermination()

}

}
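The per-batch logic of this job can be tried without a cluster. The sketch below is plain Scala on ordinary collections, not Spark code: `groupBy` plus a sum stands in for `reduceByKey`, and `count` is a hypothetical helper name.

```scala
object BatchWordCountSketch {
  // Count the words in the lines of a single batch, mirroring
  // flatMap(_.split(" ")).map((_, 1)).reduceByKey(_ + _).
  def count(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))
      .map((_, 1))
      .groupBy(_._1)
      .map { case (word, pairs) => word -> pairs.map(_._2).sum }

  def main(args: Array[String]): Unit = {
    // One batch containing the sample line from the listing.
    println(count(Seq("hello tom hello jerry")))
    // A later batch is counted on its own: nothing carries over.
    println(count(Seq("hello")))
  }
}
```

Each call only sees its own batch, which is exactly the limitation that the running-total version in section 3.2 addresses.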

3.2 Real-time WordCount with running totals

package com.zgcbank.sparkstreaming

import org.apache.log4j.{Level, Logger}

import org.apache.spark.{HashPartitioner, SparkConf}

import org.apache.spark.streaming.dstream.ReceiverInputDStream

import org.apache.spark.streaming.{Seconds, StreamingContext}

/**

* Created by 32047 on 2018/7/4.

*/

object WordCountAll {

// newValues: occurrences of the word in the current batch; runningCount: the accumulated result of earlier batches

/* def updateFunction(newValues: Seq[Int], runningCount: Option[Int]): Option[Int] = {

val newCount = newValues.sum + runningCount.getOrElse(0) // add the current batch to the total of earlier batches to get the new running total

Some(newCount)

}*/

/**

* String: the word, e.g. hello

* Seq[Int]: occurrences of the word in the current batch

* Option[Int]: the accumulated result of earlier batches

*/

val updateFunc = (iter: Iterator[(String, Seq[Int], Option[Int])]) => {

//iter.flatMap(it=>Some(it._2.sum + it._3.getOrElse(0)).map(x=>(it._1,x)))

iter.flatMap{case(x,y,z)=>Some(y.sum + z.getOrElse(0)).map(m=>(x, m))}

}

// Suppress verbose Spark logging

Logger.getLogger("org.apache").setLevel(Level.ERROR)

def main(args: Array[String]) {

// At least two threads are required: one receives the data, the other runs the computation

val conf = new SparkConf().setMaster("local[2]").setAppName("NetworkWordCount")

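// Serializer used by the Spark job

.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

.set("spark.rdd.compress", "true") // compress serialized RDD partitions to save memory

// If garbage-collection pauses become too long, consider tuning the JVM GC options

// Creating the StreamingContext also creates the SparkContext

// The batch interval should be >= the total processing time of a batch (which depends on the batch size and on the number and configuration of cluster nodes)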

val ssc = new StreamingContext(conf, Seconds(5))

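// Set the checkpoint directory; on a cluster this should be on shared storage

ssc.checkpoint("c://aaa")

// Create a DStream that connects to hostname:port, e.g. localhost:9999

val lines: ReceiverInputDStream[String] = ssc.socketTextStream("192.168.175.101", 44444)

// updateStateByKey accumulates results across batches, but needs a user-defined update function: updateFunc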

val results = lines.flatMap(_.split(" ")).map((_,1)).updateStateByKey(updateFunc, new HashPartitioner(ssc.sparkContext.defaultParallelism), true)

// Print the results to the console

results.print()

// Start the computation

ssc.start()

// Wait for the computation to terminate

ssc.awaitTermination()

}

}
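The iterator form of `updateFunc` used in the listing above can be exercised on its own, since it is an ordinary Scala function. The sketch below copies it and places a more direct `map`-based spelling next to it; `updateFuncSimple` is a hypothetical name, offered as an equivalent reading rather than as the author's code.

```scala
object UpdateFuncSketch {
  // As in the listing: (key, values in this batch, previous state) => (key, new state)
  val updateFunc = (iter: Iterator[(String, Seq[Int], Option[Int])]) =>
    iter.flatMap { case (x, y, z) => Some(y.sum + z.getOrElse(0)).map(m => (x, m)) }

  // Same result, written with map because every key always yields exactly one value.
  val updateFuncSimple = (iter: Iterator[(String, Seq[Int], Option[Int])]) =>
    iter.map { case (word, counts, previous) => (word, counts.sum + previous.getOrElse(0)) }

  def main(args: Array[String]): Unit = {
    val input: List[(String, Seq[Int], Option[Int])] =
      List(("hello", Seq(1, 1), Some(3)), ("tom", Seq(1), None))
    println(updateFunc(input.iterator).toList)
    println(updateFuncSimple(input.iterator).toList)
  }
}
```

The `flatMap` over `Some(...)` matters only if the function may return `None` to drop a key from the state; this update function never does.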

3.3 Pulling data from Flume (FlumePollWordCount, the common approach)

flume-poll.conf

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# source

a1.sources.r1.type = spooldir

a1.sources.r1.spoolDir = /export/data/flume

a1.sources.r1.fileHeader = true

# Describe the sink

a1.sinks.k1.type = org.apache.spark.streaming.flume.sink.SparkSink

a1.sinks.k1.hostname = 172.16.0.11

a1.sinks.k1.port = 8888

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

package com.zgcbank.sparkstreaming

import java.net.InetSocketAddress

import org.apache.spark.SparkConf

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.flume.FlumeUtils

import org.apache.spark.streaming.{Seconds, StreamingContext}

object FlumePollWordCount {

def main(args: Array[String]) {

val conf = new SparkConf().setAppName("FlumePollWordCount").setMaster("local[2]")

val ssc = new StreamingContext(conf, Seconds(5))

// Pull data from Flume (the address of the Flume sink; several addresses may be given)

val address = Seq(new InetSocketAddress("172.16.0.11", 8888))

val flumeStream = FlumeUtils.createPollingStream(ssc, address, StorageLevel.MEMORY_AND_DISK)

val words = flumeStream.flatMap(x => new String(x.event.getBody().array()).split(" ")).map((_,1))

val results = words.reduceByKey(_+_)

results.print()

ssc.start()

ssc.awaitTermination()

}

}
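The listing decodes each event with `new String(x.event.getBody().array())`. `ByteBuffer.array()` returns the whole backing array and ignores the buffer's position and limit, and `new String(bytes)` uses the platform default charset. The sketch below shows a more defensive decode in plain Scala; it assumes the payload is UTF-8 text, and `bodyAsString` is a hypothetical helper name.

```scala
import java.nio.ByteBuffer
import java.nio.charset.StandardCharsets

object EventBodySketch {
  // Decode only the readable bytes (position to limit) with an explicit charset.
  // duplicate() leaves the position of the original buffer untouched.
  def bodyAsString(body: ByteBuffer): String =
    StandardCharsets.UTF_8.decode(body.duplicate()).toString

  def main(args: Array[String]): Unit = {
    val bytes = "hello tom".getBytes(StandardCharsets.UTF_8)
    // A buffer that exposes only the last three bytes of the array.
    val window = ByteBuffer.wrap(bytes, 6, 3)
    println(bodyAsString(window))                             // readable part only
    println(new String(window.array(), StandardCharsets.UTF_8)) // whole backing array
  }
}
```

Whether the buffers delivered by Flume ever use an offset depends on the Flume and Avro versions in use, so treat this as a precaution rather than a required fix.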

3.4 Flume pushing data to Spark (FlumePush, rarely used; only one machine can receive the data)

flume-push.conf

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# source

a1.sources.r1.type = spooldir

a1.sources.r1.spoolDir = /export/data/flume

a1.sources.r1.fileHeader = true

# Describe the sink

a1.sinks.k1.type = avro

# The receiving side: the host on which the Spark Streaming receiver listens

a1.sinks.k1.hostname = 192.168.31.172

a1.sinks.k1.port = 8888

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

package com.zgcbank.sparkstreaming

import org.apache.spark.SparkConf

import org.apache.spark.streaming.flume.FlumeUtils

import org.apache.spark.streaming.{Seconds, StreamingContext}

object FlumePushWordCount {

def main(args: Array[String]) {

// val host = args(0)

//val port = args(1).toInt

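// Suppress verbose Spark logging (replaces the project helper LoggerLevels, which is not shown in this document)

org.apache.log4j.Logger.getLogger("org.apache").setLevel(org.apache.log4j.Level.ERROR)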

val conf = new SparkConf().setAppName("FlumeWordCount")//.setMaster("local[2]")

val ssc = new StreamingContext(conf, Seconds(5))

// Push approach: Flume sends the data to Spark

val flumeStream = FlumeUtils.createStream(ssc, "192.168.31.172", 8888)

// The actual content of a Flume event is obtained through event.getBody()

val words = flumeStream.flatMap(x => new String(x.event.getBody().array()).split(" ")).map((_, 1))

val results = words.reduceByKey(_ + _)

results.print()

ssc.start()

ssc.awaitTermination()

}

}

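3.5 Integrating Spark Streaming with Kafka (Receiver approach)

This approach is rarely used: the receiver pulls data continuously, surplus data piles up in memory, and a checkpoint has to be configured as well, so it is inefficient.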

package com.zgcbank.sparkstreaming

import org.apache.spark.storage.StorageLevel

import org.apache.spark.{HashPartitioner, SparkConf}

import org.apache.spark.streaming.kafka.KafkaUtils

import org.apache.spark.streaming.{Seconds, StreamingContext}

object KafkaWordCount {

val updateFunc = (iter: Iterator[(String, Seq[Int], Option[Int])]) => {

//iter.flatMap(it=>Some(it._2.sum + it._3.getOrElse(0)).map(x=>(it._1,x)))

iter.flatMap { case (x, y, z) => Some(y.sum + z.getOrElse(0)).map(i => (x, i)) }

}

def main(args: Array[String]) {

val Array(zkQuorum, group, topics, numThreads) = args

val sparkConf = new SparkConf().setAppName("KafkaWordCount").setMaster("local[2]")

val ssc = new StreamingContext(sparkConf, Seconds(5))

ssc.checkpoint("c://ck2")

val topicMap = topics.split(",").map((_, numThreads.toInt)).toMap

val data = KafkaUtils.createStream(ssc, zkQuorum, group, topicMap, StorageLevel.MEMORY_AND_DISK_SER)

val words = data.map(_._2).flatMap(_.split(" "))

val wordCounts = words.map((_, 1)).updateStateByKey(updateFunc, new HashPartitioner(ssc.sparkContext.defaultParallelism), true)

wordCounts.print()

ssc.start()

ssc.awaitTermination()

}

}
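The job expects four program arguments: the ZooKeeper quorum, the consumer group, a comma-separated topic list and the number of consumer threads per topic. The small sketch below isolates the `topicMap` expression from the listing so it can be tried in plain Scala; the topic names in the example are made up.

```scala
object TopicMapSketch {
  // Same expression as in the listing: every topic gets the same thread count.
  def topicMap(topics: String, numThreads: String): Map[String, Int] =
    topics.split(",").map((_, numThreads.toInt)).toMap

  def main(args: Array[String]): Unit = {
    // Hypothetical argument values, in the order the listing destructures them.
    val Array(zkQuorum, group, topics, numThreads) =
      Array("zk-01:2181", "g001", "test001,test002", "2")
    println(topicMap(topics, numThreads))
  }
}
```

Note that `numThreads.toInt` throws a `NumberFormatException` for a non-numeric argument, and the `Array(...)` pattern throws a `MatchError` if the argument count is not exactly four.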

3.6 Integrating Spark Streaming with Kafka (Direct approach)

3.6.1 Committing offsets to Kafka manually

package spark.streaming.kafka

import org.apache.kafka.common.serialization.StringDeserializer

import org.apache.log4j.{Level, Logger}

import org.apache.spark.SparkConf

import org.apache.spark.streaming.kafka010._

import org.apache.spark.streaming.{Seconds, StreamingContext}

import spark.streaming.utils.JPools

/**

* Spark Streaming integrated with Kafka

*/

object StreamingKafka {

Logger.getLogger("org.apache").setLevel(Level.WARN)

def main(args: Array[String]): Unit = {

val sparkConf = new SparkConf()

.setMaster("local[*]")

.setAppName(s"${this.getClass.getSimpleName}")

.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

val ssc = new StreamingContext(sparkConf, Seconds(2))

// Kafka connection parameters

val kafkaParams = Map[String, Object](

"bootstrap.servers" -> "kk-01:9092,kk-02:9092,kk-03:9092",

"key.deserializer" -> classOf[StringDeserializer],

"value.deserializer" -> classOf[StringDeserializer],

"group.id" -> "g001",

"auto.offset.reset" -> "earliest",

"enable.auto.commit" -> (false: java.lang.Boolean) // whether offsets are committed automatically; disabled here because they are committed manually

)

// The topics to consume from

val topics = Array("test001") // 3

// Pull data directly from the topics with KafkaUtils (direct approach)

val stream = KafkaUtils.createDirectStream[String, String](

ssc,

// If the Kafka brokers and the Spark executors run on different machines, use PreferConsistent, which distributes partitions evenly across the available executors.

// If the executors run on the same hosts as the Kafka brokers, use PreferBrokers.

LocationStrategies.PreferConsistent,

ConsumerStrategies.Subscribe[String, String](topics, kafkaParams) // the subscribed topics

)

// Word count

// val currentBatchResult: DStream[(String, Int)] = stream.flatMap(crd => crd.value().split(" ")).map(word => (word, 1)).reduceByKey(_ + _)

// transform can change the data format

stream.foreachRDD(rdd => {

// Only compute when the current RDD contains data; otherwise skip it

if (!rdd.isEmpty()) {

// Offsets can only be read from the RDDs of the original direct stream, before any transformation

val offsetRanges: Array[OffsetRange] = rdd.asInstanceOf[HasOffsetRanges].offsetRanges

// Word count with history: the totals accumulate in Redis

rdd.flatMap(crd => crd.value().split(" "))

.map(word => (word, 1))

.reduceByKey(_ + _)

.foreachPartition(itr => {

val jedis = JPools.getJedis

itr.foreach(tp => {

// hashes key:[fields: values]

jedis.hincrBy("hellword-29-0320", tp._1, tp._2)

})

jedis.close()

})

/*for (o <- offsetRanges ) {

println(s"${o.topic} ${o.partition} ${o.fromOffset} ${o.untilOffset}")

}*/

// After the computation finishes, commit the offsets manually; they end up in Kafka's __consumer_offsets topic (available from Kafka 0.10 on)

stream.asInstanceOf[CanCommitOffsets].commitAsync(offsetRanges)

}

})

ssc.start()

ssc.awaitTermination()

}

}
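One consequence of this design is worth spelling out. The counts are written to Redis first and the offsets are committed afterwards (and asynchronously), so a failure between the two steps makes the batch be read again on restart. Because HINCRBY adds to the stored value, a replayed batch is counted twice. The sketch below imitates this with an in-memory map in plain Scala; `Counts` and `writeBatch` are hypothetical stand-ins, not the Jedis API.

```scala
import scala.collection.mutable

object ReplaySketch {
  // In-memory stand-in for the Redis hash that the job updates with HINCRBY.
  final class Counts {
    private val hash = mutable.Map.empty[String, Long]
    def hincrBy(field: String, delta: Long): Unit =
      hash.update(field, hash.getOrElse(field, 0L) + delta)
    def snapshot: Map[String, Long] = hash.toMap
  }

  // Write one batch of per-word counts, as the foreachPartition body does.
  def writeBatch(counts: Counts, batch: Seq[(String, Int)]): Unit =
    batch.foreach { case (word, n) => counts.hincrBy(word, n.toLong) }

  def main(args: Array[String]): Unit = {
    val counts = new Counts
    val batch = Seq("hello" -> 2, "tom" -> 1)
    writeBatch(counts, batch) // first attempt: written, but offsets not yet committed
    writeBatch(counts, batch) // replay after a restart: the same batch is added again
    println(counts.snapshot)
  }
}
```

If exact totals matter, one option is to store the counts and the offsets together in a single transaction, which is the direction sections 3.6.2 and 3.6.3 move in by managing offsets outside Kafka.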

3.6.2 Managing offsets yourself in MySQL

package spark.streaming.kafka

import java.sql.DriverManager

import org.apache.kafka.common.TopicPartition

import org.apache.kafka.common.serialization.StringDeserializer

import org.apache.spark.SparkConf

import org.apache.spark.streaming.kafka010._

import org.apache.spark.streaming.{Seconds, StreamingContext}

import spark.streaming.utils.OffsetManager

/**

* Manage offsets yourself in MySQL

*/

object StreamingKafka_MySQL {

def main(args: Array[String]): Unit = {

val sparkConf = new SparkConf()

.setMaster("local[*]")

.setAppName(s"${this.getClass.getSimpleName}")

.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

val ssc = new StreamingContext(sparkConf, Seconds(1))

// Kafka parameters

val groupId = "laoyang"

val kafkaParams = Map[String, Object](

"bootstrap.servers" -> "kk-01:9092,kk-02:9092,kk-03:9092",

"key.deserializer" -> classOf[StringDeserializer],

"value.deserializer" -> classOf[StringDeserializer],

"group.id" -> groupId,

"enable.auto.commit" -> (false: java.lang.Boolean),

"auto.offset.reset" -> "earliest"

)

// Topics

val topics = Array("test001")

val dbOffsetMap = OffsetManager./*isFirstRuning(topics, groupId)*/isFirstRuningV2(topics, groupId)

val stream = if (dbOffsetMap.size > 0) { // not the first start: stored offsets exist

println("------------- not the first start -------------")

KafkaUtils.createDirectStream[String, String](

ssc,

LocationStrategies.PreferConsistent,

ConsumerStrategies.Assign[String, String](dbOffsetMap.keys, kafkaParams, dbOffsetMap)

)

} else { // first start

println("------------- first start -------------")

KafkaUtils.createDirectStream[String, String](ssc,

LocationStrategies.PreferConsistent,

ConsumerStrategies.Subscribe[String, String](topics, kafkaParams)

)

}

stream.foreachRDD(rdd => {

// Read the offsets first: the offset ranges of the Kafka data in this RDD; the array size equals the number of Kafka partitions

val offsetRanges: Array[OffsetRange] = rdd.asInstanceOf[HasOffsetRanges].offsetRanges

// Computation: word count

rdd.flatMap(cr => cr.value().split(" ")).map((_, 1)).reduceByKey(_ + _).foreach(println)

// Store the offsets in MySQL

// 1. Open a connection

val connection = DriverManager.getConnection("jdbc:mysql://localhost:3306/offset", "root", "123456")

/*// 2. Create a PreparedStatement for inserts

val insert = connection.prepareStatement("insert into streaming_offset_29 values(?,?,?,?)")

// Statement for updates

val update = connection.prepareStatement("update streaming_offset_29 set untilOffset = ? where topic=? and partitionId=? and groupId=?")

// Statement for lookups

val search = connection.prepareStatement("select count(*) as ct from streaming_offset_29 where topic=? and partitionId=? and groupId=?")

// Set the parameters

for (o <- offsetRanges) {

// Check whether the row to be written already exists: update it if so, insert it otherwise

search.setString(1, o.topic)

search.setInt(2, o.partition)

search.setString(3, groupId)

// Result set of the lookup

val resultSet = search.executeQuery()

while (resultSet.next()) {

val ct = resultSet.getInt("ct")

// ct > 0 means the primary key (groupId, topic, partitionId) already exists, so the row has to be updated

if (ct > 0) {

update.setString(1, o.untilOffset.toString)

update.setString(2, o.topic)

update.setInt(3, o.partition)

update.setString(4, groupId)

update.executeUpdate()

}

// Otherwise insert a new row

else {

insert.setString(1, o.topic)

insert.setInt(2, o.partition)

insert.setString(3, groupId)

insert.setString(4, o.untilOffset.toString)

insert.executeUpdate()

}

}

}

if (insert != null) insert.close()

if (update != null) update.close()

if (search != null) search.close()

*/

val pstmt = connection.prepareStatement("replace into streaming_offset_29 values(?,?,?,?)")

for (o <- offsetRanges ) {

pstmt.setString(1, o.topic)

pstmt.setInt(2, o.partition)

pstmt.setString(3, groupId)

pstmt.setString(4, o.untilOffset.toString)

pstmt.executeUpdate()

}

if (pstmt != null) pstmt.close()

if (connection != null) connection.close()

})

ssc.start()

ssc.awaitTermination()

}

}
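The `replace into` statement only works as an upsert if the table has a primary key, and the commented-out code suggests it is (topic, partitionId, groupId). Under that assumption, the sketch below models the table as a plain Scala map to show the effect; `OffsetRow`, `replaceInto` and `resumeOffsets` are hypothetical names, and the real `OffsetManager` helper is not shown in this document.

```scala
object OffsetTableSketch {
  // Hypothetical row layout, matching the four values written per offset range.
  final case class OffsetRow(topic: String, partition: Int, groupId: String, untilOffset: Long)

  type Table = Map[(String, Int, String), Long]

  // REPLACE INTO keeps one row per primary key; modelled here as a map upsert.
  def replaceInto(table: Table, row: OffsetRow): Table =
    table.updated((row.topic, row.partition, row.groupId), row.untilOffset)

  // Offsets to resume from for one consumer group, keyed by (topic, partition).
  def resumeOffsets(table: Table, groupId: String): Map[(String, Int), Long] =
    table.collect { case ((t, p, g), off) if g == groupId => (t, p) -> off }

  def main(args: Array[String]): Unit = {
    val rows = Seq(
      OffsetRow("test001", 0, "laoyang", 100L),
      OffsetRow("test001", 0, "laoyang", 250L), // same key: replaces the first row
      OffsetRow("test001", 1, "other", 7L)
    )
    val table = rows.foldLeft(Map.empty: Table)(replaceInto)
    println(resumeOffsets(table, "laoyang"))
  }
}
```

In the actual job the resulting map would need keys of type `TopicPartition` so that it can be passed to `ConsumerStrategies.Assign`; plain tuples are used here only to keep the sketch free of Kafka dependencies.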

3.6.3 Managing offsets yourself in Redis

package spark.streaming.kafka

import org.apache.kafka.common.TopicPartition

import org.apache.kafka.common.serialization.StringDeserializer

import org.apache.spark.SparkConf

import org.apache.spark.streaming.kafka010.{ConsumerStrategies, HasOffsetRanges, KafkaUtils, LocationStrategies}

import org.apache.spark.streaming.{Seconds, StreamingContext}

import spark.streaming.utils.{JPools, OffsetManager}

/**

* A word count backed by Redis

* The offsets are written to Redis manually

*/

object StreamingKafka_Redis {

def main(args: Array[String]): Unit = {

val sparkConf = new SparkConf()

.setMaster("local[*]")

.setAppName(s"${this.getClass.getSimpleName}")

.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

.set("spark.streaming.kafka.maxRatePerPartition", "1000") // 3 partition

.set("spark.streaming.stopGracefullyOnShutdown", "true") // stop gracefully: finish the batches in flight before shutting down

val ssc = new StreamingContext(sparkConf, Seconds(2)) // batchRecord = 2 * 3 * 1000 = 6000

// Kafka parameters

val groupId = "cs001"

val kafkaParams = Map[String, Object](

"bootstrap.servers" -> "kk-01:9092,kk-02:9092,kk-03:9092",

"key.deserializer" -> classOf[StringDeserializer],

"value.deserializer" -> classOf[StringDeserializer],

"group.id" -> groupId,

"auto.offset.reset" -> "earliest",

"enable.auto.commit" -> (false: java.lang.Boolean)

)

val topics = Array("test001")

// Decide whether this is the first start: look up the offsets stored earlier in Redis and return them as a map

val dbOffset = OffsetManager(topics, groupId)

val stream = if (dbOffset.size == 0) {

// Read the Kafka data

KafkaUtils.createDirectStream(ssc,

LocationStrategies.PreferConsistent,

ConsumerStrategies.Subscribe[String, String](topics, kafkaParams)

)

} else { // not the first start: tell KafkaUtils which offset to resume from for each topic partition

println("---------------------------------")

KafkaUtils.createDirectStream(ssc,

LocationStrategies.PreferConsistent,

ConsumerStrategies.Assign[String, String](dbOffset.keySet, kafkaParams, dbOffset)

)

}

stream.foreachRDD(rdd => {

// Read the offsets

val offsetRanges = rdd.asInstanceOf[HasOffsetRanges].offsetRanges

// Compute the word count

rdd.flatMap(cr => cr.value().split(" ")).map((_, 1)).reduceByKey(_ + _).foreach(println)

// Store the offsets

val jedis = JPools.getJedis

offsetRanges.foreach(or => {

jedis.hset(or.topic+"-"+groupId, or.partition.toString, or.untilOffset.toString)

})

jedis.close()

})

ssc.start()

ssc.awaitTermination()

}

}
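The comment `batchRecord = 2 * 3 * 1000 = 6000` in the listing is the upper bound on records per batch that follows from `spark.streaming.kafka.maxRatePerPartition`, which limits the records read per second from each partition. A minimal sketch of that arithmetic, with `maxRecordsPerBatch` as a hypothetical helper name:

```scala
object BatchCapSketch {
  // Upper bound on the records in one batch of a direct Kafka stream:
  // rate (records per second per partition) * partitions * batch length in seconds.
  def maxRecordsPerBatch(maxRatePerPartition: Long, partitions: Int, batchSeconds: Long): Long =
    maxRatePerPartition * partitions * batchSeconds

  def main(args: Array[String]): Unit = {
    // Values from the listing: rate 1000, 3 partitions, 2-second batches.
    println(maxRecordsPerBatch(1000L, 3, 2L))
  }
}
```

This is a ceiling, not a target: a batch holds fewer records whenever the topic has less data available.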

3.7 Window Operations

package com.zgcbank.sparkstreaming

import org.apache.spark.SparkConf

import org.apache.spark.streaming.{Milliseconds, Seconds, StreamingContext}

object WindowOpts {

def main(args: Array[String]) {

val conf = new SparkConf().setAppName("WindowOpts").setMaster("local[2]")

val ssc = new StreamingContext(conf, Milliseconds(5000))

val lines = ssc.socketTextStream("172.16.0.11", 9999)

val pairs = lines.flatMap(_.split(" ")).map((_, 1))

val windowedWordCounts = pairs.reduceByKeyAndWindow((a:Int,b:Int) => (a + b), Seconds(15), Seconds(10))

//Map((hello, 5), (jerry, 2), (kitty, 3))

windowedWordCounts.print()

// val a = windowedWordCounts.map(_._2).reduce(_+_)

// a.foreachRDD(rdd => {

// println(rdd.take(0))

// })

// a.print()

// //windowedWordCounts.map(t => (t._1, t._2.toDouble / a.toD))

// windowedWordCounts.print()

// //result.print()

ssc.start()

ssc.awaitTermination()

}

}
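The listing uses a 5-second batch interval, a 15-second window and a 10-second slide, so each result covers the last three batches and a new result appears after every two batches. The plain-Scala sketch below reproduces that bookkeeping for the counts of a single key; `windowed` is a hypothetical helper, and the exact time at which Spark emits its first window may differ from this simplified model.

```scala
object WindowSketch {
  // perBatch(i) is the count of one key in the i-th batch, which ends at (i + 1) * batchSec.
  // Returns pairs of (window end time in seconds, sum over the batches inside the window).
  def windowed(perBatch: Seq[Int], batchSec: Int, windowSec: Int, slideSec: Int): Seq[(Int, Int)] = {
    require(windowSec % batchSec == 0 && slideSec % batchSec == 0,
      "window length and slide interval must be multiples of the batch interval")
    val batchesPerWindow = windowSec / batchSec
    val batchesPerSlide = slideSec / batchSec
    (batchesPerSlide to perBatch.length by batchesPerSlide).map { end =>
      val start = math.max(0, end - batchesPerWindow)
      (end * batchSec, perBatch.slice(start, end).sum)
    }
  }

  def main(args: Array[String]): Unit = {
    // Six batches of 5 seconds each, with 1, 2, ... 6 occurrences of the key.
    windowed(Seq(1, 2, 3, 4, 5, 6), batchSec = 5, windowSec = 15, slideSec = 10).foreach(println)
  }
}
```

Because the window is longer than the slide, consecutive windows overlap: the second batch contributes to both the window ending at 10 seconds and the one ending at 20 seconds.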