Using Spark Streaming Together with Spark SQL

Spark Streaming can be combined with Spark Core and Spark SQL in the same application, and this integration is one of its greatest strengths.

Example: reporting, in real time, the search terms that have been searched more than 3 times

    package StreamingDemo

    import org.apache.log4j.{Level, Logger}
    import org.apache.spark.SparkConf
    import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}
    import org.apache.spark.sql.{Row, SparkSession}
    import org.apache.spark.streaming.{Seconds, StreamingContext}

    /**
     * Combining Spark Streaming with Spark SQL.
     * Requirement: report, in real time, the search terms searched more than 3 times.
     */
    object StreamingAndSQLDemo {
      def main(args: Array[String]): Unit = {
        Logger.getLogger("org").setLevel(Level.WARN)
        System.setProperty("HADOOP_USER_NAME", "Setsuna")

        val conf = new SparkConf()
          .setAppName(this.getClass.getSimpleName)
          .setMaster("local[2]")
        // One micro-batch every 2 seconds
        val ssc = new StreamingContext(conf, Seconds(2))

        // Enable checkpointing (updateStateByKey requires it)
        ssc.checkpoint("hdfs://Hadoop01:9000/checkpoint")

        // Split lines into words, count them, and keep a running total per word
        val resultDStream = ssc
          .socketTextStream("Hadoop01", 6666)
          .flatMap(_.split(" "))
          .map((_, 1))
          .updateStateByKey((values: Seq[Int], state: Option[Int]) => {
            // Start from the previous total (0 for a new word) and add this batch's occurrences
            var count = state.getOrElse(0)
            for (value <- values) {
              count += value
            }
            Option(count)
          })

        // Store the word counts of each batch in a table
        resultDStream.foreachRDD(rdd => {
          // Get the SparkSession (created on the first batch, reused afterwards)
          val sparkSession = SparkSession.builder().config(rdd.sparkContext.getConf).getOrCreate()
          // Build an RDD of Row objects
          val rowRDD = rdd.map(x => Row(x._1, x._2))
          // Define the schema
          val schema = StructType(List(
            StructField("word", StringType, true),
            StructField("count", IntegerType, true)
          ))
          // Create the DataFrame and register it as a temporary view
          sparkSession.createDataFrame(rowRDD, schema)
            .createOrReplaceTempView("wordcount")
          // Run the query and print the result to the console
          sparkSession.sql("select * from wordcount where count>3").show()
        })

        ssc.start()
        ssc.awaitTermination()
      }
    }
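
The same per-batch step can also be written with a case class and the DataFrame API instead of an explicit `StructType` and a SQL string. The following is only a sketch of that alternative, not part of the original example: the names `WordCount`, `WordCountFrames`, and `frequentWords` are hypothetical, and it assumes the same Spark SQL dependency as the program above.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{DataFrame, SparkSession}

// Hypothetical record type; its field names become the DataFrame column names.
case class WordCount(word: String, count: Int)

object WordCountFrames {
  // Converts one batch of (word, total) pairs into a DataFrame and keeps
  // only the words whose total is greater than `threshold`.
  def frequentWords(rdd: RDD[(String, Int)], threshold: Int): DataFrame = {
    // Reuse the session if one already exists for this application.
    val spark = SparkSession.builder().config(rdd.sparkContext.getConf).getOrCreate()
    import spark.implicits._ // enables .toDF() and the $"column" syntax

    rdd.map { case (word, count) => WordCount(word, count) }
      .toDF()
      .filter($"count" > threshold)
  }
}
```

Inside `foreachRDD` this would be called as `WordCountFrames.frequentWords(rdd, 3).show()`. The schema is then derived from the case class, so the column names and types cannot drift away from the data.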

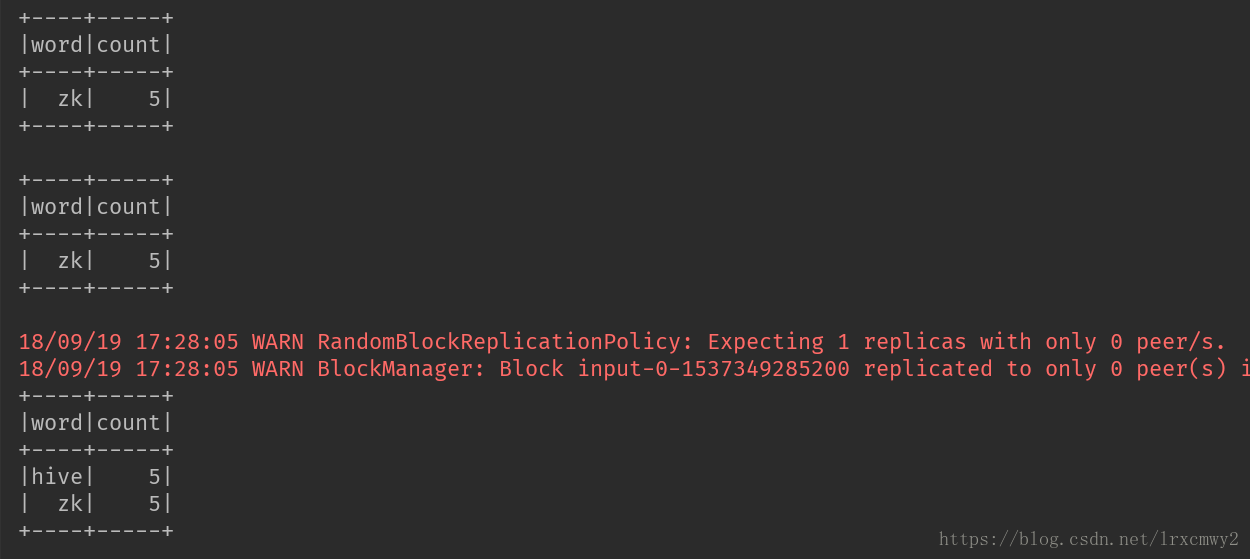

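Testing

Start nc on Hadoop01, listening on port 6666 (for example with `nc -lk 6666`), and type space-separated search terms.

Every 2-second batch, the console shows the rows of `wordcount` whose count is greater than 3.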
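
To predict what the console should show for a given input, the state update can be replayed in plain Scala without a cluster. This is a minimal sketch under assumptions: `StateReplay`, `replay`, `frequent`, and the sample batches are hypothetical names and data, and `updateCount` is a condensed form of the function passed to `updateStateByKey` above.

```scala
object StateReplay {
  // Same logic as the update function in the streaming job:
  // previous total (0 for a new word) plus this batch's occurrences.
  def updateCount(values: Seq[Int], state: Option[Int]): Option[Int] =
    Some(state.getOrElse(0) + values.sum)

  // Replays a sequence of batches (each a list of input lines) and
  // returns the running totals after the last batch.
  def replay(batches: Seq[Seq[String]]): Map[String, Int] =
    batches.foldLeft(Map.empty[String, Int]) { (state, lines) =>
      // Occurrences of each word in this batch, as a list of 1s per word.
      val grouped: Map[String, Seq[Int]] =
        lines.flatMap(_.split(" ")).map((_, 1)).groupBy(_._1)
          .map { case (word, pairs) => word -> pairs.map(_._2) }
      // Like updateStateByKey, update every key that has state or new values.
      val keys = state.keySet ++ grouped.keySet
      keys.flatMap { k =>
        updateCount(grouped.getOrElse(k, Seq.empty), state.get(k)).map(k -> _)
      }.toMap
    }

  // Mirrors: select * from wordcount where count > 3
  def frequent(totals: Map[String, Int], threshold: Int = 3): Map[String, Int] =
    totals.filter { case (_, count) => count > threshold }
}
```

For example, if the first batch contains the line `spark spark hadoop` and the second contains `spark spark flink`, `StateReplay.replay` yields totals of 4 for `spark` and 1 each for `hadoop` and `flink`, so only `spark` passes the filter.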