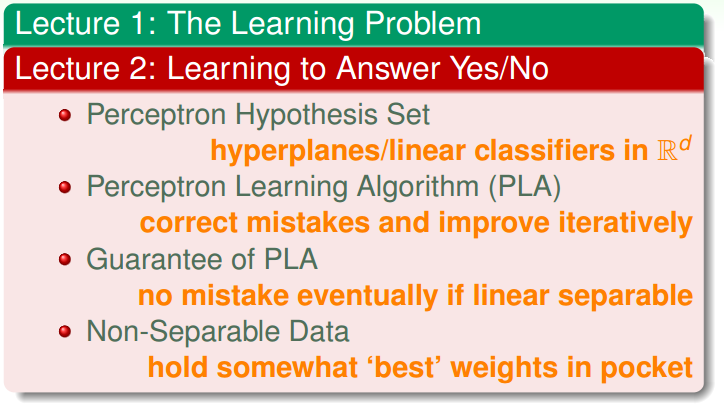

Lecture 2: Learning to Answer Yes/No

Perceptron

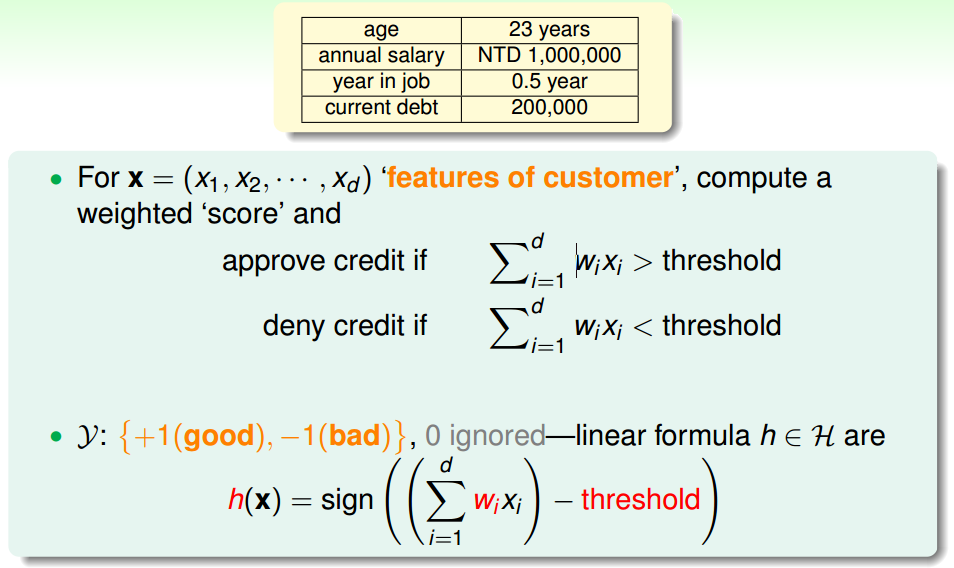

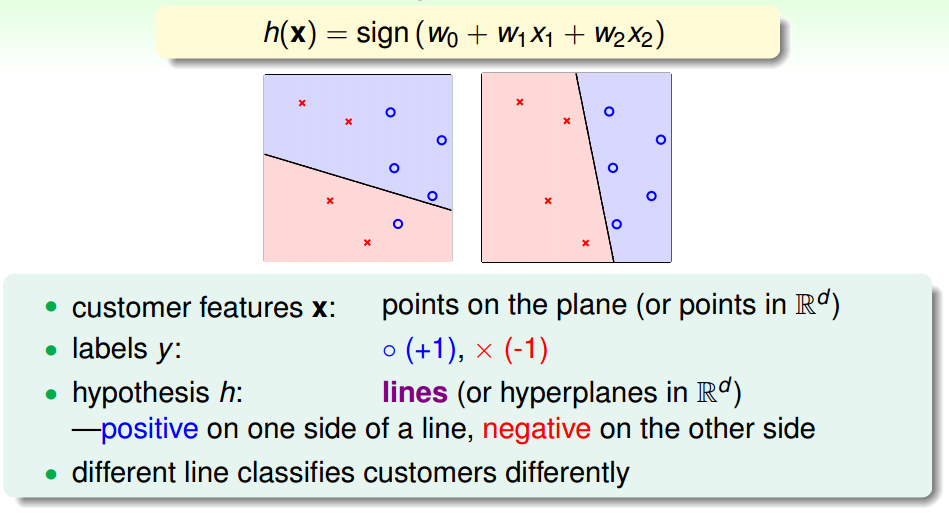

A Simple Hypothesis Set: the ‘Perceptron’

The perceptron is analogous to a neural network; the threshold is analogous to the 60-point passing mark on an exam.
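A minimal sketch of the threshold idea, using the exam analogy: a weighted score is compared against a pass mark. The function name `perceptron_threshold`, the weights, and the scores are hypothetical and chosen only for illustration.

```python
def perceptron_threshold(x, w, threshold):
    """Return +1 if the weighted score exceeds the threshold, else -1.

    A score exactly equal to the threshold is treated as -1 here;
    that tie-breaking choice is an assumption of this sketch.
    """
    score = sum(w_i * x_i for w_i, x_i in zip(w, x))
    return 1 if score > threshold else -1


# Hypothetical exam with two parts weighted 0.4 and 0.6, pass mark 60.
weights = [0.4, 0.6]
passed = perceptron_threshold([50, 80], weights, 60)   # weighted score 68
failed = perceptron_threshold([70, 40], weights, 60)   # weighted score 52
print(passed, failed)
```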

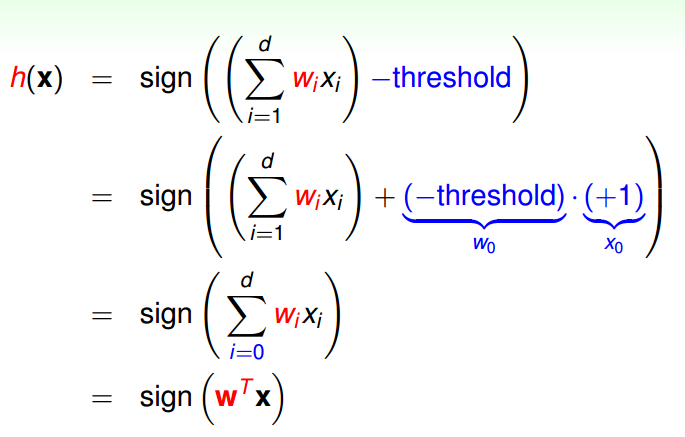

Vector Form of Perceptron Hypothesis

each ‘tall’
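As a worked sketch of how the threshold is absorbed into the vector form (assuming the usual convention $x_0 = 1$ and $w_0 = -\text{threshold}$, with $d$ input features):

$$
\begin{aligned}
h(\mathbf{x}) &= \operatorname{sign}\Big(\sum_{i=1}^{d} w_i x_i - \text{threshold}\Big) \\
&= \operatorname{sign}\Big(\sum_{i=1}^{d} w_i x_i + \underbrace{(-\text{threshold})}_{w_0} \cdot \underbrace{(+1)}_{x_0}\Big) \\
&= \operatorname{sign}\Big(\sum_{i=0}^{d} w_i x_i\Big) = \operatorname{sign}(\mathbf{w}^T \mathbf{x})
\end{aligned}
$$

Here $\mathbf{w}$ and $\mathbf{x}$ are column vectors with $d + 1$ entries each.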

Perceptrons in $\mathbb{R}^2$

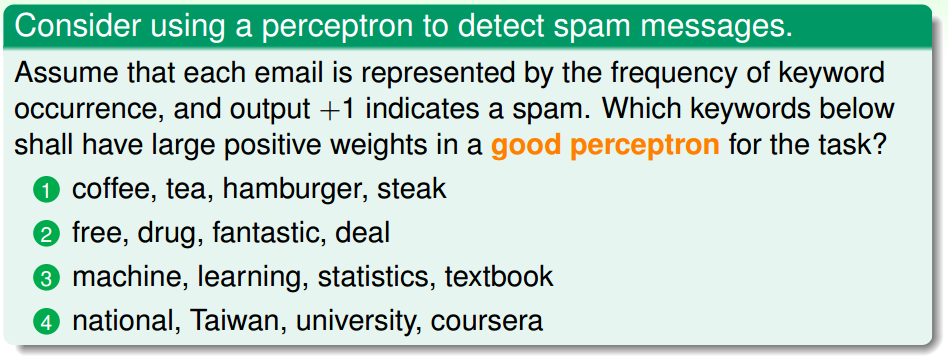

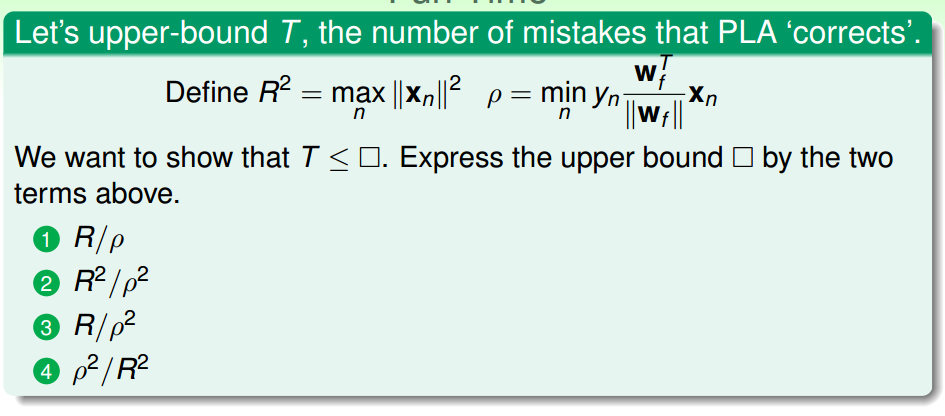

Fun time

Select

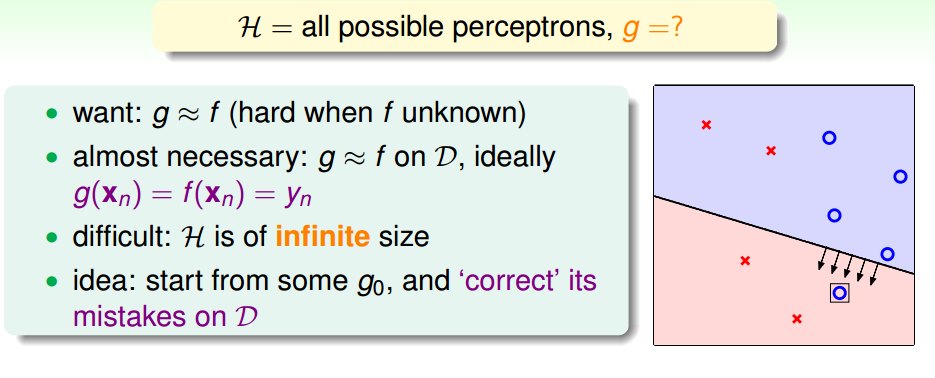

g

from

H

Enumerating every hypothesis in $\mathcal{H}$ is impractical, so improve the weights iteratively instead.

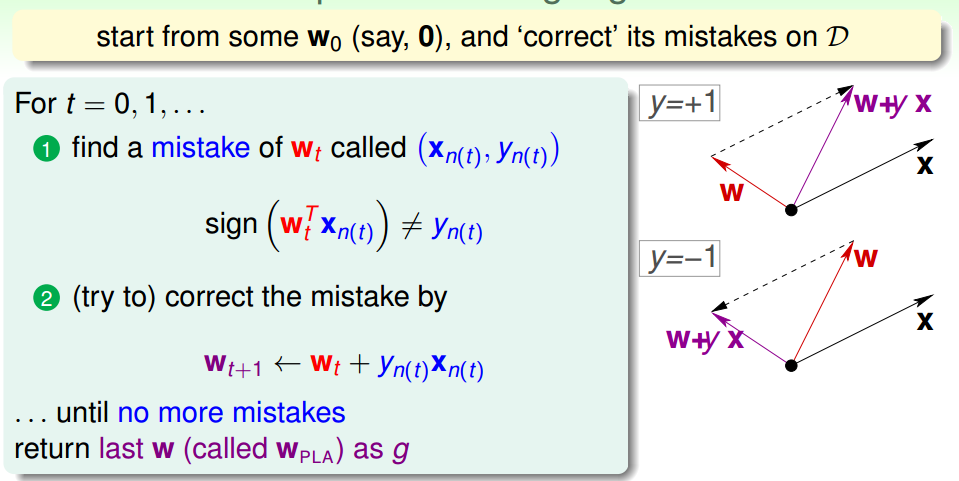

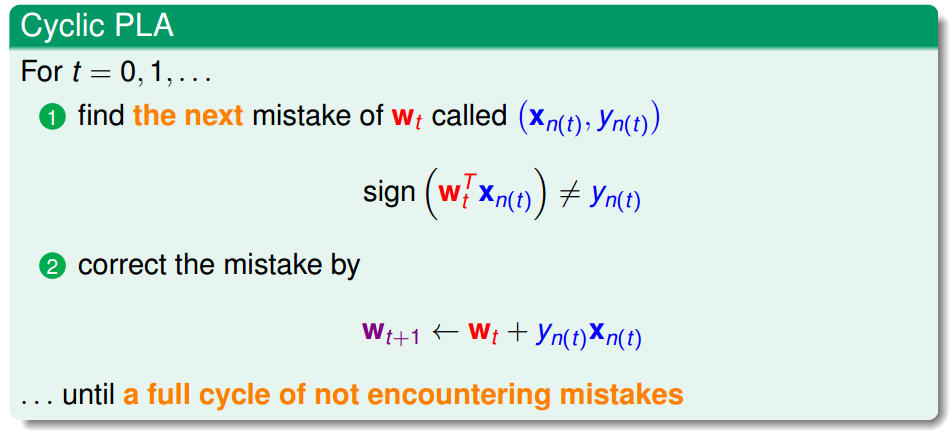

Perceptron Learning Algorithm

A fault confessed is half redressed.

Because:

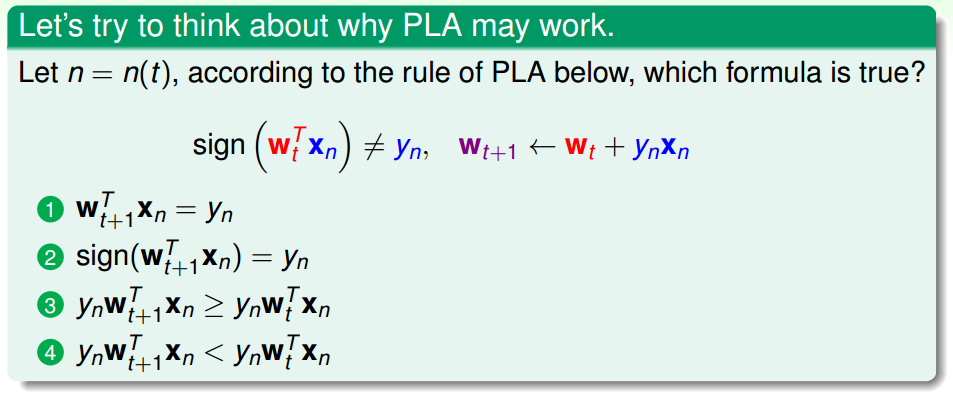
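The Perceptron Learning Algorithm can be sketched in a few lines. This is a cyclic variant that assumes the standard correction $\mathbf{w}_{t+1} = \mathbf{w}_t + y_n \mathbf{x}_n$ on each mistake and a start from $\mathbf{w}_0 = \mathbf{0}$; the function name `pla`, the `max_updates` safety cap, and the toy data are assumptions made for illustration.

```python
import numpy as np


def pla(X, y, max_updates=10_000):
    """Cyclic PLA sketch.

    X: (N, d) inputs without the constant coordinate.
    y: (N,) labels in {-1, +1}.
    Returns (w, updates); w has d + 1 entries and w[0] plays the role of -threshold.
    """
    X = np.hstack([np.ones((len(X), 1)), X])   # prepend x_0 = 1
    w = np.zeros(X.shape[1])                   # start from w_0 = 0
    updates = 0
    while updates < max_updates:
        mistake_found = False
        for x_n, y_n in zip(X, y):
            if np.sign(w @ x_n) != y_n:        # sign(0) = 0 counts as a mistake
                w = w + y_n * x_n              # correct the mistake
                updates += 1
                mistake_found = True
        if not mistake_found:                  # a full pass without mistakes: halt
            break
    return w, updates


# Hypothetical, linearly separable toy data: label is +1 when x1 + x2 > 3.
X = np.array([[1.0, 1.0], [2.0, 0.5], [0.5, 1.5],
              [3.0, 2.0], [2.5, 2.5], [1.0, 4.0]])
y = np.array([-1, -1, -1, 1, 1, 1])
w, updates = pla(X, y)
predictions = np.sign(np.hstack([np.ones((len(X), 1)), X]) @ w)
print(w, updates, (predictions == y).all())
```

The cap `max_updates` is there only so the sketch cannot loop forever on data that is not linearly separable.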

Fun time

What does this imply? Why is option ② incorrect?
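The question itself lives in the image, so the following is only a sketch of the standard fact about the PLA update that it most likely concerns. Assuming the usual correction $\mathbf{w}_{t+1} = \mathbf{w}_t + y_n \mathbf{x}_n$ on a misclassified example $(\mathbf{x}_n, y_n)$ with $y_n \in \{-1, +1\}$:

$$
\begin{aligned}
y_n \mathbf{w}_{t+1}^T \mathbf{x}_n
&= y_n (\mathbf{w}_t + y_n \mathbf{x}_n)^T \mathbf{x}_n \\
&= y_n \mathbf{w}_t^T \mathbf{x}_n + y_n^2 \|\mathbf{x}_n\|^2 \\
&= y_n \mathbf{w}_t^T \mathbf{x}_n + \|\mathbf{x}_n\|^2
\;\ge\; y_n \mathbf{w}_t^T \mathbf{x}_n
\end{aligned}
$$

The update moves the score on $\mathbf{x}_n$ in the right direction, but it does not guarantee that $\mathbf{x}_n$ is classified correctly after a single step.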

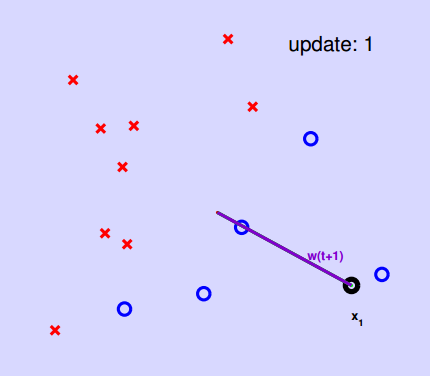

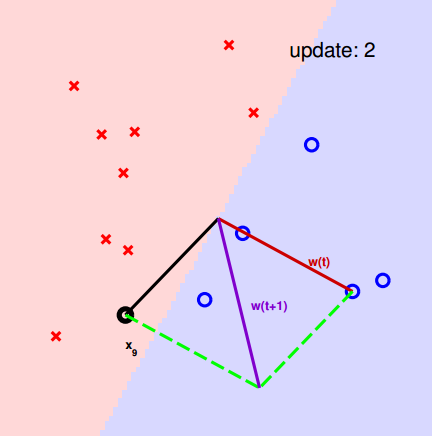

Implementation

start from some initial weights $\mathbf{w}_0$

(note: made $x_i \gg x_0 = 1$ for visual purpose) Why?

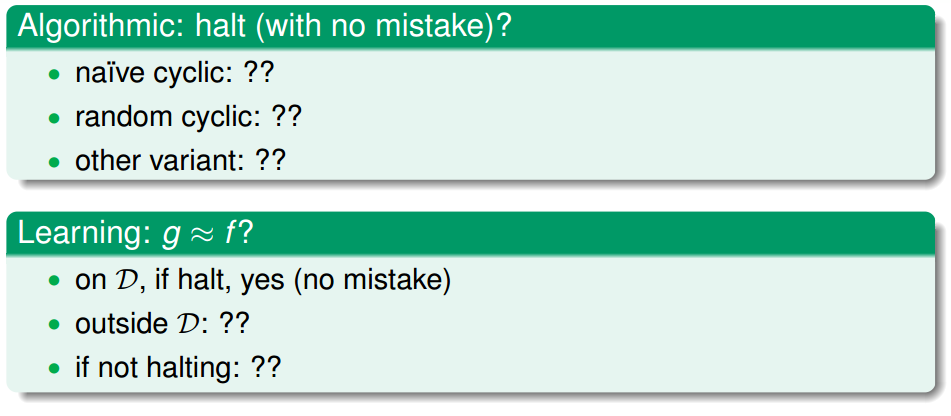

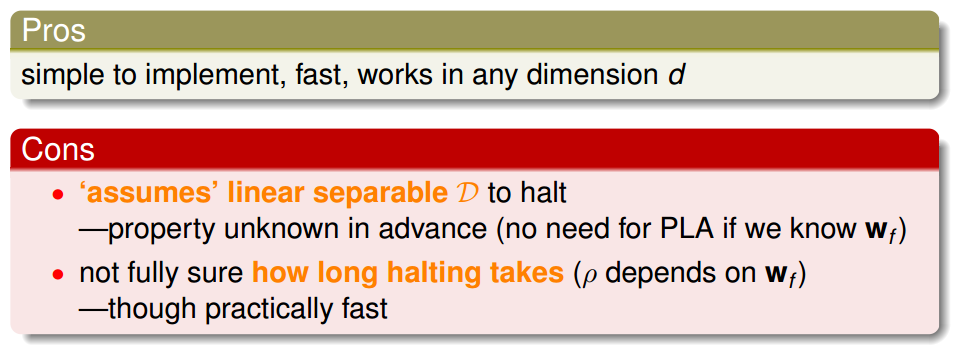

Issues of PLA

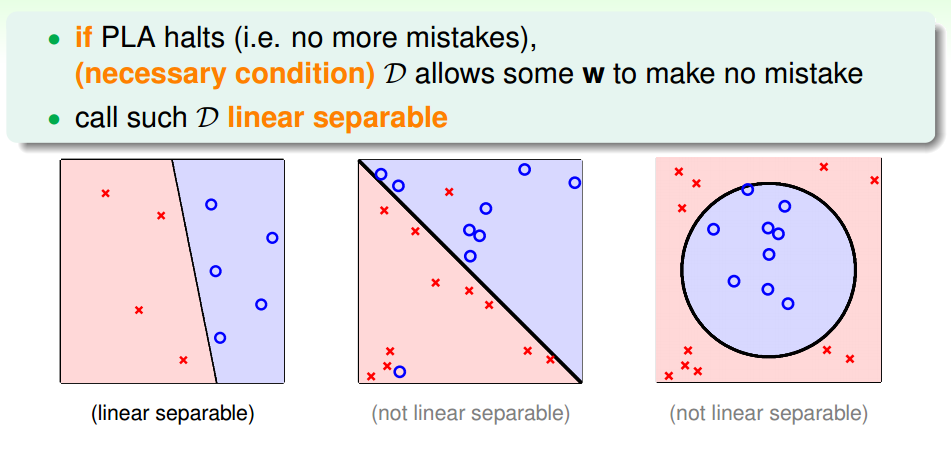

Linear Separability

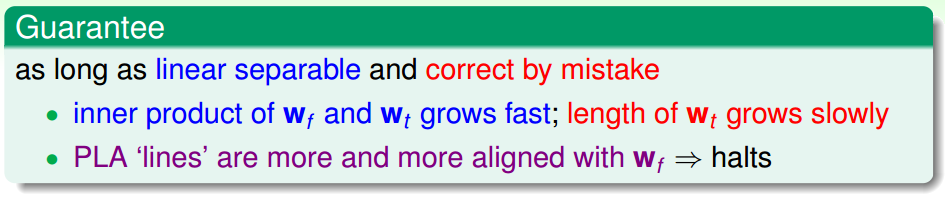

Assume linearly separable data: PLA halts! Because:

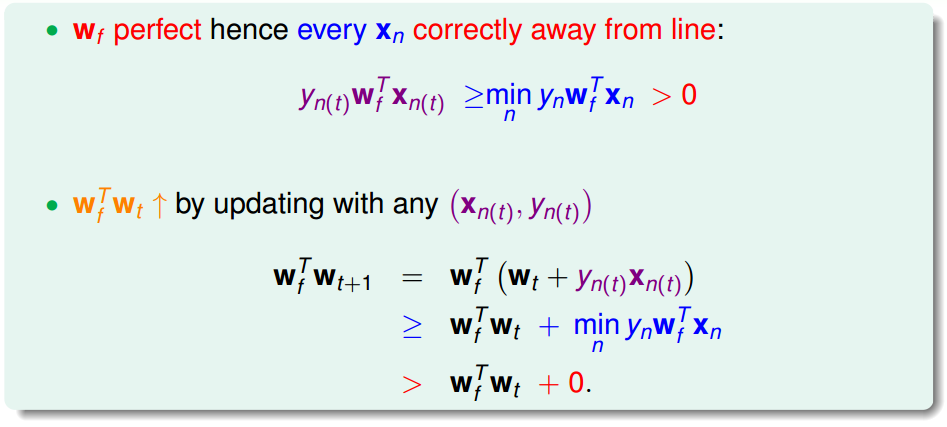

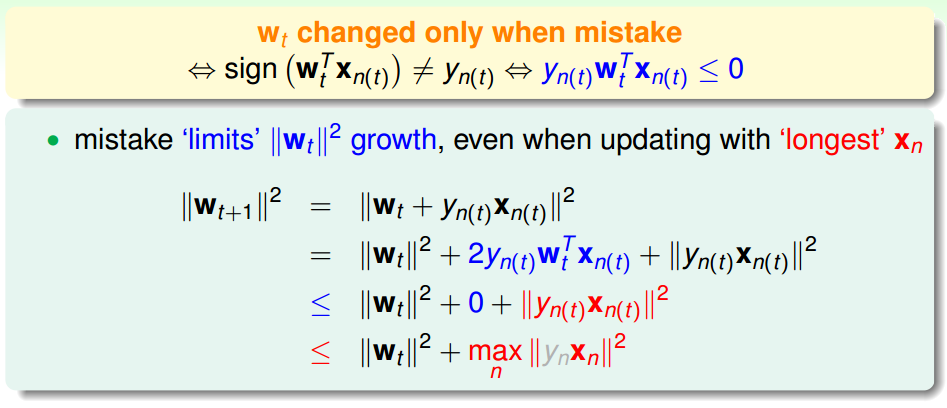

PLA Fact: $\mathbf{w}_t$ Gets More Aligned with $\mathbf{w}_f$

$\mathbf{w}_t$ appears more aligned with $\mathbf{w}_f$ after each update. Really?
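A sketch of the alignment argument, assuming $\mathbf{w}_f$ separates the data perfectly (so $y_n \mathbf{w}_f^T \mathbf{x}_n > 0$ for every $n$) and that the update at step $t$ uses a misclassified example $(\mathbf{x}_{n(t)}, y_{n(t)})$:

$$
\begin{aligned}
\mathbf{w}_f^T \mathbf{w}_{t+1}
&= \mathbf{w}_f^T \big(\mathbf{w}_t + y_{n(t)} \mathbf{x}_{n(t)}\big) \\
&\ge \mathbf{w}_f^T \mathbf{w}_t + \min_n y_n \mathbf{w}_f^T \mathbf{x}_n \\
&> \mathbf{w}_f^T \mathbf{w}_t
\end{aligned}
$$

A growing inner product alone does not prove alignment, since it could also come from $\|\mathbf{w}_t\|$ growing; this is why the length of $\mathbf{w}_t$ has to be controlled as well.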

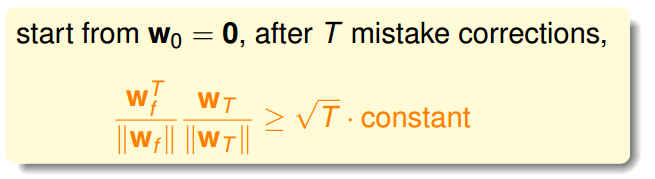

PLA Fact:

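Note on the derivation: the result is only an upper bound, and it cannot be computed exactly because $\mathbf{w}_f$ is unknown.

Even if $\mathbf{w}_0 \neq \mathbf{0}$, an upper bound can still be proven.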
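The bound on the number of PLA updates can be checked numerically on synthetic data, where a separating $\mathbf{w}_f$ is known by construction. The sketch below assumes the standard form of the bound, $T \le R^2 / \rho^2$ with $R^2 = \max_n \|\mathbf{x}_n\|^2$ and $\rho = \min_n y_n \mathbf{w}_f^T \mathbf{x}_n / \|\mathbf{w}_f\|$, and a start from $\mathbf{w}_0 = \mathbf{0}$; the chosen `w_f`, sample size, and margin cutoff are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical target w_f, known here only because the data is synthetic.
w_f = np.array([-0.2, 1.0, -1.0])
X = np.hstack([np.ones((200, 1)), rng.uniform(-1, 1, size=(200, 2))])
scores = X @ w_f
keep = np.abs(scores) > 0.1            # drop points too close to the boundary
X, y = X[keep], np.sign(scores[keep])

# Run PLA from w_0 = 0 and count the number of updates T.
w, T = np.zeros(3), 0
while True:
    wrong = np.flatnonzero(np.sign(X @ w) != y)
    if wrong.size == 0:                # no mistakes left: PLA halts
        break
    n = wrong[0]                       # correct the first mistake found
    w, T = w + y[n] * X[n], T + 1

R2 = np.max(np.sum(X ** 2, axis=1))                  # R^2 = max_n ||x_n||^2
rho = np.min(y * (X @ w_f)) / np.linalg.norm(w_f)    # margin of w_f on the data
bound = R2 / rho ** 2
print(T, bound, T <= bound)
```

In practice $\mathbf{w}_f$ is unknown, so `bound` cannot be evaluated on real data; the sketch only illustrates that the guarantee holds when a separator is available.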

Properties

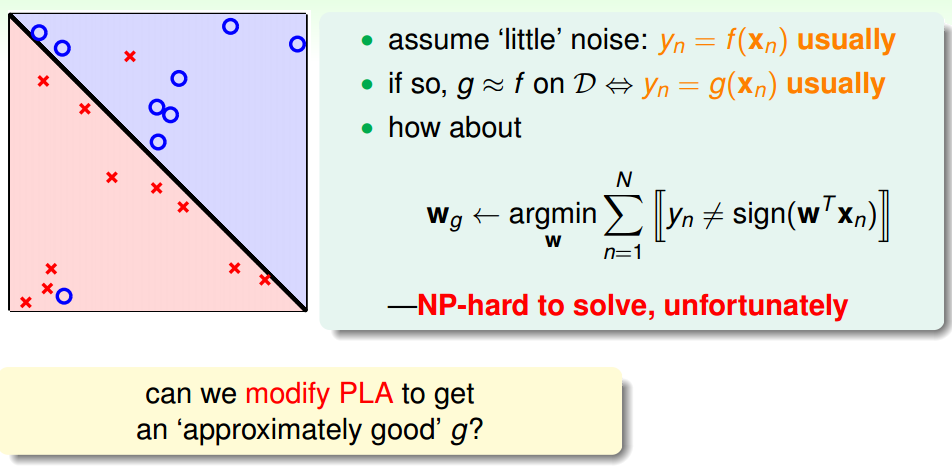

Learning with Noisy Data

Finding the weights with the fewest mistakes on noisy data is an NP-hard problem.

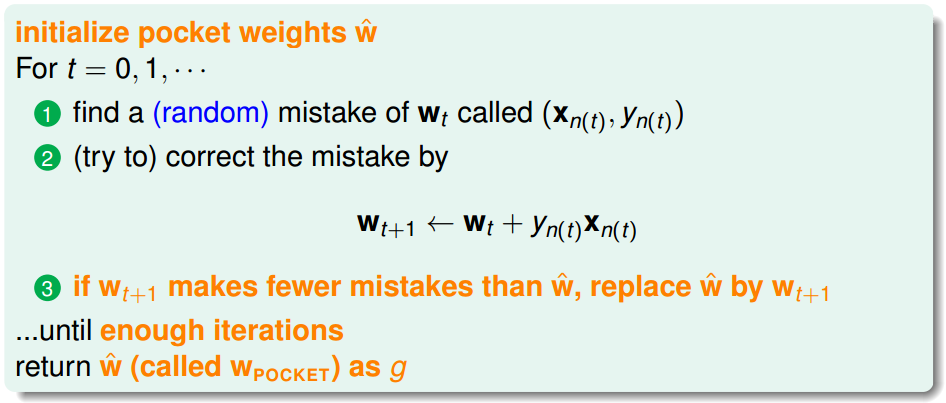

Pocket Algorithm

modify PLA algorithm (black lines) by keeping best weights in pocket
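A minimal sketch of the pocket idea: run PLA-style corrections on randomly chosen mistakes and keep the best weights seen so far. The function names, the `n_updates` budget, the random seed, and the toy data (with one deliberately mislabeled point) are assumptions made for illustration.

```python
import numpy as np


def count_mistakes(w, X, y):
    """Number of examples where sign(w^T x) differs from the label."""
    return int(np.sum(np.sign(X @ w) != y))


def pocket(X, y, n_updates=200, seed=0):
    """Pocket sketch: PLA updates on random mistakes, keeping the best w seen."""
    rng = np.random.default_rng(seed)
    X = np.hstack([np.ones((len(X), 1)), X])     # prepend x_0 = 1
    w = np.zeros(X.shape[1])
    best_w, best_err = w.copy(), count_mistakes(w, X, y)
    for _ in range(n_updates):
        wrong = np.flatnonzero(np.sign(X @ w) != y)
        if wrong.size == 0:                      # no mistakes left
            break
        n = rng.choice(wrong)                    # pick a random mistake
        w = w + y[n] * X[n]                      # usual PLA correction
        err = count_mistakes(w, X, y)
        if err < best_err:                       # put better weights in the pocket
            best_w, best_err = w.copy(), err
    return best_w, best_err


# Hypothetical toy data; the last point is a noisy label inside the +1 region,
# which makes the set not linearly separable.
X = np.array([[1.0, 1.0], [2.0, 0.5], [0.5, 1.5],
              [3.0, 2.0], [2.5, 2.5], [1.0, 4.0], [2.8, 2.4]])
y = np.array([-1, -1, -1, 1, 1, 1, -1])
best_w, best_err = pocket(X, y)
print(best_w, best_err)
```

Each candidate is evaluated on the whole data set, which is why pocket is slower per update than plain PLA.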