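Lately I have been wanting to write things down and re-implement the classic networks myself. The plan is to work through them in order, from the classic classification networks to object detection and then segmentation. This post starts with the simplest one, LeNet.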

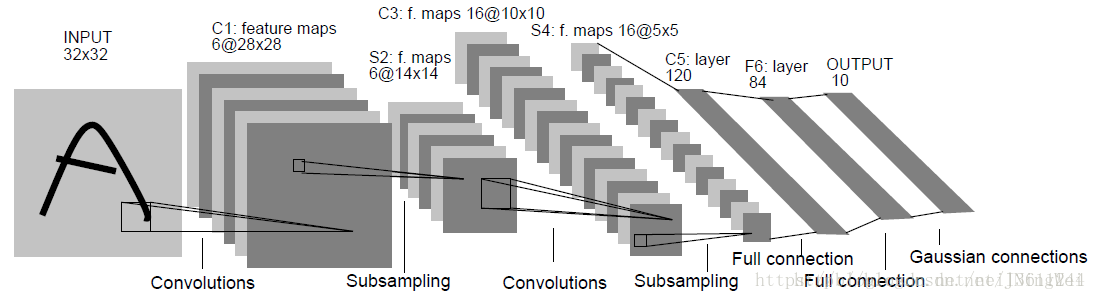

先上一张经典的LeNet模型结果图:

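The network consists of 2 convolutional layers, 2 pooling layers, and 2 fully connected layers, followed by a softmax classifier. The kernel size and the number of kernels in each layer can be read directly from the code, so I won't go into them here.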
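To make the layer sequence concrete, here is a small shape trace in plain Python. It is only a sketch: it assumes the 256x256x3 input and the 32/64 filter counts used in the code later in this post, and it relies on slim's defaults (`slim.conv2d` uses stride 1 with 'SAME' padding, `slim.max_pool2d` uses 'VALID' padding). The helper names are made up for illustration.

```python
import math


def conv_same(size, stride=1):
    # 'SAME' padding: output size = ceil(input size / stride)
    return math.ceil(size / stride)


def pool_valid(size, kernel=2, stride=2):
    # 'VALID' padding: output size = floor((input - kernel) / stride) + 1
    return (size - kernel) // stride + 1


def trace_shapes(height=256, width=256, in_channels=3, filters=(32, 64)):
    """Return (layer name, output shape) pairs for the conv/pool stack."""
    shapes = [("input", (height, width, in_channels))]
    h, w, channels = height, width, in_channels
    for i, channels in enumerate(filters, start=1):
        h, w = conv_same(h), conv_same(w)
        shapes.append(("conv%d" % i, (h, w, channels)))
        h, w = pool_valid(h), pool_valid(w)
        shapes.append(("pool%d" % i, (h, w, channels)))
    shapes.append(("flatten", (h * w * channels,)))
    return shapes


for name, shape in trace_shapes():
    print(name, shape)
```

Under these assumptions only the two poolings shrink the feature map (256 to 128 to 64), so the vector handed to the first fully connected layer has 64 * 64 * 64 = 262144 entries.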

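Network characteristics: compared with the earlier MLP networks, LeNet uses convolution for feature extraction, pooling for downsampling, and activation functions for the nonlinear transform. The sparse connectivity and weight sharing of convolutional layers greatly reduce the number of parameters. I just realized that an earlier post of mine on simple CNN classification already used LeNet for MNIST recognition. So in this post we rewrite it with slim, TensorFlow's high-level library, and do binary man/woman classification; the image size differs slightly from the diagram above. Without further ado, here is the code. The example is split into three files: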

read_data.py: reads and organizes the data, including random shuffling and splitting into training and test sets.

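    import glob
    import os

    import numpy as np
    from skimage import io, transform

    # Class folder -> label. get_data_list assigns labels by sorted folder name,
    # which matches this mapping when the dataset holds 'man/' and 'woman/'.
    label_map = {'man': 0, 'woman': 1}


    def get_data_list(path):
        """Collect .jpg paths and integer labels, one label per sub-folder of path."""
        category = [os.path.join(path, x) for x in os.listdir(path)
                    if os.path.isdir(os.path.join(path, x))]
        images = []
        labels = []
        category.sort()
        for idx, folder in enumerate(category):
            for img in glob.glob(os.path.join(folder, '*.jpg')):
                images.append(img)
                labels.append(idx)
        # Builtin str instead of np.str, which recent NumPy releases no longer provide.
        image_list = np.asarray(images, str)
        label_list = np.asarray(labels, np.int32)
        return image_list, label_list


    def shuffle_data(images, labels):
        """Shuffle images and labels with the same random permutation."""
        num_example = len(images)
        arr = np.arange(num_example)
        np.random.shuffle(arr)
        images = images[arr]
        labels = labels[arr]
        return images, labels


    def divide_dataset(images, labels, ratio):
        """Split into a training part (first `ratio` of the data) and a validation part."""
        num_example = images.shape[0]
        # Builtin int instead of np.int, which recent NumPy releases no longer provide.
        split = int(num_example * ratio)
        train_images = images[:split]
        train_labels = labels[:split]
        val_images = images[split:]
        val_labels = labels[split:]
        return train_images, train_labels, val_images, val_labels


    def mini_batches(inputs=None, targets=None, batch_size=None, height=224, width=224):
        """Yield (images, labels) batches; a final incomplete batch is dropped."""
        assert len(inputs) == len(targets)
        indices = np.arange(len(inputs))
        for start_idx in range(0, len(inputs) - batch_size + 1, batch_size):
            excerpt = indices[start_idx:start_idx + batch_size]
            images = []
            for i in excerpt:
                # Load from disk and resize; transform.resize returns floats in [0, 1].
                img = io.imread(inputs[i])
                img = transform.resize(img, (height, width))
                images.append(img)
            images = np.asarray(images, np.float32)
            yield images, targets[excerpt]


    def one_hot(labels, num_classes):
        """Convert integer labels to a (n_sample, num_classes) one-hot matrix."""
        n_sample = len(labels)
        n_class = num_classes
        onehot_labels = np.zeros((n_sample, n_class))
        onehot_labels[np.arange(n_sample), labels] = 1
        return onehot_labels

LeNet.py: a simple five-layer LeNet, implemented directly with slim.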

    import tensorflow as tf
    import tensorflow.contrib.slim as slim


    def build_LeNet(inputs, num_classes):
        # block_1: 5x5 conv (slim defaults: stride 1, 'SAME' padding, ReLU) + 2x2 max pool
        with tf.variable_scope('conv_layer_1'):
            conv1 = slim.conv2d(inputs, num_outputs=32, kernel_size=[5, 5], scope='conv')
            pool1 = slim.max_pool2d(conv1, kernel_size=[2, 2], stride=2, scope='pool')
        # block_2
        with tf.variable_scope('conv_layer_2'):
            conv2 = slim.conv2d(pool1, num_outputs=64, kernel_size=[5, 5], scope='conv')
            pool2 = slim.max_pool2d(conv2, kernel_size=[2, 2], stride=2, scope='pool')
        # flatten the feature map to [batch, height * width * channels]
        with tf.variable_scope('flatten'):
            feature_shape = pool2.get_shape()
            flatten_shape = feature_shape[1].value * feature_shape[2].value * feature_shape[3].value
            pool2_flatten = tf.reshape(pool2, [-1, flatten_shape])
        with tf.variable_scope('fc_layer_1'):
            fc1 = slim.fully_connected(pool2_flatten, num_outputs=1024, scope='fc')
        with tf.variable_scope('fc_layer_2'):
            fc2 = slim.fully_connected(fc1, num_outputs=1024, scope='fc')
        with tf.variable_scope('output'):
            # No activation here: return raw logits. The loss in train.py
            # (softmax_cross_entropy_with_logits) applies softmax itself.
            logits = slim.fully_connected(fc2, num_outputs=num_classes, activation_fn=None, scope='fc')
        return logits
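`build_LeNet` returns a tensor named `logits`, and train.py passes it to `tf.nn.softmax_cross_entropy_with_logits`, which applies softmax internally and therefore expects unnormalized scores. The NumPy sketch below is not part of the original code. It uses hypothetical scores for a confident, correct two-class prediction to show how such a loss behaves when its input has already been through a softmax.

```python
import numpy as np


def softmax(z):
    # Subtract the row maximum for numerical stability.
    z = z - np.max(z, axis=-1, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=-1, keepdims=True)


def cross_entropy_with_logits(z, onehot):
    # Same idea as the TF op: softmax first, then cross entropy.
    return -np.sum(onehot * np.log(softmax(z)), axis=-1)


# Hypothetical raw scores: the model is very sure about class 0, and it is right.
scores = np.array([[8.0, -8.0]])
label = np.array([[1.0, 0.0]])

loss_from_scores = cross_entropy_with_logits(scores, label)
loss_from_probs = cross_entropy_with_logits(softmax(scores), label)

print("loss on raw scores:   %.6f" % loss_from_scores[0])
print("loss on probabilities: %.6f" % loss_from_probs[0])
```

With raw scores the loss for a confident correct prediction approaches zero. When probabilities are fed in instead, the inputs are confined to [0, 1], so for two classes the loss cannot drop below log(1 + e^-1), roughly 0.313, no matter how confident the model is.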

Finally, the training code, train.py, which is essentially the same as in the earlier post.

    # -*- coding: utf-8 -*-
    import os

    import numpy as np
    import tensorflow as tf

    from model.LeNet import *
    from utils.read_data import *

    tf.app.flags.DEFINE_integer('num_classes', 2, 'classification number.')
    tf.app.flags.DEFINE_integer('crop_width', 256, 'width of input image.')
    tf.app.flags.DEFINE_integer('crop_height', 256, 'height of input image.')
    tf.app.flags.DEFINE_integer('channels', 3, 'channel number of image.')
    tf.app.flags.DEFINE_integer('batch_size', 32, 'num of each batch')
    tf.app.flags.DEFINE_integer('num_epochs', 400, 'number of epoch')
    tf.app.flags.DEFINE_bool('continue_training', False, 'whether is continue training')
    tf.app.flags.DEFINE_float('learning_rate', 0.01, 'learning rate')
    tf.app.flags.DEFINE_string('dataset_path', './datasets/', 'path of dataset')
    tf.app.flags.DEFINE_string('checkpoints', './checkpoints/model.ckpt', 'path of checkpoints')
    FLAGS = tf.app.flags.FLAGS


    def main(_):
        # dataset process
        images, labels = get_data_list(FLAGS.dataset_path)
        images, labels = shuffle_data(images, labels)
        train_images, train_labels, val_images, val_labels = divide_dataset(images, labels, ratio=0.8)

        # define network input (NHWC order: batch, height, width, channels)
        input = tf.placeholder(tf.float32, shape=[FLAGS.batch_size, FLAGS.crop_height, FLAGS.crop_width, FLAGS.channels], name='input')
        output = tf.placeholder(tf.int32, shape=[FLAGS.batch_size, FLAGS.num_classes], name='output')

        # Control GPU resource utilization
        config = tf.ConfigProto(allow_soft_placement=True)
        config.gpu_options.allow_growth = True
        sess = tf.Session(config=config)

        # build network
        logits = build_LeNet(input, FLAGS.num_classes)

        # loss
        cross_entropy_loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=logits, labels=output))
        regularization_loss = tf.reduce_sum(tf.get_collection(tf.GraphKeys.REGULARIZATION_LOSSES))
        loss = cross_entropy_loss + regularization_loss

        # optimizer
        train_op = tf.train.AdamOptimizer(FLAGS.learning_rate).minimize(loss)

        # calculate correct
        correct_prediction = tf.equal(tf.argmax(logits, 1), tf.argmax(output, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

        with sess.as_default():
            # init all parameters
            saver = tf.train.Saver(max_to_keep=1000)
            sess.run(tf.global_variables_initializer())

            # restore weights file
            if FLAGS.continue_training:
                saver.restore(sess, FLAGS.checkpoints)

            # begin training
            for epoch in range(FLAGS.num_epochs):
                print("===epoch %d===" % epoch)

                # training
                train_loss, train_acc, n_batch = 0, 0, 0
                for train_images_batch, train_labels_batch in mini_batches(train_images, train_labels, FLAGS.batch_size, FLAGS.crop_height, FLAGS.crop_width):
                    train_labels_batch = one_hot(train_labels_batch, FLAGS.num_classes)
                    _, err, acc = sess.run([train_op, loss, accuracy], feed_dict={input: train_images_batch, output: train_labels_batch})
                    train_loss += err
                    train_acc += acc
                    n_batch += 1
                print(" train loss: %f" % (np.sum(train_loss) / n_batch))
                print(" train acc: %f" % (np.sum(train_acc) / n_batch))

                # validation (no train_op, so the weights are not updated)
                val_loss, val_acc, n_batch = 0, 0, 0
                for val_images_batch, val_labels_batch in mini_batches(val_images, val_labels, FLAGS.batch_size, FLAGS.crop_height, FLAGS.crop_width):
                    val_labels_batch = one_hot(val_labels_batch, FLAGS.num_classes)
                    err, acc = sess.run([loss, accuracy], feed_dict={input: val_images_batch, output: val_labels_batch})
                    val_loss += err
                    val_acc += acc
                    n_batch += 1
                print(" validation loss: %f" % (np.sum(val_loss) / n_batch))
                print(" validation acc: %f" % (np.sum(val_acc) / n_batch))

                # Create directories if needed
                if not os.path.isdir("checkpoints"):
                    os.makedirs("checkpoints")
                saver.save(sess, "%s/model.ckpt" % ("checkpoints"))

        sess.close()


    if __name__ == '__main__':
        tf.app.run()

Results:

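    validation loss: 1.332082
    validation acc: 0.572750

My guess is that the network is too small. These posts are only meant to walk through the classification networks, so data augmentation and tuning tricks are left for later posts.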
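As a rough check on the size question, the trainable parameters can be tallied from the layer definitions in LeNet.py. This is a back-of-the-envelope sketch, not a measurement. It assumes the 256x256x3 input from train.py, slim's default 'SAME' padding for the convolutions (so only the two 2x2 poolings shrink the feature map, down to 64x64), and one bias per output unit. The helper names are made up for illustration.

```python
def conv_params(kernel, c_in, c_out):
    # One kernel x kernel x c_in filter per output channel, plus one bias each.
    return kernel * kernel * c_in * c_out + c_out


def fc_params(n_in, n_out):
    # A full weight matrix plus one bias per output unit.
    return n_in * n_out + n_out


# Flattened pool2 output for a 256x256 input: 64 x 64 spatial, 64 channels.
flat = 64 * 64 * 64

layer_params = {
    "conv1": conv_params(5, 3, 32),
    "conv2": conv_params(5, 32, 64),
    "fc1": fc_params(flat, 1024),
    "fc2": fc_params(1024, 1024),
    "output": fc_params(1024, 2),
}
total = sum(layer_params.values())

for name, count in layer_params.items():
    print("%-6s %12d  (%.2f%% of total)" % (name, count, 100.0 * count / total))
print("total  %12d" % total)

# For comparison: a dense layer mapping the full input to a conv1-sized output.
dense_equivalent = fc_params(256 * 256 * 3, 256 * 256 * 32)
print("dense layer in place of conv1: %d" % dense_equivalent)
```

Under these assumptions nearly all of the parameters sit in the first fully connected layer, while the two convolutional layers together hold only about 54 thousand, which is the weight-sharing effect described earlier.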