Paper: https://arxiv.org/abs/1604.03540v1

Problem:

Positive and negative samples are imbalanced, so training keeps spending its effort on the easy examples.

Existing solution:

Bootstrapping

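My first guess was that this is basically the Boosting family from classical machine learning, AdaBoost being the best-known member: after each training round, the weights of misclassified samples are increased, several rounds produce several classifiers, and the classifiers jointly make the final decision. Strictly speaking, though, bootstrapping in the paper means hard negative mining: train the model on the current working set of examples, then freeze it, use it to find new false positives, add those to the working set, and repeat. Either way, it does not fit modern neural networks well, because training takes so long that we cannot afford to wait for a full training run before adjusting the examples.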
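To make the alternation concrete, here is a minimal numpy sketch of bootstrapping as hard negative mining on a toy logistic-regression classifier. Everything in it is an assumption for illustration: the helpers `train_logreg` and `bootstrap`, the linear model, the toy data, and the round/size parameters are hypothetical and not taken from the paper.

```python
import numpy as np

def sigmoid(z):
    # Numerically stable logistic function
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def train_logreg(X, y, epochs=200, lr=0.1):
    """Full-batch gradient descent on the logistic loss (stands in for 'train on the working set')."""
    w = np.zeros(X.shape[1])
    for _ in range(epochs):
        w -= lr * X.T @ (sigmoid(X @ w) - y) / len(y)
    return w

def bootstrap(X_pos, X_neg, rounds=3, init_neg=50, add_per_round=50, seed=0):
    """Hypothetical hard negative mining loop: alternate full training and mining."""
    rng = np.random.default_rng(seed)
    active = rng.choice(len(X_neg), size=init_neg, replace=False)  # random initial negatives
    w = None
    for r in range(rounds):
        # Step 1: train on the fixed working set
        X = np.vstack([X_pos, X_neg[active]])
        y = np.concatenate([np.ones(len(X_pos)), np.zeros(len(active))])
        w = train_logreg(X, y)
        if r == rounds - 1:
            break  # last round: keep the model trained on the final working set
        # Step 2: freeze the model and score every negative; high score = false alarm
        ranked = np.argsort(-(X_neg @ w))
        new = ranked[~np.isin(ranked, active)][:add_per_round]  # hardest not-yet-used negatives
        active = np.concatenate([active, new])
    return w, active

# Toy data: 2-D features with a bias column of ones appended
rng = np.random.default_rng(1)
X_pos = np.hstack([rng.normal(2.0, 1.0, (100, 2)), np.ones((100, 1))])
X_neg = np.hstack([rng.normal(-2.0, 1.0, (1000, 2)), np.ones((1000, 1))])
w, active = bootstrap(X_pos, X_neg)
```

Each mining step has to wait for a complete `train_logreg` run, which is exactly the cost that becomes prohibitive when the model is a deep network.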

Main solution:

Our main observation is that these alternating steps can be combined with how FRCN is trained using online SGD. The key is that although each SGD iteration samples only a small number of images, each image contains thousands of example RoIs from which we can select the hard examples rather than a heuristically sampled subset. This strategy fits the alternation template to SGD by “freezing” the model for only one mini-batch. Thus the model is updated exactly as frequently as with the baseline SGD approach and therefore learning is not delayed.

In other words, instead of training to convergence before switching phases, we look for hard examples inside every mini-batch of SGD.
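The per-mini-batch version can be sketched the same way. In this hypothetical numpy toy, each iteration draws `num_candidates` examples (standing in for the thousands of RoIs in a couple of images), scores them with the current weights, keeps the `num_hard` highest-loss ones and takes a single SGD step on them. The function names, sizes and the logistic-regression model are my assumptions; in the paper the selection happens inside Fast R-CNN.

```python
import numpy as np

def sigmoid(z):
    # Numerically stable logistic function
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def per_example_loss(w, X, y):
    """Binary cross-entropy per example, no reduction (stable via logaddexp)."""
    z = X @ w
    return y * np.logaddexp(0.0, -z) + (1 - y) * np.logaddexp(0.0, z)

def ohem_sgd(X, y, iters=200, num_candidates=256, num_hard=32, lr=0.5, seed=0):
    """Online hard example mining: the model is 'frozen' for just one mini-batch."""
    rng = np.random.default_rng(seed)
    w = np.zeros(X.shape[1])
    for _ in range(iters):
        idx = rng.choice(len(X), size=num_candidates, replace=False)  # candidate "RoIs"
        losses = per_example_loss(w, X[idx], y[idx])                 # forward pass with current w
        hard_idx = idx[np.argsort(-losses)[:num_hard]]               # keep the highest-loss ones
        Xh, yh = X[hard_idx], y[hard_idx]
        w -= lr * Xh.T @ (sigmoid(Xh @ w) - yh) / num_hard           # one SGD step on them only
    return w

# Toy imbalanced data (1 positive : 20 negatives) with a bias column
rng = np.random.default_rng(1)
X = np.hstack([np.vstack([rng.normal(2.0, 1.0, (100, 2)),
                          rng.normal(-2.0, 1.0, (2000, 2))]), np.ones((2100, 1))])
y = np.concatenate([np.ones(100), np.zeros(2000)])
w = ohem_sgd(X, y)
```

Unlike the bootstrapping loop, the weights change on every iteration, so the model is updated as often as plain SGD and learning is not delayed.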

Implementation details

- The hard examples in each iteration are simply the RoIs with a large loss in the forward pass.

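- Just sorting by loss and keeping the top ones has a flaw: one hard region is usually covered by several overlapping proposals, so the top few entries may all be the same region. Therefore NMS (non-maximum suppression), ranked by loss, is applied first.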
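A minimal sketch of loss-ranked selection with NMS, assuming boxes in `(x1, y1, x2, y2)` format and a per-RoI loss that has already been computed. `iou` and `select_hard_examples` are hypothetical helpers; the 0.7 default is the relaxed IoU threshold I recall from the paper and should be treated as a tunable assumption.

```python
import numpy as np

def iou(box, boxes):
    """IoU between one box and an array of boxes, all in (x1, y1, x2, y2) format."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def select_hard_examples(boxes, losses, batch_size, iou_thresh=0.7):
    """Greedy NMS ranked by loss: keep the highest-loss RoI, drop the
    remaining RoIs that overlap it by more than iou_thresh, repeat."""
    order = np.argsort(-losses)
    keep = []
    while order.size > 0 and len(keep) < batch_size:
        i = order[0]
        keep.append(i)
        rest = order[1:]
        order = rest[iou(boxes[i], boxes[rest]) <= iou_thresh]
    return np.array(keep, dtype=int)

boxes = np.array([[0, 0, 10, 10],
                  [0.5, 0.5, 10.5, 10.5],   # near-duplicate of box 0
                  [50, 50, 60, 60],
                  [0, 0, 10, 10.5]])        # another near-duplicate of box 0
losses = np.array([2.0, 1.9, 1.0, 0.5])
picked = select_hard_examples(boxes, losses, batch_size=3)  # -> array([0, 2])
```

Plain top-3 by loss would return RoIs 0, 1 and 2, but RoI 1 overlaps RoI 0 with IoU ≈ 0.82 (and RoI 3 with IoU ≈ 0.95), so both are suppressed and the batch budget goes to distinct regions.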

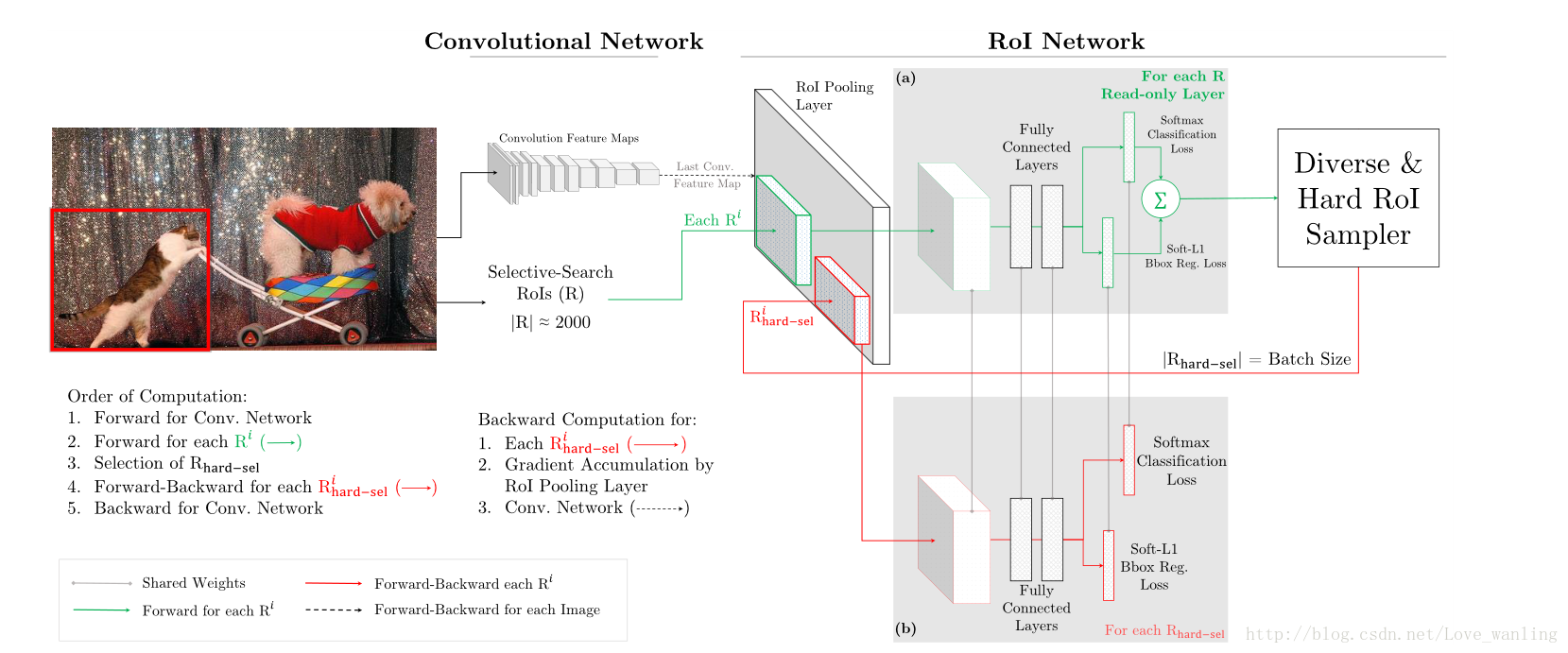

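- Done the straightforward way, we could simply set the loss of the non-hard examples to 0 and train as usual. But those zero-loss examples would still go through backprop, which wastes computation. So the authors came up with a workaround: keep two copies of the network with the same weights. A read-only copy runs only the forward pass over all RoIs and picks out the hard examples; the other copy runs the forward and backward passes on the hard examples alone. The network structure is as follows: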
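The claim that zeroing the loss gives the same update as training only on the hard examples, just at a higher cost, can be checked numerically. In this hypothetical numpy sketch with a logistic-regression "RoI head", `grad_masked` keeps all N rows and zeroes the non-hard losses, while `grad_subset` runs the forward and backward pass on only the B selected rows; both normalize by B. A single weight vector `w` plays both roles: it scores every RoI in the read-only pass and is what the training pass differentiates. Names, sizes and the model are assumptions, not the paper's code.

```python
import numpy as np

def sigmoid(z):
    # Numerically stable logistic function
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def grad_masked(w, X, y, hard):
    """Option 1: keep all N RoIs and zero the loss of the non-hard ones.
    The backward pass still multiplies through every row of X."""
    mask = np.zeros(len(X))
    mask[hard] = 1.0
    residual = mask * (sigmoid(X @ w) - y)
    return X.T @ residual / len(hard)

def grad_subset(w, X, y, hard):
    """Option 2 (two-copy idea): forward + backward only on the B hard rows."""
    Xh, yh = X[hard], y[hard]
    return Xh.T @ (sigmoid(Xh @ w) - yh) / len(hard)

rng = np.random.default_rng(0)
X = rng.normal(size=(2000, 5))
y = (rng.random(2000) < 0.1).astype(float)
w = rng.normal(size=5)

# Read-only pass: per-RoI loss for all N RoIs, no gradients needed
z = X @ w
losses = y * np.logaddexp(0.0, -z) + (1 - y) * np.logaddexp(0.0, z)
hard = np.argsort(-losses)[:128]

g_masked = grad_masked(w, X, y, hard)
g_subset = grad_subset(w, X, y, hard)
```

The two gradients agree, but `grad_masked` works through all 2000 rows while `grad_subset` touches only 128, which is the wasted computation described above.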

I kept wondering: why two copies? Wouldn't one be enough?

The paper itself attributes this to:

a limitation of current deep learning toolboxes