import requests

from bs4 import BeautifulSoup

import time

import random

#header里面加上cookie防止被ban

def getHTMLText(url,encoding='utf-8'):

header_list=["Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",

"Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",

"Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6"]

try:

kv={'user-agent':random.choice(header_list),'Cookie':'JSESSIONID=15A9FE2A6A2CC09A2FB61021BF8E8086; '

'user_trace_token=20170501124201-1adf364d88864075b61dde9bdd5871ea; '

'LGUID=20170501124202-850be946-2e28-11e7-b43c-5254005c3644; '

'index_location_city=%E5%8C%97%E4%BA%AC; TG-TRACK-CODE=index_search;'

' SEARCH_ID=0a596428cb014d3bab7284f879e214f0; Hm_lvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1493613734;'

' Hm_lpvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1493622592;'

' LGRID=20170501150939-247d4c29-2e3d-11e7-8a78-525400f775ce;'

' _ga=GA1.2.1933438823.1493613734'}

r=requests.get(url,headers=kv,timeout=10)

r.raise_for_status()

r.encoding=encoding

return r.text

except:

return ''

def parserHTML(html):

soup=BeautifulSoup(html,'html.parser')

job_name=[]

addr=[]

money=[]

exp=[]

deg=[]

company_name=[]

industry=[]

sel=[]

p_link=[]

lst=[]

for i in soup.find('div',attrs={'class':'s_position_list'}).find_all('li',class_='con_list_item default_list'):

job_name.append(i.find('h3').text)

addr.append(i.find('span',class_='add').text)

money.append(i.find('span',class_='money').text)

exp.append(i.find('div',class_='li_b_l').text.split('\n')[2].split('/')[0])

deg.append(i.find('div',class_='li_b_l').text.split('\n')[2].split('/')[1])

company_name.append(i.find('div',class_='company_name').text)

industry.append(i.find('div',class_='industry').text.strip('\n').strip(' ').strip('\n'))

sel.append(i.find('div',class_='li_b_r').text)

aa=i.find('a',class_='position_link')

p_link.append(aa.get('href')) #获取职位详情url

lst.append(job_name)

lst.append(addr)

lst.append(money)

lst.append(exp)

lst.append(deg)

lst.append(company_name)

lst.append(industry)

lst.append(sel)

lst.append(p_link)

return lst

# def printList(lst,fpath):

# tplt='{0:{8}<10}\t{1:{8}<30}\t{2:{8}<8}\t{3:{8}<10}\t{4:{8}<5}\t{5:{8}<10}\t{6:{8}<10\t{7:{8}<20}}'

# with open(fpath,'a',encoding='utf-8') as f:

# f.write(tplt.format('职位名称','工作地点','薪资','经验','学历','公司名称','行业','自述',chr(12288))+'\n')

# # f.write(tplt.format('1','2','3','4','5','6','7','8',chr(12288))+'\n')

# for i in range(len(lst[0])):

# f.write(tplt.format(lst[0][i],lst[1][i],lst[2][i],lst[3][i],lst[4][i],lst[5][i],lst[6][i],lst[7][i],chr(12288))+'\n')

def printListCSV(lst,cpath):

import csv

with open(cpath,'a',newline='',encoding='utf_8_sig') as c:

csv.writer(c).writerow(['编号','职位名称','工作地点','薪资','经验','学历','公司名称','行业','自述','详细'])

for i in range(len(lst[0])):

csv.writer(c).writerow([i+1,lst[0][i],lst[1][i],lst[2][i],lst[3][i],lst[4][i],lst[5][i],lst[6][i],lst[7][i],lst[8][i]])

def getDetails(dlst):

details=[]

header_list = [

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",

"Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",

"Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6"]

for url in dlst:

time.sleep(1)

try:

r=requests.get(url,headers={'user-agent':random.choice(header_list),'Cookie':'JSESSIONID=15A9FE2A6A2CC09A2FB61021BF8E8086; '

'user_trace_token=20170501124201-1adf364d88864075b61dde9bdd5871ea; '

'LGUID=20170501124202-850be946-2e28-11e7-b43c-5254005c3644; '

'index_location_city=%E5%8C%97%E4%BA%AC; TG-TRACK-CODE=index_search;'

' SEARCH_ID=0a596428cb014d3bab7284f879e214f0; Hm_lvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1493613734;'

' Hm_lpvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1493622592;'

' LGRID=20170501150939-247d4c29-2e3d-11e7-8a78-525400f775ce;'

' _ga=GA1.2.1933438823.1493613734'})

r.raise_for_status()

r.encoding='utf-8'

demo=r.text

except:

return ''

soup=BeautifulSoup(demo,'lxml')

a=soup.find('dl',attrs={'class':'job_detail'}).find('dd',attrs={'class':'job_bt'})

b=a.find('div').find_all('p')

d1=[]

for l in b:

d1.append(l.text)

details.append('\n'.join(d1))

return details

def main():

start_url='https://www.lagou.com/zhaopin/Python/'

URLS=[]

fpath='D://lagou.txt'

cpath='D://lagou.csv'

LST=[[],[],[],[],[],[],[],[],[]]

for i in range(1,3):

if i==0:

URLS.append(start_url)

else:

url=start_url+str(i+1)

URLS.append(url)

for i in URLS:

time.sleep(1)

html=getHTMLText(i)

lst=parserHTML(html)

print(lst)

for i in range(9):

LST[i]=LST[i]+lst[i]

dlst=LST[-1]

d=getDetails(dlst)

LST[-1]=d

print(LST)

printListCSV(LST,cpath)

main()这个爬虫程序爬取lagou网上以python为关键字搜索的职位的前两页共30条招聘信息,并输出成csv文件

下面将这个csv文件中的信息导入mysql数据库中

首先创建一个表格:

>create table lagou_python

(职位名称 varchar(100),

工作地点 varchar(50),

薪资 varchar(50),

经验 varchar(50),

学历 varchar(20),

公司名称 varchar(200),

行业 varchar(200),

自述 varchar(200),

详细 varchar(5000));

将csv文件的信息输入到新建的table中(删掉了csv中的第一列和第一行)

>load data infile'D:\\lagou.csv'

into table lagou_python

fields terminated by ',' optionally enclosed by '"' escaped by '"'

lines terminated by '\r\n';成功导入后再添加上一列序号作为主键,放在第一列

>alter table lagou_python add 编号 int auto_increment primary key first;看到多了一列,正是添加进的编号列

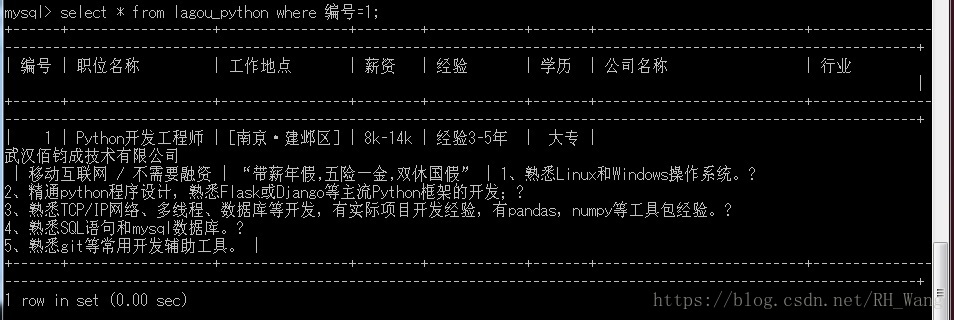

利用select语句选取第一条信息:

>select * from lagou_python where 编号=1;