在上一篇对拉勾网url分析的基础上,这一篇开始爬取拉勾网上面的职位信息。既然,现在是使用的爬虫,那么就获取拉钩网上的爬虫工程师的职位的信息。上一篇的链接:python爬虫 —爬拉勾网python爬虫职位(一)

(一)动工前分析

1.需要获取的信息:

(1)职位名称

(2)薪资

(3)要求工作时间

(4)岗位所在地点

2.程序功能分析

根据上面的分析,可以简单地将程序分为三个部分:(1)获取url, (2)获取职位信息,( 3)存储获取的信息

有了以上的分析,既可以开始动手写程序了。

扫描二维码关注公众号,回复:

1846828 查看本文章

(二)编写程序

1.导入相关模块

import requests

from bs4 import BeautifulSoup

from time import sleep, time

import csv

import json

import random最终的信息保存在csv文件中,因此,在这里导入 csv 模块

2.url构造

根据python爬虫 —爬拉勾网python爬虫职位(一)的介绍,url 的构造函数如下:

#构造 url

def url_create():

headers={'Cookie':'user_trace_token=20180617062143-a2c67f89-f721-42a0-a431-0713866d0fc1; __guid=237742470.3953059058839704600.1529187726497.5256;\

LGUID=20180617062145-a70aea81-71b3-11e8-a55c-525400f775ce; index_location_city=%E5%85%A8%E5%9B%BD;\

JSESSIONID=ABAAABAAAIAACBIA653C35B2B23133DCDB86365CEC619AE; PRE_UTM=; PRE_HOST=; PRE_SITE=;\

PRE_LAND=https%3A%2F%2Fwww.lagou.com%2Fjobs%2Flist_pythonpytho%25E7%2588%25AC%25E8%2599%25AB%3Fcity%3D%25E5%2585%25A8%25E5%259B%25BD;\

TG-TRACK-CODE=search_code; X_MIDDLE_TOKEN=8a8c6419e33ae49c13de4c9881b4eb1e; X_HTTP_TOKEN=5dd639be7b63288ce718c96fdd4a0035;\

_ga=GA1.2.1060168094.1529187728; _gid=GA1.2.1053446384.1529187728; _gat=1;\

Hm_lvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1529190520,1529198463,1529212181,1529212712;\

Hm_lpvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1529225935; LGSID=20180617164003-0752289a-720a-11e8-a8bc-525400f775ce;\

LGRID=20180617165832-9c78c400-720c-11e8-a8bf-525400f775ce; SEARCH_ID=1dab13db9fc14397a080b2d8a32b7f27; monitor_count=70',

'Host':'www.lagou.com',

'Origin':'https://www.lagou.com',

'Referer':'https://www.lagou.com/jobs/list_python%E6%95%B0%E6%8D%AE%E6%8C%96%E6%8E%98?city=%E5%85%A8%E5%9B%BD&cl=false&fromSearch=true&labelWords=&suginput=',

'User-Agent':'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36',

'X-Requested-With':'XMLHttpRequest'}

city_list = ['北京', '上海', '深圳', '广州','杭州','成都','南京','武汉','西安','厦门','长沙','苏州','天津']

position_list=['python爬虫',] #职位列表,可以继续添加职位

for city in city_list:

save_single_info(city) #写入城市信息

print("I am in {}".format(city))

for position in position_list:

url = "https://www.lagou.com/jobs/positionAjax.json?needAddtionalResult=false"

#获取职位页数的链接

url_page = "https://www.lagou.com/jobs/list_{}?city={}".format(position, city)

#获取职位页码的总页数

r = requests.post(url_page, headers=headers)

doc = BeautifulSoup(r.text, 'lxml')

page_count = int(doc.find(class_='span totalNum').string)

#职位页 遍历

for page in range(1,page_count+1):

if page == 1:

flag = 'true'

else:

flag = 'false'

data = {

'first':flag,

'pn':page,

'kd':position,

}

time_sleep = random.random()

sleep(time_sleep*10)

response = requests.post(url, headers = headers, data= data, timeout=10)

data= response.json()

html_parse(data)

2.职位信息的获取:

#职位详细信息获取

def html_parse(item):

info = item.get('content')

p_lsit = info.get('positionResult').get('result')

print(len(p_lsit))

for p in p_lsit:

result_item = {

"positionName": p.get("positionName"),

"salary": p.get("salary"),

"workYear": p.get('workYear'),

}

result_save(result_item) 职位信息还可以根据需要继续添加,这里只获取了名称,薪资和要求的工作年限

3.信息的存储

#结果信息存储

def result_save(result_item):

with open('lagou.csv','a',newline='',encoding='utf-8') as csvfile: #打开一个csv文件,用于存储

fieldnames=['positionName','salary','workYear']

writer=csv.DictWriter(csvfile,fieldnames=fieldnames)

writer.writerow(result_item)

#单行信息存储

def save_single_info(info):

with open('lagou.csv','a',newline='',encoding='utf-8') as csvfile:

writer=csv.writer(csvfile)

if type(info) == list:

writer.writerow(info)

else:

writer.writerow([info])4.主函数

#主程序

def main():

box_header = ['positionName','salary','workYear']

save_single_info(box_header) #写入表头

url_create() #url创建,并返回提取到的信息5.运行

#运行程序

if __name__ == '__main__':

start_time = time()

print("working...")

main()

end_time = time()

print("运行结束,用时:")

total_time = (end_time - start_time)/60

print(total_time)6.运行结果

(1)运行过程和总用时

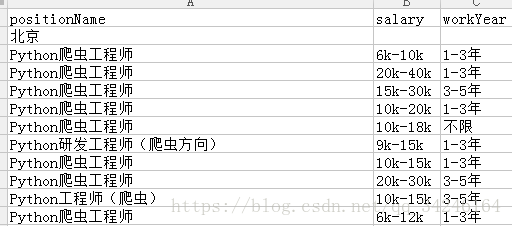

(2)存储的信息

(3)获取的职位总数:

(三)全部源码

import requests

from bs4 import BeautifulSoup

from time import sleep, time

import csv

import json

import random

#构造 url

def url_create():

headers={'Cookie':'user_trace_token=20180617062143-a2c67f89-f721-42a0-a431-0713866d0fc1; __guid=237742470.3953059058839704600.1529187726497.5256;\

LGUID=20180617062145-a70aea81-71b3-11e8-a55c-525400f775ce; index_location_city=%E5%85%A8%E5%9B%BD;\

JSESSIONID=ABAAABAAAIAACBIA653C35B2B23133DCDB86365CEC619AE; PRE_UTM=; PRE_HOST=; PRE_SITE=;\

PRE_LAND=https%3A%2F%2Fwww.lagou.com%2Fjobs%2Flist_pythonpytho%25E7%2588%25AC%25E8%2599%25AB%3Fcity%3D%25E5%2585%25A8%25E5%259B%25BD;\

TG-TRACK-CODE=search_code; X_MIDDLE_TOKEN=8a8c6419e33ae49c13de4c9881b4eb1e; X_HTTP_TOKEN=5dd639be7b63288ce718c96fdd4a0035;\

_ga=GA1.2.1060168094.1529187728; _gid=GA1.2.1053446384.1529187728; _gat=1;\

Hm_lvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1529190520,1529198463,1529212181,1529212712;\

Hm_lpvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1529225935; LGSID=20180617164003-0752289a-720a-11e8-a8bc-525400f775ce;\

LGRID=20180617165832-9c78c400-720c-11e8-a8bf-525400f775ce; SEARCH_ID=1dab13db9fc14397a080b2d8a32b7f27; monitor_count=70',

'Host':'www.lagou.com',

'Origin':'https://www.lagou.com',

'Referer':'https://www.lagou.com/jobs/list_python%E6%95%B0%E6%8D%AE%E6%8C%96%E6%8E%98?city=%E5%85%A8%E5%9B%BD&cl=false&fromSearch=true&labelWords=&suginput=',

'User-Agent':'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36',

'X-Requested-With':'XMLHttpRequest'}

city_list = ['北京', '上海', '深圳', '广州','杭州','成都','南京','武汉','西安','厦门','长沙','苏州','天津']

position_list=['python爬虫',] #职位列表,可以继续添加职位

for city in city_list:

save_single_info(city) #写入城市信息

print("I am in {}".format(city))

for position in position_list:

url = "https://www.lagou.com/jobs/positionAjax.json?needAddtionalResult=false"

#获取职位页数的链接

url_page = "https://www.lagou.com/jobs/list_{}?city={}".format(position, city)

#获取职位页码的总页数

r = requests.post(url_page, headers=headers)

doc = BeautifulSoup(r.text, 'lxml')

page_count = int(doc.find(class_='span totalNum').string)

#职位页 遍历

for page in range(1,page_count+1):

if page == 1:

flag = 'true'

else:

flag = 'false'

data = {

'first':flag,

'pn':page,

'kd':position,

}

time_sleep = random.random()

sleep(time_sleep*10)

response = requests.post(url, headers = headers, data= data, timeout=10)

data= response.json()

html_parse(data)

#职位详细信息获取

def html_parse(item):

info = item.get('content')

p_lsit = info.get('positionResult').get('result')

print(len(p_lsit))

for p in p_lsit:

result_item = {

"positionName": p.get("positionName"),

"salary": p.get("salary"),

"workYear": p.get('workYear'),

}

result_save(result_item)

#结果信息存储

def result_save(result_item):

with open('lagou.csv','a',newline='',encoding='utf-8') as csvfile: #打开一个csv文件,用于存储

fieldnames=['positionName','salary','workYear']

writer=csv.DictWriter(csvfile,fieldnames=fieldnames)

writer.writerow(result_item)

#单行信息存储

def save_single_info(info):

with open('lagou.csv','a',newline='',encoding='utf-8') as csvfile:

writer=csv.writer(csvfile)

if type(info) == list:

writer.writerow(info)

else:

writer.writerow([info])

#主程序

def main():

box_header = ['positionName','salary','workYear']

save_single_info(box_header) #写入表头

url_create() #url创建,并返回提取到的信息

#运行程序

if __name__ == '__main__':

start_time = time()

print("working...")

main()

end_time = time()

print("运行结束,用时:")

total_time = (end_time - start_time)/60

print(total_time)