一、Python

import numpy as np

import matplotlib.pyplot as plt

# 定义sigmoid函数

def sigmoid(x):

return 1 / (1 + np.exp(-x))

# 定义训练函数

def train(X, y, learning_rate, epochs):

m, n = X.shape # 获取数据集大小和特征数量

theta = np.zeros(n) # 初始化权重

for i in range(epochs): # 迭代训练

z = np.dot(X, theta) # 计算z值

h = sigmoid(z) # 计算预测值

gradient = np.dot(X.T, (h - y)) / m # 计算梯度

theta -= learning_rate * gradient # 更新权重

return theta

# 定义生成数据函数

def generate_data(num_examples):

# 生成随机特征和标签

X = np.random.normal(0.0, 1.0, (num_examples, 2))

y = np.zeros(num_examples)

for i in range(num_examples):

if X[i][0] + X[i][1] > 1:

y[i] = 1

return X, y

# 生成数据集

X, y = generate_data(1000)

# 标准化特征

X = (X - np.mean(X, axis=0)) / np.std(X, axis=0)

# 添加偏置项

X = np.hstack((np.ones((X.shape[0], 1)), X))

# 训练模型

theta = train(X, y, learning_rate=0.1, epochs=1000)

# 输出训练结果

print("theta: ", theta)

# 定义预测数据

x1_min, x1_max = X[:, 1].min(), X[:, 1].max()

x2_min, x2_max = X[:, 2].min(), X[:, 2].max()

xx1, xx2 = np.meshgrid(np.linspace(x1_min, x1_max), np.linspace(x2_min, x2_max))

X_pred = np.hstack((np.ones((xx1.ravel().shape[0], 1)), xx1.ravel().reshape(-1, 1), xx2.ravel().reshape(-1, 1)))

# 预测结果

y_pred = sigmoid(np.dot(X_pred, theta))

y_pred = y_pred.reshape(xx1.shape)

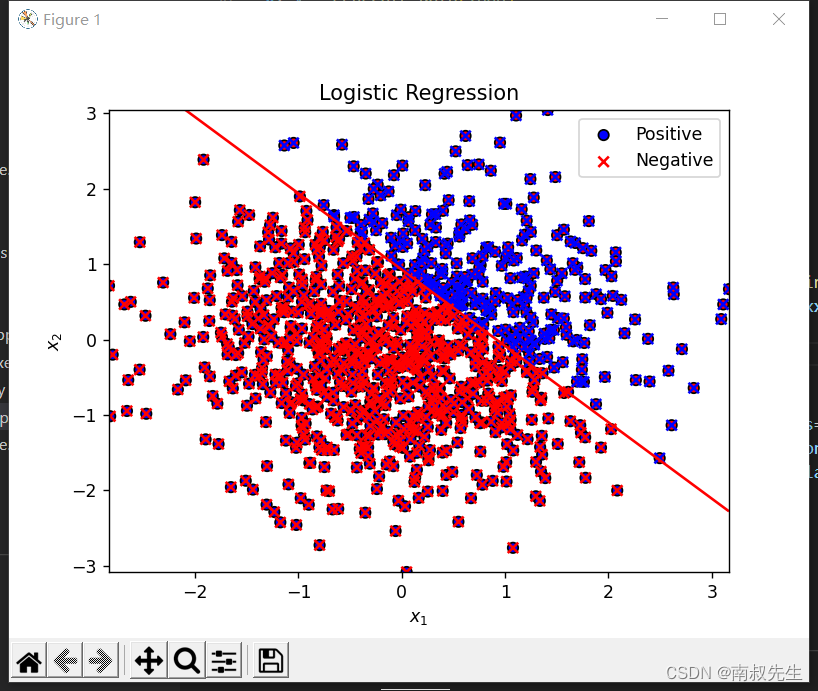

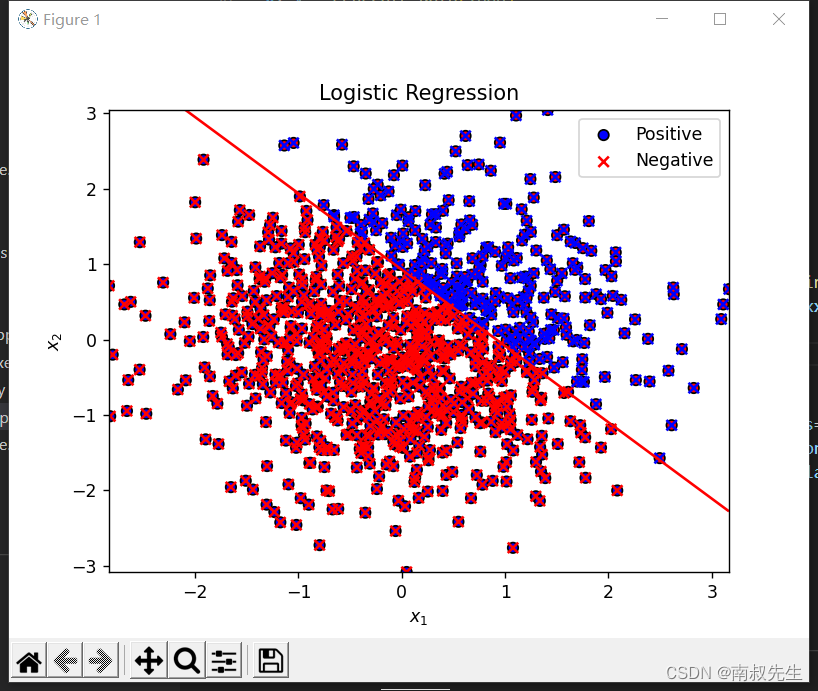

# 绘制训练数据和预测结果

plt.scatter(X[:, 1], X[:, 2], c=y, cmap='bwr', edgecolors='k', label='Positive') # 绘制正例散点图

plt.scatter(X[:, 1], X[:, 2], c=1-y, cmap='bwr', edgecolors='k', marker='x', label='Negative') # 绘制反例散点图

plt.contour(xx1, xx2, y_pred, levels=[0.5], colors='r', label='Decision Boundary') # 绘制决策边界等高线图

plt.legend() # 添加图例

plt.xlabel(r'$x_1$') # 添加x轴标签

plt.ylabel(r'$x_2$') # 添加y轴标签

plt.title('Logistic Regression') # 添加标题

plt.show() # 显示图形

二、C++

#include <iostream>

#include <vector>

#include <cmath>

#include <cstdlib>

#include <ctime>

using namespace std;

// 定义sigmoid函数

double sigmoid(double x) {

return 1.0 / (1.0 + exp(-x));

}

// 定义训练函数

vector<double> train(vector<vector<double>>& X, vector<double>& y, double learning_rate, int epochs) {

int m = X.size(); // 获取数据集大小

int n = X[0].size(); // 获取特征数量

vector<double> theta(n, 0.0); // 初始化权重

for (int i = 0; i < epochs; i++) { // 迭代训练

vector<double> z(m, 0.0); // 初始化z值

vector<double> h(m, 0.0); // 初始化预测值

for (int j = 0; j < m; j++) {

for (int k = 0; k < n; k++) {

z[j] += X[j][k] * theta[k]; // 计算z值

}

h[j] = sigmoid(z[j]); // 计算预测值

}

vector<double> gradient(n, 0.0); // 初始化梯度

for (int j = 0; j < n; j++) {

for (int k = 0; k < m; k++) {

gradient[j] += X[k][j] * (h[k] - y[k]); // 计算梯度

}

gradient[j] /= m;

theta[j] -= learning_rate * gradient[j]; // 更新权重

}

}

return theta;

}

// 定义生成数据函数

void generate_data(vector<vector<double>>& X, vector<double>& y, int num_examples) {

srand(time(NULL)); // 设置随机种子

for (int i = 0; i < num_examples; i++) {

double x1 = (double)rand() / RAND_MAX;

double x2 = (double)rand() / RAND_MAX;

X[i] = {1.0, x1, x2}; // 添加偏置项

if (x1 + x2 > 1) {

y[i] = 1.0;

} else {

y[i] = 0.0;

}

}

}

int main() {

int num_examples = 1000; // 数据集大小

vector<vector<double>> X(num_examples, vector<double>(3, 0.0)); // 特征矩阵

vector<double> y(num_examples, 0.0); // 标签向量

generate_data(X, y, num_examples); // 生成数据集

double learning_rate = 0.1; // 学习率

int epochs = 1000; // 迭代次数

vector<double> theta = train(X, y, learning_rate, epochs); // 训练模型

cout << "theta: ";

for (int i = 0; i < theta.size(); i++) {

cout << theta[i] << " ";

}

cout << endl;

return 0;

}