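import numpy as np
import matplotlib.pyplot as plt
from keras.models import Sequential
from keras.layers import Dense, Activation
from keras import optimizers

# 1. Create the dataset: 12 points with two features each
user_train = np.array([[1.85, 1.05],
                       [1.32, 2.45],
                       [1.66, 3.56],
                       [1.33, 1.56],
                       [1.90, 2.33],
                       [2.88, 2.11],
                       [1.01, 2.42],
                       [3.66, 3.34],
                       [0.11, 0.34],
                       [0.78, 0.32],
                       [0.65, 0.45],
                       [0.56, 0.99]])
# Labels: the first six points are class 0, the last six are class 1
user_label = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1])

# Plot the raw data (uncomment to show the two classes)
# plt.scatter(user_train[user_label == 0, 0], user_train[user_label == 0, 1])
# plt.scatter(user_train[user_label == 1, 0], user_train[user_label == 1, 1])
# plt.show()

# 2. Build the model: one Dense unit followed by a sigmoid, i.e. logistic regression
model = Sequential()
model.add(Dense(input_dim=2, units=1))   # computes w1*x1 + w2*x2 + b
model.add(Activation('sigmoid'))         # sigmoid activation turns the output into a probability
sgd = optimizers.SGD()                   # plain SGD with the default learning rate (0.01)
model.compile(loss='binary_crossentropy', optimizer=sgd)  # choose the loss function and optimizer

# 3. Train: each call performs one gradient step on all 12 samples
print('Training')
for step in range(1000):
    cost = model.train_on_batch(user_train, user_label)
    if step % 50 == 0:
        print('After %d training steps, the cost: %f' % (step, cost))

# 4. Test (on the training data itself; there is no separate test set)
print('Testing......')
cost = model.evaluate(user_train, user_label, batch_size=40)
print('cost =', cost)
y_pred = model.predict(user_train)
# Threshold the predicted probabilities at 0.5 to get class labels 0/1
y_pred = (y_pred > 0.5).astype('int')
print(y_pred)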
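
For reference, here is a minimal NumPy sketch of what the Keras model above computes during training: a linear score w·x + b passed through a sigmoid, the mean binary cross-entropy loss, and one full-batch gradient-descent step per iteration (calling `train_on_batch` on all 12 samples with Keras's `SGD`, which has no momentum by default, amounts to exactly this kind of step). The helper names `sigmoid`, `binary_crossentropy` and `train`, the small random-normal initialisation and the clipping epsilon are assumptions of this sketch, not part of the original code. Keras's `Dense` layer starts from Glorot-uniform weights and a zero bias by default, so the printed numbers will not match the Keras run exactly.

```python
import numpy as np

# Same 12 points and labels as the Keras script
X = np.array([[1.85, 1.05], [1.32, 2.45], [1.66, 3.56], [1.33, 1.56],
              [1.90, 2.33], [2.88, 2.11], [1.01, 2.42], [3.66, 3.34],
              [0.11, 0.34], [0.78, 0.32], [0.65, 0.45], [0.56, 0.99]])
y = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1], dtype=float)


def sigmoid(z):
    """Logistic function: maps a real score to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def binary_crossentropy(y_true, p, eps=1e-7):
    """Mean binary cross-entropy; eps (an assumed value) avoids log(0)."""
    p = np.clip(p, eps, 1.0 - eps)
    return -np.mean(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p))


def train(X, y, lr=0.01, steps=1000, seed=0):
    """Full-batch gradient descent for logistic regression (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.1, size=X.shape[1])  # weights of the single unit (assumed init)
    b = 0.0                                     # bias of the single unit
    losses = []
    for _ in range(steps):
        p = sigmoid(X @ w + b)                  # forward pass: linear score + sigmoid
        losses.append(binary_crossentropy(y, p))
        grad_z = (p - y) / len(y)               # d(mean BCE)/d(score) for each sample
        w -= lr * (X.T @ grad_z)                # gradient step on the weights
        b -= lr * grad_z.sum()                  # gradient step on the bias
    return w, b, losses


w, b, losses = train(X, y)
probs = sigmoid(X @ w + b)
y_pred = (probs > 0.5).astype(int)              # same 0.5 threshold as the script
print("initial loss:", losses[0], "final loss:", losses[-1])
print(y_pred)
```

Because this loss is convex in w and b and the learning rate is small, the loss shrinks over the 1000 steps. Note that the two classes in this dataset are not linearly separable: the class-0 point (1.90, 2.33) lies inside the triangle formed by the class-1 points (0.11, 0.34), (1.01, 2.42) and (3.66, 3.34). No choice of w and b can therefore classify all 12 points correctly, whether the model is trained with Keras or with this sketch.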

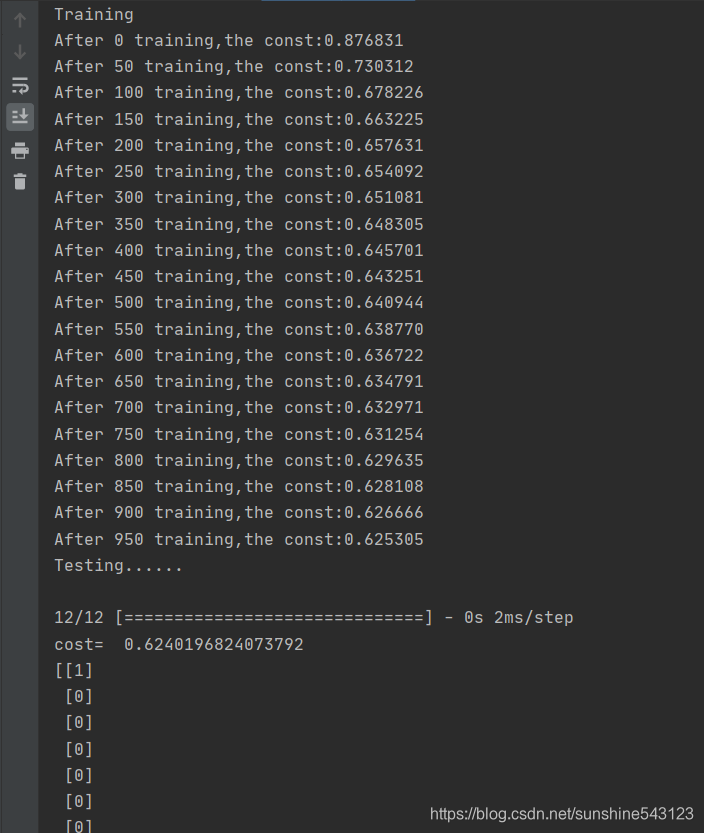

Output: