根据模型的数学原理进行简单的代码自我复现以及使用测试,仅作自我学习用。模型原理此处不作过多赘述,仅罗列自己将要使用到的部分公式。

如文中或代码有错误或是不足之处,还望能不吝指正。

本文侧重于使用numpy重新写出一个使用BP算法反向传播的MLP模型,故而不像其他文章那样加入图片演示正向传播与反相传播的原理或是某个特定函数的求导过程以及结论。

深度学习的一个典型例子就是将多层感知机(由输入到输出映射的数学函数)叠加在一起,由多个简单的函数符合在一起去拟合真实的目标函数;每一层隐藏层用以提取数据中的抽象特征,并且加入激活函数以拟合非线性函数。

前向传播:

其中,为输入层/隐藏层/输出层的输入;

为这一层每个神经元的权重,

为神经元的偏置,

为激活函数,

为这一层的输出。

我们训练的目标,就是通过梯度下降的思想,求出和

的梯度方向,在那个方向上,进行参数更新。

BP算法:输出层到隐含层

根据链式法则,

其中,为损失值Loss的逆向梯度,可以根据损失函数进求导进行计算;

为从输出层到隐含层的梯度,可以根据最后一个隐含层的激活函数求得;

为隐含层输出到自身某个权重参数

的梯度,因为

(其中

为上一层隐含层的输出),所以

。

隐含层到隐含层:

其中,为这一层的输出到上一层的梯度,可以通过激活函数求导获得;

,理由同上。

隐含层到输入层计算方法类似。

使用numpy实现的MLP:

import numpy as np

class MLP_np:

def __init__(self,act='sigmoid',loss='MSE',norm_initialize=True):

"""

初始化,初始化以下内容:

self.weights:各层的权重,以[ch_in,ch_out]实现

self.biases:各层的偏置

self.outs:各层输出,训练时保存正向传播的结果,反向传播时使用

self.act:激活函数,目前只支持sigmoid,relu,tanh

self.loss:损失函数,此处只支持MSE

norm_initialize:是否使用正态分布随机初始权重

"""

self.sizes = [784,30,30,10] #各层神经元个数,此处暂时不将sizes作为参数

#各层的权重,形状为[784,30][30,30][30,10]

self.weights = []

for i in range(len(self.sizes)-1):

if norm_initialize:

np.random.seed(i)

self.weights.append(np.random.normal(0,1,(self.sizes[i],self.sizes[i+1])))

else:

self.weights.append(np.random.randn(self.sizes[i],self.sizes[i+1]))

#初始化各层偏置

if norm_initialize:

np.random.seed(i)

self.biases = [np.random.normal(0,1,(i,)) for i in self.sizes[1:]]

else:

self.biases = [np.random.randn(i) for i in self.sizes[1:]]

#各层的输出

self.outs = []

self.act=act

self.loss = loss

#加入sigmoid函数

def _activation(self,z,act='sigmoid'):

"""

正向传播的激活函数

"""

if act == 'sigmoid':

return 1.0/(1.0+np.exp(-z))

elif act == 'relu':

return np.maximum(z,0)

elif act == 'tanh':

return (np.exp(z)-np.exp(-z))/(np.exp(z)+np.exp(-z))

else:

raise ValueError("必须是sigmoid,relu或是tanh")

def _d_activation(self,z,act='sigmoid'):

"""

激活函数求导,反向传播

"""

if act == 'sigmoid':

return z*(1-z)

elif act == 'relu':

z = np.maximum(z,0)

z[z>0] = 1

return z

elif act == 'tanh':

return 1-self._activation(z,act='tanh')**2

else:

raise ValueError("必须是sigmoid,relu或是tanh")

def _loss(self,pred,label,loss='MSE'):

"""

损失函数

"""

if loss == "MSE":

return ((pred-label)**2)/2

else:

raise ValueError("暂时不支持其他")

def _loss_back(self,pred,label,loss='MSE'):

"""

计算损失函数逆向的求导

此处没有考虑“在多分类问题之后加入softmax层”的情况

,所以MSE与交叉熵的损失函数不会有“softmax”的内容

"""

if loss == "MSE":

return (pred-label)

else:

raise ValueError("暂时不支持其他")

def forward(self,x,train=True):

"""

前向传播

x:输入

train:是否正在训练,True时将其存档

"""

self.outs.clear()

for i in range(len(self.sizes)-1):

w = self.weights[i]

b = self.biases[i]

z = np.dot(x,w)+b

#激活函数

x = self._activation(z)

if train:

self.outs.append(x)

return x

def backward(self,x,y,learning_rate = 0.01):

"""

后向传播,全量梯度下降

x:输入

y:输出

learning_rate:学习率

"""

self.forward(x)

#将个各层的梯度方向保存起来

nabla_w = [np.zeros(w.shape) for w in self.weights]

nabla_b = [np.zeros(b.shape) for b in self.biases]

#输出层到隐含层

total_to_out = self._loss_back(self.outs[-1],y,self.loss)

out_to_net = self._d_activation(self.outs[-1],self.act)

net_to_w = self.outs[-2]

delta = total_to_out*out_to_net

nabla_w[-1] = np.dot(net_to_w.T,delta)

nabla_b[-1] = np.sum(delta,axis=0)

#隐含层到隐含层

net2_to_net1 = self._d_activation(self.outs[-2],self.act)

delta = np.dot(delta,self.weights[-1].T)*net2_to_net1

nabla_w[-2] = np.dot(self.outs[-3].T,delta)

nabla_b[-2] = np.sum(delta,axis=0)

#隐含层到输入层

net1_to_input = self._d_activation(self.outs[-3],self.act)

delta = np.dot(delta,self.weights[-2].T)*net1_to_input

nabla_w[-3] = np.dot(x.T,delta)

nabla_b[-3] = np.sum(delta,axis=0)

#更新

for i in range(len(nabla_b)):

self.weights[i] -= learning_rate*nabla_w[i]

self.biases[i] -= learning_rate*nabla_b[i]

def calc_loss(self,pred,label):

"""

计算损失函数

pred:预测值

label:真实值

"""

return self._loss(pred,label,self.loss)

def pred(self,x):

"""

使用模型预测,进行一次正向传播

x:输入

"""

y = self.forward(x,train=False)

return y使用MNIST数据集进行测试:

from torchvision import datasets

from torchvision import transforms

from torch.utils.data import DataLoader

from visdom import Visdom

train_loader = DataLoader(

datasets.MNIST('mnist_data',train=True,download=False,

transform = transforms.Compose(

[transforms.ToTensor()

]

)),batch_size=512,shuffle=True

)

test_loader = DataLoader(

datasets.MNIST('mnist_data',train=False,download=False,

transform = transforms.Compose(

[transforms.ToTensor()

]

)),batch_size=512,shuffle=True

)

myMLP = MLP_np()

viz = Visdom()

global_step=0

test_loss = []

test_acc = []

for epoch in range(100):

for batch_size,(x,y) in enumerate(train_loader):

global_step+=1

x = np.array(x).reshape(x.shape[0],28*28)

y_idx = np.array(y)

y = np.eye(10)[y_idx]

myMLP.backward(x,y)

pred = myMLP.pred(x)

#print(np.sum(myMLP.calc_loss(pred,y)))

if global_step%50==0:

viz.line([np.mean(myMLP.calc_loss(pred,y))],[global_step],win="train_loss",update="append")

if epoch % 10 == 0:

test_loss_epoch = 0

test_acc_epoch = 0

for batch_size,(x,y) in enumerate(test_loader):

x = np.array(x).reshape(x.shape[0],28*28)

y_idx = np.array(y)

y_true = np.eye(10)[y_idx]

pred = myMLP.pred(x)

test_loss_epoch += np.sum(myMLP.calc_loss(pred,y_true))/10000 #测试集大小

pred = np.argmax(myMLP.pred(x),axis=1)

test_acc_epoch += np.sum(np.equal(pred,np.array(y)))/10000

test_loss.append(test_loss_epoch)

test_acc.append(test_acc_epoch)

print(epoch,"test_loss",test_loss_epoch)

print(epoch,"test_acc",test_acc_epoch)

test_loss_epoch = 0

test_acc_epoch = 0

for batch_size,(x,y) in enumerate(test_loader):

x = np.array(x).reshape(x.shape[0],28*28)

y_idx = np.array(y)

y_true = np.eye(10)[y_idx]

pred = myMLP.pred(x)

test_loss_epoch += np.sum(myMLP.calc_loss(pred,y_true))/10000 #测试集大小

pred = np.argmax(myMLP.pred(x),axis=1)

test_acc_epoch += np.sum(np.equal(pred,np.array(y)))/10000

test_loss.append(test_loss_epoch)

test_acc.append(test_acc_epoch)

print(epoch,"test_loss",test_loss_epoch)

print(epoch,"test_acc",test_acc_epoch)

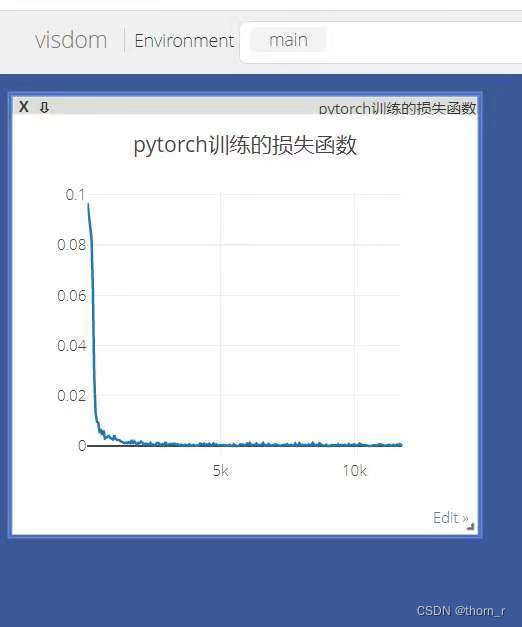

如图所示为在训练集上的损失

99 test_loss 0.04836542739525751

99 test_acc 0.9388000000000002在测试集上的准确率为0.9389

使用pytorch实现MLP做对比:

由于pytorch使用的优化器不是每一次都选取所有样本进行梯度下降,故而需要增加每层神经元个数

import numpy as np

from torchvision import datasets

from torchvision import transforms

from torch.utils.data import DataLoader

from visdom import Visdom

import torch

from torch import nn

from torch.nn import functional as F

train_loader = DataLoader(

datasets.MNIST('mnist_data',train=True,download=False,

transform = transforms.Compose(

[transforms.ToTensor()

]

)),batch_size=512,shuffle=True

)

test_loader = DataLoader(

datasets.MNIST('mnist_data',train=False,download=False,

transform = transforms.Compose(

[transforms.ToTensor()

]

)),batch_size=512,shuffle=True

)

model = nn.Sequential(

nn.Linear(784,200),

nn.Sigmoid(),

nn.Linear(200,200),

nn.Sigmoid(),

nn.Linear(200,10),

nn.Sigmoid()

)

optim = torch.optim.Adam(model.parameters(),lr=0.01)

using_loss = nn.MSELoss()

viz = Visdom()

global_step=0

test_loss = []

test_acc = []

for epoch in range(100):

for batch_idx,(x,y) in enumerate(train_loader):

global_step+=1

x.resize_([x.shape[0],28*28])

y = F.one_hot(y).to(torch.float32)

y_pred = model(x)

loss = using_loss(y_pred,y)

optim.zero_grad()

loss.backward()

optim.step()

if global_step%50==0:

viz.line([loss.item()],[global_step],win="train_loss_torch",update="append",opts = dict(title="pytorch训练的损失函数"))

if epoch % 10 == 0:

test_loss_epoch = 0

test_acc_epoch = 0

with torch.no_grad():

for batch_idx,(x,y) in enumerate(test_loader):

x.resize_([x.shape[0],28*28])

y_onehot = F.one_hot(y).to(torch.float32)

y_pred = model(x)

test_loss_epoch += using_loss(y_pred,y_onehot).item()

pred = np.argmax(np.array(y_pred),axis=1)

test_acc_epoch += np.sum(np.equal(pred,np.array(y)))

test_loss.append(test_loss_epoch)

test_acc.append(test_acc_epoch/10000)

print(epoch,"test_loss",test_loss_epoch)

print(epoch,"test_acc",test_acc_epoch/10000)

90 test_loss 0.08563304203562438

90 test_acc 0.9749