Conference: Interspeech 2020

Affiliation: Facebook

Author: Adam Polyak

Abstract

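- Trained on both speech and singing data. "Cross-domain" means that a source singing utterance can be converted to the voice of a target whose original recordings are either speech or singing.

- Waveform-to-waveform (wav2wav) conversion using a GAN.

- An ASR model extracts the acoustic (content) features, a CNN extracts the fundamental frequency (F0), and a loudness feature is extracted separately.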

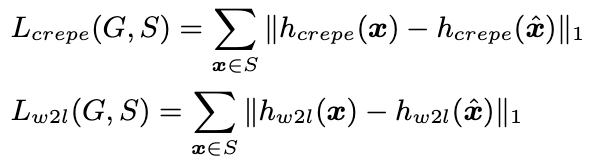

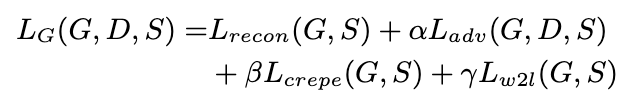

- Proposes perceptual losses: F0 consistency and content consistency between the reconstructed x and the original x.

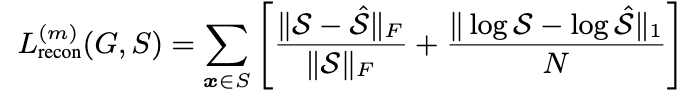

Figure 1: Proposed GAN architecture. (a) Generator architecture. Musical and speech features are extracted from a singing waveform (floud(x), fw2l(x), Γ(fcrepe(x))) and passed through context stacks (colored green). The features are then concatenated and temporally upsampled to match the audio frequency. The joint embedding is used to condition a non-causal WaveNet (colored blue), which receives random noise as input. (b) Discriminator architecture. Losses are drawn with dashed lines, input/output with solid lines. The discriminator (colored orange) differentiates between synthesized and real singing. Multi-scale spectral loss and perceptual losses are computed between matching real and generated samples.
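The caption mentions a multi-scale spectral loss between matching real and generated samples. Below is a minimal sketch of one common formulation (L1 distance on STFT magnitudes and on log-magnitudes, averaged per scale and summed over several FFT sizes). The paper's exact formulation, FFT sizes, and weighting are not given in these notes, so the function names, `fft_sizes`, the hop of `n_fft // 4`, and the Hann window are all assumptions; the naive DFT is only there to keep the example dependency-free.

```python
import cmath
import math


def stft_magnitudes(signal, n_fft, hop):
    """Magnitude spectra of Hann-windowed frames, using a naive DFT."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / n_fft) for n in range(n_fft)]
    frames = []
    for start in range(0, len(signal) - n_fft + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(n_fft)]
        spectrum = []
        for k in range(n_fft // 2 + 1):  # non-negative frequencies only
            acc = sum(
                frame[n] * cmath.exp(-2j * math.pi * k * n / n_fft)
                for n in range(n_fft)
            )
            spectrum.append(abs(acc))
        frames.append(spectrum)
    return frames


def multi_scale_spectral_loss(real, fake, fft_sizes=(64, 32, 16), eps=1e-7):
    """Sum over scales of the mean L1 magnitude + L1 log-magnitude distance.

    Assumed formulation for illustration, not necessarily the paper's.
    """
    total = 0.0
    for n_fft in fft_sizes:
        hop = n_fft // 4  # assumed 75% overlap
        mag_real = stft_magnitudes(real, n_fft, hop)
        mag_fake = stft_magnitudes(fake, n_fft, hop)
        scale_loss, count = 0.0, 0
        for frame_real, frame_fake in zip(mag_real, mag_fake):
            for a, b in zip(frame_real, frame_fake):
                scale_loss += abs(a - b) + abs(math.log(a + eps) - math.log(b + eps))
                count += 1
        if count:  # skip scales larger than the signal
            total += scale_loss / count
    return total


# Toy usage: two short sinusoids with different frequencies.
real = [math.sin(2 * math.pi * 5 * n / 256) for n in range(256)]
fake = [math.sin(2 * math.pi * 9 * n / 256) for n in range(256)]
loss = multi_scale_spectral_loss(real, fake)
```

Comparing at several FFT sizes trades off time and frequency resolution, so the generator is penalized for mismatches at both fine and coarse scales. In practice an FFT library would replace the naive DFT.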

- In the multi-speaker setting, back-translation is used:

$$x^j_u = G(z, E(x_j), u)$$
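A rough sketch of how one back-translation training example could be built from the equation above. The notes only give the forward step; reusing the generated sample as input and the original x_j as the reconstruction target is an assumption based on the usual meaning of back-translation. `back_translation_pair`, `toy_E`, `toy_G`, `toy_noise`, and the use of integer speaker ids are all hypothetical stand-ins, not the paper's models.

```python
import random


def back_translation_pair(x_j, j, speakers, G, E, sample_noise, rng=random):
    """Build one back-translation example from a sample x_j of speaker j.

    Returns (x_u_j, j, x_j): the synthetic input, the speaker to convert
    back to, and the original waveform used as the reconstruction target.
    """
    u = rng.choice([s for s in speakers if s != j])  # a different target speaker
    z = sample_noise(len(x_j))
    x_u_j = G(z, E(x_j), u)  # x^j_u = G(z, E(x_j), u)
    return x_u_j, j, x_j


# Toy stand-ins (hypothetical) so the sketch runs end to end.
def toy_E(wave):
    """Identity 'content' features."""
    return list(wave)


def toy_G(z, features, speaker):
    """Ignores the noise and shifts the content by a speaker-dependent offset."""
    return [f + 0.1 * speaker for f in features]


def toy_noise(n):
    return [random.random() for _ in range(n)]


x = [0.0, 0.5, -0.5]
synthetic, back_to, target = back_translation_pair(
    x, 0, [0, 1, 2], toy_G, toy_E, toy_noise
)
```

Under this assumption, the network would then be trained so that G(z, E(synthetic), back_to) reconstructs `target`, which gives paired supervision without parallel recordings.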

Architecture

input → conv block (8 non-causal layers) → generator (WaveNet): maps data distributed as U(0,1) to a sample-level waveform → discriminator
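The conditioning path described in Figure 1 (concatenate the frame-level features, upsample them in time to the audio rate, and pair them with noise) can be sketched as follows. This is only an illustration: nearest-neighbour repetition is an assumed upsampling method, and `upsample_frames`, `build_generator_inputs`, and `hop` are hypothetical names. The context stacks and the WaveNet itself are omitted.

```python
import random


def upsample_frames(frames, factor):
    """Repeat each frame-level feature vector `factor` times (nearest neighbour)."""
    return [list(frame) for frame in frames for _ in range(factor)]


def build_generator_inputs(loudness, asr_feats, f0_feats, hop, rng=random):
    """Concatenate per-frame features, upsample to sample rate, and draw noise.

    Each argument is a list of per-frame feature vectors of equal length;
    `hop` is the number of audio samples per feature frame (assumed).
    """
    joint = [l + a + p for l, a, p in zip(loudness, asr_feats, f0_feats)]
    cond = upsample_frames(joint, hop)
    # One noise value per output sample, U(0,1) as stated in the notes.
    noise = [rng.uniform(0.0, 1.0) for _ in range(len(cond))]
    return noise, cond


# Toy usage: 2 frames, 4 samples per frame.
noise, cond = build_generator_inputs(
    loudness=[[0.1], [0.2]],
    asr_feats=[[1.0, 2.0], [3.0, 4.0]],
    f0_feats=[[5.0], [6.0]],
    hop=4,
)
```

The generator would then receive `noise` as input and `cond` as per-sample conditioning, producing one waveform sample per position.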

Experiments

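- Single-speaker data: LJSpeech; LCSING (single-singer singing data)

- Multi-speaker data: VCTK, NUS

Models are trained on the target speaker's data in three settings: speech only, singing only, and speech plus singing. At test time the input comes from the NUS dataset.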