I. Configuration files (core-site.xml, hdfs-site.xml, yarn-site.xml)

All three of these files are located in the following directory:

cd /usr/local/hadoop/etc/hadoop

Replace every occurrence of hadoopm in the configuration below with your own hostname.

Hadoop core-site.xml configuration:

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/root/hadoop_tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoopm:9000</value>

</property>

</configuration>
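To confirm that an edited file holds the value you expect, a property can be read back from the XML. The sketch below is a minimal, hypothetical helper (get_prop is not part of Hadoop) and assumes the layout used in this tutorial, with each <name> and <value> tag on its own line. On a machine where Hadoop is installed, hdfs getconf -confKey fs.defaultFS reports the value Hadoop itself resolves.

```shell
# get_prop FILE NAME: print the value of property NAME in a Hadoop XML file.
# Hypothetical helper; assumes <name> and <value> tags sit on their own lines.
get_prop() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1 }
    found && /<value>/ {
      v = $0
      sub(/.*<value>/, "", v)      # drop everything up to the opening tag
      sub(/<\/value>.*/, "", v)    # drop the closing tag and anything after it
      val = v                      # keep the last match if the name appears twice
      found = 0
    }
    END { if (val != "") print val }
  ' "$1"
}

# Example: get_prop /usr/local/hadoop/etc/hadoop/core-site.xml fs.defaultFS
```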

Hadoop hdfs-site.xml configuration:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///usr/local/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///usr/local/hadoop/dfs/data</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>hadoopm:50070</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoopm:50090</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>
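The two storage properties above are given as file:// URIs, which point at ordinary local directories. As a small illustration, the following sketch strips the scheme to show the paths they resolve to; uri_to_path is a hypothetical helper name, not a Hadoop command.

```shell
# uri_to_path URI: strip a leading file:// scheme, leaving the local path.
# Hypothetical helper for illustration only.
uri_to_path() {
  printf '%s\n' "${1#file://}"
}

name_dir=$(uri_to_path "file:///usr/local/hadoop/dfs/name")
data_dir=$(uri_to_path "file:///usr/local/hadoop/dfs/data")
echo "NameNode metadata: $name_dir"
echo "DataNode blocks:   $data_dir"
```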

Hadoop yarn-site.xml configuration:

<configuration>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>hadoopm:8033</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>hadoopm:8025</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>hadoopm:8030</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>hadoopm:8050</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>hadoopm:8030</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>hadoopm:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address</name>

<value>hadoopm:8090</value>

</property>

</configuration>
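Once the three blocks above have been pasted into their files, the hostname substitution can be scripted rather than done by hand. This is a minimal sketch: replace_host is a hypothetical helper, and it assumes the placeholder is spelled exactly hadoopm everywhere and that your hostname contains no "/" characters.

```shell
# replace_host CONF_DIR NEW_HOST: swap the placeholder hadoopm for NEW_HOST
# in the three files, keeping a .bak copy of each. Hypothetical helper.
replace_host() {
  conf_dir=$1
  new_host=$2
  for f in core-site.xml hdfs-site.xml yarn-site.xml; do
    if [ -f "$conf_dir/$f" ]; then
      sed -i.bak "s/hadoopm/$new_host/g" "$conf_dir/$f"
    fi
  done
}

# Example: replace_host /usr/local/hadoop/etc/hadoop "$(hostname)"
```

The .bak copies make it easy to compare or roll back if the substitution touches something it should not.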

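Remember: each of the configuration files above ships with its own stock <configuration> element. Delete it before pasting in the block, so that the file ends up with only one.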
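One way pasting can go wrong is leaving a file with two <configuration> elements, which is not well-formed XML. The sketch below is a rough check, assuming each opening tag sits on its own line; count_config_roots is a hypothetical helper name.

```shell
# count_config_roots FILE: print how many lines contain an opening <configuration> tag.
# Hypothetical helper; a correctly edited file should report 1.
count_config_roots() {
  grep -c '<configuration>' "$1"
}

# Example:
# for f in core-site.xml hdfs-site.xml yarn-site.xml; do
#   echo "$f: $(count_config_roots "/usr/local/hadoop/etc/hadoop/$f")"
# done
```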

In addition, set up the following directory and two files:

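mkdir ~/hadoop_tmp    # create the hadoop_tmp directory under ~ (the home directory)

/usr/local/hadoop/etc/hadoop# vim masters    # create a masters file and write this machine's hostname in it

/usr/local/hadoop/etc/hadoop# vim slaves    # edit the slaves file and write this machine's hostname in it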
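If you prefer not to open an editor, the two files can be written directly. This is a minimal sketch for the single-node setup described here, where both files contain only the local hostname; write_host_files is a hypothetical helper name, and it overwrites any existing masters and slaves files.

```shell
# write_host_files CONF_DIR HOST: write HOST as the only line of masters and slaves.
# Hypothetical helper; overwrites both files.
write_host_files() {
  conf_dir=$1
  host=$2
  printf '%s\n' "$host" > "$conf_dir/masters"
  printf '%s\n' "$host" > "$conf_dir/slaves"
}

# Example: write_host_files /usr/local/hadoop/etc/hadoop "$(hostname)"
```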

Once the configuration is complete:

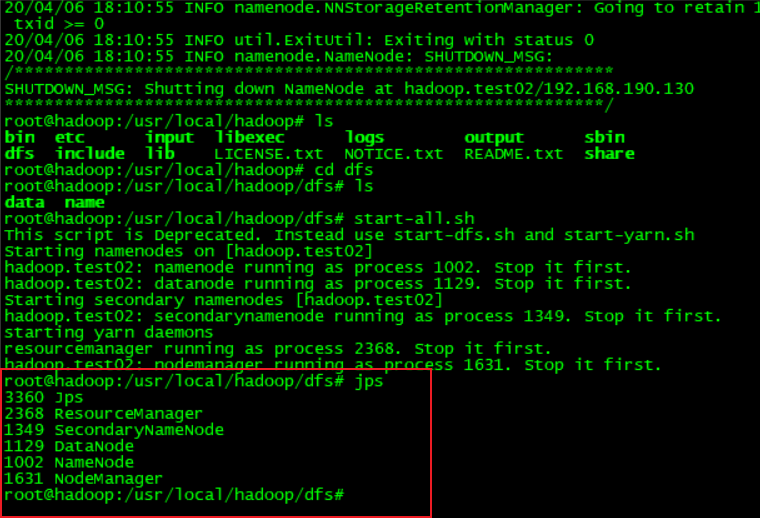

Format HDFS:

root@hadoop:/usr/local/hadoop/etc/hadoop# cd ..    # go up one directory

root@hadoop:/usr/local/hadoop/etc# cd ..    # go up one more directory

root@hadoop:/usr/local/hadoop# hdfs namenode -format    # format the NameNode

If the output looks like the screenshot above, the format succeeded.

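# cd dfs    # enter the dfs directory

# ls    # list its contents; after a successful format there is a name directory here (the data directory appears once the DataNode has started)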
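Formatting writes a VERSION file under name/current, so its presence is a quick way to tell whether the NameNode directory has already been formatted. Running the format again on a directory that is in use discards the existing HDFS metadata, so a check like this is worth doing first. The sketch assumes the dfs.namenode.name.dir path from the configuration above; is_formatted is a hypothetical helper name.

```shell
# is_formatted NAME_DIR: succeed if NAME_DIR already holds NameNode metadata.
# Hypothetical helper; looks for the VERSION file written by the format step.
is_formatted() {
  [ -f "$1/current/VERSION" ]
}

name_dir=/usr/local/hadoop/dfs/name   # path taken from hdfs-site.xml above
if is_formatted "$name_dir"; then
  echo "already formatted: $name_dir"
else
  echo "not formatted yet: $name_dir"
fi
```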

Then start Hadoop:

# start-all.sh    # start Hadoop

......

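# jps    # after startup, use jps to list the running Java processes; on success there are six (NameNode, DataNode, SecondaryNameNode, ResourceManager, NodeManager, and Jps itself)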
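Instead of counting the processes by eye, the jps output can be checked for the five Hadoop daemons (the sixth process is Jps itself). This is a minimal sketch that assumes the usual two-column jps output of PID and class name; missing_daemons is a hypothetical helper name.

```shell
# missing_daemons: read jps output on stdin and print each expected daemon
# that does not appear in it. Hypothetical helper.
missing_daemons() {
  out=$(cat)
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the second column exactly, so NameNode does not match SecondaryNameNode.
    printf '%s\n' "$out" | awk -v d="$d" '$2 == d { ok = 1 } END { exit !ok }' \
      || echo "$d"
  done
}

# Example: jps | missing_daemons
```

No output means all five daemons are running; any name printed is a daemon whose log under the Hadoop logs directory is worth inspecting.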

# stop-all.sh    # stop Hadoop