// Check which JDK packages ship with the system

[root@Master local]# rpm -qa | grep java

java-1.8.0-openjdk-1.8.0.131-11.b12.el7.x86_64

java-1.7.0-openjdk-headless-1.7.0.141-2.6.10.5.el7.x86_64

java-1.7.0-openjdk-1.7.0.141-2.6.10.5.el7.x86_64

java-1.8.0-openjdk-headless-1.8.0.131-11.b12.el7.x86_64

javapackages-tools-3.4.1-11.el7.noarch

tzdata-java-2017b-1.el7.noarch

python-javapackages-3.4.1-11.el7.noarch

// Remove the bundled OpenJDK packages

rpm -e --nodeps java-1.8.0-openjdk-1.8.0.131-11.b12.el7.x86_64

rpm -e --nodeps java-1.7.0-openjdk-headless-1.7.0.141-2.6.10.5.el7.x86_64

rpm -e --nodeps java-1.7.0-openjdk-1.7.0.141-2.6.10.5.el7.x86_64

rpm -e --nodeps java-1.8.0-openjdk-headless-1.8.0.131-11.b12.el7.x86_64
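Typing each package name by hand is error-prone. As a minimal sketch (the function name `select_openjdk` is hypothetical, not part of any tool), the OpenJDK entries can be filtered out of the `rpm -qa` listing so that helper packages such as tzdata-java and javapackages-tools are left alone. It only prints the removal commands, so they can be reviewed first:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a package listing on stdin and print only
# the OpenJDK packages (names of the form java-<version>-openjdk...).
select_openjdk() {
    grep -E '^java-[0-9.]+-openjdk' || true
}

# Sample listing taken from the rpm output above.
# On the real machine the input would come from: rpm -qa
sample='java-1.8.0-openjdk-1.8.0.131-11.b12.el7.x86_64
javapackages-tools-3.4.1-11.el7.noarch
tzdata-java-2017b-1.el7.noarch
java-1.7.0-openjdk-headless-1.7.0.141-2.6.10.5.el7.x86_64'

# Dry run: print the removal commands instead of executing them.
printf '%s\n' "$sample" | select_openjdk | while read -r pkg; do
    echo "rpm -e --nodeps $pkg"
done
```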

Install the JDK

Upload the JDK archive to the /usr/local directory

tar -zxvf jdk-7u80-linux-x64.tar.gz

vim /etc/profile

export JAVA_HOME=/usr/local/jdk1.7.0_80

export PATH=$PATH:$JAVA_HOME/bin

source /etc/profile // reload the profile so the environment variables take effect

// Verify that the Java environment is installed

[root@Master local]# java -version

java version "1.7.0_80"

Java(TM) SE Runtime Environment (build 1.7.0_80-b15)

Java HotSpot(TM) 64-Bit Server VM (build 24.80-b11, mixed mode)

////////////////////////////////////////////////////////////////

mkdir /usr/local/hadoop

Upload hadoop-2.6.4.tar.gz to /usr/local/hadoop

tar -zxvf hadoop-2.6.4.tar.gz

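// Set up passwordless SSH login

// Generate a key pair with an empty passphrase

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa // note the uppercase -P; this one cost me a lot of time

// Append the public key to authorized_keys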

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
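Key-based login can still fail when the permissions are too open: with sshd's default StrictModes setting, keys may be rejected if ~/.ssh or authorized_keys is writable by other users. A minimal sketch of tightening them, demonstrated on a scratch directory rather than the real home directory (the `fix_ssh_perms` name is made up for illustration):

```shell
#!/usr/bin/env bash
# Hypothetical helper: tighten permissions on an .ssh directory.
# $1 is the home directory that contains .ssh
fix_ssh_perms() {
    chmod 700 "$1/.ssh"
    chmod 600 "$1/.ssh/authorized_keys"
}

# Demonstrate on a scratch directory instead of the real $HOME.
demo_home=$(mktemp -d)
mkdir -p "$demo_home/.ssh"
touch "$demo_home/.ssh/authorized_keys"
fix_ssh_perms "$demo_home"
ls -ld "$demo_home/.ssh" "$demo_home/.ssh/authorized_keys"
```

On the real machine the call would be `fix_ssh_perms ~`.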

vim /etc/hostname

Change the contents to Master

vim /etc/hosts

#127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

#::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.21 Master

mkdir /usr/local/hadoop/tmp

mkdir -p /usr/local/hadoop/hdfs/name

mkdir /usr/local/hadoop/hdfs/data

vim ~/.bash_profile

Append at the end of the file:

HADOOP_HOME=/usr/local/hadoop/hadoop-2.6.4

PATH=$PATH:$HADOOP_HOME/bin

export HADOOP_HOME PATH

source ~/.bash_profile // reload the profile so the changes take effect

Change directory:

cd /usr/local/hadoop/hadoop-2.6.4

vim etc/hadoop/hadoop-env.sh

# The java implementation to use.

#export JAVA_HOME=${JAVA_HOME} // find this line and comment it out

export JAVA_HOME=/usr/local/jdk1.7.0_80 // add this line

vim etc/hadoop/yarn-env.sh

# export JAVA_HOME=/home/y/libexec/jdk1.6.0/ // find this line and make sure it is commented out

export JAVA_HOME=/usr/local/jdk1.7.0_80 // add this line

vim etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://Master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop/tmp</value>

</property>

</configuration>

vim etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

Change directory: cd /usr/local/hadoop/hadoop-2.6.4/etc/hadoop

cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

vim yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

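// Format the NameNode (run from the bin directory)

cd /usr/local/hadoop/hadoop-2.6.4/bin

./hdfs namenode -format

Change directory: cd ../sbin/

Start Hadoop

./start-all.sh

// Check that the daemons are running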

[root@Master sbin]# jps

3479 NameNode

4269 Jps

3968 NodeManager

3879 ResourceManager

3566 DataNode

3738 SecondaryNameNode

[root@Master sbin]#

// Started successfully
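The jps listing can also be checked mechanically instead of by eye. A minimal sketch, assuming the five daemons in the listing above are the ones expected on a single-node setup (the function name `missing_daemons` is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: read jps output on stdin and print every expected
# Hadoop daemon that does not appear in it.
missing_daemons() {
    listing=$(cat)
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -w matches whole words, so "NameNode" does not match "SecondaryNameNode"
        printf '%s\n' "$listing" | grep -qw "$daemon" || echo "$daemon"
    done
}

# Sample input with the DataNode line left out on purpose.
# On the real machine: jps | missing_daemons
printf '%s\n' '3479 NameNode' '4269 Jps' '3968 NodeManager' \
    '3879 ResourceManager' '3738 SecondaryNameNode' | missing_daemons
```

An empty result means every expected daemon is present; a missing name points at the log file to read under the Hadoop logs directory.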

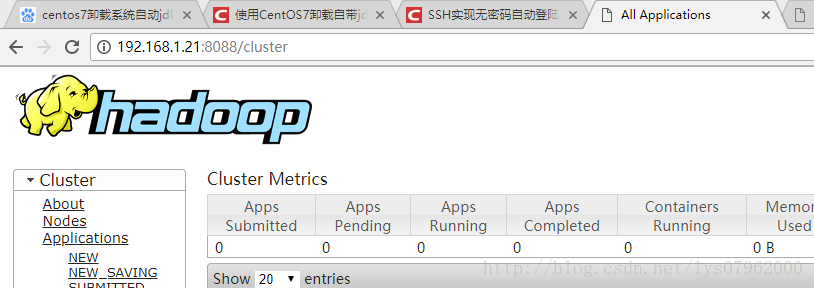

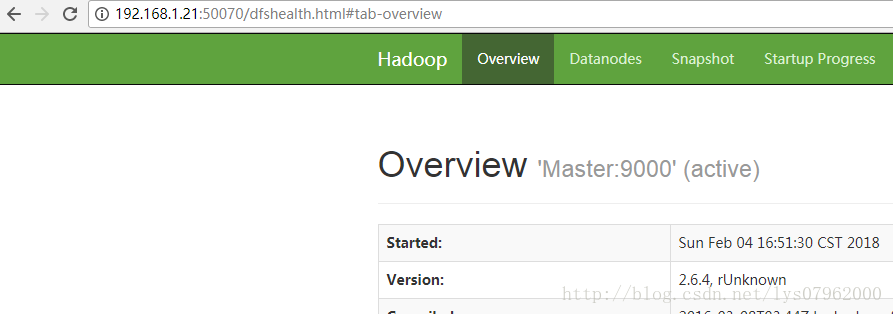

Open in a browser (IE): http://192.168.1.21:8088/

http://192.168.1.21:50070

The pages do not load, so check the firewall

[root@Master sbin]# firewall-cmd --state

running

// Stop the firewall

systemctl stop firewalld.service
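Stopping firewalld leaves the host without a firewall, and the service starts again on the next boot unless it is also disabled. An alternative is to open only the two web UI ports. The sketch below only prints the commands (a dry run) so they can be reviewed before being run as root; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print firewall-cmd invocations that open the given TCP ports.
open_port_cmds() {
    for port in "$@"; do
        echo "firewall-cmd --permanent --add-port=${port}/tcp"
    done
    # --permanent rules only take effect after a reload
    echo "firewall-cmd --reload"
}

# 8088 is the YARN ResourceManager UI, 50070 the NameNode UI in Hadoop 2.x
open_port_cmds 8088 50070
```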

Try again in the browser: http://192.168.1.21:8088/, http://192.168.1.21:50070

Setting up a distributed Hadoop cluster

[root@localhost ~]# mkdir apps

[root@localhost ~]# rz // upload hadoop-2.6.4.tar.gz; if the rz command is not found, install lrzsz first

[root@localhost ~]# yum install lrzsz

// Extract into the apps directory

[root@localhost ~]# tar -zxvf hadoop-2.6.4.tar.gz -C apps/

[root@localhost ~]# cd apps/

[root@localhost apps]# cd hadoop-2.6.4/etc/hadoop/

// Check the JDK path

[root@localhost hadoop]# echo $JAVA_HOME

/usr/local/jdk1.7.0_80

[root@localhost hadoop]# vim hadoop-env.sh

#export JAVA_HOME=${JAVA_HOME} // find this line and comment it out

export JAVA_HOME=/usr/local/jdk1.7.0_80 // add this line

[root@master sbin]# vim /etc/hosts // add the following three lines

192.168.1.20 master

192.168.1.22 slave1

192.168.1.23 slave2

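// Set up passwordless SSH login

// Generate a key pair with an empty passphrase

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa // note the uppercase -P; this one cost me a lot of time

// Append the public key to authorized_keys, then copy the file to slave1 and slave2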

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

scp ~/.ssh/authorized_keys root@slave1:~/.ssh/

scp ~/.ssh/authorized_keys root@slave2:~/.ssh/

Make sure that ssh slave1 and ssh slave2 can log in without a password

[root@master sbin]# ssh slave1

Last login: Wed Feb 7 14:16:37 2018 from 192.168.1.20

[root@slave1 ~]# exit

logout

Connection to slave1 closed.

[root@master sbin]# ssh slave2

Last login: Wed Feb 7 14:18:36 2018 from 192.168.1.20

[root@slave2 ~]# exit

logout

Connection to slave2 closed.

[root@master sbin]# cd /home/hadoop/apps/hadoop-2.6.4/etc/hadoop

[root@master hadoop]# vim slaves

#localhost // comment this out and add the following two lines

slave1

slave2

[root@localhost hadoop]# vim core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.1.20:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/tmp</value>

</property>

</configuration>

[root@localhost hadoop]# vim hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

[root@localhost hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@localhost hadoop]# vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

[root@localhost hadoop]# vim yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>192.168.1.20</value> <!-- hostname or IP address -->

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

// Copy apps to the other machines. Check that the apps path is the same on every machine. This also sends all of the configuration files along.

[root@localhost ~]# scp -r apps 192.168.1.22:/home/hadoop

[root@localhost ~]# scp -r apps 192.168.1.23:/home/hadoop

[root@localhost ~]# scp -r apps 192.168.1.24:/home/hadoop
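The three scp commands differ only in the target address, so they can be generated by a loop. A minimal dry-run sketch that prints the commands rather than running them (the function name and its argument order are illustrative, not from the original notes):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one scp command per target host.
# $1 = source directory, $2 = destination directory, remaining args = hosts
distribute_cmds() {
    src=$1
    dest=$2
    shift 2
    for host in "$@"; do
        echo "scp -r $src $host:$dest"
    done
}

distribute_cmds apps /home/hadoop 192.168.1.22 192.168.1.23 192.168.1.24
```

Once the printed commands look right, piping the output to `sh` would execute them.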

// Change directory:

cd /root/apps/hadoop-2.6.4/bin

[root@localhost bin]# hadoop namenode -format

-bash: hadoop: command not found

[root@localhost bin]# vim /etc/profile

// Add:

export HADOOP_HOME=/root/apps/hadoop-2.6.4 // Hadoop install path

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

// Copy the profile to the other machines; check that the paths are the same on each machine

[root@localhost bin]# scp /etc/profile 192.168.1.22:/etc

[root@localhost bin]# scp /etc/profile 192.168.1.23:/etc

[root@localhost bin]# scp /etc/profile 192.168.1.24:/etc

[root@localhost bin]# source /etc/profile // run this on every machine

// Run the format again

[root@localhost bin]# hadoop namenode -format

// The "successfully formatted." message below means the format succeeded

18/02/05 12:32:12 INFO common.Storage: Storage directory /home/hadoop/tmp/dfs/name has been successfully formatted.

18/02/05 12:32:12 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/02/05 12:32:12 INFO util.ExitUtil: Exiting with status 0

18/02/05 12:32:12 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost.localdomain/127.0.0.1

************************************************************/

[root@localhost ~]# cd /root/apps/hadoop-2.6.4/sbin

// Start the NameNode

[root@localhost sbin]# hadoop-daemon.sh start namenode

starting namenode, logging to /root/apps/hadoop-2.6.4/logs/hadoop-root-namenode-localhost.localdomain.out

// Check the running processes

[root@localhost sbin]# jps

1535 NameNode

1569 Jps

// Stop the NameNode

[root@localhost sbin]# hadoop-daemon.sh stop namenode

stopping namenode

[root@localhost sbin]#

// Start all services

[root@localhost sbin]# ./start-all.sh

[root@master hadoop]# jps

6951 Jps

5305 NameNode

5633 ResourceManager

5488 SecondaryNameNode

[root@master hadoop]#

[root@slave1 ~]# jps

1684 NodeManager

1595 DataNode

1781 Jps

Open in a browser (IE): http://192.168.1.20:8088/, http://192.168.1.20:50070